XPUB2 Research Board / Martin Foucaut


=<p style="font-family:helvetica">Links</p>=

*[[Martin (XPUB)-project proposal]]

*[[Martin (XPUB)-thesis outline]]

*[[Martin (XPUB)-thesis]]

=<p style="font-family:helvetica">Draft Thesis</p>=

===What do you want to make?===

My project is a data collection installation that monitors people's behavior in public physical spaces while explicitly encouraging them to help the algorithm collect more information. An overview of how it works is presented here; it will be developed further through practice.

<br><br>

The device is not designed to offer any benefit to the subject; it only makes visible the benefits the machine gains from collecting their data. Yet the way the device presents this collected data, visually or verbally, is gratifying, which might be stimulating for the subject. In that sense, the subject, despite knowing that their actions serve only to satisfy the device, could become intrigued, involved, or even addicted to a mechanism that deliberately uses them as a commodity. In this way, I intend to trigger conflicting feelings in the visitor’s mind, caught between awareness of the ongoing monetization of their physical behavior and the engagement, entertainment or stimulation driven by the interactive value of the installation.

<br><br>

My first aim is to make the mechanisms by which data collection is carried out, marketized and legitimized both understandable and accessible. The array of sensors, the Arduinos and the screen are the main technological components of this installation. Rather than relying on an existing, complex tracking algorithm, the program is built from scratch, kept open source and limited to converting a restricted range of physical actions into interactions. These include the detection of movements, positions, time spent standing still or moving, and entry into or exit from a specific detection area. Optionally, they may also include detecting the subject’s smartphone or the subject logging on to a local Wi-Fi hotspot.

<br><br>

In terms of mechanics, the algorithm creates feedback loops made of: <br>

_the subject’s behavior being converted into information; <br>

_the translation of this information into written/visual feedback; <br>

_and the effect of this feedback on the subject’s behavior; and so on. <br>

By doing so, it tries to shape visitors into free data providers within their own physical environment, and to stimulate their engagement by converting each piece of collected information into points/money, feeding a user score within a global ranking.

<br><br>

On the screen, displayed events can be:

_ “subject [] currently located at [ ]” <br>

[x points earned/given]<br>

_ “subject [] entered the space” <br>

[x points earned/given]<br>

_ “subject [] left the space”<br>

[x points earned/given]<br>

_ “subject [] moving/not moving”<br>

[x points earned/given]<br>

_ “subject [] distance to screen: [ ] cm” <br>

[x points earned/given]<br>

_ “subject [] stayed at [ ] for [ ] seconds” <br>

[x points earned/given]<br>

_ “subject [] device detected” <br>

[x points earned/given] (optional)<br>

_ “subject [] logged onto local Wi-Fi”<br>

[x points earned/given] (optional)<br>

<br>

Added to these are the instructions and comments from the device in reaction to the subject’s behavior:<br>

<br>

_ “Congratulations, you have now given the monitor 25 % of all possible data to collect!” <br>

[when 25-50-75-90-95-96-97-98-99% of the total array of events has been detected at least once]<br>

_ “Are you sure you don’t want to move to the left? The monitor has only collected data from 3 visitors so far in this spot!”<br>

[if the subject stands still in a specific location]<br>

_ “Congratulations, the monitor has reached 1000 pieces of information from you!”<br>

[unlocked at x points earned/given]<br>

_ “If you stay there for two more minutes, there is a 99% chance you will be in the top 100 of ALL TIME data-givers!”<br>

[if the subject stands still in a specific location]<br>

_ “Leaving already? The monitor has yet to collect 304759 crucial pieces of information from you!”<br>

[if the subject is at the edge of the detection range]<br>

_ “You are only 93860 pieces of information away from being the top one data-giver!”<br>

[unlocked at x points earned/given]<br>

_ “Statistics show that people staying for more than 5 minutes benefit me 10 times more on average!”<br>

[randomly appears]<br>

_ “The longer you stay on this spot, the more chance you have to win a “Lazy data-giver” badge”<br>

[if the subject stands still for a long time in any location]<br>

<br>
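The milestone rule above (a message once 25, 50, 75, 90 ... 99% of the event types have been detected at least once) could be tracked roughly as follows. This is a minimal sketch, not the installation's code: the class and function names and the event-type strings are hypothetical, and when a single event jumps past several thresholds it reports only the highest one.

```typescript
// Hypothetical sketch: remember which event types have been detected at least
// once and report when a coverage milestone from the text is crossed.

const MILESTONES: number[] = [25, 50, 75, 90, 95, 96, 97, 98, 99];

class CoverageTracker {
  private readonly allEvents: string[];
  private readonly seen = new Set<string>();
  private readonly reached = new Set<number>();

  constructor(allEvents: string[]) {
    this.allEvents = allEvents;
  }

  // Records one detected event. Returns the highest milestone newly crossed
  // by this event, or null if no new milestone was reached.
  record(event: string): number | null {
    if (this.allEvents.indexOf(event) === -1) return null; // unknown event type
    this.seen.add(event);
    const percent = (100 * this.seen.size) / this.allEvents.length;
    let newest: number | null = null;
    for (const m of MILESTONES) {
      if (percent >= m && !this.reached.has(m)) {
        this.reached.add(m); // milestones skipped in one jump are marked as done too
        newest = m;
      }
    }
    return newest;
  }
}

function milestoneMessage(percent: number): string {
  return `Congratulations, you have now given the monitor ${percent} % of all possible data to collect!`;
}
```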

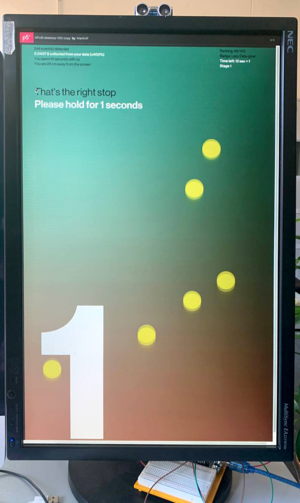

Responding positively to the monitor’s instructions unlocks special achievements and extra points:<br>

<br>

—Accidental data-giver badge <br>

[unlocked if the subject has passed by the installation without deliberately wishing to interact with it] + [x points earned/given]<br>

—Lazy data-giver badge <br>

[unlocked if the subject has been standing still for at least one minute] + [x points earned/given]<br>

—Novice data-giver badge <br>

[unlocked if the subject has successfully completed 5 missions from the monitor] + [x points earned/given]<br>

—Hyperactive data-giver badge <br>

[unlocked if the subject has never stood still for 10 seconds within a 2-minute period] + [x points earned/given]<br>

—Expert data-giver badge <br>

[unlocked if the subject has successfully completed 10 missions from the monitor within 10 minutes] + [x points earned/given]<br>

—Master data-giver badge <br>

[unlocked if the subject has successfully logged on to the local Wi-Fi hotspot] + [x points earned/given] (optional)<br>

<br>
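The badge conditions can be read as simple checks over a session log. The sketch below is a hypothetical reading of them, assuming one sensor sample per second with a moving/still flag and mission completions logged as timestamps. It interprets "Hyperactive" as at least two minutes tracked without ever standing still for 10 seconds, and leaves out the Accidental badge, which depends on intent that the sensors cannot measure. All names are illustrative.

```typescript
// Hypothetical sketch of the badge rules (assumed data format, see lead-in).

interface Sample {
  t: number; // seconds since the subject entered the detection range
  moving: boolean;
}

interface Session {
  samples: Sample[]; // ordered by t
  missionTimes: number[]; // mission completion times in seconds, ascending
  loggedOnWifi: boolean;
}

// Longest uninterrupted stretch, in seconds, during which the subject stood still.
function longestStill(samples: Sample[]): number {
  let longest = 0;
  let start: number | null = null;
  for (const s of samples) {
    if (s.moving) {
      start = null;
    } else {
      if (start === null) start = s.t;
      longest = Math.max(longest, s.t - start);
    }
  }
  return longest;
}

// True if `count` missions were completed within some window of `windowSec` seconds.
function missionsWithin(times: number[], count: number, windowSec: number): boolean {
  for (let i = 0; i + count - 1 < times.length; i++) {
    if (times[i + count - 1] - times[i] <= windowSec) return true;
  }
  return false;
}

function badges(session: Session): string[] {
  const earned: string[] = [];
  const { samples, missionTimes } = session;
  const still = longestStill(samples);
  const tracked = samples.length > 1 ? samples[samples.length - 1].t - samples[0].t : 0;
  if (still >= 60) earned.push("Lazy data-giver");
  if (tracked >= 120 && still < 10) earned.push("Hyperactive data-giver");
  if (missionTimes.length >= 5) earned.push("Novice data-giver");
  if (missionsWithin(missionTimes, 10, 600)) earned.push("Expert data-giver");
  if (session.loggedOnWifi) earned.push("Master data-giver");
  return earned;
}
```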

On the top left of the screen, a user score displays the number of points generated by the collected pieces of information and by the special achievements unlocked through the monitor’s instructions.<br>

<br>

—Given information: 298 pieces <br>

[displays the number of collected events]<br>

—Points: 312000 <br>

[conversion of collected events and achievements into points]<br>

<br>

On the top right of the screen, the user is ranked among x previous visitors, and the most prestigious badge earned is displayed below:<br>

<br>

—subject global ranking: 3/42 <br>

[compares the subject’s score to all final scores from previous subjects]<br>

—subject status: expert data-giver<br>

[displays the most valuable reward unlocked by the subject]<br>

<br>
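The score and ranking panels can be sketched as two small functions. This is a hypothetical illustration: POINTS_PER_EVENT and BADGE_BONUS are placeholders, since the text only says "x points", and the "rank/total" format assumes the total includes the current subject. The rank counts how many previous final scores are strictly higher, so ties share the better rank.

```typescript
// Hypothetical scoring and ranking sketch; all point values are placeholders.

const POINTS_PER_EVENT = 1000;
const BADGE_BONUS: { [badge: string]: number } = {
  "Lazy data-giver": 2000,
  "Novice data-giver": 5000,
  "Hyperactive data-giver": 5000,
  "Expert data-giver": 14000,
  "Master data-giver": 20000,
};

// Points = collected events converted at a fixed rate, plus a bonus per badge.
function score(eventCount: number, earnedBadges: string[]): number {
  let total = eventCount * POINTS_PER_EVENT;
  for (const b of earnedBadges) total += BADGE_BONUS[b] || 0; // unknown badges add nothing
  return total;
}

// Returns "rank/total", where total includes the current subject.
function ranking(current: number, previousScores: number[]): string {
  const higher = previousScores.filter((s) => s > current).length;
  return `${higher + 1}/${previousScores.length + 1}`;
}
```

With these placeholder values, 298 events plus an Expert badge give 298 × 1000 + 14000 = 312000 points, the figure used in the example above; the real weights are still to be defined.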

When leaving the detection range, the subject gets a warning message and a countdown starts, encouraging them to decide quickly to come back:<br>

<br>

—“Are you sure you want to leave? You have 5-4-3-2-1-0 seconds to come back within the detection range”<br>

[displayed as long as the subject remains completely undetected]<br>

<br>

If the subject stays out of the detection range for more than 5 seconds, the monitor displays a thank-you message, and a thermal printer prints a receipt showing the amount of money gathered, the achievements, the ranking, the complete list of collected information and a QR code. The QR code links to my thesis.<br>

<br>

—“Thank you for helping today, don’t forget to take your receipt in order to collect and review your achievements”<br>

[displayed after 5 seconds of being undetected]<br>

<br>
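The leave/return behavior is essentially a small state machine. The sketch below assumes the sensors report a detected/undetected flag with a timestamp in seconds; `onEnd` is a hypothetical stand-in for the thermal-printer call, which is not specified here. Detection resets the countdown, the session ends (and the receipt fires once) after more than 5 seconds, and a later detection restarts tracking, which matches the text's point that collecting the receipt reactivates the game.

```typescript
// Hypothetical sketch of the 5-second exit countdown.

type Phase = "tracking" | "countdown" | "ended";

const THANKS =
  "Thank you for helping today, don’t forget to take your receipt in order to collect and review your achievements";

class ExitMonitor {
  phase: Phase = "tracking";
  private lostAt = 0;
  private readonly onEnd: () => void;
  private readonly graceSec: number;

  constructor(onEnd: () => void, graceSec = 5) {
    this.onEnd = onEnd;
    this.graceSec = graceSec;
  }

  // Called on every sensor update; returns the text to show ("" = no warning).
  update(detected: boolean, nowSec: number): string {
    if (detected) {
      this.phase = "tracking"; // coming back, even after the end, restarts tracking
      return "";
    }
    if (this.phase === "ended") return THANKS;
    if (this.phase === "tracking") {
      this.phase = "countdown";
      this.lostAt = nowSec;
    }
    const elapsed = nowSec - this.lostAt;
    if (elapsed > this.graceSec) {
      this.phase = "ended";
      this.onEnd(); // e.g. print the receipt
      return THANKS;
    }
    const left = this.graceSec - Math.floor(elapsed); // 5, 4, 3, 2, 1, 0
    return `Are you sure you want to leave? You have ${left} seconds to come back within the detection range`;
  }
}
```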

In order to collect, read and/or use that piece of information, the visitor will inevitably have to come back within the detection range and, intentionally or not, reactivate the data-tracking game. It is therefore impossible to leave the detection area without leaving at least one piece of your own information printed in the space. As a result, the physical space should gradually be invaded by tickets scattered on the floor. As in archaeology, these tickets give future subjects a precise trace of the behavior and actions of previous ones. <br>

===Why do you want to make it?===

When browsing online and/or using connected devices in the physical world, even the most innocent action or piece of information can be invisibly recorded, valued and translated into informational units, subsequently generating profit for monopolistic companies. While social platforms, brands, public institutions and governments explicitly promote monitoring practices in order to better serve or protect us, we could also consider these techniques implicitly political, playing on dynamics of visibility and invisibility in order to assert new forms of power over targeted audiences.

<br><br>

In the last decade, a strong mistrust of new technologies has formed in public opinion, fueled by events such as Edward Snowden’s revelations, the Cambridge Analytica scandal or the proliferation of fake news on social networks. We have also seen many artists take up the subject, sometimes with activist purposes. But even if a small number of citizens have begun to consider the social and political issues related to mass surveillance, and some individuals/groups/governments/associations have taken legal action, surveillance capitalism remains generally accepted, often because it is ignored and/or misunderstood.

<br><br>

Thanks to the data we freely provide every day, big tech companies have been earning billions of dollars from the sale of our personal information. With that money, they have also been able to further develop deep learning programs and powerful recommendation systems, and to broadly expand their range of services in order to track us in all circumstances and secure their monopolistic status. Even if we might consider this realm specific to the online world, we have seen the same companies gradually extend their monitoring to the physical world and to our human existence in a wide array of contexts: for example, with satellite and street photography (Google Earth, Street View), geolocation systems, simulated three-dimensional environments (augmented reality, virtual reality or the metaverse) or extensions of our brains and bodies (voice assistants and wearable devices). Ultimately, this has led to the emergence not only of a culture of surveillance but also of self-surveillance, as evidenced by the popularity of self-tracking and data-sharing apps, which legitimize and encourage the datafication of the body for capitalist purposes.

<br><br>

Over the last 15 years, self-tracking tools have made their way to consumers. I believe this trend shows how ambiguous our relationship with tools that allow such practices can be. Through my work, I do not wish to position myself as a whistleblower, a teacher or an activist. Indeed, adopting such positions would be hypocritical, given my daily use of tools and platforms that rely on mass surveillance. Instead, I wish to propose an experience that highlights the contradictions in which you and I, as internet users and human beings, can find ourselves. This contradiction is a paradox between our concern about the intrusive surveillance practices of the Web giants (and their effects on societies and humans) and our entertainment, or even active engagement, with the tools/platforms through which this surveillance is operated/allowed. By doing so, I want to ask how these companies still manage to obtain our consent and which human biases they exploit to do so. This is why my graduation work and my thesis will investigate the effect of gamification, gambling and reward systems, as well as the aestheticization of data/self-data, as means to hook our attention, generate ever more interactions and steer our behavior.

===How do you plan to make it and on what timetable?===

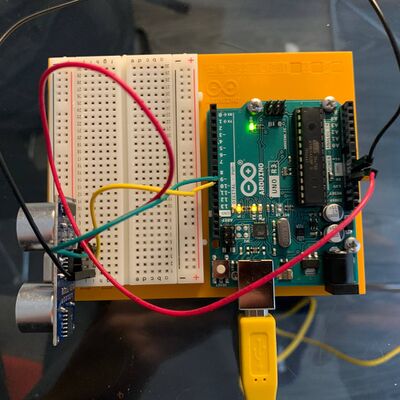

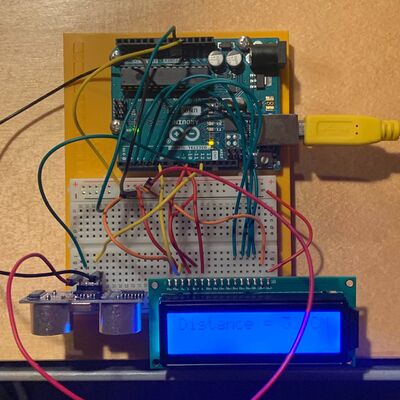

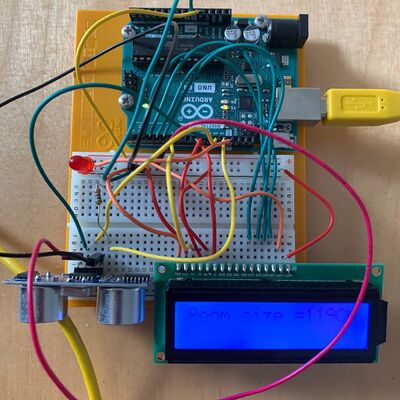

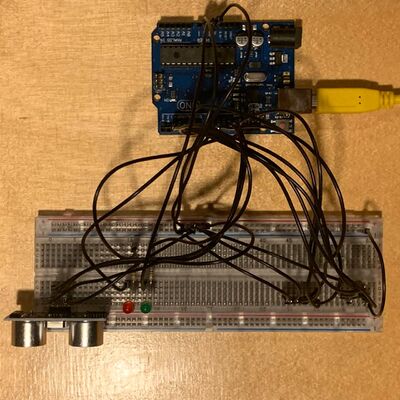

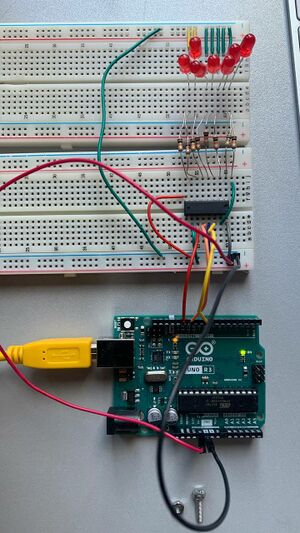

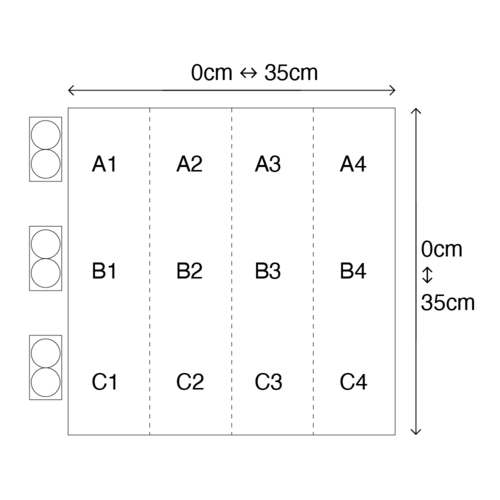

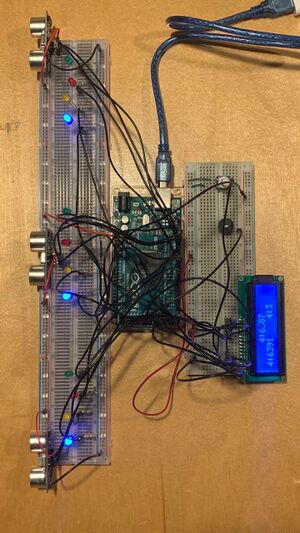

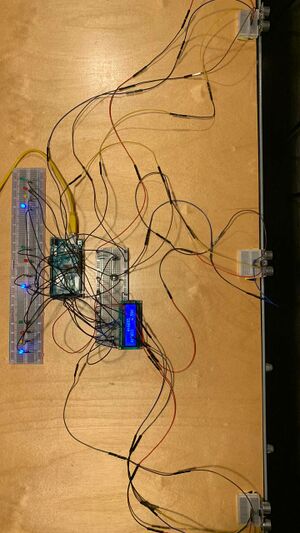

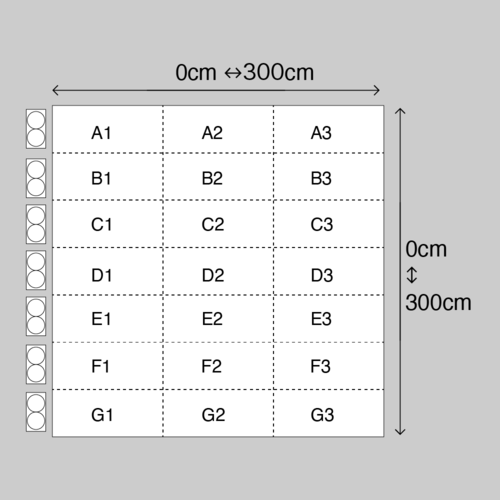

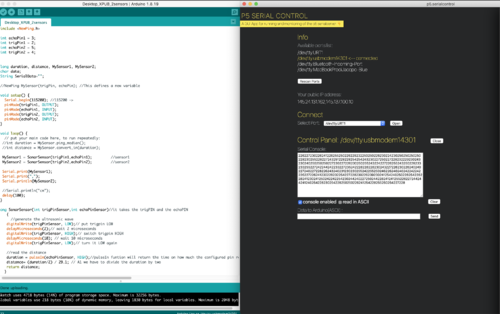

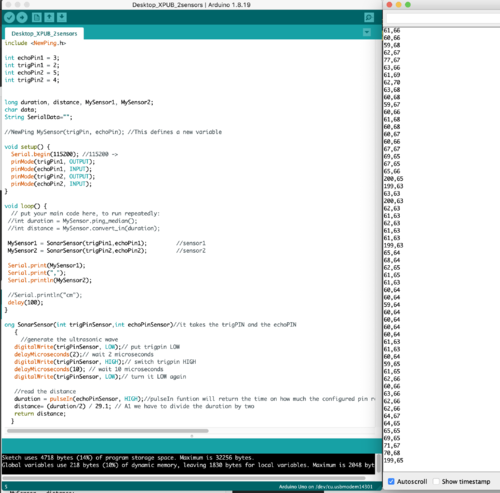

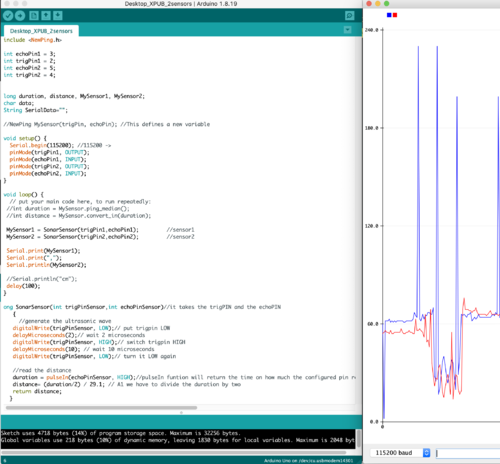

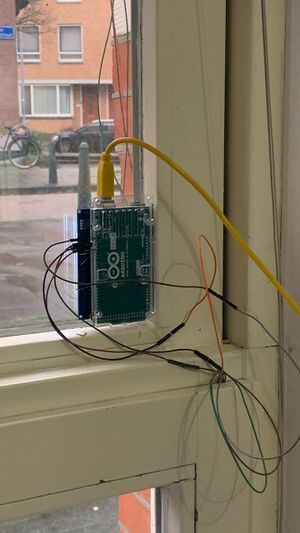

I am developing this project with Arduino Uno/Mega boards, an array of ultrasonic sensors, P5.js and screens.<br><br>

<b>How does it work?</b>

<br><br>

The ultrasonic sensors detect obstacles in a physical space and measure the distance between the sensor and the obstacle(s) by emitting an ultrasonic pulse and timing how long its echo takes to come back. The Arduino Uno/Mega boards are microcontrollers that receive this information and run it through a program that converts these values into mm/cm/m and also maps the space into an invisible grid. Finally, the values that the Arduino writes to its serial port can be sent to P5.js through P5.serialcontrol. P5.js then offers greater freedom in the way the information is displayed on the screens.
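The distance conversion and the "invisible grid" can be sketched with a few lines of arithmetic. This is a minimal sketch, assuming HC-SR04-style sensors whose echo pulse width is measured in microseconds (as Arduino's `pulseIn()` does, returning 0 on timeout) and a grid in which each sensor watches one column while the measured distance selects the row. MAX_CM, CELL_CM and the function names are placeholders, not values from the prototypes.

```typescript
// Minimal sketch: echo time -> centimetres -> occupied grid cells.

const SPEED_OF_SOUND_CM_PER_US = 0.0343; // about 343 m/s in air at 20 °C
const MAX_CM = 200; // placeholder detection range
const CELL_CM = 50; // placeholder depth of one grid row

// The pulse travels to the obstacle and back, so the distance is half the path.
function echoToCm(echoUs: number): number {
  return (echoUs * SPEED_OF_SOUND_CM_PER_US) / 2;
}

// Row index for a distance, or -1 when nothing is within range (including no echo).
function distanceToRow(cm: number): number {
  if (cm <= 0 || cm >= MAX_CM) return -1;
  return Math.floor(cm / CELL_CM);
}

// One echo reading per sensor (array index = column) -> occupied [column, row] cells.
function occupiedCells(echoesUs: number[]): Array<[number, number]> {
  const cells: Array<[number, number]> = [];
  echoesUs.forEach((us, column) => {
    const row = distanceToRow(echoToCm(us));
    if (row >= 0) cells.push([column, row]);
  });
  return cells;
}
```

The same arithmetic could also run on the Arduino itself, so that only grid cells travel over the serial port; where the conversion happens is a design choice.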

<br><br>

Process:

<br><br>

<b>1st semester: Building a monitoring device, converting human actions into events, and events into visual feedback</b>

<br><br>

During the first semester, I am focusing on monitoring tools that can be used in the physical world, with particular attention to ultrasonic sensors. Being new to Arduino programming, I start from the smallest and simplest prototype and gradually increase its scale/technicality until reaching a human/architectural scale. Prototypes are tested, documented and commented on, which helps define which direction to follow. The first semester is also an opportunity to experiment with different kinds of screens (LCD screens, touch screens, computer screens, TV screens) until finding the most suitable screen(s) for the final installation. Before building the installation, the project goes through several sketches and 3D animated simulations exploring different scenarios and narratives. By the end of the semester, the goal is to convert a specific range of human actions into events and visual feedback, creating a feedback loop: human behavior is converted into information; this information is translated into written/visual feedback; this feedback affects human behavior; and so on.

<br><br>

<b>2nd semester: Implementing gamification with the help of collaborative filtering, a point system and a ranking</b>

<br><br>

The second semester is about building and implementing a narrative based on game mechanics that encourage humans to feed the data-gathering device themselves. An overview of how it works is presented here; it will be developed further through practice.

<br><br>

To summarize the storyline: the subject, once positioned in the detection zone, unwillingly becomes the main actor of a data collection game. Her/his mere presence generates points/dollars displayed on a screen, growing as she/he stays within the area. The goal is simple: to get a higher score/rank and unlock achievements by acting as recommended by a data collector. This relies on setting clear goals/rewards for the subject, comparing her/his performance with that of all previous visitors, giving unexpected messages/rewards, and giving an aesthetic value to the displayed information.

<br><br>

The mechanism is based on a sample of physical events already explored during the first semester of prototyping (detection of movements, positions, time spent standing still or moving, and entry into or exit from a specific detection area). Every detected event is stored in a data bank which, with the help of collaborative filtering, allows custom recommendations to be displayed, such as:

<b>

<br><br>

_ “Congratulations, you have now given the monitor 12 % of all possible data to collect”<br>

_ “Are you sure you don’t want to move to the left? The monitor has only collected data from 3 visitors so far in this spot”<br>

_ “Congratulations, the monitor has reached 1000 pieces of information from you!”<br>

_ “If you stay there for two more minutes, there is a 99% chance you will be in the top 100 of ALL TIME data-givers”<br>

_ “Leaving already? The monitor has yet to collect 304759 crucial pieces of information from you”<br>

_ “You are only 93860 actions away from being the top one data-giver”<br>

_ “Statistics show that people staying for more than 5 minutes benefit me 10 times more on average”<br>

_ “The longer you stay on this spot, the more chance you have to win a “Lazy data-giver” badge”<br>

</b>

<br><br>
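One way the data bank could drive a recommendation such as "move to the left" is user-based collaborative filtering. The sketch below is an assumption-laden illustration of that technique, not the installation's method: each visitor is stored as a vector of seconds spent in each zone of the grid, similarity between visitors is cosine similarity, and the predicted dwell time for an unvisited zone is the similarity-weighted average of previous visitors' times there.

```typescript
// Minimal user-based collaborative filtering sketch (cosine similarity).

type Profile = number[]; // seconds spent per zone; every profile has the same length

function cosine(a: Profile, b: Profile): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Returns the unvisited zone with the highest predicted dwell time, or -1 when no
// similar previous visitor spent time anywhere new.
function recommendZone(current: Profile, previous: Profile[]): number {
  const sims = previous.map((p) => cosine(current, p));
  let bestZone = -1;
  let bestPrediction = 0;
  for (let zone = 0; zone < current.length; zone++) {
    if (current[zone] > 0) continue; // already visited
    let weighted = 0;
    let totalSim = 0;
    previous.forEach((p, i) => {
      if (sims[i] > 0) {
        weighted += sims[i] * p[zone];
        totalSim += sims[i];
      }
    });
    const prediction = totalSim === 0 ? 0 : weighted / totalSim;
    if (prediction > bestPrediction) {
      bestPrediction = prediction;
      bestZone = zone;
    }
  }
  return bestZone;
}
```

The returned zone index could then be turned into a directional message on screen; how zones map to "left" or "right" depends on the sensor layout.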

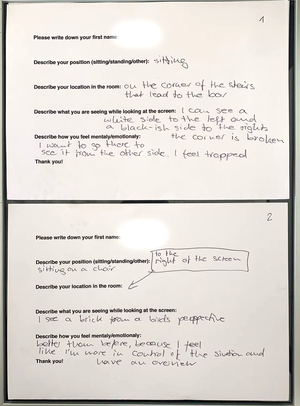

The guideline is set out here but will be constantly updated based on experiments and on the results observed during the various moments of interaction between students and the algorithm. For this purpose, the installation under construction will be left active and autonomous where it is being built (the studio), so that anyone who deliberately wishes to interact with it can do so. Beyond voluntary interactions, I am also interested in what can be extracted from people simply passing in front of the installation. In addition, some of the installation’s mechanics will be explored further by collaborating with other students and setting up more ephemeral, organized experiences with participants (e.g. 15 February 2022 with Louisa).

<br><br>

This semester will also include the creation of a definitive set of illustrations intended to engage the installation’s participants on a more emotional level. The illustrations will be made by an illustrator/designer with whom I usually collaborate.

<br><br>

<b>3rd semester: Building the final installation for the final assessment and graduation show. Test runs, debugging and final touches.</b>

<br><br>

During the third semester, the installation should be set up in the school, in the alumni area next to the XPUB studio, for the final assessment, and ultimately set up again at WORM for the graduation show. I am interested in placing this installation in busy spaces of human circulation (such as hallways), which make it easier to detect people and highlight the intrusive aspect of such technologies. The narrative, mechanics, illustrations and graphic design should be finalized at the beginning of the 3rd semester and then go through intensive test runs throughout that period until the deadline.

===Relation to larger context===

As GAFAM companies face more and more legal challenges and are held accountable for a growing number of social and political issues around the world, the pandemic has greatly contributed to making all of us more dependent than ever on the online services these companies provide, and has in some ways forced our consent. While two decades of counter-terrorism measures legitimized domestic and public surveillance techniques such as online and video monitoring, the current public health crisis has made new technologies even more necessary for regulating access to public spaces and services, but also for socializing, working together, accessing culture, etc. In many countries, overnight and for an undetermined period, it has become necessary to carry a smartphone (or a printed QR code) in order to access transport, entertainment, cultural and catering services, and even to do simple things such as looking at the menu in a bar/restaurant or placing an order. This project therefore takes place in a context where techno-surveillance has taken a decisive place in the way we access spaces and services in the physical world. <br><br>

Data Marketisation / Self Data: Quantified Self / Attention Economy / Public Health Surveillance / Cybernetics

===Relation to previous practice?===

During my previous studies in graphic design, I started engaging with new media by making a small online reissue of Raymond Queneau’s book Exercices de Style. In this reissue, called Incidences Médiatiques (2017), the user/reader was encouraged to explore, in a less linear way, the 99 different versions of the same story written by the author. The idea was to consider each user’s graphic user interface as a unique reading context: it determined which story could be read depending on the reader’s device, and the user could navigate through the stories by resizing the Web window, changing browser or switching to a different device.

<br><br>

For my graduation project Media Spaces (2019), I wanted to reflect on the status of networked writing and reading by programming my thesis as a Web-to-Print website. This website was then translated into physical space as a printed book and a series of installations, displayed in an exhibition space that followed the online structure of my thesis (home page, index, parts 1-2-3-4). In that way, I was interested in inviting visitors to physically experience some aspects of the Web.

<br><br>

As a first-year student of Experimental Publishing, I continued in that direction by creating a meta-website called Tense (2020), which displays the invisible HTML <meta> tags of an essay in order to affect our interpretation of the text. In 2021, I worked on a geocaching pinball game highlighting invisible Web events, and on a Web oscillator whose amplitude and frequency range were directly related to the user’s cursor position and browser window size.

<br><br>

While it has always been clear to me that these works were motivated by the desire to define media as context, subject and/or content, the projects presented here often used surveillance tools to detect user information and translate it into feedback, contributing to the construction of an individualized narrative and/or a unique viewing/listening context (interaction, screen size, browser, mouse position). The current work aims to take a critical look at the effect of these practices in the context of techno-surveillance.

<br><br>

Similarly, projects such as Media Spaces sought to explore the growing confusion between human and Web user, physical and virtual space, or online and offline space. This project aims to show that this growing confusion may eventually lead to our being tracked in all circumstances, even in our most innocuous daily activities.

===Selected References===

<b>Works:</b>

* M. DARKE, fairlyintelligent.tech (2021) https://fairlyintelligent.tech/

« invites us to take on the role of an auditor, tasked with addressing the biases in a speculative AI » Alternatives to techno-surveillance

<br>

* MANUEL BELTRAN, Data Production Labor (2018) https://v2.nl/archive/works/data-production-labour/

Exposes humans as producers of useful intellectual labor that benefits the tech giants, and the uses that can be made of that labor.

<br>

* TEGA BRAIN and SURYA MATTU, Unfit-bits (2016) http://tegabrain.com/Unfit-Bits

Claims that technological devices can easily be manipulated and are therefore fallible and subjective. The artists do this by simply placing a self-tracker (connected bracelet) in another context, such as on other objects, in order to confuse these devices.

<br>

* TREVOR PAGLEN and JACOB APPELBAUM, Autonomy Cube (2014), https://www.e-flux.com/announcements/2916/trevor-paglen-and-jacob-appelbaumautonomy-cube/

Allows galleries to offer encrypted internet access and communications through the Tor network.

<br>

* STUDIO MONIKER, Clickclickclick.click (2016) https://clickclickclick.click/

You are rewarded for exploring all the interactive possibilities of your mouse, revealing how our online behaviors can be monitored and interpreted by machines.

<br>

* RAFAEL LOZANO-HEMMER, Third Person (2006) https://www.lozano-hemmer.com/third_person.php

A portrait of the viewer is drawn in real time by active words, which appear automatically to fill his or her silhouette.

<br>

* JONAS LUND, What you see is what you get (2012) http://whatyouseeiswhatyouget.net/

«Every visitor to the website’s browser size, collected, and played back sequentially, ending with your own.»

<br>

* USMAN HAQUE, Mischievous Museum (1997) https://haque.co.uk/work/mischievous-museum/

Readings of the building and its contents are therefore always unique -- no two visitors share the same experience.

<br><br>

<b>Books & Articles:</b>

* SHOSHANA ZUBOFF, The Age of Surveillance Capitalism (2020)

Warns against the shift towards «surveillance capitalism». Her thesis argues that, by appropriating our personal data, the digital giants manipulate us and modify our behavior, attacking our free will and threatening our freedoms and personal sovereignty.

<br>

* EVGENY MOROZOV, Capitalism’s New Clothes (2019)

Extensive analysis and critique of Shoshana Zuboff’s research and publications.

<br>

* BYRON REEVES and CLIFFORD NASS, The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places (1996)

A pioneering study of the relationship between humans and machines, and of how humans relate to them.

<br>

* OMAR KHOLEIF, Goodbye, World! — Looking at Art in the digital Age (2018)

In one part of the book, the author shares their own data as a journal; in another, the author questions how the Internet has changed the way we perceive, relate to and interact with images.

<br>

* KATRIN FRITSCH, Towards an emancipatory understanding of widespread datafication (2018)

Suggests that, in response to our surveillance society, artists can propose activist responses that don’t necessarily require technological literacy but can instead promote strong counter-metaphors and/or counter-uses of these intrusive technologies.

=<p style="font-family:helvetica">Prototyping</p>=

[[File:Rail.jpg|200px|thumb|center|Rail]]

<br><br><br><br><br><br><br>

===<p style="font-family:helvetica"> About the ultrasonic Sensor (HC-SR04)</p> ===

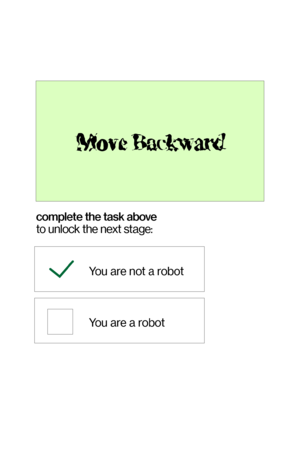

===<p style="font-family:helvetica">Sketch 10: Arduino Mega + 7 Sensors + LCD + 3 buzzers + P5.js </p> === | ===<p style="font-family:helvetica">Sketch 10: Arduino Mega + 7 Sensors + LCD + 3 buzzers + P5.js </p> === | ||

[[File:P5.js sensor.gif|300px|thumb|left|P5.js and ultrasonic sensor]] | |||

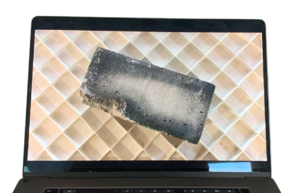

The goal here was to create a first communication between the physical setup and a P5.js web page | The goal here was to create a first communication between the physical setup and a P5.js web page | ||

<br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br> | |||

===<p style="font-family:helvetica">Sketch 11: Arduino Mega + UltraSonicSensor + LCD TouchScreen </p> === | ===<p style="font-family:helvetica">Sketch 11: Arduino Mega + UltraSonicSensor + LCD TouchScreen </p> === | ||

| Line 1,499: | Line 567: | ||

<br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br> | <br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br> | ||

==<p style="font-family:helvetica">Semester 2</p> == | |||

[[File:Simu part 02.gif|left|1000px]] | |||

<br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br> | |||

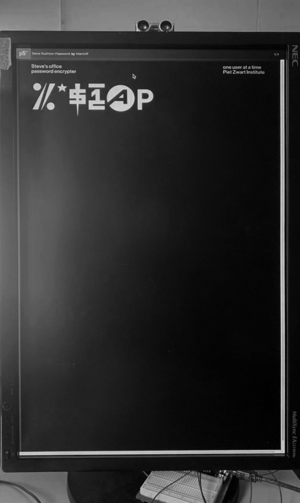

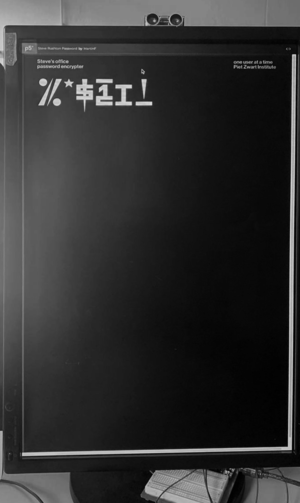

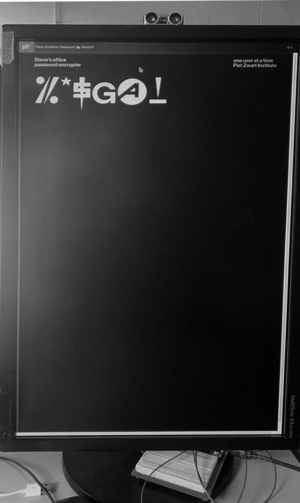

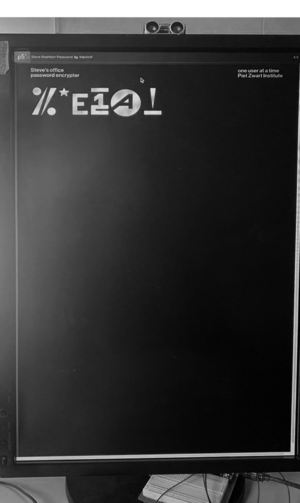

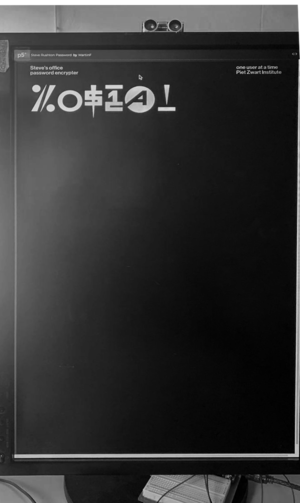

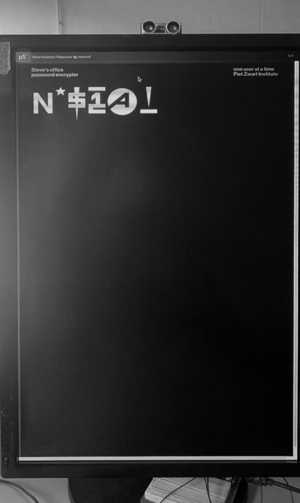

===<p style="font-family:helvetica">Sketch 12: Arduino Uno + P5serialcontrol + P5.js web editor = Code decrypter </p> ===

[[File:Codeword 01.png|300px|thumb|left|P]]

[[File:Codeword 02.png|300px|thumb|center|I]]

[[File:Codeword 03.png|300px|thumb|left|G]]

[[File:Codeword 04.png|300px|thumb|center|E]]

[[File:Codeword 05.png|300px|thumb|left|O]]

[[File:Codeword 06.png|300px|thumb|center|N]]

<br><br>

<br><br>

===<p style="font-family:helvetica">Sketch 13: Arduino Uno + P5serialcontrol + P5.js web editor = Game </p> === | |||

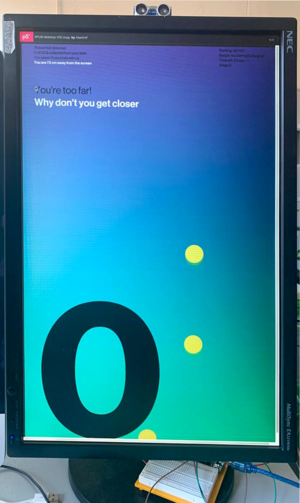

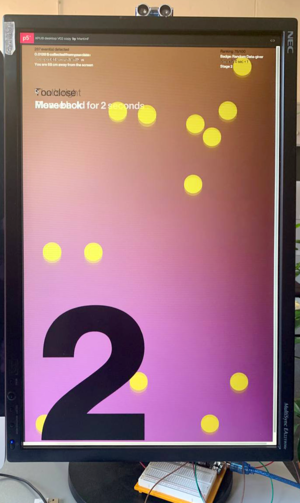

[[File:01 Screen .png|300px|thumb|left|Stage 0<br>The subject is located too far away]] | |||

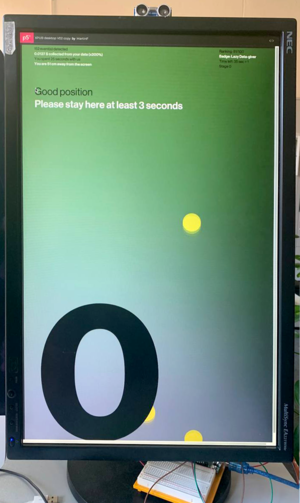

[[File:02 Screen.png|300px|thumb|center|Stage 0<br>The subject is well located and will hold position to reach next stage]] | |||

[[File:03 Screen.png|300px|thumb|left|Stage 1<br>The subject unlocked Stage 1 and will hold position to reach next stage ]] | |||

[[File:04 Screen.png|300px|thumb|center|Stage 2<br>The subject unlocked Stage 2 and is located too close]] | |||

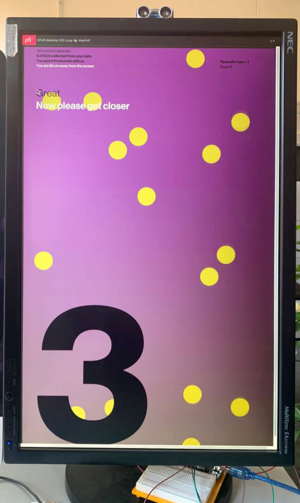

[[File:06 Screen.png|300px|thumb|left|Stage 3<br>The subject unlocked Stage 3 and need to get closer]] | |||

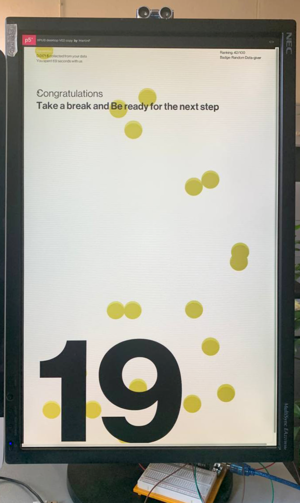

[[File:07 Screen.png|300px|thumb|center|Transition Stage<br>The subject unlocked all stage and needs to wait the countdown for following steps]] | |||

<br><br> | |||
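The stage screens above suggest a simple rule: each stage has a target distance band, and holding a position inside it for a few seconds unlocks the next stage. The sketch below is a hypothetical reconstruction of that rule from the captions only; the bands, HOLD_SEC and the message strings are placeholders, not the values used in the prototype.

```typescript
// Hypothetical sketch of the distance-band stage logic suggested by the captions.

interface Band {
  minCm: number;
  maxCm: number;
}

const BANDS: Band[] = [
  { minCm: 80, maxCm: 120 },
  { minCm: 120, maxCm: 160 },
  { minCm: 40, maxCm: 80 },
  { minCm: 20, maxCm: 40 },
];
const HOLD_SEC = 3;
const DONE = "All stages unlocked: wait for the countdown";

class StageGame {
  stage = 0;
  private heldSince: number | null = null;

  // Feed one distance reading (cm) with the current time (s); returns the instruction.
  update(cm: number, nowSec: number): string {
    if (this.stage >= BANDS.length) return DONE;
    const band = BANDS[this.stage];
    if (cm > band.maxCm || cm < band.minCm) {
      this.heldSince = null; // leaving the band restarts the hold timer
      return cm > band.maxCm ? "Too far: get closer" : "Too close: step back";
    }
    if (this.heldSince === null) this.heldSince = nowSec;
    if (nowSec - this.heldSince < HOLD_SEC) return "Well located: hold position";
    this.stage++;
    this.heldSince = null;
    return this.stage >= BANDS.length ? DONE : `Stage ${this.stage} unlocked`;
  }
}
```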

===<p style="font-family:helvetica">Sketch 14: Arduino Uno + P5serialcontrol + P5.js web editor = Simplified interface</p> ===

[[File:Data Collector Sample 01.gif|400px|thumb|left]]

<br><br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br><br>

<br><br>

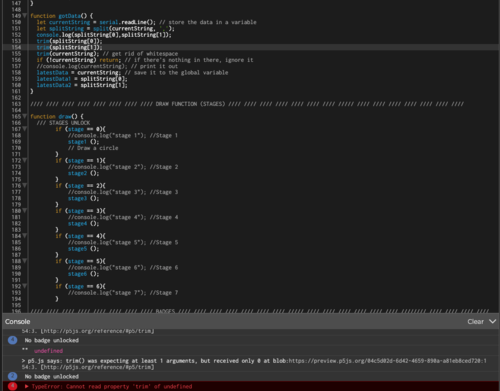

====<p style="font-family:helvetica">How to split serial data values with more than one sensor</p>====

* Use the split() function: https://p5js.org/reference/#/p5/split

* Pad example: https://hub.xpub.nl/soupboat/pad/p/Martin
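The splitting step can be sketched as a small parser. This assumes the Arduino prints one line per reading cycle with the values separated by commas, for example `Serial.print(d1); Serial.print(","); Serial.print(d2); Serial.print(","); Serial.println(d3);`. In a p5.js sketch, p5's `split(line, ",")` plays the role of `String.split` here; SENSOR_COUNT and the function name are placeholders.

```typescript
// Minimal sketch: turn one serial line like "112,87,240" into numbers.

const SENSOR_COUNT = 3; // placeholder: the number of sensors actually wired

// Returns the values in sensor order, or null for an empty, partial or garbled line
// (partial lines are common right after the serial port is opened).
function parseSensorLine(line: string): number[] | null {
  const parts = line.trim().split(",");
  if (parts.length !== SENSOR_COUNT) return null;
  const values: number[] = [];
  for (const part of parts) {
    const text = part.trim();
    const value = Number(text);
    if (text === "" || !isFinite(value)) return null; // reject empty or non-numeric fields
    values.push(value);
  }
  return values;
}
```

Dropping malformed lines instead of guessing keeps one sensor's value from being shifted into another sensor's slot.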

[[File:Debug Martin 01.png|500px|thumb|left]] | |||

[[File:Debug Martin 05.png|500px|thumb|center]] | |||

[[File:Debug Martin 02.png|500px|thumb|left]] | |||

[[File:Debug Martin 03.png|500px|thumb|center]] | |||

[[File:Debug Martin 04.png|500px|thumb|left]] | |||

[[File:Debug Martin 06.png|500px|thumb|center]] | |||

<br><br><br><br><br><br><br><br><br><br><br><br><br><br> | |||

<br><br><br><br><br><br><br><br><br><br><br><br><br><br> | |||
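This is a minimal sketch of the splitting step. It assumes the Arduino sends one line per reading, with the sensor values separated by commas: the first value, a comma, then the second value printed with `Serial.println`. The sketch uses plain `String.prototype.split` so that it also runs outside the browser. Inside a p5.js sketch, p5's `split()` can be used the same way. The function name `parseSensorLine` and the variables `serial`, `sensor1` and `sensor2` are hypothetical.

```javascript
// Parse one line of serial data such as "120,87" into an array of numbers.
// Assumption: the Arduino prints all sensor values on one line, separated by commas.
function parseSensorLine(line, expectedCount) {
  const parts = line.trim().split(","); // trim() drops the trailing "\r\n"
  if (parts.length !== expectedCount) {
    return null; // incomplete line (e.g. read mid-transmission): skip it
  }
  // An empty field becomes NaN instead of Number("") === 0
  const values = parts.map((p) => (p.trim() === "" ? NaN : Number(p)));
  if (values.some((v) => Number.isNaN(v))) {
    return null; // corrupted line: skip it
  }
  return values;
}

// Hypothetical wiring in a p5.js sketch using the p5.serialport library:
//   function serialEvent() {
//     const line = serial.readLine();
//     if (!line) return;
//     const values = parseSensorLine(line, 2);
//     if (values) { sensor1 = values[0]; sensor2 = values[1]; }
//   }

console.log(parseSensorLine("120,87\r\n", 2)); // [ 120, 87 ]
console.log(parseSensorLine("120,\r\n", 2)); // null
```

Rejecting a whole line, rather than keeping the values that did parse, avoids assigning a reading to the wrong sensor when a line arrives only partially.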

===<p style="font-family:helvetica">Installation update</p>===

[[File:Installation Update 01.jpg|300px|thumb|left]]

[[File:Installation Update 02.jpg|300px|thumb|center]]

<br><br><br><br><br><br><br><br>

===<p style="font-family:helvetica">To do</p> ===

* Store the data with the Web Storage API

** https://www.w3schools.com/JS/js_api_web_storage.asp

* Import live data, or copy data, from

** https://www.worldometers.info/

* Import live data from the stock market

** https://money.cnn.com/data/hotstocks/index.html

** https://www.tradingview.com/chart/?symbol=NASDAQ%3ALIVE

** https://www.google.com/finance/portfolio/972cea17-388c-4846-95da-4da948830b03

* Make a bar graph

** https://openprocessing.org/sketch/1152792
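For the first item of the to-do list, here is a minimal sketch of how final visitor scores could persist between sessions with the Web Storage API. It assumes the scores are kept as one JSON array under a single key. The key `homoDataScores` and the helper names are hypothetical. In the browser, `window.localStorage` would be passed as `storage`. The small in-memory stand-in only exists so that the sketch also runs outside the browser.

```javascript
const SCORES_KEY = "homoDataScores"; // hypothetical key name

// Read all previously saved final scores (an empty list on first run).
function loadScores(storage) {
  const raw = storage.getItem(SCORES_KEY);
  if (raw === null) return [];
  try {
    const parsed = JSON.parse(raw);
    return Array.isArray(parsed) ? parsed : [];
  } catch (e) {
    return []; // corrupted entry: start again from an empty list
  }
}

// Append one visitor's final score. Web Storage only stores strings,
// hence the JSON round trip.
function saveScore(storage, score) {
  const scores = loadScores(storage);
  scores.push(score);
  storage.setItem(SCORES_KEY, JSON.stringify(scores));
  return scores;
}

// Minimal in-memory stand-in for localStorage, for testing outside the browser.
function createMemoryStorage() {
  const data = new Map();
  return {
    getItem: (k) => (data.has(k) ? data.get(k) : null),
    setItem: (k, v) => { data.set(k, String(v)); },
  };
}

// In the browser: saveScore(window.localStorage, 312000);
const storage = createMemoryStorage();
saveScore(storage, 312000);
saveScore(storage, 1500);
console.log(loadScores(storage)); // [ 312000, 1500 ]
```

Keeping every final score, rather than only the best one, would also allow the global ranking ("3/42") to be computed from the same stored list.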

===<p style="font-family:helvetica">Stages Design</p> === | |||

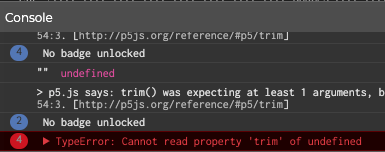

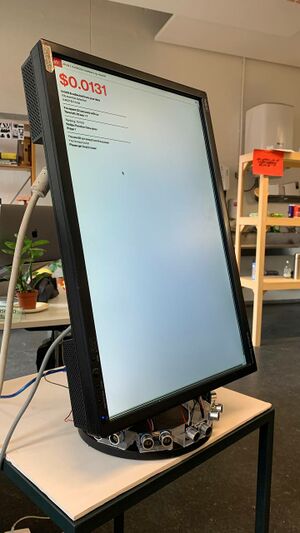

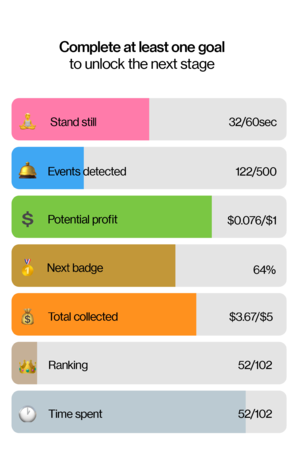

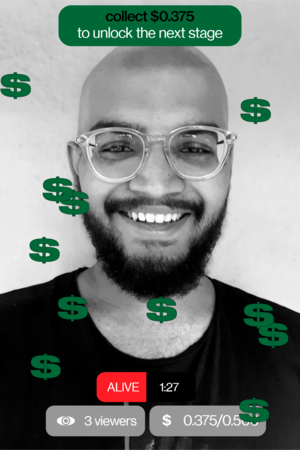

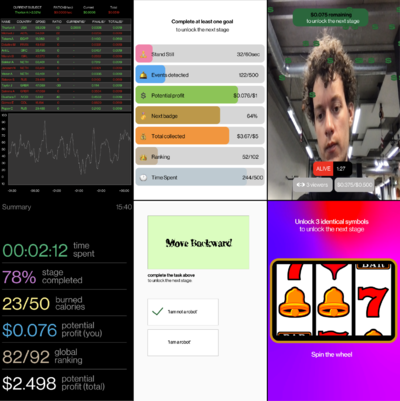

Many stages (mini-levels) are being designed. They are all intended to evoke the different contexts and pretexts for which we perform daily micro-tasks to collect data. | |||

<br> | |||

The visitor can unlock the next step by successfully completing one or more tasks in a row. After a while, even if the action is not successful, a new step will appear with a different interface and instructions. | |||

The list below details the different stages being produced, they will follow each others randomly during the session: | |||

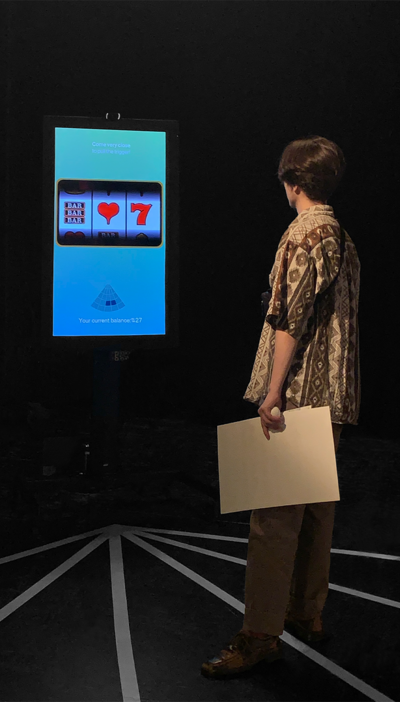

* | ** Money/Slot Machine | ||

* | ** Well-Being | ||

* | ** Healthcare | ||

* | ** Yoga | ||

* | ** Self-Management | ||

* | ** Stock Market Exchange | ||

* | ** Military interface | ||

The visuals bellow illustrate their design. | |||

[[File:Captcha 01.png|thumb|left|Captcha:<br>one action needed:

moving forward, backward or standing still;

the next stage unlocks after the action is done

and a short animation]]

[[File:Self Track 01.png|thumb|center|Self Tracking:<br>no interaction needed;

the visitor must stand still until

one of the goals is completed]]

[[File:Self Track 02.png|thumb|left|Self Tracking:<br>no interaction needed;

the visitor must stand still until

one of the goals is completed]]

[[File:Slot Machine 01.png|thumb|center|Slot Machine:<br>no interaction needed;

transition between 2 stages;

randomly determines the next stage;

displayed when nobody is detected]]

[[File:Social Live 01.png|thumb|left|Social Live:<br>no interaction needed;

the visitor must stand still until

the money goal is completed]]

[[File:Stock Ticket 01.png|thumb|center|Stock Ticket:<br>no interaction needed;

displayed when nobody is detected]]

<br><br><br><br><br><br><br>
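The stage rules described above can be sketched as a small state update. A stage changes after a number of successful tasks in a row, or after a timeout even without success, and the next stage is picked at random. The stage names come from the list above. The default of one required success, the 30-second timeout and the function names are assumptions, not the project's actual values.

```javascript
// Stage names from the list above; the timing values below are assumptions.
const STAGES = [
  "Money/Slot Machine", "Well-Being", "Healthcare", "Yoga",
  "Self-Management", "Stock Market Exchange", "Military interface",
];

// Pick a random stage different from the current one.
// `random` defaults to Math.random and can be replaced for testing.
function pickNextStage(current, random = Math.random) {
  const others = STAGES.filter((s) => s !== current);
  return others[Math.floor(random() * others.length)];
}

// Called once per frame. `taskResult` is "success", "fail" or null (no outcome yet).
// The stage changes after `requiredSuccesses` successes in a row, or after
// `timeoutMs` even if the tasks were not completed.
function updateStage(state, nowMs, taskResult, options = {}, random = Math.random) {
  const { requiredSuccesses = 1, timeoutMs = 30000 } = options;
  let streak = state.streak;
  if (taskResult === "success") streak += 1;
  else if (taskResult === "fail") streak = 0; // a failure breaks the streak
  const timedOut = nowMs - state.startedAt >= timeoutMs;
  if (streak >= requiredSuccesses || timedOut) {
    return { stage: pickNextStage(state.stage, random), startedAt: nowMs, streak: 0 };
  }
  return { stage: state.stage, startedAt: state.startedAt, streak };
}

// Example: a "Yoga" stage that needs two successful tasks in a row.
let state = { stage: "Yoga", startedAt: 0, streak: 0 };
state = updateStage(state, 1000, "success", { requiredSuccesses: 2 });
console.log(state.stage, state.streak); // Yoga 1
state = updateStage(state, 31000, null, { requiredSuccesses: 2 });
console.log(state.stage !== "Yoga"); // true: the timeout forced a new stage
```

Passing `random` as a parameter keeps the random order testable. In a p5.js `draw()` loop, `updateStage` would simply be called with `millis()` as the current time.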

===<p style="font-family:helvetica"> | ===<p style="font-family:helvetica">Stages Design with P5.js</p> === | ||

[[File:AllStages HomoData.png|400px|thumb|left]] | |||

[[File:Homo Data 02.gif|400px|thumb|left|6 levels in a row then randomnized, more to come]] | |||

[[File:Consolelog 01.gif|400px|thumb|center]] | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

<br><br> | |||

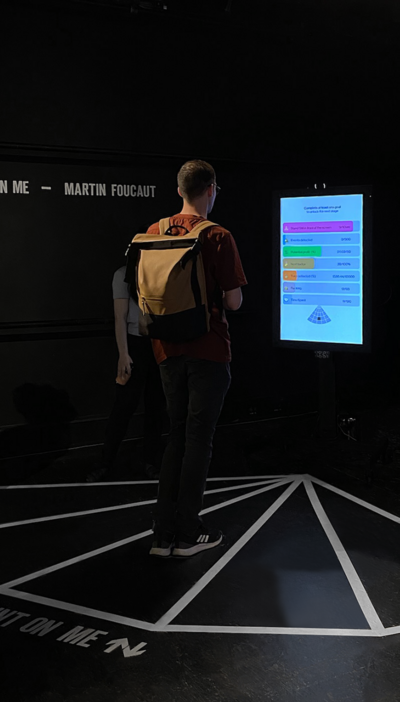

===<p style="font-family:helvetica">Grad Show: Worm</p> ===

[[File:CountonMephoto 01 RD.png|left|thumb|702x702px|Count on Me - Worm - 01]]

[[File:CountonMephoto 03 RD.png|thumb|702x702px|Count on Me - Worm - 02]]

[[File:CountonMephoto 04 RD.png|left|thumb|702x702px|Count on Me - Worm - 03]]

[[File:CountonMephoto 05 RD.png|thumb|702x702px|Count on Me - Worm - 04]]

[[File:CountonMephoto 06 RD.png|left|thumb|702x702px|Count on Me - Worm - 05]]

[[File:CountonMephoto 07 RD.png|thumb|702x702px|Count on Me - Worm - 06]]

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

<br><br><br><br><br><br><br><br>

==<p style="font-family:helvetica"> Prototyping Ressources</p> == | ==<p style="font-family:helvetica"> Prototyping Ressources</p> == | ||

| Line 1,813: | Line 804: | ||

*https://forensic-architecture.org/ | *https://forensic-architecture.org/ | ||

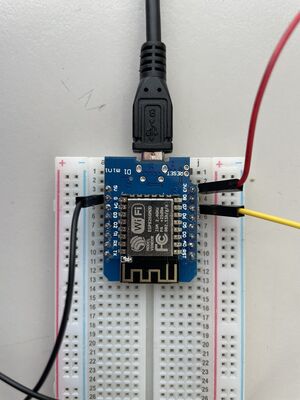

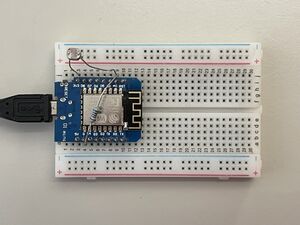

====<p style="font-family:helvetica"> About the ESP8266 module</p> ====

The ESP8266 is a microcontroller IC with built-in Wi-Fi. It would allow us to connect the Arduino to the internet, so that the values read by the sensors can be received directly on a self-hosted web page. From this same web page, it would also be possible to control LEDs, motors, LCD screens, etc.

====<p style="font-family:helvetica"> Resources about ESP8266 module</p> ====

Kindly forwarded by Louisa:<br>

* https://www.youtube.com/watch?v=6hpIjx8d15s

* https://randomnerdtutorials.com/getting-started-with-esp8266-wifi-transceiver-review/

* https://www.youtube.com/watch?v=dWM4p_KaTHY

* https://randomnerdtutorials.com/esp8266-web-server/

* https://electronoobs.com/eng_arduino_tut101.php

* http://surveillancearcade.000webhostapp.com/index.php (interface)

====<p style="font-family:helvetica">Which ESP8266 to buy</p> ====

* https://makeradvisor.com/tools/esp8266-esp-12e-nodemcu-wi-fi-development-board/

* https://randomnerdtutorials.com/getting-started-with-esp8266-wifi-transceiver-review/

* https://www.amazon.nl/-/en/dp/B06Y1ZPNMS/ref=sr_1_5?crid=3U8B6L2J834X0&dchild=1&keywords=SP8266%2BNodeMCU%2BCP2102%2BESP&qid=1635089256&refresh=1&sprefix=sp8266%2Bnodemcu%2Bcp2102%2Besp%2Caps%2C115&sr=8-5&th=1

==<p style="font-family:helvetica">Installation</p>==

===<p style="font-family:helvetica">Resources</p> ===

=<p style="font-family:helvetica">Venues</p> =

==<p style="font-family:helvetica">Venue 1: Aquarium </p>==

<br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br><br>


==<p style="font-family:helvetica">Venue 2: Aquarium 2.0 </p>==

===<p style="font-family:helvetica">Description</p>===

<br>

Date: 29th Nov – 4th Dec 2021 <br>

Time: 15h – 18h <br>

29th Nov – 4th Dec 2021 (all day)<br>

Location: De Buitenboel, Rosier Faassenstraat 22, 3025 GN Rotterdam, NL<br>

<br><br>

AQUARIUM 2.0 <br>

<br>

An ongoing window exhibition with Clara Gradel, Floor van Meeuwen, Martin Foucaut, Camilo Garcia, Federico Poni, Nami Kim, Euna Lee, Kendal Beynon, Jacopo Lega and Louisa Teichmann<br>

<br>

Tap upon the glass and peer into the research projects we are currently working on.

From Monday 29th of November until Saturday 4th of December we put ourselves on display in the window of De Buitenboel as an entry point into our think tank. Navigating between a range of technologies, such as wireless radio waves, virtual realities, sensors, ecological and diffractive forms of publishing, web design frameworks, language games, and an ultra-territorial residency; we invite you to gaze inside the tank and float with us. Welcome back to the ecosystem of living thoughts.<br>

==<p style="font-family:helvetica">Aquarium LCD Portal (29 Nov – 4th Dec)</p>==

This interactive micro-installation, composed of an LCD screen and sensor(s), invites users/visitors to change the color of the screen and the displayed messages by moving closer to or further away from the window.

[https://www.pzwart.nl/blog/2021/11/30/aquarium-2-0/ Link]

[[File:ScreenPortalFront.jpg|300px|thumb|left|ScreenPortalFront]]

[[File:ScreenPortalback.jpg|300px|thumb|right|ScreenPortalback]]

[[File:LCDScreenTest.gif|600px|thumb|center|LCDScreenTest]]

<br><br><br><br><br><br><br><br><br><br><br><br><br>
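This is a minimal sketch of the distance-to-color logic behind such a portal. It assumes one distance reading in cm per frame. The 20 to 200 cm range, the two colors and the three messages are hypothetical placeholders. In p5.js, the built-in `map()` and `lerpColor()` functions could replace the hand-written interpolation.

```javascript
// Hypothetical detection range of the sensor behind the window, in cm.
const NEAR_CM = 20;
const FAR_CM = 200;

// Linear interpolation between two RGB colors, with t clamped to [0, 1].
function lerpRgb(a, b, t) {
  const k = Math.min(1, Math.max(0, t));
  return a.map((v, i) => Math.round(v + (b[i] - v) * k));
}

// Map one distance reading to a screen color and a message.
function portalState(distanceCm) {
  const t = (distanceCm - NEAR_CM) / (FAR_CM - NEAR_CM);
  const color = lerpRgb([255, 0, 0], [0, 0, 255], t); // red when close, blue when far
  let message;
  if (distanceCm < NEAR_CM) message = "Too close!";
  else if (distanceCm <= FAR_CM) message = "Come closer...";
  else message = "Is anybody there?";
  return { color, message };
}

// In a p5.js draw() loop this could be used as:
//   const { color, message } = portalState(distance);
//   background(color[0], color[1], color[2]);
//   text(message, 20, 40);
console.log(portalState(110)); // color [128, 0, 128] with "Come closer..."
```

Clamping `t` keeps the color stable when a passer-by is outside the range, while the message still distinguishes "too close" from "nobody there".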

=<p style="font-family:helvetica">Readings (new)(english)(with notes in english) </p>=

* Library vs Exhibition Space = Use vs Display

* Book-theme exhibitions

==<p style="font-family:helvetica">About User vs Visitor, or user in exhibition space</p>==

[[Designing the user experience in exhibition spaces - Elisa Rubegni, Caporali Maurizio, Antonio Rizzo, Erik Grönvall]]

* What are the GUI intentions

* What is the WIMP interaction model

* What are the post-WIMP models

* About Memex

==<p style="font-family:helvetica">About User Interface</p>==

Grenoble, 21 December 2001.

→ 9. [[Graspable interfaces (Fitzmaurice et al., 1995)]] [https://www.dgp.toronto.edu/~gf/papers/PhD%20-%20Graspable%20UIs/Thesis.gf.html link]

==<p style="font-family:helvetica">About User Condition</p>==

→ http://all-html.net/?<br>

<div style='

width: 75%;

font-size:16px;

background-color: white;

color:black;

float: left;

border:1px black;

font-family: helvetica;

'>

<div style='

width: 75%;

font-size:16px;

background-color: white;

color:black;

float: left;

border:1px black;

font-family: helvetica;

'>

Latest revision as of 20:53, 26 February 2024

Links

Draft Thesis

What do you want to make?

My project is a data collection installation that monitors people's behaviors in public physical spaces while explicitly encouraging them to help the algorithm collect more information. An overview of how it works is presented here in the project proposal and will be subject to further developments in the practice.

The device is not designed to offer any benefit to the subject; it only makes visible the benefits that the machine gets from collecting their data. Yet the device presents this collected data, visually or verbally, in a grateful tone, which might be stimulating for the subject. In that sense, the subject, despite knowing that their actions serve only to satisfy the device, could become intrigued, involved, or even addicted to a mechanism that deliberately uses them as a commodity. In that way, I intend to trigger conflicting feelings in the visitor’s mind, situated between a state of awareness of the ongoing monetization of their physical behaviors and a state of engagement/entertainment/stimulation regarding the interactive value of the installation.

My first aim is to make the mechanisms by which data collection is carried out, marketized and legitimized both understandable and accessible. The array of sensors, the Arduinos and the screen are the main technological components of this installation. Rather than using an existing, complex tracking algorithm, the program is built from scratch, kept open source, and limited to converting a restricted range of physical actions into interactions. These include the detection of movements, positions, time spent standing still or moving, and entry into or exit from a specific detection area. Optionally, they may also include the detection of the subject’s smartphone or the subject’s login to a local Wi-Fi hotspot.

In terms of mechanics, the algorithm creates feedback loops built from:

_the subject’s behaviors being converted into information;

_the translation of this information into written/visual feedback;

_and the effects of this feedback on the subject’s behavior; and so on.

By doing so, it tries to turn visitors into free data providers within their own physical environment, and to stimulate their engagement by converting each piece of collected information into points/money that feed a user score within a global ranking.

On the screen, displayed events can be:

_ “subject [] currently located at [ ]”

[x points earned/given]

_ “subject [] entered the space”

[x points earned/given]

_ “subject [] left the space”

[x points earned/given]

_ “subject [] moving/not moving”

[x points earned/given]

_ “subject [] distance to screen: [ ] cm”

[x points earned/given]

_ “subject [] stayed at [ ] for [ ] seconds”

[x points earned/given]

_ “subject [] device detected”

[x points earned/given] (optional)

_ “subject [] logged onto local Wi-Fi”

[x points earned/given] (optional)
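The event list above can be sketched as a lookup from event type to a screen line and a points value. The same counters would feed the "Given information" and "Points" score shown at the top left of the screen. The point values are hypothetical, because the proposal leaves them as "x points". The event type names, the `createSession`/`logEvent` helpers and the omission of the two optional events are also assumptions.

```javascript
// Hypothetical point values: the proposal leaves them as "x points".
const POINTS = { located: 5, entered: 50, left: 10, moving: 2, distance: 1, stayed: 3 };

// One template per event type listed above (the two optional ones are left out).
const TEMPLATES = {
  located: (id, e) => `subject ${id} currently located at ${e.zone}`,
  entered: (id) => `subject ${id} entered the space`,
  left: (id) => `subject ${id} left the space`,
  moving: (id, e) => `subject ${id} ${e.moving ? "moving" : "not moving"}`,
  distance: (id, e) => `subject ${id} distance to screen: ${e.cm} cm`,
  stayed: (id, e) => `subject ${id} stayed at ${e.zone} for ${e.seconds} seconds`,
};

function createSession(id) {
  // pieces and points feed the score shown at the top left of the screen
  return { id, pieces: 0, points: 0, seenTypes: new Set(), log: [] };
}

// Convert one detected event into a screen line and add its points to the score.
function logEvent(session, event) {
  const template = TEMPLATES[event.type];
  if (!template) throw new Error(`unknown event type: ${event.type}`);
  const points = POINTS[event.type];
  session.pieces += 1;
  session.points += points;
  session.seenTypes.add(event.type);
  const unit = points === 1 ? "point" : "points";
  const line = `${template(session.id, event)} [${points} ${unit} given]`;
  session.log.push(line);
  return line;
}

const session = createSession(7);
console.log(logEvent(session, { type: "entered" }));
// subject 7 entered the space [50 points given]
console.log(logEvent(session, { type: "distance", cm: 85 }));
// subject 7 distance to screen: 85 cm [1 point given]
console.log(session.pieces, session.points); // 2 51
```

Keeping the set of event types already seen (`seenTypes`) is what a "% of all possible data" counter would need.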

In addition come the instructions and comments from the device in reaction to the subject’s behaviors:

_ “Congratulations, you have now given the monitor 25 % of all possible data to collect!”

[when 25-50-75-90-95-96-97-98-99% of the total array of events has been detected at least once]

_ “Are you sure you don’t want to move to the left? The monitor has only collected data from 3 visitors so far in this spot!”

[if the subject stands still in a specific location]

_ “Congratulations, the monitor has reached 1000 pieces of information from you!”

[unlocked at x points earned/given]

_ “If you stay there for two more minutes, there is a 99% chance you will be in the top 100 of ALL TIME data-givers!”

[if the subject stands still in a specific location]

_ “Leaving already? The monitor has yet to collect 304759 crucial pieces of information from you!”

[if the subject is at the edge of the detection range]

_ “You are only 93860 pieces of information away from being the top one data-giver!”

[unlocked at x points earned/given]

_ “Statistics show that people staying for more than 5 minutes benefit me 10 times more on average!”

[randomly appears]

_ “The longer you stay on this spot, the more chance you have to win a “Lazy data-giver” badge”

[if the subject stands still for a long time in any location]
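The percentage messages above are triggered "when 25-50-75-90-95-96-97-98-99% of the total array of events has been detected at least once". One way to sketch this is to compare coverage before and after each new distinct event type, so that every congratulation fires only once. The function names and the coverage-by-distinct-types interpretation are assumptions.

```javascript
// Coverage thresholds (in %) taken from the text above.
const MILESTONES = [25, 50, 75, 90, 95, 96, 97, 98, 99];

// Return the milestones crossed when the number of distinct event types seen at
// least once goes from `previousSeen` to `currentSeen`, so that each
// congratulation message is shown only once per session.
function crossedMilestones(previousSeen, currentSeen, totalTypes) {
  const before = (100 * previousSeen) / totalTypes;
  const after = (100 * currentSeen) / totalTypes;
  return MILESTONES.filter((m) => before < m && after >= m);
}

function milestoneMessage(percent) {
  return `Congratulations, you have now given the monitor ${percent}% of all possible data to collect!`;
}

// With 8 event types, seeing the 2nd distinct type crosses 25%.
for (const m of crossedMilestones(1, 2, 8)) console.log(milestoneMessage(m));
```

With only a few event types, one new event can cross several thresholds at once (going from 7 to 8 of 8 crosses 90 to 99). Showing only the last element of the returned list would be one way to avoid a burst of messages.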

Responding positively to the monitor’s instructions unlocks special achievements and extra points:

—Accidental data-giver badge

[unlocked if the subject has passed the facility without deliberately wishing to interact with it] + [x points earned/given]

—Lazy data-giver badge

[unlocked if the subject has been standing still for at least one minute] + [x points earned/given]

—Novice data-giver badge

[unlocked if the subject has successfully completed 5 missions from the monitor] + [x points earned/given]

—Hyperactive data-giver badge

[unlocked if the subject has never stood still for 10 seconds within a 2-minute lapse] + [x points earned/given]

—Expert data-giver badge

[unlocked if the subject has successfully completed 10 missions from the monitor within 10 minutes] + [x points earned/given]

—Master data-giver badge

[unlocked if the subject has successfully logged onto the local Wi-Fi hotspot] + [x points earned/given] (optional)
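The badge conditions above can be sketched as checks on a per-visitor summary. The shape of the `stats` object is hypothetical. So is the reading of "never standing still for 10 seconds within 2 minutes lapse time", taken here as: detected for at least 2 minutes without any still period of 10 seconds or more. The Accidental data-giver badge is left out of this sketch, since it depends on the subject's intention.

```javascript
// `stats` is a hypothetical per-visitor summary kept by the installation:
//   longestStillSec  longest continuous time spent standing still (s)
//   observedSec      time since the visitor was first detected (s)
//   missionTimesSec  times (s) at which missions were completed, in ascending order
//   wifiLogged       whether the optional Wi-Fi login happened
function earnedBadges(stats) {
  const badges = [];
  if (stats.longestStillSec >= 60) badges.push("Lazy data-giver");
  if (stats.missionTimesSec.length >= 5) badges.push("Novice data-giver");
  // Interpretation (assumption): observed for at least 2 minutes without
  // ever standing still for 10 seconds.
  if (stats.observedSec >= 120 && stats.longestStillSec < 10) {
    badges.push("Hyperactive data-giver");
  }
  if (hasRunWithin(stats.missionTimesSec, 10, 600)) badges.push("Expert data-giver");
  if (stats.wifiLogged) badges.push("Master data-giver");
  return badges;
}

// True if some `count` consecutive completion times fit within `windowSec`.
function hasRunWithin(times, count, windowSec) {
  for (let i = 0; i + count - 1 < times.length; i++) {
    if (times[i + count - 1] - times[i] <= windowSec) return true;
  }
  return false;
}

console.log(earnedBadges({
  longestStillSec: 75, observedSec: 300,
  missionTimesSec: [10, 20, 30, 40, 50], wifiLogged: false,
})); // [ 'Lazy data-giver', 'Novice data-giver' ]
```

Checking consecutive completion times is enough for the Expert badge. If any 10 missions fit in 10 minutes, then 10 consecutive ones do as well.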

On the top left side of the screen, a user score displays the number of points generated by the collected pieces of information and by the special achievements unlocked through the monitor’s instructions:

—Given information: 298 pieces

[displays number of collected events]

—Points: 312000

[conversion of collected events and achievement into points]

On the top right of the screen, the user is ranked among x previous visitors, and the most prestigious badge earned so far is displayed below:

—subject global ranking: 3/42

[compares subject’s score to all final scores from previous subjects]

—subject status: expert data-giver

[displays the most valuable reward unlocked by the subject]
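The global ranking ("3/42") compares the current score with all final scores from previous subjects. This is a minimal sketch. Two points are assumptions: ties share the better rank, and the total counts the previous visitors plus the current one. The function names are hypothetical.

```javascript
// Rank = 1 + number of previous final scores strictly higher than the current one,
// so ties share the better rank (assumption). The total counts the previous
// visitors plus the current one (also an assumption).
function globalRanking(currentScore, previousScores) {
  const higher = previousScores.filter((s) => s > currentScore).length;
  return { rank: higher + 1, total: previousScores.length + 1 };
}

function rankingLabel(currentScore, previousScores) {
  const { rank, total } = globalRanking(currentScore, previousScores);
  return `subject global ranking: ${rank}/${total}`;
}

console.log(rankingLabel(312000, [500000, 400000, 100000, 2000]));
// subject global ranking: 3/5
```

Because the rank is recomputed from the stored scores each time, it can be updated live while the visitor's points grow.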

When leaving the detection range, the subject gets a warning message and a countdown starts, encouraging them to decide quickly to come back:

—“Are you sure you want to leave? You have 5-4-3-2-1-0 seconds to come back within the detection range”

[displayed as long as the subject remains completely undetected]

If the subject stays out of the detection range for more than 5 seconds, the monitor will also display a thank-you message, and a receipt listing the amount of money gathered, the achievements, the ranking, the complete list of collected information and a QR code will be printed with a thermal printer. The QR code will link to my thesis.

—“Thank you for helping today, don’t forget to take your receipt in order to collect and review your achievements”

[displayed after 5 seconds of being undetected]

To collect, read and/or use that piece of information, the visitor will inevitably have to come back within the detection range and, intentionally or not, reactivate the data-tracking game. It is therefore impossible to leave the detection area without leaving at least one piece of your own information printed in the space. Because of this, the physical space should gradually be invaded by tickets scattered on the floor. As in archaeology, these tickets give future subjects a precise trace of the behavior and actions of previous subjects.
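The leaving sequence above (a 5-4-3-2-1-0 countdown, then a thank-you message and a printed receipt) can be sketched as a small per-frame monitor. `printReceipt` is a hypothetical callback standing in for the thermal-printer code. The shortened message strings and the phase names are also assumptions. The receipt is printed once per departure, and the visitor coming back to pick it up starts a new "active" phase, as described in the text.

```javascript
const LEAVE_GRACE_MS = 5000; // 5-second countdown from the text above

// Returns an update function to call every frame with the current time and
// whether anybody is detected. `printReceipt` is a hypothetical callback that
// would drive the thermal printer; it is called once per departure.
function createLeaveMonitor(printReceipt) {
  let lastDetectedMs = null;
  let printed = false;
  return function update(nowMs, detected) {
    if (detected) {
      lastDetectedMs = nowMs;
      printed = false;
      return { phase: "active" };
    }
    if (lastDetectedMs === null) return { phase: "idle" }; // nobody seen yet
    const elapsed = nowMs - lastDetectedMs;
    if (elapsed <= LEAVE_GRACE_MS) {
      // Counts 5, 4, 3, 2, 1, 0 as the grace period runs out
      const secondsLeft = Math.ceil((LEAVE_GRACE_MS - elapsed) / 1000);
      return {
        phase: "countdown",
        secondsLeft,
        message: `Are you sure you want to leave? You have ${secondsLeft} seconds to come back within the detection range`,
      };
    }
    if (!printed) {
      printed = true;
      printReceipt();
    }
    return { phase: "receipt", message: "Thank you for helping today, don't forget to take your receipt" };
  };
}

let receipts = 0;
const update = createLeaveMonitor(() => { receipts += 1; });
update(0, true);
console.log(update(1500, false).secondsLeft); // 4
```

The `printed` flag turns the continuous "undetected" condition into a single printing event, which matters because `update` runs on every frame.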

Why do you want to make it?

When browsing online and/or using connected devices in the physical world, even the most innocent action or piece of information can be invisibly recorded, valued and translated into informational units, subsequently generating profit for monopolistic companies. While social platforms, brands, public institutions and governments explicitly promote the use of monitoring practices in order to better serve or protect us, we could also consider these techniques as implicitly political, playing on dynamics of visibility and invisibility in order to assert new forms of power over targeted audiences.

In the last decade, a strong mistrust of new technologies has formed in public opinion, fueled by events such as the revelations of Edward Snowden, the Cambridge Analytica scandal or the proliferation of fake news on social networks. We have also seen many artists take up the subject, sometimes with activist purposes. But even if a small number of citizens have begun to consider the social and political issues related to mass surveillance, and some individuals/groups/governments/associations have taken legal action, surveillance capitalism still remains widely accepted, often because it is ignored and/or misunderstood.

Big tech companies have been earning billions of dollars from the data that we freely provide every day, including through the sale of our personal information. With that money, they have also been able to further develop deep machine learning programs and powerful recommendation systems, and to broadly expand their range of services in order to track us in all circumstances and secure their monopolistic status. Even if we might consider this realm specific to the online world, we have seen these same companies gradually become involved in monitoring the physical world and our human existences in a wide array of contexts: for example, with satellite and street photography (Google Earth, Street View), geolocation systems, simulated three-dimensional environments (augmented reality, virtual reality or the metaverse) or extensions of our brains and bodies (voice assistants and wearable devices). Ultimately, this reality has seen the emergence not only of a culture of surveillance but also of self-surveillance, as evidenced by the popularity of self-tracking and data-sharing apps, which legitimize and encourage the datafication of the body for capitalistic purposes.

Over the last 15 years, self-tracking tools have made their way to consumers. I believe that this trend shows how ambiguous our relationship with tools that allow such practices can be. Through my work, I do not wish to position myself as a whistleblower, a teacher or an activist. Indeed, adopting such positions would be hypocritical, given my daily use of tools and platforms that resort to mass surveillance. Instead, I wish to propose an experience that highlights the contradictions in which you and I, internet users and human beings, can find ourselves. This contradiction is characterized by a paradox between our concern about the intrusive surveillance practices of the Web giants (and their effects on societies and humans) and our entertainment, or even active engagement, with the tools/platforms through which this surveillance is operated/allowed. By doing so, I want to ask how these companies still manage to get our consent and which human biases they exploit in order to do so. This is why my graduation work and my thesis will investigate the effects of gamification, gambling and reward systems, as well as the aestheticization of data/self-data, as means to hook our attention, generate ever more interactions and steer our behaviors.

How do you plan to make it and on what timetable?

I am developing this project with Arduino Uno/Mega boards, an array of ultrasonic sensors, P5.js and screens.

How does it work?

The ultrasonic sensors can detect obstacles in a physical space and measure the distance between the sensor and the obstacle(s) by sending out an ultrasound pulse and receiving its echo. The Arduino Uno/Mega boards are microcontrollers that receive this information and process it in a program that converts these values into mm/cm/m and also maps the space into an invisible grid. Ultimately, the values written to the Arduino’s serial port can be sent to P5.js through P5.serialcontrol. P5.js then allows greater freedom in the way the information can be displayed on the screens.
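The conversion described above follows from the echo time. The pulse travels to the obstacle and back, so distance = echo time × speed of sound / 2. With sound at about 343 m/s (0.0343 cm/µs) in air at roughly 20 °C, an echo of about 5831 µs corresponds to about 100 cm. This is a minimal sketch of that arithmetic, plus one hypothetical way to build the "invisible grid": each sensor of the array is a column and the distance is cut into rows. On the Arduino side, the echo time would typically come from `pulseIn(echoPin, HIGH)` with an HC-SR04-style sensor. The sensor model, the 50 cm row depth and the 400 cm range are assumptions, as the document does not name them.

```javascript
// Speed of sound in air at about 20 °C: ~343 m/s = 0.0343 cm per microsecond.
const SPEED_OF_SOUND_CM_PER_US = 0.0343;

// The echo time covers the trip to the obstacle and back, hence the division by 2.
function echoToCm(echoMicros) {
  return (echoMicros * SPEED_OF_SOUND_CM_PER_US) / 2;
}

// Hypothetical "invisible grid": each sensor of the array is one column and the
// distance in front of it is cut into rows of `rowDepthCm`.
// Returns null when nothing is detected within range.
function toGridCell(sensorIndex, distanceCm, rowDepthCm = 50, maxRangeCm = 400) {
  if (!(distanceCm > 0) || distanceCm > maxRangeCm) return null;
  return { col: sensorIndex, row: Math.floor(distanceCm / rowDepthCm) };
}

const cm = echoToCm(5831); // about 100 cm
console.log(cm.toFixed(1), toGridCell(2, cm)); // 100.0 { col: 2, row: 2 }
```

The same arithmetic could run on the Arduino before sending centimetres over serial, or in P5.js if raw echo times are sent instead. Doing it on the Arduino keeps the serial lines short and readable in the serial monitor.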

Process:

1st semester: Building a monitoring device, converting human actions into events, and events into visual feedback

During the first semester, I am focusing on exploring monitoring tools that can be used in the physical world, with specific attention to ultrasonic sensors. Being new to Arduino programming, my way of working is to start from the smallest and simplest prototype and gradually increase its scale/technicality until reaching a human/architectural scale. Prototypes are subject to testing, documentation and comments, which help define which direction to follow. The first semester also allows me to experiment with different kinds of screens (LCD screens, touch screens, computer screens, TV screens) until finding the most adequate screen(s) for the final installation. Before building the installation, the project goes through several sketches and 3D animated simulations, exploring different scenarios and narratives. By the end of the semester, the goal is to be able to convert a specific range of human actions into events and visual feedback, creating a feedback loop from human behaviors being converted into information, to the translation of this information into written/visual feedback, to the effects of this feedback on human behavior, and so on.

2nd semester: Implementing gamification with the help of collaborative filtering, point system and ranking.

During the second semester, the focus is on building and implementing a narrative with the help of gaming mechanics that will encourage humans to feed the data-gathering device themselves. An overview of how it works is presented here in the project proposal and will be subject to further developments in the practice.

To summarize the storyline, the subject, once positioned in the detection zone, finds herself/himself unwillingly cast as the main actor of a data collection game. Her/his mere presence generates a number of points/dollars displayed on a screen, growing as she/he stays within the area. The goal is simple: to get a higher score/rank and unlock achievements by acting as recommended by a data collector. This can be done by setting clear goals/rewards for the subject, comparing their performance to that of all previous visitors, giving unexpected messages/rewards, and giving an aesthetic value to the displayed information.

The mechanism is based on a sample of physical events that were already explored in the first semester of prototyping (detection of movements, positions, time spent standing still or moving, and entry into or exit from a specific detection area). Every single detected event in this installation is stored in a data bank and, with the help of collaborative filtering, will allow the display of custom recommendations such as:

_ “Congratulations, you have now given the monitor 12 % of all possible data to collect”

_ “Are you sure you don’t want to move to the left? The monitor has only collected data from 3 visitors so far in this spot”

_ “Congratulations, the monitor has reached 1000 pieces of information from you!”

_ “If you stay there for two more minutes, there is a 99% chance you will be in the top 100 of ALL TIME data-givers”

_ “Leaving already? The monitor still has 304759 crucial pieces of information to collect from you”

_ “You are only 93860 actions away from being the top one data-giver”

_ “Statistics show that people staying for more than 5 minutes are on average 10 times more beneficial to me”

_ “The longer you stay on this spot, the more chance you have to win a “Lazy data-giver” badge”
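The proposal does not say which collaborative filtering variant it will use. The sketch below assumes the common user-based form. Each visitor is a vector of event counts; the keys such as `zone:left` are hypothetical. The most similar previous visitors are found by cosine similarity. The events they produced, but the current visitor has not yet produced, are then scored by similarity-weighted counts. The function names and the neighbourhood size `k = 3` are also assumptions.

```javascript
const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Each visitor is an object counting their events, e.g. { "zone:left": 3, still: 2 }.
// Cosine similarity between two such event-count vectors.
function cosine(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (const [key, value] of Object.entries(a)) {
    normA += value * value;
    if (has(b, key)) dot += value * b[key];
  }
  for (const value of Object.values(b)) normB += value * value;
  return normA > 0 && normB > 0 ? dot / Math.sqrt(normA * normB) : 0;
}

// User-based collaborative filtering: find the `k` most similar previous visitors
// and score the events the current visitor has not produced yet.
function recommendEvent(current, previous, k = 3) {
  const neighbours = previous
    .map((visitor) => ({ visitor, sim: cosine(current, visitor) }))
    .filter((n) => n.sim > 0)
    .sort((x, y) => y.sim - x.sim)
    .slice(0, k);
  const scores = {};
  for (const { visitor, sim } of neighbours) {
    for (const [event, count] of Object.entries(visitor)) {
      if (!has(current, event)) scores[event] = (scores[event] || 0) + sim * count;
    }
  }
  let best = null;
  for (const [event, score] of Object.entries(scores)) {
    if (best === null || score > scores[best]) best = event;
  }
  return best; // null when there is nothing to recommend
}

const current = { "zone:center": 4, still: 2 };
const previous = [
  { "zone:center": 3, still: 2, "zone:left": 5 },
  { "zone:center": 4, still: 1, "zone:left": 1, moving: 6 },
  { "zone:right": 7 },
];
console.log(recommendEvent(current, previous)); // zone:left
```

A result such as `zone:left` could then be phrased as "Are you sure you don't want to move to the left?". Messages based on simple counts, such as "only 3 visitors so far in this spot", would not need collaborative filtering at all.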

The guideline is set out here, but will be constantly updated with the help of experiments and the results observed during the various moments of interaction between the students and the algorithm. For this purpose, the installation under construction will be left active and autonomous in its place of conception (the studio) and will allow anyone who deliberately wishes to interact with it to do so. Beyond the voluntary interactions, my interest is also to see what can be extracted from people simply passing in front of this installation. In addition, some of the mechanics of the installation will be further explored by collaborating with other students and setting up more ephemeral and organized experiences with participants (e.g. 15 February 2022 with Louisa).

This semester will also include the creation of a definitive set of illustrations that help engage the participants of the installation in a more emotional way; the illustrations will be made by an illustrator/designer with whom I usually collaborate.

3rd semester: Building the final installation for the final assessment and graduation show; test runs, debugging and final touches.

During the third semester, the installation should be set up in the school, in the alumni area next to the XPUB studio, for the final assessment, and ultimately set up again at WORM for the graduation show. I am interested in placing this installation in spaces of heavy human circulation (such as hallways) that would more easily involve the detection of people and highlight the intrusive aspect of such technologies. The narrative, the mechanics, the illustrations and the graphic aspect should be finalized at the beginning of the 3rd semester, and subjected to intense test runs throughout that period until the deadline.

Relation to larger context

As GAFAM companies face more and more legal challenges and are held accountable for a growing number of social and political issues around the world, the pandemic has made all of us more dependent than ever on the online services these companies provide, and has in some ways forced our consent. While two decades of counter-terrorism measures legitimized domestic and public surveillance techniques such as online and video monitoring, the current public health crisis has made the use of new technologies even more necessary for regulating access to public spaces and services, but also for socializing, working together, accessing culture, etc. In many countries, from one day to the next and for an undetermined time, it became necessary to carry a smartphone (or a printed QR code) in order to access transport, entertainment, cultural and catering services, but also to do simple things such as looking at the menu in a bar/restaurant or placing an order. Thus, this project takes place in a context where techno-surveillance has definitely taken a determining place in the way we access spaces and services related to the physical world.

Data Marketisation / Self Data: Quantified Self / Attention Economy / Public Health Surveillance / Cybernetics

Relation to previous practice?

During my previous studies in graphic design, I began engaging with new media by making a small online reissue of Raymond Queneau’s book Exercices de Style. In this issue, called Incidences Médiatiques (2017), the user/reader was encouraged to explore, in a less linear way, the 99 different versions of the same story written by the author. The idea was to consider each user’s graphical user interface as a unique reading context. It determined which story could be read, depending on the device used by the reader, and the user could navigate through these stories by resizing the Web window, changing browser or switching to a different device.

As part of my graduation project, Media Spaces (2019), I wanted to reflect on the status of networked writing and reading by programming my thesis in the form of a Web-to-print website. Subsequently, this website was translated into physical space as a printed book and a series of installations displayed in an exhibition space that followed the online structure of my thesis (home page, index, part 1-2-3-4). In that way, I was interested in inviting visitors to physically experience some aspects of the Web.

As a first-year student of Experimental Publishing, I continued to work in that direction, eventually creating a meta-website called Tense (2020) that displays the invisible HTML <meta> tags inside an essay in order to affect our interpretation of the text. In 2021, I worked on a geocaching pinball game highlighting invisible Web events, and on a Web oscillator whose amplitude and frequency range were directly related to the user’s cursor position and browser window size.

While it has always been clear to me that these works were motivated by the desire to define media as context, subject and/or content, the projects presented here have often made use of surveillance tools to detect and translate user information into feedback, participating in the construction of an individualized narrative and/or a unique viewing/listening context (interaction, screen size, browser, mouse position). The current work aims to take a critical look at the effect of these practices in the context of techno-surveillance.

Similarly, projects such as Media Spaces have sought to explore the growing confusion between human and Web user, between physical and virtual space, and between online and offline spaces. This project aims to show that this growing confusion may eventually lead to our being tracked in all circumstances, even during our most innocuous daily activities.

Selected References

Works:

- M. DARKE, fairlyintelligent.tech (2021) https://fairlyintelligent.tech/

« invites us to take on the role of an auditor, tasked with addressing the biases in a speculative AI »

Alternatives to techno-surveillance

- MANUEL BELTRAN, Data Production Labor (2018) https://v2.nl/archive/works/data-production-labour/

Exposes humans as producers of useful intellectual labor that benefits the tech giants, and shows the uses that can be made of that labor.

- TEGA BRAIN and SURYA MATTU, Unfit-bits (2016) http://tegabrain.com/Unfit-Bits

Shows that tracking devices can easily be manipulated and are therefore fallible and subjective. The artists do this by simply placing a self-tracker (a connected bracelet) in another context, such as on other objects, in order to confuse it.

- TREVOR PAGLEN and JACOB APPELBAUM, Autonomy Cube (2014), https://www.e-flux.com/announcements/2916/trevor-paglen-and-jacob-appelbaumautonomy-cube/

Allows galleries to offer encrypted Internet access and communications through the Tor network.

- STUDIO MONIKER, Clickclickclick.click (2016) https://clickclickclick.click/

You are rewarded for exploring all the interactive possibilities of your mouse, revealing how our online behaviors can be monitored and interpreted by machines.

- RAFAEL LOZANO-HEMMER, Third Person (2006) https://www.lozano-hemmer.com/third_person.php

A portrait of the viewer is drawn in real time by active words, which automatically appear to fill his or her silhouette.

- JONAS LUND, What you see is what you get (2012) http://whatyouseeiswhatyouget.net/

«Every visitor to the website’s browser size, collected, and played back sequentially, ending with your own.»

- USMAN HAQUE, Mischievous Museum (1997) https://haque.co.uk/work/mischievous-museum/

Readings of the building and its contents are therefore always unique; no two visitors share the same experience.

Books & Articles:

- SHOSHANA ZUBOFF, The Age of Surveillance Capitalism (2020)

Warns against this shift towards a «surveillance capitalism». Her thesis argues that, by appropriating our personal data, the digital giants are manipulating us and modifying our behavior, attacking our free will and threatening our freedoms and personal sovereignty.

- EVGENY MOROZOV, Capitalism’s New Clothes (2019)

An extensive analysis and critique of Shoshana Zuboff’s research and publications.

- BYRON REEVES AND CLIFFORD NASS, The Media Equation, How People Treat Computers, Television, and New Media Like Real People and Places (1996)

A pioneering study of the relationship between humans and machines, and of how humans relate to them.

- OMAR KHOLEIF, Goodbye, World! Looking at Art in the Digital Age (2018)

The author shares his own data as a journal in one part of the book, while in another part he questions how the Internet has changed the way we perceive, relate to, and interact with images.

- KATRIN FRITSCH, Towards an emancipatory understanding of widespread datafication (2018)

Suggests that, in response to our surveillance society, artists can offer activist responses that do not necessarily require technological literacy, and can instead promote strong counter-metaphors and/or counter-uses of these intrusive technologies.

Prototyping

Arduino

An early sketch comparing and questioning our Spectator experience of a physical exhibition space (where everything is often fixed and institutionalized) with our User experience of a Web space (where everything is far more elastic, unpredictable and obsolete). I’m interested in how differently the same Web page can be rendered for different users depending on their technological context (device type, browser, IP address, screen size, zoom level, default settings, updates, luminosity, add-ons, restrictions, etc.). I would like to create a physical exhibition space/installation inspired by the interface of a Web browser window, in order to play with exhibition parameters such as the distance between the spectator and the artwork, the circulation in space, the luminosity/lighting of the artwork(s), the sound/acoustics, etc.

The distance between the wall behind the spectator and the artwork has to be translated into a variable that can affect sound or light in the room.

The wall's position could be linked in real time to the dimensions of a user interface, using an Arduino and a motor.
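A minimal sketch of that translation follows. It is written as plain C++ so it can be tried on a computer before moving it to the Arduino. It assumes, hypothetically, that the movable wall travels between 50 cm and 400 cm from the artwork, and that this range should drive a simulated browser width between 320 px and 1920 px. All four numbers are placeholders, to be replaced by measured values.

```cpp
#include <algorithm>

// Hypothetical calibration values: adjust them to the real room and screen.
constexpr double kWallMinCm = 50.0;    // wall closest to the artwork
constexpr double kWallMaxCm = 400.0;   // wall furthest from the artwork
constexpr int kViewportMinPx = 320;    // narrowest simulated browser window
constexpr int kViewportMaxPx = 1920;   // widest simulated browser window

// Linearly converts the wall distance into a viewport width in pixels.
// Readings outside the calibrated range are clamped first, so a noisy
// sensor value cannot produce an impossible window size.
int wallDistanceToViewportPx(double wallCm) {
    const double clamped = std::clamp(wallCm, kWallMinCm, kWallMaxCm);
    const double t = (clamped - kWallMinCm) / (kWallMaxCm - kWallMinCm);  // 0.0 .. 1.0
    // Round to the nearest whole pixel.
    return static_cast<int>(kViewportMinPx + t * (kViewportMaxPx - kViewportMinPx) + 0.5);
}
```

Clamping before scaling follows the same idea as Arduino's `constrain()` followed by `map()`. Floating point is used here only to keep the arithmetic readable.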

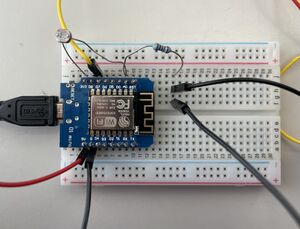

Create a connected telemeter with an Arduino, an ultrasonic sensor (HC-SR04) and an ESP8266 module connected to the Internet

It seems possible to build your own telemeter with an Arduino by adding an HC-SR04 ultrasonic sensor.

By doing so, the values captured by the sensor could potentially be used directly as a variable.

Then, with the ESP8266 module, the values could be sent to a database on the Internet.

Then I could open that website from anywhere, see the values, and use them to control light, sound or anything else I wish.
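The sketch below shows what one reading could look like once it leaves the board. It is a small piece of plain C++ that formats a reading as the path of an HTTP GET request. It is only a sketch under stated assumptions: the `/api/reading` endpoint and the `sensor`, `cm` and `t` parameter names are hypothetical, and the actual Wi-Fi/HTTP code for the ESP8266 is left out.

```cpp
#include <cstdio>
#include <string>

// Builds the path of a hypothetical HTTP GET request that an
// Internet-connected module could send to a small database server.
// The "/api/reading" endpoint and the parameter names are placeholders.
// The sensor id is assumed to be a plain word (no URL encoding is done).
std::string buildReadingPath(const char* sensorId, long distanceCm,
                             unsigned long millisSinceBoot) {
    char buffer[128];
    std::snprintf(buffer, sizeof(buffer), "/api/reading?sensor=%s&cm=%ld&t=%lu",
                  sensorId, distanceCm, millisSinceBoot);
    return std::string(buffer);
}
```

Sending the time since boot alongside the distance lets the server order readings even if some requests arrive late.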

Tool/Material list:

- Telemeter (used to measure the distance between the device and an obstacle)

- Rails

- Handles

- Wheels

- Movable light wall

- Fixed walls

- USB Cable

- Connection cables

- Arduino

- ESP8266

About the ultrasonic Sensor (HC-SR04)

Characteristics

Here are a few technical characteristics of the HC-SR04 ultrasonic sensor:

- Power supply: 5 V.

- Consumption in use: 15 mA.

- Distance range: 2 cm to 5 m.

- Resolution or accuracy: 3 mm.

- Measuring angle: < 15°.
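As a worked example of how these numbers relate to the echo pulse, here is a short C++ sketch. It assumes sound travels at roughly 343 m/s (dry air, about 20 °C), which gives the 29.1 µs per cm constant used in the sketches below.

```cpp
// Speed of sound in dry air at about 20 °C: roughly 343 m/s = 0.0343 cm/µs,
// i.e. sound needs about 29.1 µs to travel 1 cm.
constexpr double kMicrosPerCm = 29.1;

// The echo pin stays HIGH for the round trip (sensor -> obstacle -> sensor),
// so the one-way distance is half the travel time divided by 29.1 µs/cm.
double echoMicrosToCm(double echoMicros) {
    return (echoMicros / 2.0) / kMicrosPerCm;
}

// Inverse: how long the echo pulse lasts for an obstacle at a given distance.
double cmToEchoMicros(double distanceCm) {
    return distanceCm * 2.0 * kMicrosPerCm;
}
```

With the 2 cm to 5 m range listed above, the echo pulse lasts from about 116 µs to about 29 ms. This is useful to know when choosing a timeout for reading the echo pin.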

More information about the sensor here and here.

Where to buy the ultrasonic Sensor (HC-SR04)

- 1 piece = 9,57 € - https://fr.shopping.rakuten.com/offer/buy/7112482554/module-de-mesure-a-ultrasons-hc-sr04-capteur-de-mesure-de-distance-5v-pour.html?t=7036&bbaid=8830210388

- 20 pieces = 34,22 € - https://fr.shopping.rakuten.com/offer/buy/7112482554/module-de-mesure-a-ultrasons-hc-sr04-capteur-de-mesure-de-distance-5v-pour.html?t=7036&bbaid=8830210388

Prototype 1: Arduino + Light-Dependent Resistor (LDR) + Servo

During a workshop, we started with a very basic Arduino clone kit, an LED, a servo motor, and a light sensor (LDR). After making a few connections, we began to understand how it works.

#include <Servo.h>

Servo myservo; // create servo object to control a servo

int pos = 0; // variable to store the servo position

int ldr = 0; // variable to store light intensity

void setup() {

Serial.begin(9600); // begin serial communication, NOTE:set the same baudrate in the serial monitor/plotter

myservo.attach(D7); // attaches the servo on pin D7 to the servo object

}

void loop() {

// let's put the LDR value in a variable we can reuse

ldr = analogRead(A0);

//the value of the LDR is between 400-900 at the moment

//the servo can only go from 0-180

//so we need to translate 400-900 to 0-180

//also the LDR value might change depending on the light of day

//so we need to 'constrain' the value to a certain range

ldr = constrain(ldr, 400, 900);

// now we can translate the value

ldr = map(ldr, 400, 900, 0, 180);

// let's print the mapped value to the serial monitor to see if we did a good job

Serial.println(ldr);

// now we can move the servo according to the light/our hand!

myservo.write(ldr); // tell the servo to go to the angle stored in 'ldr'

delay(15); // give the servo time to reach the position

}
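To check the numbers of this sketch without the board, the two Arduino helpers can be mirrored in plain C++. This is a sketch for testing only. The `mapValue` formula is the integer formula given in the Arduino reference for `map()`, and the function names are my own, chosen to avoid clashing with `std::map`.

```cpp
#include <cassert>

// Plain C++ stand-ins for Arduino's constrain() and map(), so the LDR-to-servo
// conversion can be checked on a computer.
long constrainValue(long x, long low, long high) {
    if (x < low) return low;
    if (x > high) return high;
    return x;
}

// Integer linear mapping, as in Arduino's map() (fractions are truncated).
long mapValue(long x, long inMin, long inMax, long outMin, long outMax) {
    assert(inMax != inMin);  // avoid division by zero
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Same steps as the sketch: clamp the raw LDR reading to 400-900,
// then translate it into a servo angle between 0 and 180 degrees.
long ldrToServoAngle(long rawLdr) {
    const long clamped = constrainValue(rawLdr, 400, 900);
    return mapValue(clamped, 400, 900, 0, 180);
}
```

For example, a reading of 650 (halfway between 400 and 900) gives an angle of 90. Any reading below 400 keeps the servo at 0.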

Split Screen Arduino + Sensor + Serial Plotter + Responsive Space

Here I am trying to show the simultaneous responses of the sensor, the values, and the simulation.

Prototype 2: Arduino + Ultrasonic sensor

For this very simple first sketch, and for later ones, I include the NewPing library, which makes the ultrasonic sensor much easier to use (timing, median of several pings, unit conversion).

#include <NewPing.h>

int echoPin = 10;

int trigPin = 9;

NewPing MySensor(trigPin, echoPin); // creates a NewPing object for this trigger/echo pin pair

void setup() {

// put your setup code here, to run once:

Serial.begin(9600);

}

void loop() {

// put your main code here, to run repeatedly:

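unsigned long duration = MySensor.ping_median(); // median echo time (µs) of several pings

unsigned int distance = MySensor.convert_cm(duration); // convert the echo time to centimetres, matching the "cm" label below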

Serial.print(distance);

Serial.println("cm");

delay(250);

}
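`ping_median()` takes several pings and returns their median. The idea behind it can be sketched in plain C++. This is a generic median filter written for illustration, not the NewPing source code. As an assumption, failed pings are taken to be reported as 0 and are ignored.

```cpp
#include <algorithm>
#include <vector>

// Median of several echo times, ignoring failed pings (reported as 0).
// A single stray reflection, from a passing person or a wall corner for
// example, cannot pull the result away as it would with a plain average.
unsigned long medianEchoMicros(std::vector<unsigned long> samples) {
    samples.erase(std::remove(samples.begin(), samples.end(), 0UL), samples.end());
    if (samples.empty()) return 0;  // no valid echo: report "nothing detected"
    std::sort(samples.begin(), samples.end());
    return samples[samples.size() / 2];  // upper middle value for an even count
}
```

With the readings 1200, 1180, 9000, 1190 and one failed ping, the outlier 9000 does not affect the result, which stays at 1200 µs.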

Prototype 3: Arduino Uno + Sensor + LCD (+ LED)

Everything put together, following https://www.youtube.com/watch?v=GOwB57UilhQ

#include <LiquidCrystal.h>

LiquidCrystal lcd(10,9,5,4,3,2);

const int trigPin = 11;

const int echoPin = 12;

long duration;

int distance;

void setup() {

// put your setup code here, to run once:

analogWrite(6,100);

lcd.begin(16,2);

pinMode(trigPin, OUTPUT); // Sets the trigPin as an Output

pinMode(echoPin, INPUT); // Sets the echoPin as an Input

Serial.begin(9600); // Starts the serial communication

}

void loop() {

long duration, distance;

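digitalWrite(trigPin, LOW); // start from a clean LOW level

delayMicroseconds(2);

digitalWrite(trigPin, HIGH); // the HC-SR04 expects a 10 µs HIGH pulse on TRIG

delayMicroseconds(10);

digitalWrite(trigPin, LOW);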

duration=pulseIn(echoPin, HIGH);

distance =(duration/2)/29.1;

Serial.print(distance);

Serial.println("CM");

delay(10);

// Prints the distance on the Serial Monitor

Serial.print("Distance: ");

Serial.println(distance);

lcd.clear();

lcd.setCursor(0,0);

lcd.print("Distance = ");

lcd.setCursor(11,0);

lcd.print(distance);

lcd.setCursor(14,0);

lcd.print("CM");

delay(500);

}

From this sketch onward, I started to consider sending the distance value directly to a computer, so that a Web page could be rendered according to it.
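On the computer side, the first step would be to turn the lines printed by the sketch back into numbers. The following is a minimal C++ sketch of that step. It assumes lines shaped like the sketch's `Distance: 42` output, and it leaves out how the serial port is opened and how the Web page is updated.

```cpp
#include <cstdlib>
#include <string>

// Parses one line printed by the sketch, e.g. "Distance: 42", and returns the
// distance in cm, or -1 if the line does not have that shape. A computer-side
// program (for instance one serving the Web page) could call this for every
// line read from the serial port.
long parseDistanceLine(const std::string& line) {
    const std::string prefix = "Distance: ";
    if (line.compare(0, prefix.size(), prefix) != 0) return -1;
    const char* digits = line.c_str() + prefix.size();
    char* end = nullptr;
    const long value = std::strtol(digits, &end, 10);  // stops at "\r" or "\n"
    if (end == digits) return -1;  // no number after the prefix
    return value;
}
```

The sketch also prints lines such as `42CM`. These are rejected here, so only one of the two duplicate outputs is used.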

Note: It looks like this sensor's maximum range is 119 cm, which is less than a third of the 4 m maximum range stated in the component description.

Prototype 4: Arduino Uno + Sensor + LCD + 2 LED = Physical vs Digital Range detector

Using a range of in-between values (15 to 20 cm) to activate the green LED

Once again, putting the simulation and the device in use side by side.

#include <LiquidCrystal.h>

#include <LcdBarGraph.h>

#include <NewPing.h>

LiquidCrystal lcd(10,9,5,4,3,2);

const int LED1 = 13;

const int LED2 = 8;

const int trigPin = 11;

const int echoPin = 12;

long duration; //travel time

int distance;

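long screensize; // simulated screen width in px (long, because distance*85 can exceed the 16-bit int limit of 32767)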

void setup() {

// put your setup code here, to run once:

analogWrite(6,100);

lcd.begin(16,2);

pinMode(trigPin, OUTPUT); // Sets the trigPin as an Output

pinMode(echoPin, INPUT); // Sets the echoPin as an Input

Serial.begin(9600); // Starts the serial communication

pinMode(LED1, OUTPUT);

pinMode(LED2, OUTPUT);

}

void loop() {

long duration, distance;

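digitalWrite(trigPin, LOW); // start from a clean LOW level

delayMicroseconds(2);

digitalWrite(trigPin, HIGH); // the HC-SR04 expects a 10 µs HIGH pulse on TRIG

delayMicroseconds(10);

digitalWrite(trigPin, LOW);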

duration=pulseIn(echoPin, HIGH);

distance = (duration/2)/29.1; // convert the echo time to centimetres

screensize = distance*85; // scale the room distance to a screen width in pixels (1 cm = 85 px)

Serial.print(distance);

Serial.println("CM");

Serial.print(screensize);

Serial.println("px");

delay(10);

if ((distance >= 15) && (distance<=20))

{

digitalWrite(LED2, HIGH);

digitalWrite(LED1, LOW);

}

else

{

digitalWrite(LED1, HIGH);

digitalWrite(LED2, LOW);

}

// Prints the distance on the Serial Monitor

Serial.print("Distance: ");

Serial.println(distance);

lcd.clear();

lcd.setCursor(0,0);

lcd.print("ROOM");

lcd.setCursor(6,0);

lcd.print(distance);

lcd.setCursor(9,0);

lcd.print("cm");

lcd.setCursor(0,1); // second row: a 16x2 LCD only has rows 0 and 1

lcd.print("SCR ");

lcd.print(screensize); // printed right after the label so 4- and 5-digit widths are not overwritten

lcd.print("x1080px");

delay(500);

}
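The two decisions this sketch makes can be isolated and tested on a computer with the following plain C++ sketch. The 85 px/cm factor and the 15 to 20 cm zone come from the sketch above. The fixed-width 32-bit type is used to show why `screensize` should not be a 16-bit `int` on an Arduino Uno.

```cpp
#include <cstdint>

// Host-side copy of the Prototype 4 decisions, for testing without hardware.
// On an Arduino Uno an int is 16 bits (max 32767), so distance * 85 no longer
// fits in an int beyond about 385 cm; a 32-bit type avoids that.
std::int32_t simulatedScreenWidthPx(std::int32_t distanceCm) {
    return distanceCm * 85;  // 1 cm of room depth = 85 px, as in the sketch
}

// True when the visitor stands in the 15-20 cm "in-between" zone
// that switches on the green LED.
bool inGreenZone(std::int32_t distanceCm) {
    return distanceCm >= 15 && distanceCm <= 20;
}
```

At the sensor's stated 4 m range, the simulated width would be 34000 px. This is already above what a 16-bit `int` can hold.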

I bought a second Arduino, two long breadboards, black cables and another LCD screen, and rebuilt the setup in this format.

For some reason, the new LCD screen does not fit into the breadboard, and I need more male-to-female cables to connect it correctly.

With this longer breadboard, I want to extend the system of range values and make it visible with LEDs and sounds.

How to get more digital pins [not working]

- How to use analog pins as digital pins https://www.youtube.com/watch?v=_AAbGLBWk5s

- Up to 60 more pins with Arduino Mega https://www.tinytronics.nl/shop/en/development-boards/microcontroller-boards/arduino-compatible/mega-2560-r3-with-usb-cable

I tried four different tutorials but still could not get it to work, which is strange, so I will give up and use an Arduino Mega instead =*(

Prototype 5: Arduino Uno + 3 Sensors + 3 LEDs

With a larger breadboard, I connected 3 sensors together. The next step will be to define different ranges of in-between values for each sensor in order to make a grid. To build this grid, I will add a second row of sensors like this one, in order to get x and y values in space.
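Once a second row of sensors gives a y value next to the x value, the position can be turned into a grid cell. The following is a minimal C++ sketch of that step, under hypothetical assumptions: one row of sensors measures along x, the perpendicular row measures along y, and the space is cut into a 4 × 4 grid of 50 cm cells. These numbers are placeholders.

```cpp
// Hypothetical grid: one sensor row measures the distance along x, a second
// row (facing a perpendicular direction) measures y. Each axis is cut into
// cells of kCellCm; all three values are assumptions to replace with real ones.
constexpr int kCellCm = 50;
constexpr int kColumns = 4;
constexpr int kRows = 4;

struct Cell {
    int column;  // 0 .. kColumns - 1, or -1 when outside the grid
    int row;     // 0 .. kRows - 1, or -1 when outside the grid
};

// Converts an (x, y) position in cm into the grid cell that contains it.
Cell locate(int xCm, int yCm) {
    if (xCm < 0 || yCm < 0) return {-1, -1};
    const int column = xCm / kCellCm;
    const int row = yCm / kCellCm;
    if (column >= kColumns || row >= kRows) return {-1, -1};
    return {column, row};
}
```

For example, a visitor detected 120 cm along x and 30 cm along y would be in column 2, row 0.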

Prototype 6: Arduino Uno + 3 Sensors + 12 LEDs

With 3 sensors placed on 2 long breadboards, and with a different set of range values, we can start mapping a space.
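The following plain C++ sketch shows how "a different set of range values" could decide which of the 12 LEDs lights up. It assumes that each of the 3 sensors drives 4 LEDs, one per distance band. The band limits are placeholders that start at the sensor's 2 cm minimum and stop near the 119 cm limit observed earlier.

```cpp
#include <array>

// Assumption: each of the 3 sensors drives 4 of the 12 LEDs, one LED per
// distance band. The band limits (in cm) below are placeholders.
constexpr std::array<int, 5> kBandEdgesCm = {2, 30, 60, 90, 120};

// Returns which of the sensor's 4 LEDs to light (0-3), or -1 when the
// reading falls outside every band (nothing detected, or too far).
int bandForDistance(int distanceCm) {
    for (int i = 0; i + 1 < static_cast<int>(kBandEdgesCm.size()); ++i) {
        if (distanceCm >= kBandEdgesCm[i] && distanceCm < kBandEdgesCm[i + 1]) return i;
    }
    return -1;
}

// Global LED number (0-11) for sensor 0, 1 or 2, or -1 when no LED applies.
int ledIndex(int sensor, int distanceCm) {
    const int band = bandForDistance(distanceCm);
    return band < 0 ? -1 : sensor * 4 + band;
}
```

Keeping the band edges in one array means the space can be re-mapped by changing a single line, without touching the loop.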

Prototype 7: Arduino Uno + 12 LEDs + 3 Sensors + Buzzer + Potentiometer + LCD