User:Federico

Federico or Poni who likes pigeons and purple and fans of computers and unzipping folders

Federico or Poni who doesn't like capitalism and yellow and labour and php

The Magic First Year

Prototy-ping pong

#0

A random picker for 'volunteers' from XPUB1 2020/21

Download the file from here and store it in the home folder of your computer

Just open the terminal/cmd and type:

python3 xpub.py
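The script itself is not reproduced on this page. As a rough idea of what such a picker could look like, here is a minimal sketch; the function name, the seed option and the list of names are made up for illustration and are not taken from xpub.py.

```python
import random

def pick_volunteers(names, how_many=1, seed=None):
    """Return `how_many` distinct names chosen at random."""
    rng = random.Random(seed)  # passing a seed makes the draw repeatable
    return rng.sample(names, how_many)

# Hypothetical list of names, for illustration only
classmates = ["Ada", "Grace", "Alan", "Linus"]
picked = pick_volunteers(classmates, how_many=2)
print("Volunteers:", ", ".join(picked))
```

Using `sample` instead of calling `choice` twice means nobody can be picked twice in the same round.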

#1

Since I do not like the first article of the Italian Constitution, which says

"Italy is a Democratic Republic, founded on work"[1]

I wanted to detourn the first 12 articles of the Constitution, taking content from Utopia by Thomas More and The Anarchist Cookbook.

Here is the Speculative Constitution!

import random #otherwise random does not work :)

#first parts of the first 12 articles of the Italian Constitution

it_articles = ["\n\nART.1 \nItaly is a Democratic Republic, founded", 'ART.2 \nThe Republic recognises and guarantees', 'ART.3 \nAll citizens have equal social dignity and are','ART.4 \nThe Republic recognises the right of all citizens to','ART.5 \nThe Republic, one and indivisible, recognises and promotes','ART.6 \nThe Republic shall protect','ART.7 \nThe State and the Catholic Church are','ART.8 \nAll religious confessions enjoy','ART.9 \nThe Republic shall promote the development of','ART.10 \nThe Italian legal system conforms to the generally recognised rules of','ART.11 \nItaly rejects war as an instrument of','ART.12 \nThe flag of the Republic is']

def load_fragments(path): #open an external .txt and cut it into short fragments

    with open(path) as source:

        text = source.read().replace('\n', ' ').replace('\r', '').replace('-', '') #delete useless stuff

    for separator in ('.', '"', ';', ' and '): #str.split takes one separator only, so turn them all into commas first

        text = text.replace(separator, ',')

    return [piece.strip() for piece in text.split(',') if piece.strip()] #drop empty pieces and stray spaces

utopia_splitted = load_fragments("Utopia.txt") #a list of fragments for each book

cookbook_splitted = load_fragments("anarchist_cookbook.txt")

export_path = './export.txt'

with open(export_path, 'w') as export: #This creates a txt file

    for i in range(5): #creates more possibilities, change the value to have more or fewer results

        for x in range(12):

            utopia_random = random.choice(utopia_splitted) #it takes a random fragment from the list

            cookbook_random = random.choice(cookbook_splitted)

            constitute = it_articles[x] #the articles stay in order

            #"a or b" always gives a when a is not empty, so choose between the two books at random instead

            speculative_constitute = constitute + ' ' + random.choice([utopia_random, cookbook_random])

            export.write(f"{speculative_constitute}.\n \n") #This writes the results to the txt file

            print(f'''{speculative_constitute}.\n''')
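A note on splitting a text on several separators: in Python, `"," or "."` is evaluated before `split` is called and simply gives `","`, so only commas are used. The sketch below shows the difference on a made-up sentence (not a quote from either book); using a regular expression is one possible way to list all the separators, not the only one.

```python
import re

text = "no money, no property. everything in common; and nobody in want"

# `"," or "."` evaluates to "," so this splits on commas only
only_commas = text.split("," or ".")

# a regular expression can name every separator at once
pieces = [p.strip() for p in re.split(r"[,.;]|\band\b", text) if p.strip()]

print(only_commas)
print(pieces)
```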

#2

I tried to re/create my favorite Twitter bot, il pleut

import random

width = 10

height = 10

drops = [',',' ',' ',' ']

rain = ''

for y in range(height): #one line of rain per row

    for x in range(width):

        rain += random.choice(drops)

    print(rain)

    rain = ''

#3

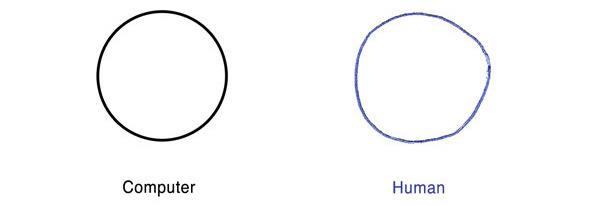

Minimal tool for converting an image to ASCII:

#import the libraries for URLs, images and aalib (for the ASCII)

from urllib.request import urlopen

from PIL import Image

import aalib

pic = Image.open('') #put the picture's path here

#or, to load it from the web instead:

pic = Image.open(urlopen('')) #put the picture's URL here

screen = aalib.AsciiScreen(width=100, height=50) #size of the ASCII output, in characters

pic = pic.convert('L').resize(screen.virtual_size) #convert the picture to greyscale and resize it to the size aalib renders from

screen.put_image((0,0), pic) #hand the picture to aalib, which computes the ASCII

print(screen.render()) #print the ASCII

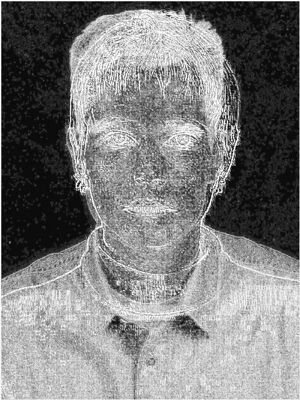

This tool mixes two pictures together (portraits work best) by taking their edges!

from PIL import Image, ImageChops, ImageFilter

from urllib.request import urlopen

pic1 = Image.open(r'') #Pic from a file OR

pic1 = Image.open(urlopen('https://d7hftxdivxxvm.cloudfront.net/?resize_to=fit&width=480&height=640&quality=80&src=https%3A%2F%2Fd32dm0rphc51dk.cloudfront.net%2FoMDDSm77JFzMIYbi81xOaw%2Flarge.jpg')) #Pic from an url

pic2 = Image.open(r'') #Pic from a file OR

pic2 = Image.open(urlopen('https://publicdelivery.b-cdn.net/wp-content/uploads/2020/01/Thomas-Ruff-Portrait-E.-Zapp-1990-scaled.jpg')) #Pic from an url

pic1 = pic1.convert("L").filter(ImageFilter.FIND_EDGES).filter(ImageFilter.Kernel((3, 3), (1, -3, -1, -1, 8, -1, 8, -1, -1), 1, 2)) #Create edges

pic2 = pic2.convert("L").filter(ImageFilter.FIND_EDGES).filter(ImageFilter.Kernel((3, 3), (9, -3, -1, -1, 8, -1, 8, -1, -1), 1, 10))

size1 = pic1.size

size2 = pic2.size

if size1 > size2: #make the sizes of the pics the same

    pic2 = pic2.resize(size1)

elif size2 > size1:

    pic1 = pic1.resize(size2)

pics = ImageChops.screen(pic1, pic2) #Mix the pics!

pics #Show the mix (in a notebook)

pics.save('mix.jpg') #Save the mix; save() returns nothing, so pics is not reassigned

Generate Perlin noise! IN PROGRESS

From terminal install:

pip3 install numpy

pip3 install git+https://github.com/pvigier/perlin-numpy

pip3 install matplotlib

from PIL import Image, ImageDraw

import matplotlib.pyplot as plt

import numpy as np

from matplotlib.animation import FuncAnimation #??

from perlin_numpy import generate_perlin_noise_2d, generate_fractal_noise_2d

np.random.seed(0)

noise = generate_perlin_noise_2d((256, 256), (8, 8))

plt.imshow(noise, cmap='gray', interpolation='lanczos')

plt.colorbar()

np.random.seed(0)

noise = generate_fractal_noise_2d((256, 256), (8, 8), 5)

plt.figure()

plt.imshow(noise, cmap='gray', interpolation='lanczos')

plt.colorbar()

#plt.matplotlib.animation.PillowWriter(*args, **kwargs) #not wired up yet: args and kwargs are not defined

plt.show()

PillowWriter is probably the way to save the results with Pillow

#4

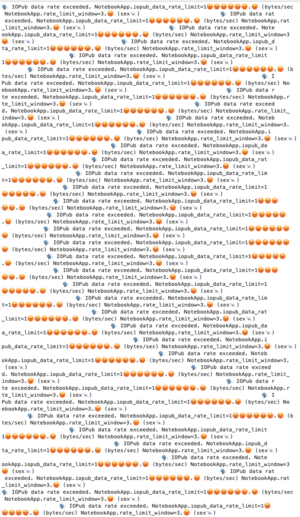

First Episode of Quilting

Everybody makes a patch which will be included in an A0 "Quilt".

import random

width = 82 #canvas measures

height = 74

sentence = '🗣 IOPub data rate exceeded. NotebookApp.iopub_data_rate_limit=1000000.0 (bytes/sec) NotebookApp.rate_limit_window=3.0 (secs)'

sentence = sentence.replace('secs','sex🍬').replace('0', '😡') #replace stuff with funny stuff

lines = ''

lista = []

for x in range(width): #any number would do, since the counter below cuts the text off

    lines += sentence #fill the lines with the text

    lines += ' ' * (x+25) #and also fill with big aesthetic spaces which will create a beautiful wave

tmp_line = ''

counter = 0

for character in lines:

    if counter == height: #it stops when rows = 74

        break

    elif len(tmp_line) < width:

        tmp_line += character

    else:

        lista.append(tmp_line) #the row is full (82 characters), store it

        tmp_line = character #start the next row with the current character, so nothing gets lost

        counter += 1

patch = "\n".join(lista) #builds the patch by joining the rows

f = 'patcherico.txt' #export the patch for the quilt in .txt

with open(f, 'w') as export: #the file is closed automatically after writing

    export.write(patch)

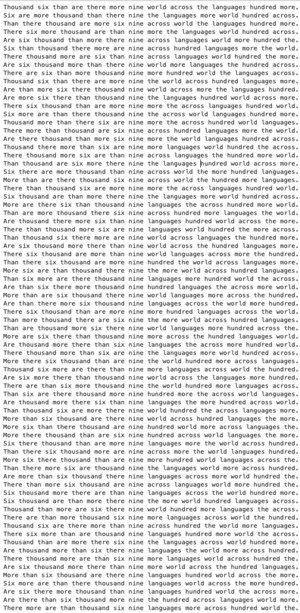
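The three quilting episodes all cut a long string into rows of 82 characters and stop at 74 rows. That step can also be sketched as a small reusable function; the function name and the sample text are assumptions for illustration, and slicing is used here in place of the character-by-character loop.

```python
def wrap_to_canvas(text, width=82, height=74):
    """Cut `text` into at most `height` rows of exactly `width` characters."""
    rows = []
    for start in range(0, len(text), width):
        row = text[start:start + width]
        if len(row) < width or len(rows) == height:
            break  # drop an incomplete last row, stop when the canvas is full
        rows.append(row)
    return "\n".join(rows)

patch = wrap_to_canvas("il pleut " * 1000)
print(patch)
```

Every row then has the same length, so patches made by different people line up when they are placed next to each other in the quilt.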

#5

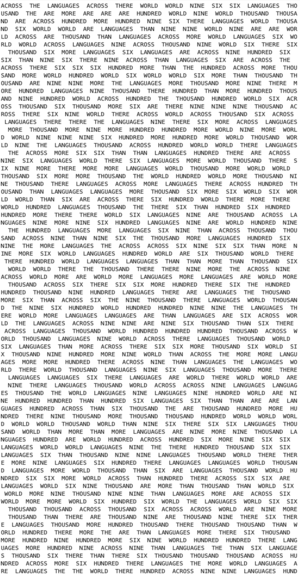

Second Episode of Quilting

import random

sentence = 'there are more than six thousand nine hundred more languages across the world'.upper() #makes the sentence caps-locked

words = sentence.split(' ') #split the sentence (taken from "Practical Vision") in a list of words

width = 82 #canvas measures

height = 74

spaces = ''

lista = []

br = [' ','. ']

for x in range(999):

    rwords = random.choices(words, weights=(8,4,5,4,8,15,9,20,4,8,6,3,10)) #pick a random word from the list 'words'; each of the 13 words has its own weight

    spaces += rwords[0] #fill the canvas

    rbr = random.choices(br, weights=(70,10)) #pick a blank space or a dot

    spaces += rbr[0] #fill the canvas

tmp_line = '' #here quilting time

counter = 0

for character in spaces:

    if counter == height: #it stops when rows = 74

        break

    elif len(tmp_line) < width:

        tmp_line += character

    else:

        lista.append(tmp_line) #the row is complete when characters per line = 82

        tmp_line = character #the current character opens the next row

        counter += 1

patch = "\n".join(lista) #builds the patch by joining the rows

f = 'patcherico.txt' #export the patch for the quilt in .txt

with open(f, 'w') as export: #the file is closed automatically after writing

    export.write(patch)

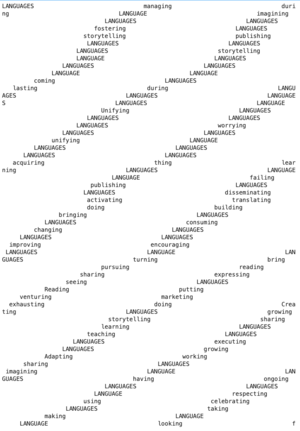

#6

Third Episode of Quilting

For the first patch I experimented with some tools from nltk:

import nltk

nltk.download(download_dir="/usr/local/share/nltk_data")

from nltk.book import * #import all the book stored in nltk

from nltk.text import Text

text.tokens

width = 82

height = 74

spaces = ''

lang = []

lista = []

for w in vision: #vision is the nltk Text of "Practical Vision", built as in the snippet further down

    if w.startswith('lang'): #pick all the words starting with 'lang'

        lang.append(w)

    elif w.endswith('ing'): #pick all the words ending with 'ing'

        lang.append(w)

for l in lang:

    if l.startswith('lang'):

        spaces += l.upper()

        spaces += ' '

    else:

        spaces += l

        spaces += ' '

tmp_line = ''

counter = 0

for character in spaces:

    if counter == height:

        break

    elif len(tmp_line) < width:

        tmp_line += character

    else:

        lista.append(tmp_line) #the row is full, store it

        tmp_line = character #the current character opens the next row

        counter += 1

patch = "\n".join(lista)

But the collective patch was meant to be more "concordanced":

import nltk

from nltk import word_tokenize

from urllib.request import urlopen

import random

url = 'https://git.xpub.nl/XPUB/S13-Words-for-the-Future-notebooks/raw/branch/master/txt/words-for-the-future/PRACTICAL-VISION.txt' #take the txt

vision = urlopen(url).read().decode('utf-8') #read it and decode it

tokens = word_tokenize(vision) #tokenize it

vision = nltk.text.Text(tokens)

width = 82 #canvas measures

height = 74

lista = []

tmp_line = ''

counter = 0

for line in vision.concordance_list('nine', width=85, lines=1): #finds the lines where the given word occurs, in this case only one is used but

    for x in range(height): #it is repeated 74 times

        l = line.left_print[7:] #the left side, skipping its first 7 characters to keep only the desired words

        m = line.query #defines the center (the given word)

        r = line.right_print #defines the right side

        l = l.split(' ') #split the sentence, both sides

        r = r.split(' ')

        random.shuffle(l) #shuffles both sides for every line; the center is always 'nine'

        rl = ' '.join(l).lower()

        random.shuffle(r)

        rr = ' '.join(r)

        results = f" {rl.capitalize()} {m} {rr}. " #capitalize the first letter of the first word of every line

        lista.append(results)

patch = "\n".join(lista) #builds the patch by joining the rows

f = 'patcherico.txt' #export the patch for the quilt in .txt

with open(f, 'w') as export: #the file is closed automatically after writing

    export.write(patch)

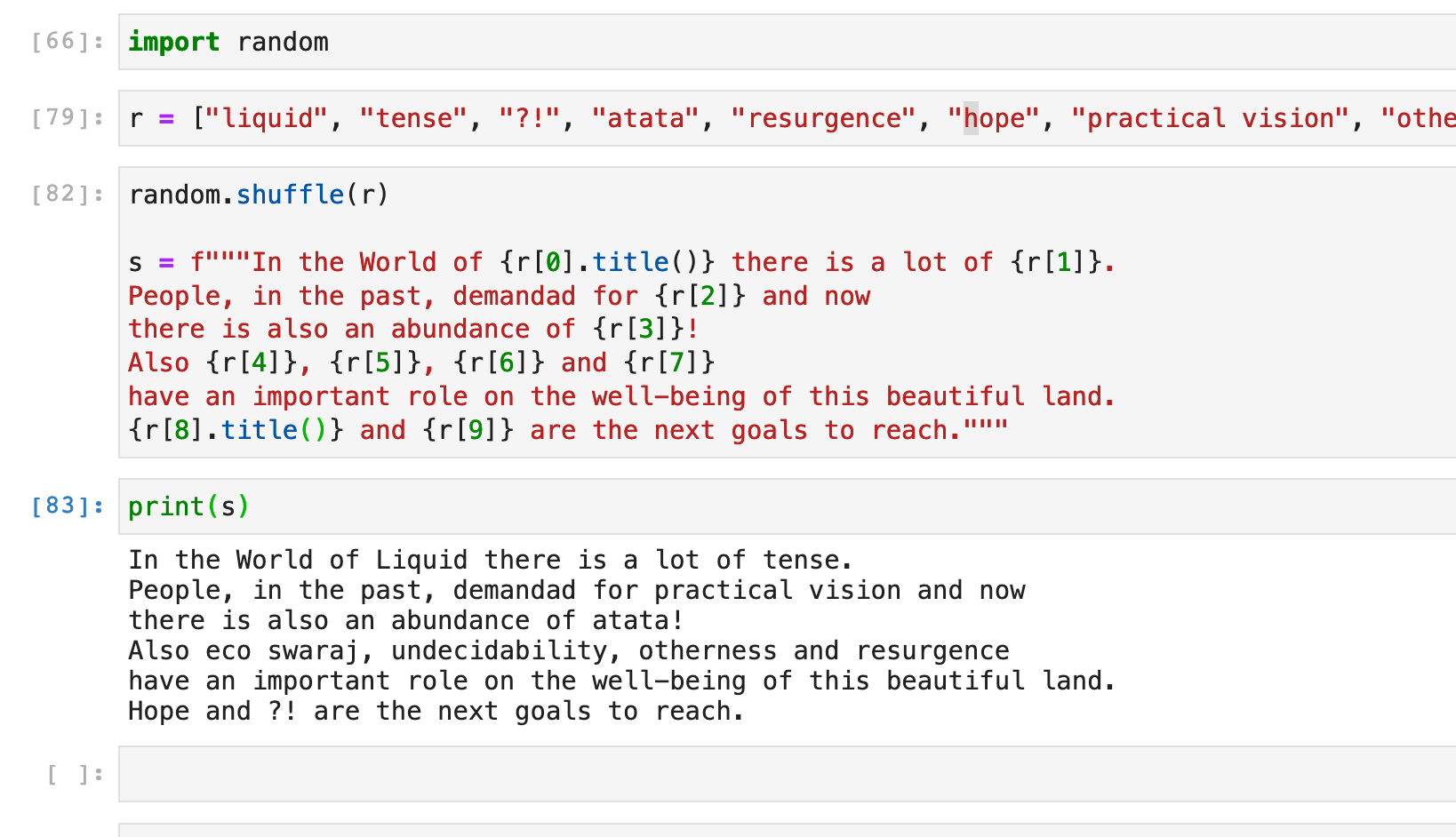
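To make the idea of a concordance line more concrete (a word shown with its left and right context), here is a small sketch in plain Python. It does not use nltk and is not how `concordance_list` is implemented; the function name and the `window` parameter are assumptions, and the sentence is the one from the second episode of quilting.

```python
def concordance(tokens, word, window=3):
    """Return (left, match, right) tuples for every occurrence of `word`."""
    hits = []
    for i, token in enumerate(tokens):
        if token.lower() == word.lower():
            left = tokens[max(0, i - window):i]    # up to `window` words before
            right = tokens[i + 1:i + 1 + window]   # up to `window` words after
            hits.append((" ".join(left), token, " ".join(right)))
    return hits

tokens = "there are more than six thousand nine hundred more languages across the world".split()
for left, match, right in concordance(tokens, "nine"):
    print(f"{left:>25} {match} {right}")
```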

#7

import nltk

import random

s = ' '

text = open('language.txt').read().replace('.','').replace(',','').replace('(','').replace(')','').replace(':','').replace(';','')

text = text.split()

textSet = set(text)

tagged = nltk.pos_tag(textSet)

#create array of single sigles, in order to use them as tags for dictionaries

sigle = '''

1. CC Coordinating conjunction

2. CD Cardinal number

3. DT Determiner

4. EX Existential there

5. FW Foreign word

6. IN Preposition or subordinating conjunction

7. JJ Adjective

8. JJR Adjective, comparative

9. JJS Adjective, superlative

10. LS List item marker

11. MD Modal

12. NN Noun, singular or mass

13. NNS Noun, plural

14. NNP Proper noun, singular

15. NNPS Proper noun, plural

16. PDT Predeterminer

17. POS Possessive ending

18. PRP Personal pronoun

19. PRP$ Possessive pronoun

20. RB Adverb

21. RBR Adverb, comparative

22. RBS Adverb, superlative

23. RP Particle

24. SYM Symbol

25. TO to

26. UH Interjection

27. VB Verb, base form

28. VBD Verb, past tense

29. VBG Verb, gerund or present participle

30. VBN Verb, past participle

31. VBP Verb, non-3rd person singular present

32. VBZ Verb, 3rd person singular present

33. WDT Wh-determiner

34. WP Wh-pronoun

36. WRB Wh-adverb

'''

sigle = sigle.replace('.','').split()

sigle = [tag for tag in sigle if len(tag) < 4 and not tag.isdigit() and tag not in ('or', 'to', '3rd')] #keep only the short tags, drop numbers and leftover words

#This prepares a grammar construction based on a randomly picked line of the text

s = ' '

textlines = open('language.txt').readlines()

nl = len(textlines)

r = random.randrange(0,nl)

line = textlines[r]

sentence = line.split()

sentence = nltk.pos_tag(sentence)

dat = {}

for word, tag in sentence:

dat[tag] = word

keys = dat.keys()

print(" + s + ".join([pos for pos in keys])) #copypaste the result to generate sentences

for gr in sigle: #to create storing list for various pos

print(f'{gr}lista = []'.replace('$','ç'))

print(f'''my_dict('{gr}','data{gr}',{gr}lista)'''.replace('$','ç'))

print('\n')

dataset = {}

def my_dict(gr,data,grlista): #to store words in pos lists

    data = {}

    for word, tag in tagged:

        dataset[tag] = word

        if tag == gr:

            data[tag] = word

            grlista.append(word)

    for x in data: #data stays empty when no word has this pos, so nothing is printed for it

        print(f'{gr} = random.choice({gr}lista)'.replace('$','ç')) #to print the random picker for that pos

#copy-pasted from the output above:

CClista = []

my_dict('CC','dataCC',CClista)

CDlista = []

my_dict('CD','dataCD',CDlista)

DTlista = []

my_dict('DT','dataDT',DTlista)

EXlista = []

my_dict('EX','dataEX',EXlista)

FWlista = []

my_dict('FW','dataFW',FWlista)

INlista = []

my_dict('IN','dataIN',INlista)

JJlista = []

my_dict('JJ','dataJJ',JJlista)

JJRlista = []

my_dict('JJR','dataJJR',JJRlista)

JJSlista = []

my_dict('JJS','dataJJS',JJSlista)

LSlista = []

my_dict('LS','dataLS',LSlista)

MDlista = []

my_dict('MD','dataMD',MDlista)

NNlista = []

my_dict('NN','dataNN',NNlista)

NNSlista = []

my_dict('NNS','dataNNS',NNSlista)

NNPlista = []

my_dict('NNP','dataNNP',NNPlista)

PDTlista = []

my_dict('PDT','dataPDT',PDTlista)

POSlista = []

my_dict('POS','dataPOS',POSlista)

PRPlista = []

my_dict('PRP','dataPRP',PRPlista)

RBlista = []

my_dict('RB','dataRB',RBlista)

RBRlista = []

my_dict('RBR','dataRBR',RBRlista)

RBSlista = []

my_dict('RBS','dataRBS',RBSlista)

RPlista = []

my_dict('RP','dataRP',RPlista)

SYMlista = []

my_dict('SYM','dataSYM',SYMlista)

TOlista = []

my_dict('TO','dataTO',TOlista)

UHlista = []

my_dict('UH','dataUH',UHlista)

VBlista = []

my_dict('VB','dataVB',VBlista)

VBDlista = []

my_dict('VBD','dataVBD',VBDlista)

VBGlista = []

my_dict('VBG','dataVBG',VBGlista)

VBNlista = []

my_dict('VBN','dataVBN',VBNlista)

VBPlista = []

my_dict('VBP','dataVBP',VBPlista)

VBZlista = []

my_dict('VBZ','dataVBZ',VBZlista)

WDTlista = []

my_dict('WDT','dataWDT',WDTlista)

WPlista = []

my_dict('WP','dataWP',WPlista)

WRBlista = []

my_dict('WRB','dataWRB',WRBlista)

#copy-paste the printed result from above, so that every refresh picks a new random word

CC = random.choice(CClista)

CD = random.choice(CDlista)

DT = random.choice(DTlista)

EX = random.choice(EXlista)

IN = random.choice(INlista)

JJ = random.choice(JJlista)

JJS = random.choice(JJSlista)

MD = random.choice(MDlista)

NN = random.choice(NNlista)

NNS = random.choice(NNSlista)

NNP = random.choice(NNPlista)

PRP = random.choice(PRPlista)

RB = random.choice(RBlista)

TO = random.choice(TOlista)

VB = random.choice(VBlista)

VBD = random.choice(VBDlista)

VBG = random.choice(VBGlista)

VBN = random.choice(VBNlista)

VBP = random.choice(VBPlista)

VBZ = random.choice(VBZlista)

WDT = random.choice(WDTlista)

WP = random.choice(WPlista)

EX + s + VBP + s + IN + s + JJS + s + CD + s + NNS + s + JJ + s + NN + s + WDT + s + DT + s + VBZ + s + CC + s + VBD

#examples:

'There are as least two layers syntactical processing which this is and processed'

'There are of least two data so-called example which these is and processed'

'There are by least two languages spoken process which the is and expressed'

RB + s + NN + s + VBZ + s + JJ + s + NNS + s + IN + s + DT + s + WDT + s + EX + s + VBP + s + VBN + s + CC + s + NNP

#examples:

'however software is symbolic languages In these which There are written and Software'

'however example is different macro within these which There are implemented and Software'

'however process is so-called layers since these which There are implemented and Yet'
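The same idea can also be sketched with one dictionary of lists in place of one variable per tag. This is an alternative under stated assumptions, not the code used above: the tagged pairs are written by hand to stand in for the output of `nltk.pos_tag`, and the names `words_by_tag` and `generate` are made up.

```python
import random
from collections import defaultdict

# Hand-tagged (word, tag) pairs standing in for nltk.pos_tag output (an assumption)
tagged = [("There", "EX"), ("are", "VBP"), ("two", "CD"), ("nine", "CD"),
          ("languages", "NNS"), ("layers", "NNS"), ("spoken", "JJ"), ("symbolic", "JJ")]

words_by_tag = defaultdict(list)  # one list per tag, created on demand
for word, tag in tagged:
    words_by_tag[tag].append(word)

def generate(pattern, rng=random):
    """Build a sentence by picking one random word for every tag in `pattern`."""
    return " ".join(rng.choice(words_by_tag[tag]) for tag in pattern)

sentence = generate(["EX", "VBP", "CD", "JJ", "NNS"])
print(sentence)
```

With a dictionary there is nothing to copy-paste: a new grammar is just a new list of tags.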

#8

Playing across txt ↔ html ↔ css ↔ nltk ↔ pdf

So I made a simple, aesthetic data visualization for texts: every grammatical element (part-of-speech tag) that nltk finds is mapped in CSS to its own color and shade.

from weasyprint import HTML, CSS

from weasyprint.fonts import FontConfiguration

import nltk

font_config = FontConfiguration()

txt = open('txt/practicalvision.txt').read()

words = nltk.word_tokenize(txt) #tokenizing the text

tagged_words = nltk.pos_tag(words) #generating grammar tags for the tokens

content = ''

content += '<h1>Practical Vision, by Jalada</h1>'

for word, tag in tagged_words:

    content += f'<span class="{tag}">{word}</span> ' #for every word, generate an html tag which includes the grammar tag as class

    if '.' in word:

        content += '<br> \n'

with open("txt/practical_viz.html", "w") as f: #save as html

    f.write(f"""<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<link rel="stylesheet" type="text/css" href="grammar_viz.css">

<title></title>

</head>

<body>

{content}

</body>

</html>

""")

html = HTML("txt/practical_viz.html") #load the generated html file

css = CSS(string='''

@page{

size: A4;

}

body{

font-family: serif;

font-size: 12pt;

line-height: 1.4;

padding: 3vw;

color: rgba(0,0,0,0)

}

h1{

width: 100%;

text-align: center;

font-size: 250%;

line-height: 1.25;

color: black;

}''', font_config=font_config) #define CSS for print final A4 pdf

html.write_pdf('txt/practical_viz.pdf', stylesheets=[css], font_config=font_config) #generate A4 pdf
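A text can contain characters such as `<` or `&`, which would break the generated markup. Here is a minimal sketch of the span-building step with escaping added, using only the standard library; the function name `to_spans` and the sample pairs are assumptions, and the pairs stand in for the output of `nltk.pos_tag`.

```python
from html import escape

def to_spans(tagged_words):
    """Wrap every (word, tag) pair in a <span> whose class is the tag."""
    parts = []
    for word, tag in tagged_words:
        safe_word = escape(word)  # "<", ">" and "&" in the text cannot break the markup
        parts.append(f'<span class="{escape(tag)}">{safe_word}</span>')
        if '.' in word:
            parts.append('<br>\n')  # line break after each sentence, as in the loop above
    return ' '.join(parts)

markup = to_spans([("Words", "NNS"), ("&", "CC"), ("futures", "NNS"), (".", ".")])
print(markup)
```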

Here is the CSS file that links each POS tag to a color:

body{

font-size: 2vw;

text-align: justify;

text-justify: inter-word;

line-height: 1;

font-family: sans-serif;

color: rgb(0,0,0,0)

}

span.CC{

background-color:#333300

}

span.CD{

background-color:#999900

}

span.DT{

background-color: #ff00ff

}

span.EX{

background-color: #006080

}

span.FW{

background-color:#f6f6ee

}

span.IN{

background-color:#ffccff

}

span.JJ{

background-color: #f2f2f2;

}

span.JJR{

background-color: #b3b3b3

}

span.JJS{

background-color:#737373

}

span.LS{

background-color:#666633

}

span.MD{

background-color:#00cc00

}

span.NN{

background-color:#33ff33

}

span.NNS{

background-color:#80ff80

}

span.NNP{

background-color:#ccffcc

}

span.PDT{

background-color:#ffd1b3

}

span.POS{

background-color:#ffb380

}

span.PRP{

background-color:#ff8533

}

span.PRP\${

background-color:#e65c00

}

span.RB{

background-color:#ff8080

}

span.RBR{

background-color:#ff4d4d

}

span.RBS{

background-color:#e60000

}

span.RP{

background-color:#992600

}

span.SYM{

background-color:#99ff33

}

span.TO{

background-color: black

}

span.UH{

background-color:#ffff00

}

span.VB{

background-color: #000099

}

span.VBD{

background-color: #0000e6

}

span.VBG{

background-color:#1a1aff

}

span.VBN{

background-color: #4d4dff

}

span.VBP{

background-color: #b3b3ff

}

span.VBZ{

background-color: #ccccff

}

span.WDT{

background-color:#ccffff

}

span.WP{

background-color:#66ffff

}

span.WP\${

background-color:#00e6e6

}

span.WRB{

background-color: #008080

}

pre {

white-space: pre-wrap;

}

h1{

font-weight: bold;

width: 100%;

text-align: center;

color: black

}
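A stylesheet like the one above could also be generated from a dictionary, which keeps tags and colors in one place. This is only a sketch: the four pairs are copied from the rules above, while the names `colors` and `css_rule` are made up. The `$` in tags such as PRP$ is escaped, since `$` is not allowed unescaped in a CSS class selector.

```python
# A few tag/colour pairs copied from the stylesheet above
colors = {"CC": "#333300", "NN": "#33ff33", "PRP$": "#e65c00", "WP$": "#00e6e6"}

def css_rule(tag, color):
    """Return one `span.TAG{...}` rule, escaping "$" in the selector."""
    selector = tag.replace("$", "\\$")
    return f"span.{selector}{{\n  background-color: {color}\n}}"

stylesheet = "\n".join(css_rule(tag, color) for tag, color in colors.items())
print(stylesheet)
```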

#9

Easy tool for exporting from HTML to PDF

from weasyprint import HTML, CSS

html = HTML(string=html) #html must already hold the page as a string

print(html)

css = CSS(string='''

@page{

size: A4;

margin: 15mm;

}

body{

font-family: serif;

font-size: 12pt;

line-height: 1.4;

color: magenta;

}

''')

html.write_pdf('language.pdf', stylesheets=[css])

To stamp one PDF onto another from the terminal: first cut the first page of the PDF, because I don't want its title, and then stamp the result onto the other file

pdftk language.pdf cat 2-end output troncatolanguage.pdf

pdftk mix.pdf stamp troncatolanguage.pdf output output.pdf

Issue 13

Elements for my republication of [Practical Vision]:

- Map side:

- The shapes of the Official Artistic Response are the elements for my map

- Squares seem to move away from the grid of the map

- Think of more variables if colours are allowed

- Texts side:

- In any case:

- Telegram Dictionary Bot explanation [a bot created to store translated sentences in every language its users want] [maybe have a 'weekly sentence' to translate] [people can ask the bot for a random translated sentence]

- e.g. the bot we made in Coubanao, Senegal: @jolanetbot and here the database

- Original text w/ arrows which link certain words to my thoughts on:

- "PICTURE AS INTERFACE OF LANGUAGE" + M. Fisher about language capitalism non-heritage --> neo-colonialism

- Turing, the relationship of language with stuff

- F. Cramer, various kinds of languages --> "computer language"

- if w/ color:

- Grammar Visualization

- if w/out color:

- Another Visualization?
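The storage side of the dictionary bot described in the list above can be sketched without any Telegram code. Everything here is an assumption made for illustration (the class name, the method names, the two sample translations); it only shows one possible way to keep translated sentences and to answer a request for a random one.

```python
import random
from collections import defaultdict

class SentenceDictionary:
    """Stores translations of sentences, keyed by sentence and language."""

    def __init__(self):
        self.translations = defaultdict(dict)  # sentence -> {language: translation}

    def add(self, sentence, language, translation):
        self.translations[sentence][language] = translation

    def random_translation(self, rng=random):
        """Return a random (sentence, language, translation) triple."""
        sentence = rng.choice(list(self.translations))
        language = rng.choice(list(self.translations[sentence]))
        return sentence, language, self.translations[sentence][language]

book = SentenceDictionary()
book.add("good morning", "it", "buongiorno")
book.add("good morning", "fr", "bonjour")
print(book.random_translation())
```

A 'weekly sentence' would then simply be one key of `translations` that users are invited to fill in for their own language.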

The Wiki of the Special Issue 13: Words for the future.

Analyzing Words

Team: 🗂 Floor, Martin, 🍭fə_erico [ Poni ]______

Words/publications to read/annotate from Words of the Future:

- TENSE PDF PAD Simon(e) van Saarloos

- Practical Vision PDF PAD Moses Kilolo

- Eco-Swaraj PDF PAD Ashish Kothari

Creating Licenses

Writing a License: First Attempt

This is a first attempt to write a license, with Camilo*, 🗂 Floor and Martin.

XPUB - Words for the future Vol.2

General License

© 2020 XPUB

We are really happy that you are reading this, so please keep reading this short license; you will be rewarded:

Permissions:

Any individual, group(s), non-profit organization(s)

may morally use, modify, distribute the content of this work.

We also encourage you to translate the contents into other languages.

User(s) may extend this license, as long as these initial conditions remain in force.

You are free to use, modify and republish the contents as long as you follow all of the conditions stated in the Obligations section of this license.

Obligations:

Any re-publication of the contents must explicitly provide the name(s) of the original author(s).

If you want to republish the contents, you must upload it on the *link/web/git* in order to keep track of the dialogue between all the re-publications.

Prohibitions:

Not for commercial use.

Users must not remove this license from the work.

No put in microwave, in IKEA bookshelves, in pink usb sticks.

Warranty

This license remains active as long as the Internet exists.

Sanction

Users recognise that a breach of the above terms may be sanctioned to the maximum extent applicable by Karma.[1]

[1] Karma is the name of my lawyer

Thank you if you have finished reading it

♥

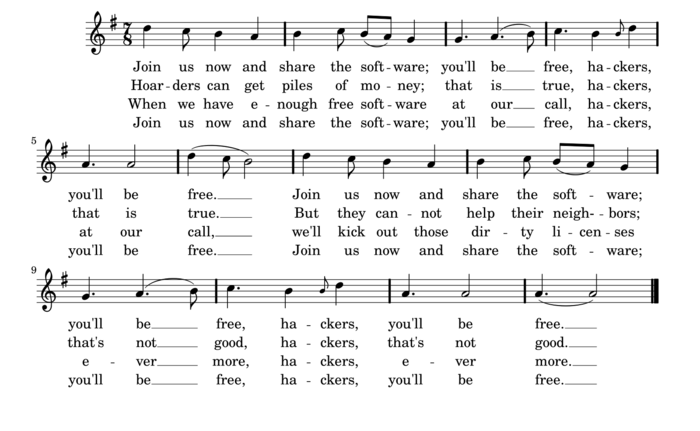

Intro

"The idea of copyleft is basic to the natural propagation of digital information among humans in a society" [2]

- The freedom to run the program as you wish, for any purpose (freedom 0).

- The freedom to study how the program works, and change it so it does your computing as you wish (freedom 1). Access to the source code is a precondition for this.

- The freedom to redistribute copies so you can help others (freedom 2).

- The freedom to distribute copies of your modified versions to others (freedom 3). By doing this you can give the whole community a chance to benefit from your changes. Access to the source code is a precondition for this.

Reading Aymeric's PhD thesis.

In Search of Pluralism

About the link between free software (and open source) and its expansion into other forms of cultural production (pp. 76-91)

- The word "free" refers to freedom, not price: any individual has the freedom of copying, distributing and modifying * for personal, noncommercial purposes

- Copyright not very useful to the digital interworked realm

- From 1998 people from every field started to write their own license

- Different understandings of what freedom and openness mean in the context of culture and knowledge

- Licenses as expressive tools to empower and materialise various ideologies

- 'BUT' this proliferation is not necessarily a positive semantic disorder. All the licenses seem to gather under the same umbrella, but they have distinctive features and attitudes in their understanding of the free/open software template

- Metaphor with liberalism?

- but the proliferation of licenses is not driven for competition but for an emergence of identity politics!

- but democratic system depends on the multiplication of discourse

- multi attempts to protect cultural freedom and openness as a whole.

- What about the legality of these licenses?

- Here arrives CreativeCommons: more generic approach to openness in culture

- CC embraces the strategy of economics by providing a collection of licences to fit every purpose

- As a cascade effect, the license communities that did not embrace CC started to aggregate among themselves

- Proto free culture definitions

Four kinds of free knowledge by Pena-Lopez

- The freedom to use the knowledge, for any purpose (freedom 0).

- The freedom to study how the knowledge applies, and adapt it to your needs (freedom 1). Access to the source information is a precondition for this.

- The freedom to redistribute knowledge so you can help your neighbour (freedom 2).

- The freedom to improve the knowledge, and release your improvements to the public, so that the whole community benefits (freedom 3). Access to the source information is a precondition for this.

The Open Knowledge Definition (three meanings of open: legally, socially, technologically open)

- Access

- Redistribution

- Re-Use

- Absence of Technological Restriction

- Attribution

- Integrity

- No Discrimination Against Persons or Groups

- No Discrimination Against Fields of Endeavor

- Distribution of License

- License Must Not Be Specific to a Package

- License Must Not Restrict the Distribution of Other Works

4Rs Framework: Wiley's distinction between rework and remix

- Reuse – Use the work verbatim, just exactly as you found it

- Revise – Alter or transform the work so that it better meets your needs

- Remix – Combine the (verbatim or altered) work with other works to better meet your needs

- Redistribute – Share the verbatim work, the reworked work, or the remixed work with others

- Moral --> different readings of the free software template are possible.

- But --> "As the concept spreads so we are seeing a proliferation of licences and a potential blurring of what is open and what is not": Important to preserve compatibility

We assume that the Free Software template is a model for large-scale productive social relations where generous collaboration takes place, and not just a more effective and liberal form of efficient production and sharing:

RELATIONS > PRODUCTIONS

- Critical appropriation of technologies + Subversion of hegemony

- Copyleft, DIY, consensus-based decision-making and free-open source software

- breaking down barriers between producer and consumer is an example of collective intelligence

- changes in aesthetic, economic and social paradigms

"...the role of free and open source software in forging a culture that goes beyond software and exist outside of the “economical time of unlimited profit”; where new ways of learning, creating, and participating, offer an alternative to a dominant productive model of time. [...] free culture was therefore more than a chaotic collection of definitions and licenses, it was also the concrete manifestation of different ideas about society, structured and grounded by the free software template." <== :)

From Techno-Legal Templates to Sandbox Culture

About the problematics/limits of unrestricted sharing, remix, appropriation, etc (pp.285-297)

- Free software is a model to develop free culture:

- Innovation is central in this discourse

- Remix has been increasingly used to demonstrate the power of combinational practice

- is not a poor practice per se, that solely exemplifies the failure of artists to change the productive apparatus

- Change modalities, not content: Ecological choice

- Remix as appropriation of a certain medium and instruments

- Also be a framework to analyse the semantics of political discourse

- Remix as a folk political tool <-- But then, commodification arrived?

- For example, Paris around 1800: folk songs used as a vector to memorise and spread commentary and critiques on public affairs: original lyrics replaced by critical texts

- From entertainment to political communication network! Other necessities

- Remix as a cultural glue instead of a movement/something framed

Remix and free culture relate to each other in file-sharing culture

Read Only vs Read-Write file system permission?

- With the internet + P2P filesharing > increased democratization of practices > used to accelerate the spread of new ideas

- CC used remix as

- inspiration

- shortcut to communicate about licensing changes

- to show potentiality

- Bourriaud, 2002, fuck new, what can we do with what we have?

- Artists as remixers

- consumption and production of information are no longer so separate

- Ecology again, the issue of extractive data

- Artists as semionauts

- produce endless narratives and journeys within information

- Artist as communicator? yes

Remix as creative mechanism VS remix as a controlled environment

/// Issues on Dub Reggae culture and industry: precarious conditions of musicians and copyrights... /// Public domain derived corporation +-

Free circulation and transformation of information cannot be directly linked to an egalitarian participation in a liberated productive apparatus: that would ignore the political economy of these practices

- WATCH OUT: "According to Veal, the whole versioning process can therefore be sensed as a direct result of capitalist influence in the making of music, that turns folkloric practices into a calculated economic strategy based on a complex and possibly endless archaeology."

- Hegemony eats folk.

- whoever infringes copyright by remixing stuff is often portrayed as a kind of hero?

- but also those who prevent the free circulation of information are systematically portrayed as evil entities

- Ressentiment, hostility from free culture supporters

"The author-centered regime of the information society that Boyle had warned against is therefore not resolved in free culture but only displaced. How such information comes into existence, what kind of technological, social, and political frameworks permit its access, what networks of software it requires or gives rise to, its wider aesthetic inherences and affordances."

- It should also become clear how such frameworks influence the groups that inhabit the structures formed by these templates.

- who owns the file?

- Where is it located?

- Why can it be accessed?

- Who benefits from reading from or writing to it?

- Full permissions over a small element of a system does not imply complete control over the latter.

Ground 0: Various Lectures

Collaboration, Conflict & Consent with Eleonor

Here is our proposal about the issue of the Rhodes statue at Cambridge: 𝕗𝕣𝕖𝕖𝕫𝕖

David Benque here

The other David here

Maybe you wanna know what I do

- Here is my website; I hope to have time to develop my new one

- Here is the un * salta website, not yet mobile-friendly nor in English, but still 😎

- Here is my are.na