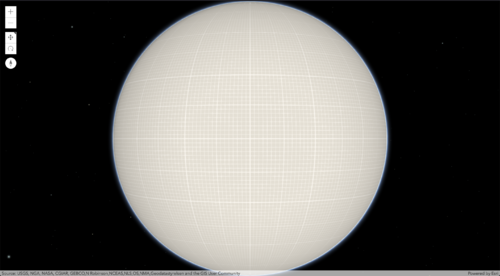

MA documentation: Difference between revisions

Max Lehmann (talk | contribs) |

Max Lehmann (talk | contribs) No edit summary |

||

| (124 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

'''[[https://lehmannmax.de/MA_documentation/MA_documentation.html Look at the project documentation here]]''' | |||

=My Motivation= | =My Motivation= | ||

*My sister was born with Trisomy 21 (Down Syndrome). | *My sister was born with Trisomy 21 (Down Syndrome). | ||

| Line 6: | Line 8: | ||

*I believe that everyone is valuable and should have equal opportunities. | *I believe that everyone is valuable and should have equal opportunities. | ||

*In my previous studies (Communication Design) I worked on the concept of inclusive factbooks. [[https://maxvalentin.weebly.com/a-factbook-for-everybody---universe.html See here]] [[https://maxvalentin.weebly.com/a-factbook-for-everybody---mankind.html and here]] | *In my previous studies (Communication Design) I worked on the concept of inclusive factbooks. [[https://maxvalentin.weebly.com/a-factbook-for-everybody---universe.html See here]] [[https://maxvalentin.weebly.com/a-factbook-for-everybody---mankind.html and here]] | ||

*I am convinced that equal access to information and knowledge for all people is a basic prerequisite for | *I am convinced that equal access to information and knowledge for all people is a basic prerequisite for a just society. | ||

[[https://lehmannmax.de/Thesis/Thesis.html#my_motivation Read more about my motivation in my thesis]] | [[https://lehmannmax.de/Thesis/Thesis.html#my_motivation Read more about my motivation in my thesis]] | ||

| Line 19: | Line 21: | ||

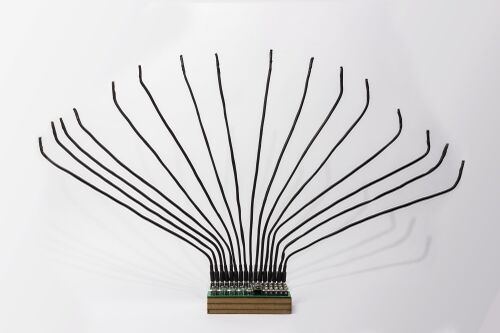

For [[https://issue.xpub.nl/10/index.html Special Issue X]] I built '''GLARE''', a 16-voice polyphonic synthesizer instrument based on Arduino. The GLARE module is controlled by gestures only and is therefore very intuitive to use. This project follows the approach of simplifying complex processes so that a wide range of people with different abilities can use them without much prior knowledge. The sensors can be easily removed from the unit for storage or transport. | For [[https://issue.xpub.nl/10/index.html Special Issue X]] I built '''GLARE''', a 16-voice polyphonic synthesizer instrument based on Arduino. The GLARE module is controlled by gestures only and is therefore very intuitive to use. This project follows the approach of simplifying complex processes so that a wide range of people with different abilities can use them without much prior knowledge. The sensors can be easily removed from the unit for storage or transport. | ||

[[File:2000px-LFP_01.jpg|none|thumb|500px|Final Glare Module]] | {| | ||

|- | |||

| [[File:2000px-LFP_01.jpg|none|thumb|500px|Final Glare Module]] || [[File:LFP_deplayer_21.jpg|none|thumb|500px|Glare in action]] | |||

|} | |||

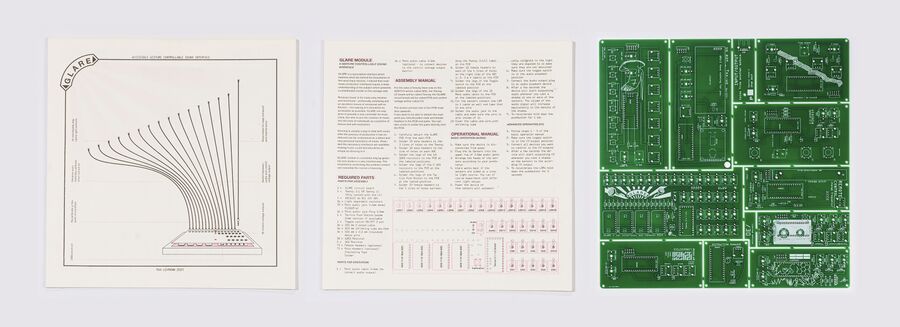

In this project, a group publication of 10 circuit boards was produced by the 10 students in vinyl record format. Together with 10 instructions for assembling the various modules, this publication is available from DePlayer in an original edition of 30. The project was also presented there. | |||


[[File:Publication_Max.jpg|none|thumb|900px|Special Issue X publication contribution]] | [[File:Publication_Max.jpg|none|thumb|900px|Special Issue X publication contribution]] | ||

[[https://pzwiki.wdka.nl/mw-mediadesign/images/b/bd/Max.pdf High resolution image of my publication contribution]] | [[https://pzwiki.wdka.nl/mw-mediadesign/images/b/bd/Max.pdf High resolution image of my publication contribution]] | ||

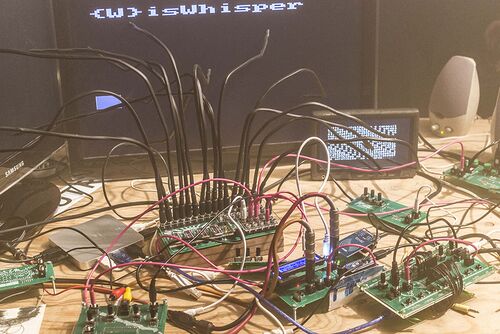

All modules could be connected to each other and reacted to one another. | |||

[[File:LFP deplayer 06.jpg|none|thumb| | [[File:LFP deplayer 29.jpg|none|thumb|500px|All modules connected]] | ||

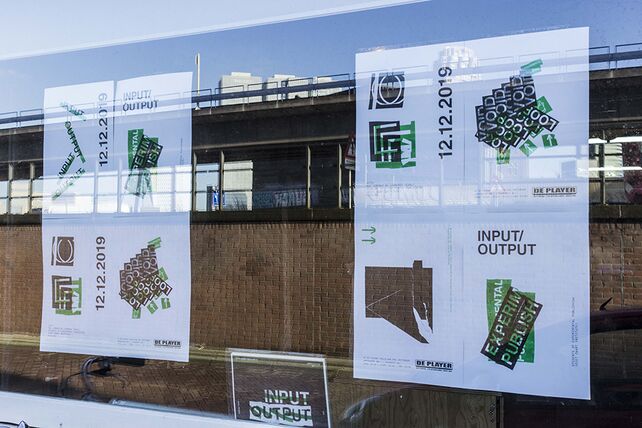

I was involved in the design of the visual identity of this exhibition. | |||

{| | |||

|- | |||

| [[File:PosterSmallFinal.jpg|none|thumb|300px|Input Output poster]] || [[File:LFP deplayer 06.jpg|none|thumb|642px|Input Output modular posters]] | |||

|} | |||

==Special Issue XI: We have secrets to tell you== | ==Special Issue XI: We have secrets to tell you== | ||

| Line 37: | Line 47: | ||

In [[https://issue.xpub.nl/11/index.html Special Issue XI]] I first came into contact with (Semantic) MediaWiki queries. Later in the project, I was mainly involved in coding the publicly accessible part of our web publication. This was my first time coding in JavaScript. On the website, it is possible to filter content and generate a print document from selected content. | In [[https://issue.xpub.nl/11/index.html Special Issue XI]] I first came into contact with (Semantic) MediaWiki queries. Later in the project, I was mainly involved in coding the publicly accessible part of our web publication. This was my first time coding in JavaScript. On the website, it is possible to filter content and generate a print document from selected content. | ||

[[File:S11_PUBWEBSITE1.gif|none|thumb|500px|Special Issue XI publication]] | {| | ||

|- | |||

[[File:S11_PUBWEBSITE2.gif|none|thumb|500px|Special Issue XI publication]] | | [[File:S11_PUBWEBSITE1.gif|none|thumb|500px|Special Issue XI publication]] || [[File:S11_PUBWEBSITE2.gif|none|thumb|500px|Special Issue XI publication]] | ||

|} | |||

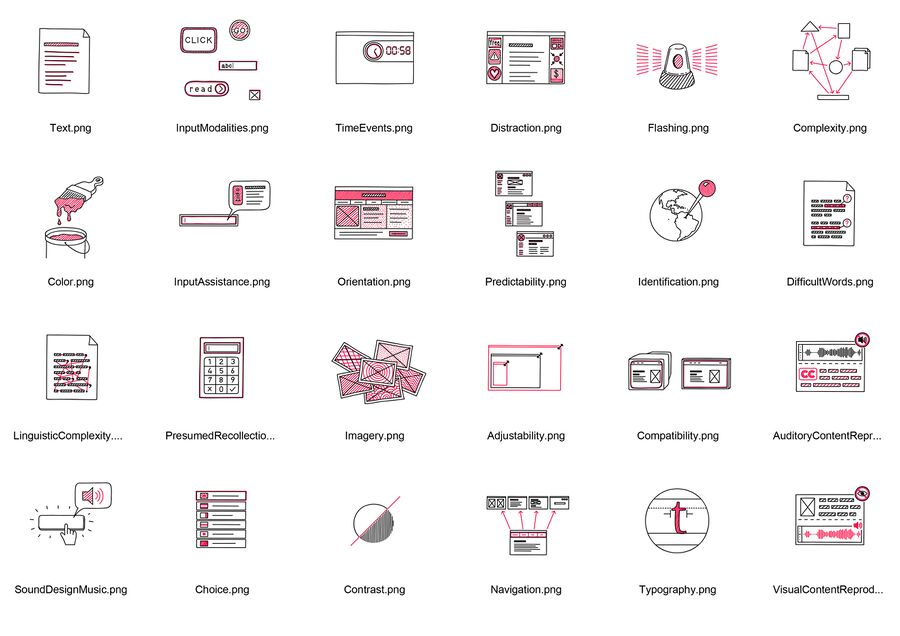

In this project, my focus was on breaking down the collectively used processes into tools and methods. For each collected item I wrote short texts that explain what we used them for. I also developed an icon for each collected item. | In this project, my focus was on breaking down the collectively used processes into tools and methods. For each collected item I wrote short texts that explain what we used them for. I also developed an icon for each collected item. | ||

[[File:0 TOOL Icons Overview4.jpg|none|thumb|500px|Tools - Icons created for Special Issue XI publication]] | {| | ||

|- | |||

[[File:0 METHODS Icons Overview2.jpg|none|thumb|500px|Methods - Icons created for Special Issue XI publication]] | | [[File:0 TOOL Icons Overview4.jpg|none|thumb|500px|Tools - Icons created for Special Issue XI publication]] || [[File:0 METHODS Icons Overview2.jpg|none|thumb|500px|Methods - Icons created for Special Issue XI publication]] | ||

|} | |||

==Special Issue XII: Radio Implicancies== | ==Special Issue XII: Radio Implicancies== | ||

| Line 86: | Line 98: | ||

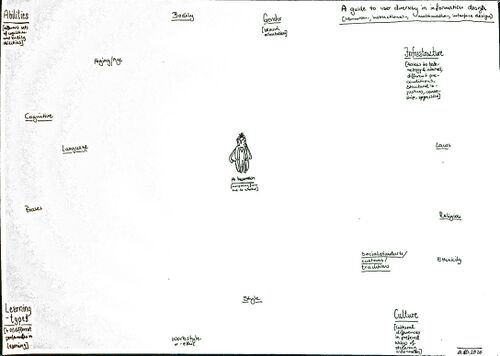

[[File:Diversity-Sketch.jpg|none|thumb|500px|Map: User diversity in information design]] | [[File:Diversity-Sketch.jpg|none|thumb|500px|Map: User diversity in information design]] | ||

====Proposal==== | ====Proposal (Summary)==== | ||

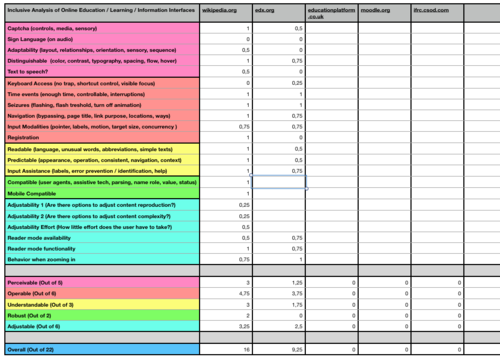

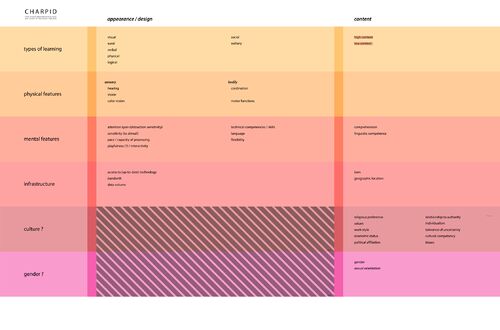

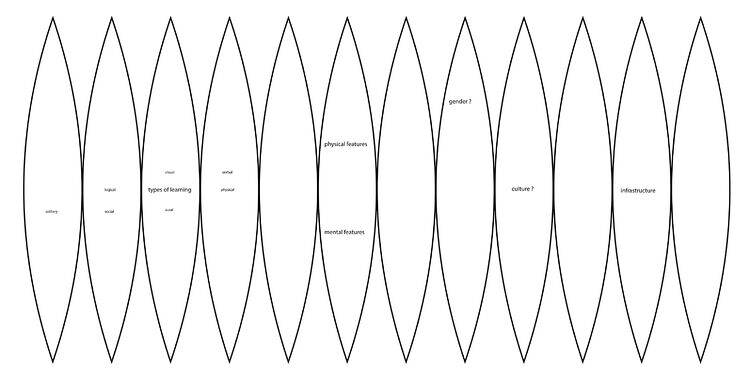

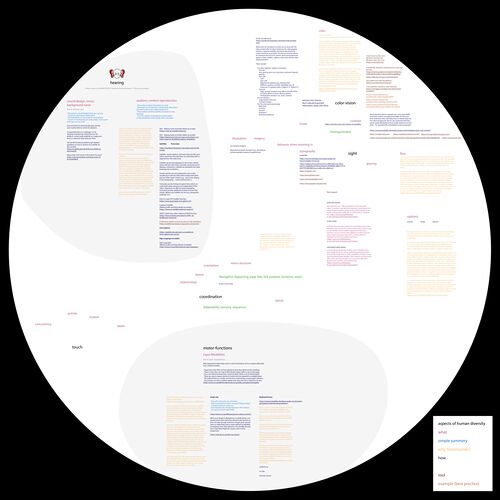

For my master project, I am creating a website that allows for exploration of selected aspects of human diversity, due to which users or groups of users might be disadvantaged when information is presented to them, or they seek to access it. | For my master project, I am creating a website that allows for exploration of selected aspects of human diversity, due to which users or groups of users might be disadvantaged when information is presented to them, or they seek to access it. | ||

| Line 93: | Line 105: | ||

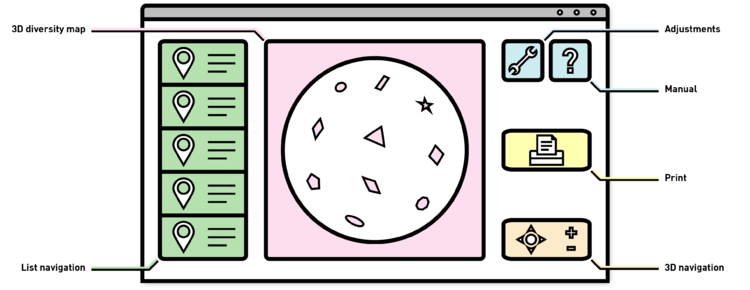

The website will consist of a spherical 3D map. On the 3D map selected aspects of human diversity will be arranged on the basis of their thematic proximity and interconnections. I will create animated illustrations to support the understanding of the information. Additionally, personal reports of various individuals will be provided, telling their experiences of exclusion. I chose the shape of the sphere, as its surface has no ends and no center. | The website will consist of a spherical 3D map. On the 3D map selected aspects of human diversity will be arranged on the basis of their thematic proximity and interconnections. I will create animated illustrations to support the understanding of the information. Additionally, personal reports of various individuals will be provided, telling their experiences of exclusion. I chose the shape of the sphere, as its surface has no ends and no center. | ||

I will speak about the different aspects of human diversity exclusively in the form of symptoms and | The interface of the website will allow for certain adjustments of the website‘s appearance according to user preferences, like font-size, color-scheme, speed of moving content, audio playback, or language. All texts will either be written in simple language, or each text will be available in different levels of complexity, from which the user can choose according to preference. | ||

[[File:Website basic sketch-08.png|none|thumb|750px|Proposal: Globe website]] | |||

I will speak about the different aspects of human diversity exclusively in the form of individual symptoms and refrain from naming diagnoses. | |||

[[File:Diversity_2D_Map_3-02.jpg|none|thumb|500px|Table: Cluster of human abilities relevant to the design and content of information publication]] | [[File:Diversity_2D_Map_3-02.jpg|none|thumb|500px|Table: Cluster of human abilities relevant to the design and content of information publication]] | ||

[[File:Diversity 2D Map 3-01.jpg|none|thumb|750px|2D representation of a sphere with information arranged on it]] | [[File:Diversity 2D Map 3-01.jpg|none|thumb|750px|2D representation of a sphere with information arranged on it]] | ||

Read my full [[User:Max_Lehmann/Project_Proposal|Project proposal]] | Read my full [[User:Max_Lehmann/Project_Proposal|Project proposal]] | ||

| Line 107: | Line 121: | ||

[[File:Learning_2D3DAnimation.gif|none|thumb|500px|First test with Blender 3D + 2D frame by frame animation]] | [[File:Learning_2D3DAnimation.gif|none|thumb|500px|First test with Blender 3D + 2D frame by frame animation]] | ||

[[File:Visual.gif|none|thumb|500px|Animation: Learning styles - Visual]] | {| | ||

|- | |||

| [[File:Visual.gif|none|thumb|500px|Animation: Learning styles - Visual]] || [[File:Social.gif|none|thumb|500px|Animation: Learning styles - Social]] | |||

|- | |||

| [[File:Aural.gif|none|thumb|500px|Animation: Learning styles - Aural]] || | |||

|} | |||

===Prototyping=== | |||

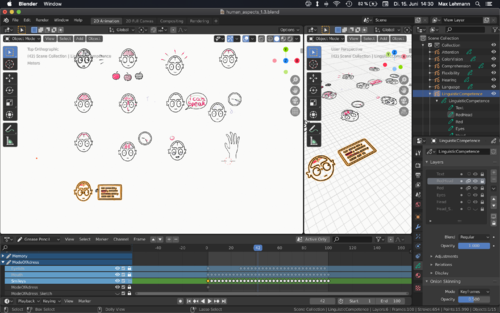

[[ | I used the open-source software [[https://www.blender.org Blender]] to create a prototype of the interface and to create 2D animated illustrations. | ||

{| | |||

|- | |||

| [[File:DiversitySphere.gif|none|thumb|600px|Blender: Sphere with illustrations of learning styles arranged on it]] || [[File:Diversity_sphere_cropped.gif|none|thumb|500px|Blender: Sphere with illustrations of learning styles arranged on it]] | |||

|} | |||

[[https:// | Over time I developed an understanding of various Javascript libraries, especially [[https://www.threejs.org/ Three.js]]. | ||

[[File: | {| | ||

|- | |||

| [[File:threejstest1.gif|none|thumb|500px|First 3JS Tests: 3D objects and mouse interaction]] || [[File:threejstest2.gif|none|thumb|500px|First 3JS Tests: Texture, controls and responsiveness]] | |||

|- | |||

| [[File:threejstest3.gif|none|thumb|500px|First 3JS Tests: Object loading]] || | |||

|} | |||

Over time I developed an interactive sphere interface on which information is arranged. | |||

[[File: | [[File:threejstest8.gif|none|thumb|500px|First 3JS Tests: Spherically arranged CSS3DObjects]] | ||

[[ | Check out my complete [[https://hub.xpub.nl/sandbox/~max/ Technical process index]]. | ||

[[ | ===Workshop=== | ||

'''Meet - Interface''' | |||

Together with [[User:AvitalB|Avital]] I developed a workshop in which we analyzed different websites according to criteria that would normally be used to examine people and social interactions. On the basis of this analysis, we played improvised theatre scenes and processed the insights gained as a group. | |||

Read the full [[https://pzwiki.wdka.nl/mediadesign/Category:Py.rate.chnic_sessions/meet_interface#Meet_-_Interface_workshop documentation page]]. | |||

==Resubmission== | |||

I was asked to resubmit my project proposal. The reasons for this were, as I understand it, that the scope of the proposed project was too large. I was advised to reduce the scope in order not to compromise the quality of my publication. | |||

Accordingly, I narrowed the focus of the project. After analysing the possible channels of information communication, I decided to focus on the inclusive creation of websites. This was an obvious choice, as online research of necessary materials on this topic was particularly easy to implement. Also, the field of web design matched the development of my personal interests. | |||

Read more about this decision in my [[https://lehmannmax.de/Thesis/Thesis.html#why_websites Thesis]] | |||

With regard to the groups of aspects of human diversity, I decided to limit myself to disabilities because of my personal connection to this topic. | |||

Read more about this decision in my [[https://lehmannmax.de/Thesis/Thesis.html#full_scope_of_the_project Thesis]] | |||

===New proposal=== | |||

I will create an index of online references that can help with the creation of websites that work well for all people, including those with physical or mental disabilities. The references will be combined with individual testimonials of exclusion in this area, as well as illustrations and simple summaries. A wiki will serve both as the primary interface to access these contents and as a backend from which I will conduct interface experiments. In these experiments, I will write scripts to create websites by querying contents from the wiki. | |||

Read my full [[User:Max_Lehmann/Project_Proposal | Resubmission]]. | |||

[[ | Check out my [[https://hub.xpub.nl/sandbox/~max/threejs_presentation/threejs_presentation.html Resubmission Presentation]]. | ||

==Process== | |||

===Concept / Contents=== | |||

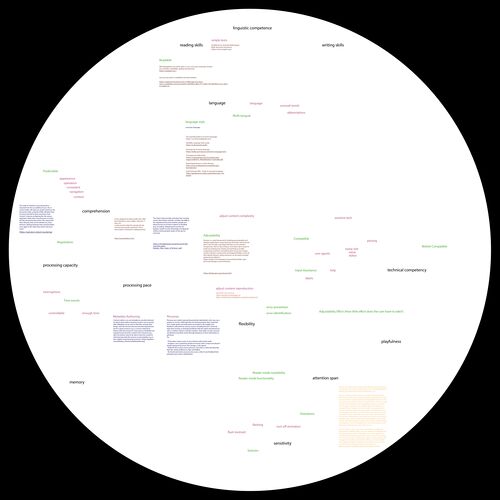

I gathered a collection of references and tools linked to the topic of the inclusive creation of websites. In the beginning, I used Adobe Illustrator to collect and organize this content. | |||

[[File:Content_inclusive3.jpg|none|thumb|500px|Materials related to inclusion and inclusive web design]] | {| | ||

|- | |||

| [[File:Content_inclusive1.jpg|none|thumb|500px|Physical user abilities in inclusive web design and related materials]] || [[File:Content_inclusive2.jpg|none|thumb|500px|Mental user abilities in inclusive web design and related materials]] | |||

|- | |||

| [[File:Content_inclusive3.jpg|none|thumb|500px|Materials related to inclusion and inclusive web design]] || [[File:Content_inclusive_PART.jpg|none|thumb|500px|Hearing ability in inclusive web design and related materials]] | |||

|} | |||

I settled on clear thematic areas and on the affiliations and connections between them. I relied on existing categorisations such as the Web Content Accessibility Guidelines, but sometimes extended them with my own. | |||

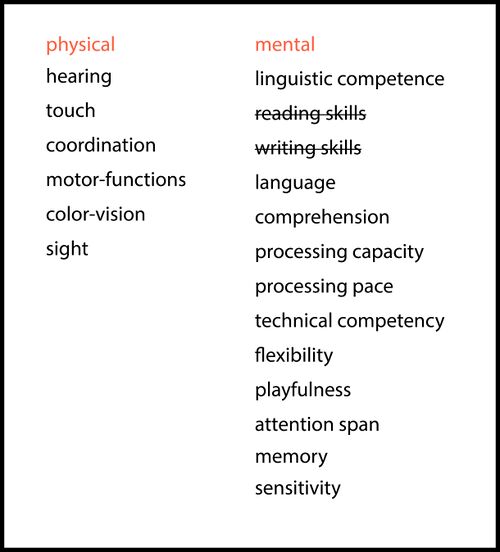

[[File:Content inclusive diversity.jpg|none|thumb|500px|Provisional list of relevant user abilities for inclusive web design]] | [[File:Content inclusive diversity.jpg|none|thumb|500px|Provisional list of relevant user abilities for inclusive web design]] | ||

[[File:MIW_Flowchart.jpg|none|thumb| | [[File:MIW_Flowchart.jpg|none|thumb|900px|Chart of relevant user abilities for inclusive web design and related materials]] | ||

I did a lot of online research and created bookmarks with keywords for all the content that was relevant to my work. Later, I went through all the websites I had collected and embedded them in my structure. | |||

I then listed these references in as uniform a scheme as possible (title, author, source for content and title, short description for tools) and revised them. | |||

[[File:Incl_WEB-CONTENT-06.jpg|none|thumb|900px|Continued chart of relevant user abilities for inclusive web design and related materials]] | |||

[[File:Incl_WEB-CONTENT-04.jpg|none|thumb|900px|Example of the hierarchical structure of the related contents]] | |||

I wrote simple introductions to the different aspects of websites and revised them. | |||

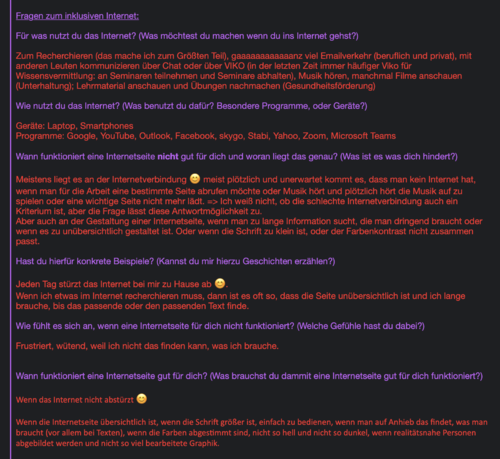

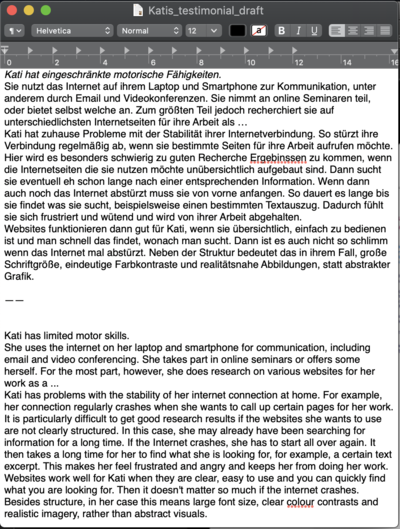

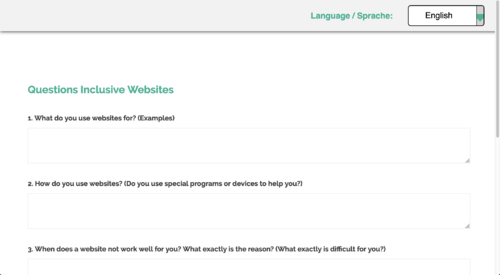

In order to collect the user stories as planned, I first developed a questionnaire in simple language which I made public via various distributors. The only response I received during the whole project was one from my personal contacts. | |||

[[File: | {| | ||

|- | |||

| [[File:Kati_mail.png|none|thumb|500px|Provisional questionnaire to collect user stories with first answers (in German)]] || [[File:Kati_text.png|none|thumb|400px|Summary of the first answers received]] | |||

|} | |||

[[ | I made the questionnaire available as an [[https://lehmannmax.de/Survey/survey.html online survey]] using the free [[https://surveyjs.io/Documentation/Library survey.js library]]. | ||

[[File: | [[File:MIW_Survey.png|thumb|none|500px|Online survey to collect user stories of exclusion and websites]] | ||

[[ | I realized that creating such stories in a respectful and authentic way takes time and direct collaboration with the individuals, which I was not able to provide. Because of a lack of time and other circumstances, I decided not to make any further efforts to collect these stories. | ||

I replaced the missing stories as far as possible with ones that I found online, some of which I shortened. Where I could not find any, I invite users to share their experiences through the online survey or in the [[https://lehmannmax.de/wiki/index.php?title=Special:WikiForum/Share_your_user_story. forum]]. | |||

===Design=== | |||

The design was strongly adapted to the technical necessities and functionalities of this project. | |||

Early on, I decided on two strongly contrasting colours (<span style="color:#FF014D">#FF014D</span>, <span style="color:#477CE6">#477CE6</span>), which, in combination with grey tones, make up my very simple colour scheme. Red marks hierarchically superior elements, while subordinate elements use blue. | |||

I also developed a logo consisting of two circles of arrowheads around a broken circle and text, the title of my project. The middle circle, which does not obstruct the arrows, symbolises content that is to be made inclusive. The arrows, that go from the outside to the inside, point from different angles, without barriers, to the centre of the lettering, where the word "Inclusive" is written. This symbolises the removal of barriers to accessing content that is inclusive. The arrows pointing from the inside to the outside form a frame for the described system. They point from the content outwards, in different directions, reflecting the direction of thinking in my technical proposal and representing the collaborative approach. | |||

The choice of fonts was based on the criterion of readability. I chose a sans-serif font (Century Gothic) for all body text and sub-headings and a slab-serif font (Arvo) for headings. The font sizes are mostly above 18pt. | |||

The illustrations are simple and as minimalistic as possible. Here, too, I limit myself to very few colours. Where information would be communicated through colour, I additionally used textures. For the human figures, I have tried to give as few clues as possible to classify the faces into social groups. | |||

Overall, I try to keep the design consistent in the different parts of the project and to create clarity and calmness through white space. Of course, the interfaces are responsive and, where appropriate, specially adapted for mobile. All buttons and other interactive elements are clearly recognisable and give distinct feedback. | |||

I tried to get feedback for my project from an agency specialising in inclusive design. After the agency had agreed and I had sent the material, it cancelled because it was overloaded with work. | |||

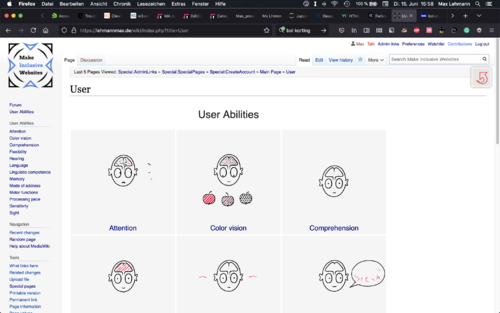

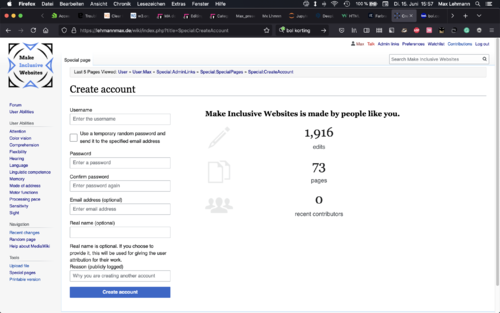

===Wiki=== | ===Wiki=== | ||

[[File:MIW_Wiki.png|none|thumb|500px|Make Inclusive Websites Wiki]] | I structured the content on [[https://lehmannmax.de/wiki/index.php?title=User my Wiki]] using, among other extensions, [[https://www.mediawiki.org/wiki/Extension:Page_Forms Pageforms]] and [[https://www.mediawiki.org/wiki/Extension:Cargo Cargo]]. I set up and published my Wiki, as well as the rest of my publication, on a personal Raspberry Pi. | ||

{| | |||

|- | |||

| [[File:MIW_Wiki.png|none|thumb|500px|Make Inclusive Websites Wiki]] || [[File:PeopleLikeMe.png|none|thumb|500px|Edits made on the 15th of June 2021]] | |||

|} | |||

On my Wiki I implemented templates and forms with Page Forms and stored the information in Cargo tables. | |||

<nowiki> | |||

{{References | |||

|headline1=General | |||

|coordhead1=10.000, 285.000, 0.000 | |||

|references1= | |||

Use headings to convey meaning and structure; | |||

Tips for Getting Started - W3C Web Accessibility Initiative; | |||

https://www.w3.org/WAI/tips/writing/#use-headings-to-convey-meaning-and-structure; | |||

Section Headings; | |||

Understanding Web Content Accessibility Guidelines (WCAG) 2.1; | |||

https://www.w3.org/WAI/WCAG21/Understanding/section-headings; | |||

Headings and labels; | |||

Understanding Web Content Accessibility Guidelines (WCAG) 2.1; | |||

https://www.w3.org/WAI/WCAG21/Understanding/headings-and-labels.html | |||

|headline2=Dividing Processes | |||

|coordhead2=16.000, 285.000, 0.000 | |||

|references2= | |||

Multi-page Forms; | |||

Web Accessibility Tutorials - W3C Web Accessibility Initiative; | |||

https://www.w3.org/WAI/tutorials/forms/multi-page/; | |||

Progress Trackers in UX Design; | |||

Nick Babich; | |||

https://uxplanet.org/progress-trackers-in-ux-design-4319cef1c600; | |||

Multi-step form design: Progress indicators and field labels; | |||

Kristen Willis; | |||

https://www.breadcrumbdigital.com.au/multi-step-form-design-part-1-progress-indicators-and-field-labels/ | |||

|linksto= | |||

|belongsto=Complexity | |||

|contains= | |||

}} | |||

</nowiki> | |||

[[File:ExampleCargoTable.png|none|thumb|500px|Part of a Cargo table storing some information]] | |||

===Technical process=== | |||

''[[https://hub.xpub.nl/sandbox/~max/ Technical process index]]'' | |||

====General==== | |||

The information in all the interfaces is retrieved from my Wiki as a cargo query with Javascript "fetch" in JSON format. | |||

<nowiki> | |||

let fetchhuman = (promise) => { | |||

return fetch("/wiki/index.php?title=Special:CargoExport&tables=human%2C&&fields=human._pageName%2C+human.headline%2C+human.coordinates%2C+human.image1%2C&&order+by=%60cargo__human%60.%60_pageName%60%2C%60cargo__human%60.%60headline%60%2C%60cargo__human%60.%60coordinates__full%60+%2C%60cargo__human%60.%60image1%60&origin=*&limit=100&format=json").then(r=>r.json()).then(data=>{ | |||

... | |||

</nowiki> | |||
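The long query string above can also be assembled from its parts, which makes it easier to read and to reuse for other tables. The following is only a sketch: the helper name <code>buildCargoExportUrl</code> and the shape of its <code>query</code> argument are made up for illustration, the <code>origin</code> parameter from the original URL is left out, and ordering by <code>human._pageName</code> instead of the backtick-quoted column names is an assumption.

```javascript
// Hypothetical helper (not part of the project code): builds a
// Special:CargoExport URL from a list of tables and fields.
function buildCargoExportUrl(indexPath, query) {
  const params = new URLSearchParams();
  params.set("title", "Special:CargoExport");
  params.set("tables", query.tables.join(","));
  params.set("fields", query.fields.join(","));
  if (query.orderBy && query.orderBy.length > 0) {
    // Cargo expects the parameter name with a space; URLSearchParams
    // encodes it as "order+by", as in the original URL.
    params.set("order by", query.orderBy.join(","));
  }
  params.set("limit", String(query.limit || 100));
  params.set("format", "json");
  return indexPath + "?" + params.toString();
}

const humanUrl = buildCargoExportUrl("/wiki/index.php", {
  tables: ["human"],
  fields: ["human._pageName", "human.headline", "human.coordinates", "human.image1"],
  orderBy: ["human._pageName"],
  limit: 100,
});
console.log(humanUrl);
```

The resulting string can be passed to <code>fetch</code> in the same way as the hand-written URL.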

Then all the contents of the query data are rendered into HTML elements. | |||

<nowiki> | |||

for (let i = 0; i < data.length; i++) { | |||

var content = data[i]; | |||

var div = document.createElement("div"); | |||

div.setAttribute("id", content._pageName); | |||

document.body.appendChild(div); | |||

var image1 = document.createElement("img"); | |||

image1.setAttribute("src", content.image1); | |||

image1.setAttribute("alt", "Illustration of " + content.headline); | |||

div.append(image1); | |||

var temphead = document.createElement("h2"); | |||

temphead.innerHTML = content._pageName; | |||

temphead.setAttribute("id", content.headline+"head"); | |||

div.appendChild(temphead); | |||

... | |||

</nowiki> | |||

Some parts of the data, like the references, need to be sorted. | |||

<nowiki> | |||

let references = content.references1; | |||

// Shift all entries by one so that every link lands on an index divisible by 3. | |||

references.unshift("Empty"); | |||

let ref_link = references.filter(function(value, index, Arr) { | |||

return index % 3 == 0; | |||

}); | |||

// Remove the "Empty" placeholder again. | |||

ref_link.shift(); | |||

let ref_head = references.filter(function(value, index, Arr) { | |||

return index % 3 == 1; | |||

}); | |||

let ref_desc = references.filter(function(value, index, Arr) { | |||

return index % 3 == 2; | |||

}); | |||

for (let i = 0; i < ref_head.length; i++) { | |||

if (ref_head[i] !== ""){ | |||

var ref_headline = ref_head[i]; | |||

var ref_description = ref_desc[i]; | |||

var ref_url = ref_link[i]; | |||

... | |||

</nowiki> | |||
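The same splitting step can also be written as a single loop that walks the flat list in steps of three. This is a sketch under assumptions: the helper name <code>groupReferences</code> and the field names <code>headline</code>, <code>description</code> and <code>link</code> are chosen for illustration, and the input is assumed to be an array in the order used by the template (headline, source or author, URL).

```javascript
// Hypothetical helper: turns a flat list
// [headline, description, link, headline, description, link, ...]
// into one object per reference.
function groupReferences(flat) {
  const grouped = [];
  // Only complete groups of three are used.
  for (let i = 0; i + 2 < flat.length; i += 3) {
    const headline = flat[i].trim();
    if (headline === "") continue; // skip entries without a headline
    grouped.push({
      headline: headline,
      description: flat[i + 1].trim(),
      link: flat[i + 2].trim(),
    });
  }
  return grouped;
}

// Sample data taken from the template example shown earlier.
const sample = [
  "Section Headings",
  "Understanding Web Content Accessibility Guidelines (WCAG) 2.1",
  "https://www.w3.org/WAI/WCAG21/Understanding/section-headings",
  "Progress Trackers in UX Design",
  "Nick Babich",
  "https://uxplanet.org/progress-trackers-in-ux-design-4319cef1c600",
];
const groupedSample = groupReferences(sample);
console.log(groupedSample);
```

Keeping the three values of a reference together in one object avoids having to keep three parallel arrays in sync.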

I used the "belongsto" information in my Cargo tables to assign the subtopics to the corresponding parent topics. | |||

<nowiki> | |||

var belongsto = content.belongsto[0]; | |||

var parent = document.getElementById(belongsto); | |||

... | |||

parent.appendChild(div); | |||

</nowiki> | |||
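Independently of the DOM, the parent and child assignment can be pictured as building a tree from flat entries. The sketch below assumes that each entry has a <code>_pageName</code> and an optional <code>belongsto</code> array, as in the snippet above. The function name <code>buildTopicTree</code> and the page name "Structure" in the sample data are made up for illustration.

```javascript
// Hypothetical helper: nests flat entries under their parent topic,
// using the first value of "belongsto" as the parent's page name.
function buildTopicTree(entries) {
  const nodes = new Map();
  for (const entry of entries) {
    nodes.set(entry._pageName, { name: entry._pageName, children: [] });
  }
  const roots = [];
  for (const entry of entries) {
    const node = nodes.get(entry._pageName);
    const parentName = entry.belongsto && entry.belongsto[0];
    const parent = parentName ? nodes.get(parentName) : undefined;
    if (parent) {
      parent.children.push(node);
    } else {
      roots.push(node); // no known parent: treat as a top-level topic
    }
  }
  return roots;
}

const topicTree = buildTopicTree([
  { _pageName: "Complexity", belongsto: [] },
  { _pageName: "Structure", belongsto: ["Complexity"] },
]);
console.log(JSON.stringify(topicTree, null, 2));
```

Because all nodes are created before they are linked, the order of the entries in the query result does not matter in this version.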

On this basis, the information is then processed according to the requirements of the different interfaces. | |||

Other elements, like the sidebar navigation, are also created from the query data. | |||

<nowiki> | |||

//First query | |||

var sidebar = document.getElementById("ulist"); | |||

var li = document.createElement("li"); | |||

sidebar.appendChild(li); | |||

var listelem = document.createElement("A"); | |||

listelem.innerHTML = content._pageName; | |||

listelem.setAttribute("href", "#"+content._pageName); | |||

listelem.setAttribute("id", content._pageName+"_listelem"); | |||

li.appendChild(listelem); | |||

var sublist = document.createElement("ul"); | |||

sublist.setAttribute("id", content._pageName+"_sublist"); | |||

li.appendChild(sublist); | |||

//Second query | |||

var listparent = document.getElementById(content.belongsto[0]+"_sublist"); | |||

var li = document.createElement("li"); | |||

listparent.appendChild(li); | |||

var listelem = document.createElement("A"); | |||

listelem.innerHTML = content._pageName; | |||

listelem.setAttribute("href", "#"+content._pageName); | |||

listelem.setAttribute("id", content._pageName+"_listelem"); | |||

li.appendChild(listelem); | |||

</nowiki> | |||

On all my interfaces, I have paid special attention to keyboard navigation. | |||

For some interfaces, I wrote a function that allows navigation of the sidebar with the arrow keys. | |||

<nowiki> | |||

var canvasKBcontrol = true; | |||

$(document).keyup(function(e){ | |||

if ($(":focus").hasClass( "sidebar" )||$(":focus").hasClass( "sidebar_master" )) { | |||

canvasKBcontrol = false; | |||

} else { | |||

canvasKBcontrol = true; | |||

}; | |||

if (e.keyCode == 40 && canvasKBcontrol == false) { | |||

var next0 = $(".listelem.sidebar.focused").next().find('a.listelem.sidebar').first(); | |||

var next1 = $(".listelem.sidebar.focused").parent().next().find('a.listelem.sidebar').first(); | |||

var next2 = $(".listelem.sidebar.focused").parent().parent().parent().next().find('a.listelem.sidebar').first(); | |||

if (next0.length == 1 && canvasKBcontrol == false) { | |||

$(".sidebar.focused").next().find('.sidebar').first().focus(); | |||

} else if (next0.length == 0 && next1.length != 0 && canvasKBcontrol == false){ | |||

$(".listelem.sidebar.focused").parent().next().find('a.listelem.sidebar').first().focus(); | |||

} else if (next0.length == 0 && next1.length == 0 && canvasKBcontrol == false){ | |||

$(".listelem.sidebar.focused").parent().parent().parent().next().find('a.listelem.sidebar').first().focus(); | |||

}; | |||

$(".sidebar.focused").removeClass("focused"); | |||

$(".sidebar:focus").addClass("focused"); | |||

$(".sidebar:focus").on("click", function() { | |||

$('.' + this.id.replace('_listelem', '') + 'head').focus(); | |||

}); | |||

}; | |||

if (e.keyCode == 38 && canvasKBcontrol == false) { | |||

var next0 = $(".sidebar.focused").parent().parent().prev(); | |||

var next1 = $(".listelem.sidebar.focused").parent().prev().find('a.listelem.sidebar').last(); | |||

var next2 = $(".listelem.sidebar.focused").parent().parent().parent().prev().find('a.listelem.sidebar').last(); | |||

console.log("next0 ", next0) | |||

console.log("next1 ", next1); | |||

console.log("next2 ", next2); | |||

if (next1.length == 0 && next0.length == 1 && canvasKBcontrol == false) { | |||

$(".sidebar.focused").parent().parent().prev().focus(); | |||

} else if (next1.length != 0 && canvasKBcontrol == false){ | |||

$(".listelem.sidebar.focused").parent().prev().find('a.listelem.sidebar').last().focus(); | |||

} else if (next0.length == 0 && next1.length == 0 && canvasKBcontrol == false){ | |||

$(".listelem.sidebar.focused").parent().parent().parent().prev().find('a.listelem.sidebar').last().focus(); | |||

}; | |||

$(".sidebar.focused").removeClass("focused"); | |||

$(".sidebar:focus").addClass("focused"); | |||

$(".sidebar:focus").on("click", function() { | |||

$('.' + this.id.replace('_listelem', '') + 'head').focus(); | |||

}); | |||

}; | |||

if (canvasKBcontrol == false) { | |||

$(".listelem.sidebar").css("background", "#efefef"); | |||

$(".listelem.sidebar.focused").css("background", "#cecece"); | |||

} else { | |||

$(".listelem.sidebar").css("background", "#efefef"); | |||

}; | |||

}); | |||

$(document).keydown(function(e){ | |||

if (e.keyCode == 40 && canvasKBcontrol == false) { | |||

e.preventDefault(); | |||

$('.focused').focus(); | |||

}; | |||

if (e.keyCode == 38 && canvasKBcontrol == false) { | |||

e.preventDefault(); | |||

$('.focused').focus(); | |||

}; | |||

}); | |||

let setfocus = (promise) => { | |||

$('#Attention_listelem').addClass("focused"); | |||

}; | |||

</nowiki> | |||
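One way to think about the arrow-key navigation is as movement through the sidebar entries in document order. The sketch below is a simplification and not the jQuery logic above: it flattens a nested list depth-first and then moves one step forwards or backwards. The function names and the child ids in the sample data are made up for illustration; only <code>Attention_listelem</code> appears in the code above.

```javascript
// Hypothetical helper: lists all sidebar ids in document order (depth-first).
function flattenSidebar(items) {
  const order = [];
  for (const item of items) {
    order.push(item.id);
    order.push(...flattenSidebar(item.children || []));
  }
  return order;
}

// Hypothetical helper: returns the id that should receive focus next.
function nextFocus(order, currentId, key) {
  const index = order.indexOf(currentId);
  if (index === -1) return order[0]; // nothing focused yet: start at the top
  if (key === "ArrowDown") return order[Math.min(index + 1, order.length - 1)];
  if (key === "ArrowUp") return order[Math.max(index - 1, 0)];
  return currentId;
}

const sidebarOrder = flattenSidebar([
  { id: "Attention_listelem", children: [{ id: "Child_A_listelem" }, { id: "Child_B_listelem" }] },
  { id: "Second_listelem", children: [] },
]);
console.log(nextFocus(sidebarOrder, "Child_B_listelem", "ArrowDown"));
```

In the browser, the returned id could be passed to <code>document.getElementById(...).focus()</code>.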

To ensure the correct loading of all contents in the appropriate order, I have chained the "fetch" functions with so-called promises (".then"). | |||

<nowiki> | |||

fetchhuman().then(fetchwebsite).then(fetchreferences).then(function(){ | |||

makesidebar(); | |||

removetabindex(); | |||

loadtoposphere(); | |||

doneloading(); | |||

}); | |||

</nowiki> | |||

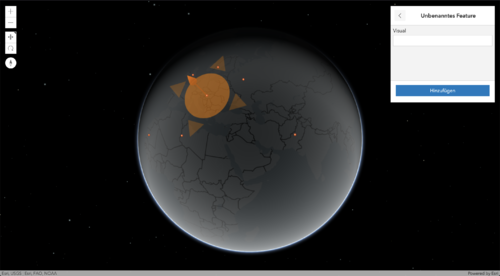

====Globe==== | |||

The interface I have spent the most time on is the "Globe" interface. | |||

Throughout the process, I have steadily increased my understanding of the Three.js library and gradually created my own interface that has typical map functions and controls. During the process, I also looked for and tried other approaches. In particular, I looked for free, highly customisable Javascript-based environments that already provided map functionality. However, in the end, none of the options I tried could fulfil all my requirements. | |||

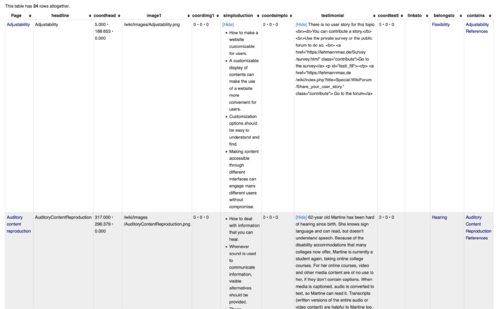

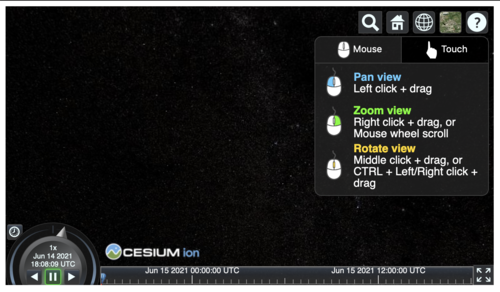

I tried [[https://leafletjs.com/ Leaflet]], [[https://www.arcgis.com/index.html ArcGIS]], [[https://cesium.com/ Cesium]] and others. | |||

{| | |||

|- | |||

| [[File:Cesium.png|none|thumb|500px|Test with Cesium js]] || [[File:Esri1.png|none|thumb|500px|Test with Esri ArcGIS]] | |||

|- | |||

| [[File:Esri2.png|none|thumb|500px|Test with Esri ArcGIS]] || [[File:Esri3.png|none|thumb|500px|Test with Esri ArcGIS]] | |||

|} | |||

As a consequence, I created several of these functionalities myself. | |||

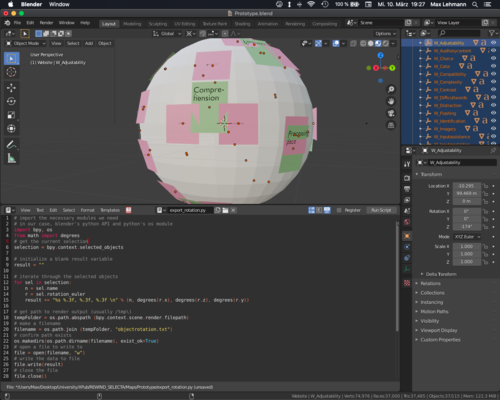

The setup for the basic scene consists of a spherical 3D object (mesh), to which CSS3D elements are appended. On top of the spherical 3D object, which is then turned transparent, a GLB model gets loaded. The coordinates for positioning the objects are stored in the Cargo tables. To find the right coordinates in the beginning, I recreated the globe in Blender, positioned the objects there and then exported a list of all coordinates through Blender's Python console. | |||

[[File:BlenderPositioning.png|none|thumb|500px|Positioning objects in Blender and using the Python console to export a list of coordinates.]] | |||

In ThreeJS all CSS3DElements get positioned using the radius of the globe, a pivot point in the middle of the globe and the coordinates. | |||

<nowiki> | |||

var div = document.createElement("div"); | |||

var obj = new CSS3DObject(div); | |||

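var pivot = new THREE.Group();

// Resulting hierarchy: scene > pivot > obj. The earlier globe.add(obj) and obj.add(pivot) calls were redundant, because add() moves an object away from its previous parent.

pivot.add(obj);

scene.add(pivot);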

pivot.position.set(0, 0, 0); | |||

pivot.rotation.set(0, 0, 0); | |||

obj.position.set(0, 0, radius); | |||

rotateObject(pivot,content.coordinates[0],content.coordinates[1],content.coordinates[2]); | |||

</nowiki> | |||

When rotating in a 3D environment, it is important to rotate around the individual axes in the right order. I use a dedicated function to rotate elements.

<nowiki> | |||

function rotateObject(object, degreeX=0, degreeY=0, degreeZ=0) { | |||

object.rotateZ(THREE.Math.degToRad(degreeZ)); | |||

object.rotateY(THREE.Math.degToRad(degreeY)); | |||

object.rotateX(THREE.Math.degToRad(degreeX)); | |||

}; | |||

</nowiki> | |||
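Why the order of rotations matters can be shown without ThreeJS. The following is a minimal sketch using plain 3x3 rotation matrices. The helper names (`rotX`, `rotY`, `applyMatrix`, `tidy`) are hypothetical and not part of the project code. It rotates the same point by 90 degrees about two axes, once in each order, and ends up in two different places.

```javascript
// Hypothetical helpers for illustration only; angles are in degrees.
const toRad = (deg) => (deg * Math.PI) / 180;

// Rotation matrix about the X axis.
function rotX(deg) {
  const c = Math.cos(toRad(deg));
  const s = Math.sin(toRad(deg));
  return [[1, 0, 0], [0, c, -s], [0, s, c]];
}

// Rotation matrix about the Y axis.
function rotY(deg) {
  const c = Math.cos(toRad(deg));
  const s = Math.sin(toRad(deg));
  return [[c, 0, s], [0, 1, 0], [-s, 0, c]];
}

// Multiply a 3x3 matrix with a 3-component vector.
function applyMatrix(m, v) {
  return m.map((row) => row[0] * v[0] + row[1] * v[1] + row[2] * v[2]);
}

// Round away floating point noise (and turn -0 into 0).
const tidy = (v) => v.map((n) => Math.round(n * 1e6) / 1e6 + 0);

const start = [0, 0, 1]; // a point on the unit sphere

// Same two rotations, applied in opposite order.
const xThenY = tidy(applyMatrix(rotY(90), applyMatrix(rotX(90), start)));
const yThenX = tidy(applyMatrix(rotX(90), applyMatrix(rotY(90), start)));

console.log("X first, then Y:", xThenY);
console.log("Y first, then X:", yThenX);
```

Because the two results differ, a fixed axis order such as the one in `rotateObject` keeps stored coordinates reproducible.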

However, I did not want to work with more than one set of coordinates per cargo table entry, as this would not have been feasible given the amount of data. | |||

Therefore, the positions of most objects are calculated in functions and then placed. | |||

<nowiki> | |||

//Placing blocks of text (in this case 'User Stories') with the right distance to another by counting the amount of letters in the texts. | |||

var positioncalc2 = content.testimonial.length/100; | |||

var contcoordhead0_1 = contcoordhead0+positioncalc*1.1+positioncalc2*0.6; | |||

if (contcoordhead0_1 >= 360) {contcoordhead0_1-=360;}; | |||

rotateObject(pivot3,contcoordhead0_1,content.coordhead[1],content.coordhead[2]); | |||

//Placing the references in a grid and adjusting the spacing towards the poles | |||

var vertical_spacer = 6; | |||

var horizontal_spacer = 6; | |||

if (count1 == 0 || count1 == 1 || count1 == 2) { | |||

count1 += 1; | |||

} else if (count1 == 3){ | |||

count1 = 1; | |||

count2 += 1; | |||

}; | |||

var div = document.createElement("div"); | |||

var obj = new CSS3DObject(div); | |||

var pivot = new THREE.Group();

// Resulting hierarchy: scene > pivot > obj (the earlier globe.add(obj) and obj.add(pivot) calls were redundant).

pivot.add(obj);

scene.add(pivot);

pivot.position.set(0, 0, 0); | |||

pivot.rotation.set(0, 0, 0); | |||

obj.position.set(0, 0, radius); | |||

var vertical_rotation = content.coordhead1[0]+vertical_spacer*count2; | |||

if (inRange(vertical_rotation, 228, 263)){ | |||

horizontal_spacer = 12; | |||

} else if (inRange(vertical_rotation, 263, 278)){ | |||

horizontal_spacer = 11; | |||

} else if (inRange(vertical_rotation, 278, 293)){ | |||

horizontal_spacer = 10; | |||

} else if (inRange(vertical_rotation, 293, 308)){ | |||

horizontal_spacer = 9; | |||

} else if (inRange(vertical_rotation, 308, 323)){ | |||

horizontal_spacer = 8; | |||

} | |||

else if (inRange(vertical_rotation, 103, 130)){ | |||

horizontal_spacer = 12; | |||

} else if (inRange(vertical_rotation, 88, 103)) { | |||

horizontal_spacer = 11; | |||

} else if (inRange(vertical_rotation, 63, 88)){ | |||

horizontal_spacer = 10; | |||

} else if (inRange(vertical_rotation, 48, 63)){ | |||

horizontal_spacer = 9; | |||

} else if (inRange(vertical_rotation, 38, 48)){ | |||

horizontal_spacer = 8; | |||

} else {}; | |||

if (count1 == 1) { | |||

horizontal_spacer=6; | |||

} | |||

if (count1 == 2) { | |||

horizontal_spacer-=(horizontal_spacer-6)/3; | |||

} | |||

var horizontal_rotation = content.coordhead1[1]+horizontal_spacer*count1; | |||

if (horizontal_rotation > 360){ | |||

horizontal_rotation -= 360; | |||

} else {} | |||

if (vertical_rotation > 360){ | |||

vertical_rotation -= 360; | |||

} else {} | |||

rotateObject(pivot,vertical_rotation,horizontal_rotation,content.coordhead1[2]); | |||

</nowiki> | |||
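The code above wraps angles back into range by subtracting 360 in several places. As a minimal sketch, the same idea can be written as one helper. The name `wrapDegrees` is hypothetical and not part of the project code. Unlike the checks above, it also handles negative angles and maps exactly 360 to 0.

```javascript
// Hypothetical helper: map any angle in degrees onto the range [0, 360).
function wrapDegrees(angle) {
  // The inner remainder may be negative in JavaScript, so shift it
  // into the positive range before taking the remainder again.
  return ((angle % 360) + 360) % 360;
}

// Example: a grid column that would land at 365 degrees wraps around to 5.
console.log(wrapDegrees(365));
console.log(wrapDegrees(-10));
```

A helper like this could replace the repeated `if (... > 360)` blocks, assuming the stored coordinates are meant to stay within one full turn.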

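The CSS3D elements are rendered differently from the globe and the GLB model: they are regular DOM elements positioned with CSS transforms, whereas the globe and the model are drawn into the WebGL canvas. As a result, the globe does not obscure the CSS3D elements on its far side, as you would expect it to.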

[[File:css3dobjects.png|none|thumb|500px|Without further action this is how the CSS3D content gets rendered on top of the 3D Object.]] | |||

This is why I had to write a clipping function that hides content which is not facing the viewer. I did so by comparing the coordinates of the objects to the orientation of the camera's pivot point. To do this, I had to convert and clean up the numbers multiple times. The "seam" of the globe, where the values jump from 360 to 0 degrees, was particularly challenging.

<nowiki> | |||

function inRange(x, min, max) { | |||

return ((x-min)*(x-max) <= 0); | |||

}; | |||

function clipping() {

var newY = camera.rotation.y; | |||

var newX = camera.rotation.x; | |||

var Ydegrees = THREE.Math.radToDeg( newY ); | |||

var Xdegrees = THREE.Math.radToDeg( newX ); | |||

if (Ydegrees < 0) { | |||

var Ydgr = Ydegrees + 360; | |||

} else { | |||

var Ydgr = Ydegrees; | |||

}; | |||

if (Xdegrees < 0) { | |||

var Xdgr = Xdegrees + 360; | |||

} else { | |||

var Xdgr = Xdegrees; | |||

}; | |||

for (let i = 0; i < alldivs.length; i++ ) { | |||

var content = alldivs[i]; | |||

var Ycoord = allcoordinatesY[i]; | |||

var Xcoord = allcoordinatesX[i]; | |||

var YLlim = Ydgr+40; | |||

var YRlim = Ydgr-40; | |||

var XLlim = Xdgr+40; | |||

var XRlim = Xdgr-40; | |||

if (inRange(Ycoord,0,60)) { | |||

if (inRange(Ydgr,0,180)) { | |||

} else { | |||

Ycoord += 360; | |||

}; | |||

} else if (inRange(Ycoord,300,360)) { | |||

if (inRange(Ydgr,0,180)) { | |||

Ycoord -= 360; | |||

} else{ | |||

}; | |||

}; | |||

if (inRange(Xcoord,0,60)) { | |||

if (inRange(Xdgr,0,180)) { | |||

} else { | |||

Xcoord += 360; | |||

}; | |||

} else if (inRange(Xcoord,300,360)) { | |||

if (inRange(Xdgr,0,180)) { | |||

Xcoord -= 360; | |||

} else{ | |||

}; | |||

}; | |||

if (inRange(Ycoord,YLlim,YRlim)&&inRange(Xcoord,XLlim,XRlim)){ | |||

content.style.display = ""; | |||

} else { | |||

content.style.display = "none" | |||

}; | |||

if (inRange(Xdgr,270,300) && inRange(Xcoord,270,300)) {

content.style.display = ""; | |||

} else if (inRange(Xdgr,90,60) && inRange(Xcoord,90,60)){ | |||

content.style.display = ""; | |||

} else {}; | |||

}; | |||

}; | |||

</nowiki> | |||
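One alternative way to deal with the seam is to compare angles by their shortest distance on the circle instead of shifting coordinates by 360. The following is a minimal sketch of that idea, not the method used in the project. The names `angularDistance` and `isFacing` are hypothetical, and the default window of 40 degrees is taken from the limits in the code above.

```javascript
// Hypothetical helper: shortest distance between two angles in degrees (0..180).
function angularDistance(a, b) {
  // Bring the difference into [-180, 180) so that 350 and 10 are 20 apart.
  const diff = ((((a - b) % 360) + 540) % 360) - 180;
  return Math.abs(diff);
}

// Hypothetical helper: is an object within the visible window around the camera angle?
function isFacing(objectAngle, cameraAngle, halfWindow = 40) {
  return angularDistance(objectAngle, cameraAngle) <= halfWindow;
}

// An object at 350 degrees is visible from a camera at 10 degrees,
// even though the raw values are far apart.
console.log(isFacing(350, 10));
console.log(isFacing(100, 10));
```

With such a helper, the visibility test for one axis reduces to a single comparison, and the 360-to-0 jump needs no special cases.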

What I found particularly exciting about the medium of the interactive map is how it is possible to switch between different levels of complexity through simple and intuitive user input. I wanted to use this to switch between different levels of information by zooming in and out. | |||

Therefore I wrote a function that hides and shows levels of content depending on the zoom level. | |||

<nowiki> | |||

function mapzoom() { | |||

var ZL; | |||

if (zoom > zoomtreshold1) { | |||

ZL = 1; | |||

zoombuttonone.classList.add("active"); | |||

zoombuttontwo.classList.remove("active"); | |||

zoombuttonthree.classList.remove("active"); | |||

rotintervall = 0.1; | |||

} else if (zoom > zoomtreshold2){ | |||

ZL = 2; | |||

zoombuttonone.classList.remove("active"); | |||

zoombuttontwo.classList.add("active"); | |||

zoombuttonthree.classList.remove("active"); | |||

rotintervall = 0.07; | |||

} else { | |||

ZL = 3; | |||

zoombuttonone.classList.remove("active"); | |||

zoombuttontwo.classList.remove("active"); | |||

zoombuttonthree.classList.add("active"); | |||

rotintervall = 0.02; | |||

} | |||

if (zoomLevel != ZL) {

zoomLevel = ZL; | |||

const mapelem1 = document.querySelectorAll(".head1, .image1, .paragraph1, .link1, .human"); | |||

const mapelem2 = document.querySelectorAll(".head2, .image2, .paragraph2, .link2, .website"); | |||

const mapelem3 = document.querySelectorAll(".head3, .image3, .paragraph3, .link3, .references"); | |||

const headelem1 = document.querySelectorAll("h1"); | |||

const imgelem1 = document.querySelectorAll(".image1"); | |||

if (zoomLevel == 1) {controls.rotateSpeed = 0.6;} | |||

else if (zoomLevel == 2) {controls.rotateSpeed = 0.3;} | |||

else {controls.rotateSpeed = 0.1;} | |||

for (var i = 0; i < mapelem1.length; i++) { | |||

if (zoomLevel == 1) { | |||

mapelem1[i].style.display = ""; | |||

} else if (zoomLevel == 2) { | |||

mapelem1[i].style.display = "none"; | |||

} else { | |||

mapelem1[i].style.display = "none"; | |||

} | |||

} | |||

... | |||

</nowiki> | |||
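The threshold logic in `mapzoom` can be separated from the DOM updates. The following is a minimal sketch under that assumption. The function `zoomLevelFor` and the table `LEVEL_SETTINGS` are hypothetical names, and the threshold values in the example are made up. The speed values per level are the ones used in the code above.

```javascript
// Hypothetical pure function: derive the zoom level (1 = far, 3 = close)
// from the camera distance and two thresholds.
function zoomLevelFor(zoom, threshold1, threshold2) {
  if (zoom > threshold1) return 1;
  if (zoom > threshold2) return 2;
  return 3;
}

// Per-level settings as they appear in mapzoom(): keyboard rotation step
// and mouse rotation speed both shrink as the camera gets closer.
const LEVEL_SETTINGS = {
  1: { rotintervall: 0.1, rotateSpeed: 0.6 },
  2: { rotintervall: 0.07, rotateSpeed: 0.3 },
  3: { rotintervall: 0.02, rotateSpeed: 0.1 },
};

// Example with made-up thresholds of 2000 and 1200 units.
const level = zoomLevelFor(1500, 2000, 1200);
console.log(level, LEVEL_SETTINGS[level]);
```

Keeping the settings in one table makes it easier to see how the controls slow down from level to level.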

I have implemented additional keyboard commands for the globe in order to make it easier to use with the keyboard. | |||

<nowiki> | |||

function zoomforme(thisfar) { | |||

var zoomDistance = thisfar; | |||

var factor = zoomDistance/zoom; | |||

camera.position.x *= factor; | |||

camera.position.y *= factor; | |||

camera.position.z *= factor; | |||

} | |||

//Arrowkeys | |||

var UPpressed = false; | |||

var DOWNpressed = false; | |||

var rotintervall = 0.1; | |||

var canvasKBcontrol = true; | |||

document.onkeydown = function(e) { | |||

if (canvasKBcontrol) { | |||

switch (e.keyCode) { | |||

case 38: | |||

controls.rotCamPolarUp(rotintervall); | |||

break; | |||

case 40: | |||

controls.rotCamPolarDown(rotintervall); | |||

break; | |||

case 37: | |||

controls.rotCamAzimuthalLeft(rotintervall); | |||

break; | |||

case 39: | |||

controls.rotCamAzimuthalRight(rotintervall); | |||

break; | |||

} | |||

}; | |||

switch (e.keyCode) { | |||

case 49: | |||

zoomforme(radius + radius/10*5.5); | |||

break; | |||

case 50: | |||

zoomforme(zoomtreshold1-300); | |||

break; | |||

case 51: | |||

zoomforme(zoomtreshold2-100); | |||

break; | |||

}; | |||

}; | |||

</nowiki> | |||
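The handler above relies on `e.keyCode`, which is deprecated in current browsers in favour of `e.key`. The following is a minimal sketch of the same mapping based on key names. `KEY_ACTIONS`, `actionForKey` and `scaleToDistance` are hypothetical names. The method names in the table are the ones from the modified controls, and `scaleToDistance` assumes that `zoom` in `zoomforme` is the current distance of the camera from the origin.

```javascript
// Hypothetical lookup from KeyboardEvent.key values to control method names.
const KEY_ACTIONS = new Map([
  ["ArrowUp", "rotCamPolarUp"],
  ["ArrowDown", "rotCamPolarDown"],
  ["ArrowLeft", "rotCamAzimuthalLeft"],
  ["ArrowRight", "rotCamAzimuthalRight"],
]);

// Return the method name for a key, or null if the key is not mapped.
function actionForKey(key) {
  return KEY_ACTIONS.has(key) ? KEY_ACTIONS.get(key) : null;
}

// Scale a position so that it lies at the requested distance from the origin.
function scaleToDistance(position, distance) {
  const length = Math.hypot(position.x, position.y, position.z);
  if (length === 0) return { ...position }; // direction undefined, leave as is
  const factor = distance / length;
  return {
    x: position.x * factor,
    y: position.y * factor,
    z: position.z * factor,
  };
}

console.log(actionForKey("ArrowLeft"));
console.log(scaleToDistance({ x: 3, y: 0, z: 4 }, 10));

// In the browser this could be wired up roughly as follows:
// document.addEventListener("keydown", (e) => {
//   const action = actionForKey(e.key);
//   if (action) controls[action](rotintervall);
// });
```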

To implement all of these functions, I had to modify the OrbitControls control system that ships with ThreeJS.

Among other things, I added public methods that were not previously available so that I could call them from my own code.

<nowiki> | |||

this.setPolarAngle = function (angle) { | |||

phi = angle; | |||

this.forceUpdate(); | |||

}; | |||

this.setAzimuthalAngle = function (angle) { | |||

theta = angle; | |||

this.forceUpdate(); | |||

}; | |||

this.zoomIn = function (level) { | |||

dollyIn(level); | |||

this.update(); | |||

}; | |||

this.zoomOut = function (level) {

dollyOut(level); | |||

this.update(); | |||

}; | |||

this.rotCamPolarUp = function (rotintervall) { | |||

phi -= rotintervall; | |||

this.forceUpdate(); | |||

}; | |||

this.rotCamPolarDown = function (rotintervall) { | |||

phi += rotintervall; | |||

this.forceUpdate(); | |||

}; | |||

this.rotCamAzimuthalLeft = function (rotintervall) { | |||

theta -= rotintervall; | |||

this.forceUpdate(); | |||

}; | |||

this.rotCamAzimuthalRight = function (rotintervall) { | |||

theta += rotintervall; | |||

this.forceUpdate(); | |||

}; | |||

</nowiki> | |||
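The methods above change `phi` and `theta`, the two angles that describe where the camera sits on a sphere around its target. The following is a minimal sketch of how such angles translate into a position. It assumes the convention that, as far as I know, ThreeJS uses for spherical coordinates: `phi` is the polar angle measured from the positive Y axis, and `theta` is the angle around the Y axis. The function name `sphericalToCartesian` is hypothetical.

```javascript
// Hypothetical helper: convert spherical coordinates (radians) to x/y/z.
// phi   = polar angle, 0 at the top (positive Y axis)
// theta = azimuthal angle around the Y axis, 0 on the positive Z axis
function sphericalToCartesian(radius, phi, theta) {
  const sinPhiRadius = Math.sin(phi) * radius;
  return {
    x: sinPhiRadius * Math.sin(theta),
    y: Math.cos(phi) * radius,
    z: sinPhiRadius * Math.cos(theta),
  };
}

// A camera on the equator, looking at the globe from the front.
const onEquator = sphericalToCartesian(10, Math.PI / 2, 0);

// Decreasing phi (as rotCamPolarUp does) moves the camera towards the top.
const higherUp = sphericalToCartesian(10, Math.PI / 2 - 0.1, 0);

console.log(onEquator, higherUp);
```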

To create the GLB model with topography and texture I went through the following steps: | |||

# Replace all CSS3DElements in my ThreeJS scene with sphere 3DObjects (meshes), because as far as I know you cannot export CSS3DElements and open them in other environments. Colour code these objects according to thematic belonging. | |||

# Write a function to export the globe with the attached objects. | |||

# Open the exported PLY model in Blender and overlay it on a sphere with a 2-dimensional texture to see which contents are in which places. Paint the shape of the different content groups onto the texture.

# Check the resulting 2-dimensional texture (JPG file) in my ThreeJS scene and adjust it if necessary. | |||

# Clean up the texture (JPG file) in Illustrator and convert it to vectors. | |||

# Import the vectors (SVG file) into a new Blender document, convert into a mesh, correct the distribution of faces and solidify. | |||

# Create a new mesh plane, apply the texture (JPG file) to it and punch out with the created mesh using Blender Booleans. | |||

# Solidify the punched out areas in the mesh layer. | |||

# Bind the whole mesh plane with Blender Surface Deform to another, new mesh plane. | |||

# Modify the new mesh plane with Blender Simple Deform (Bend) to a sphere. | |||

# Export as GLB model, load into the ThreeJS scene, place and scale. | |||

[[File:GlobeMadness.gif|none|thumb|800px|Highly condensed depiction of the Globe interface's development]]

[[File:FinalTopo.gif|none|thumb|500px|Blender: 2D Vector to texturized 3D mesh to sphere with topography]]

====The other interfaces (overview)====

The other interfaces have of course also undergone longer developments, but not nearly as long as the Globe interface. | |||

=====Fold-Out===== | |||

For the Fold-Out interface, I used the new [[https://developer.mozilla.org/en-US/docs/Web/HTML/Element/details HTML 5 tag: <details>]]. | |||

Before I found out about this tag, I had tried to code a similar functionality myself. | |||

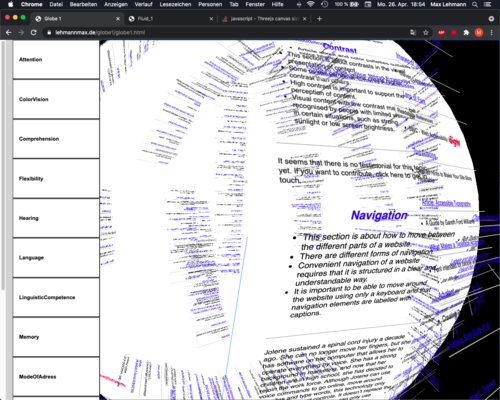

=====UI Options===== | |||

For the UI Options interface, I used the [[https://docs.fluidproject.org/infusion/development/tutorial-userinterfaceoptions/userinterfaceoptions Fluid Infusion UI Options library]] and adapted it slightly (turned off the option to create a table of contents). | |||

<nowiki> | |||

#options > div > ul > li:nth-child(5) { | |||

display: none !important; | |||

} | |||

</nowiki> | |||

In this interface, I also implemented basic [[https://www.w3.org/WAI/standards-guidelines/aria/ ARIA syntax]] to support the use of assistive technology.

On this page, I have included extra hidden elements that only play a role when navigating with the keyboard, as they let users jump from the content to the sidebar and back. | |||

I also wrote a function that replaces all GIFs with static images.

<nowiki> | |||

let staticimages = () => { | |||

var images = document.getElementsByClassName('image1'); | |||

console.log(images); | |||

var srcList = [];//This is just for the src attribute | |||

for(var i = 0; i < images.length; i++) { | |||

var sourceList = images[i].src; | |||

console.log(sourceList); | |||

sourceList = sourceList.substr(0, sourceList.lastIndexOf(".")) + ".png"; | |||

console.log(sourceList); | |||

srcList.push(images[i].src); | |||

images[i].src = sourceList; | |||

} | |||

var thebutton = document.getElementsByClassName("playpause"); | |||

console.log(thebutton); | |||

thebutton[0].getElementsByTagName('object')[0].data="../img/GIFplaypause-01.svg"; | |||

thebutton[0].setAttribute( "onClick", "javascript: movingimages();" ); | |||

}; | |||

function movingimages(ele) { | |||

var images = document.getElementsByClassName('image1'); | |||

//console.log(images); | |||

var srcList = [];//This is just for the src attribute | |||

for(var i = 0; i < images.length; i++) { | |||

var sourceList = images[i].src; | |||

//console.log(sourceList); | |||

sourceList = sourceList.substr(0, sourceList.lastIndexOf(".")) + ".gif"; | |||

//console.log(sourceList); | |||

srcList.push(images[i].src); | |||

images[i].src = sourceList; | |||

} | |||

var thebutton = document.getElementsByClassName("playpause"); | |||

console.log(thebutton); | |||

thebutton[0].getElementsByTagName('object')[0].data="../img/GIFplaypause-02.svg"; | |||

thebutton[0].setAttribute( "onClick", "javascript: staticimages();" ); | |||

} | |||

</nowiki> | |||
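Both functions above differ only in the file extension they write. As a minimal sketch, the string handling can be moved into one helper. The name `swapExtension` is hypothetical. It uses `slice` instead of `substr`, which is marked as deprecated, and it assumes, like the original code, that every GIF has a PNG file with the same base name.

```javascript
// Hypothetical helper: replace the file extension of a URL or path.
function swapExtension(url, newExtension) {
  const dot = url.lastIndexOf(".");
  const slash = url.lastIndexOf("/");
  // Only treat the dot as an extension separator if it is in the file name.
  if (dot <= slash) return url;
  return url.slice(0, dot) + "." + newExtension;
}

console.log(swapExtension("../img/Attention.gif", "png"));
console.log(swapExtension("../img/Attention.png", "gif"));
```

With this helper, the loop bodies in `staticimages` and `movingimages` could shrink to a single assignment such as `images[i].src = swapExtension(images[i].src, "png");`.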

=====Print===== | |||

For the Print interface, I used code we developed together with [[http://osp.kitchen/ OSP]] in Special Issue XI and adjusted it for my use. This code is based on the [[https://www.pagedjs.org/ PagedJS library]]. | |||

<nowiki> | |||

// BUTTONS | |||

var selectall = document.querySelector("input.selectall"); | |||

selectall.addEventListener("click", function (e) { | |||

var checkboxes = document.querySelectorAll("input[type=checkbox][name=print]"); | |||

var labels = document.querySelectorAll("label"); | |||

console.log(labels); | |||

if (selectall.value == "Select All") { | |||

for (var i=0; i<checkboxes.length; i++) { | |||

checkboxes[i].checked = true; | |||

} | |||

for (var i=0; i<labels.length; i++) { | |||

labels[i].classList.add("checked"); | |||

labels[i].innerHTML = "Remove from PDF"; | |||

} | |||

selectall.value = "Unselect All"; | |||

} else { | |||

for (var i=0; i<checkboxes.length; i++) { | |||

checkboxes[i].checked = false; | |||

} | |||

for (var i=0; i<labels.length; i++) { | |||

labels[i].classList.remove("checked"); | |||

labels[i].innerHTML = "Add to PDF"; | |||

} | |||

selectall.value = "Select All"; | |||

} | |||

}); | |||

// CHECKBOXES | |||

window.PagedConfig = { | |||

auto: false | |||

}; | |||

/* TOC */ | |||

function createToc(config){ | |||

const content = config.content; | |||

const tocElement = config.tocElement; | |||

const titleElements = config.titleElements; | |||

let tocElementDiv = content.querySelector(tocElement); | |||

let tocUl = document.createElement("ul"); | |||

tocUl.id = "list-toc-generated"; | |||

tocElementDiv.appendChild(tocUl); | |||

// add class to all title elements | |||

let tocElementNbr = 0; | |||

for(var i= 0; i < titleElements.length; i++){ | |||

let titleHierarchy = i + 1; | |||

let titleElement = content.querySelectorAll(titleElements[i]); | |||

titleElement.forEach(function(element) { | |||

// add classes to the element | |||

element.classList.add("title-element"); | |||

element.setAttribute("data-title-level", titleHierarchy); | |||

// add id if doesn't exist | |||

tocElementNbr++; | |||

let idElement = element.id; // declared locally to avoid creating an implicit global

if(idElement == ''){ | |||

element.id = 'title-element-' + tocElementNbr; | |||

} | |||

let newIdElement = element.id; | |||

}); | |||

} | |||

// create toc list | |||

let tocElements = content.querySelectorAll(".title-element"); | |||

for(var i= 0; i < tocElements.length; i++){ | |||

let tocElement = tocElements[i]; | |||

let tocNewLi = document.createElement("li"); | |||

tocNewLi.classList.add("toc-element"); | |||

tocNewLi.classList.add("toc-element-level-" + tocElement.dataset.titleLevel); | |||

tocNewLi.innerHTML = '<a href="#' + tocElement.id + '">' + tocElement.innerHTML + '</a>'; | |||

tocUl.appendChild(tocNewLi); | |||

} | |||

} | |||

async function buildTOC() { | |||

try { | |||

console.log("toc"); | |||

class handlers extends window.Paged.Handler { | |||

constructor(chunker, polisher, caller) { | |||

super(chunker, polisher, caller); | |||

} | |||

beforeParsed(content){ | |||

createToc({ | |||

content: content, | |||

tocElement: '#my-toc-content', | |||

titleElements: [ '.title-element' ] | |||

}); | |||

} | |||

} | |||

window.Paged.registerHandlers(handlers); | |||

} | |||

catch(err) { | |||

} | |||

} | |||

async function preview() { | |||

try { | |||

console.log("preview"); | |||

await window.PagedPolyfill.preview(); | |||

//window.print(); | |||

} | |||

catch(err) { | |||

} | |||

} | |||

async function load_pagedjs() { | |||

await $.getScript("https://unpkg.com/pagedjs/dist/paged.polyfill.js", function(data, textStatus, jqxhr) {}); | |||

preview(); | |||

buildTOC(); | |||

} | |||

var printbutton = document.getElementById("printbutton"); | |||

printbutton.onclick = function(){ | |||

console.log("printbutton"); | |||

var boxes = document.getElementsByName("print"); | |||

console.log("boxes = ", boxes); | |||

for (var i=0; i<boxes.length; i++) { | |||

if (boxes[i].checked) { | |||

console.log(boxes[i], boxes[i].checked); | |||

boxes[i].parentElement.parentElement.classList.add("print"); | |||

} | |||

} | |||

var images = document.getElementsByTagName('img'); | |||

var srcList = [];//This is just for the src attribute | |||

for(var i = 0; i < images.length; i++) { | |||

var sourceList = images[i].src; | |||

//console.log(sourceList); | |||

sourceList = sourceList.substr(0, sourceList.lastIndexOf(".")) + ".png"; | |||

//console.log(sourceList); | |||

srcList.push(images[i].src); | |||

images[i].src = sourceList; | |||

} | |||

var toprint = document.getElementsByClassName("print"); | |||

console.log("toprint = ", toprint); | |||

if (toprint.length == 0){ | |||

for (var i=0; i<boxes.length; i++) { | |||

boxes[i].checked = true; | |||

boxes[i].parentElement.parentElement.classList.add("print"); | |||

} | |||

selectall.value = "Unselect All"; | |||

} | |||

document.getElementsByTagName("body")[0].classList.add("pagedjs"); | |||

load_pagedjs(); | |||

}; | |||

</nowiki> | |||
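The table of contents logic in `createToc` can also be looked at without the DOM. The following is a minimal sketch that works on plain objects instead of elements. The function name `buildTocEntries` and the shape of the input objects are assumptions made for illustration. As in the code above, every heading increases the counter, and only headings without an id receive a generated one.

```javascript
// Hypothetical pure version of the id and link logic in createToc().
// Each heading is described as { id, level, text }.
function buildTocEntries(headings) {
  let counter = 0;
  return headings.map((heading) => {
    counter++;
    // Keep an existing id, otherwise generate one from the running counter.
    const id = heading.id !== "" ? heading.id : "title-element-" + counter;
    return {
      id: id,
      href: "#" + id,
      className: "toc-element toc-element-level-" + heading.level,
      text: heading.text,
    };
  });
}

const tocEntries = buildTocEntries([
  { id: "intro", level: 1, text: "Introduction" },
  { id: "", level: 1, text: "Colour contrast" },
]);

console.log(tocEntries);
```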

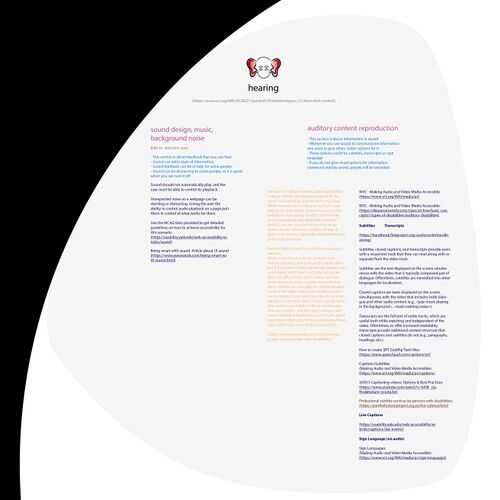

===Illustration=== | |||

During the whole process, I continued to work on illustrations: both animated ones depicting "user skills" and still ones showing "aspects of websites".

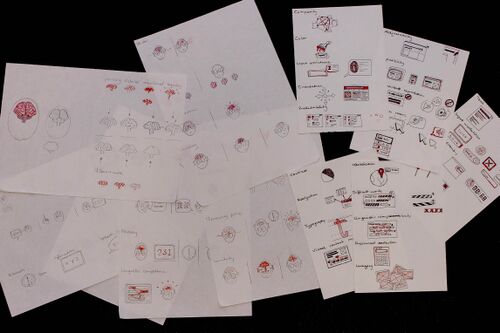

[[File:MIW_Illu_Sketches1.jpg|none|thumb|500px|Development and sketches on paper before digital implementation]] | |||

[[File:HumanIlluBlender.png|none|thumb|500px|Making animated illustrations in Blender]] | |||

{| | |||

|- | |||

| [[File:Attention.gif|none|thumb|500px|Animation: User ability - Attention]] || [[File:Colorvision.gif|none|thumb|500px|Animation: User ability - Color vision]] | |||

|- | |||

| [[File:Comprehension.gif|none|thumb|500px|Animation: User ability - Comprehension]] || [[File:Flexibility.gif|none|thumb|500px|Animation: User ability - Flexibility]] | |||

|- | |||

| [[File:Hearing.gif|none|thumb|500px|Animation: User ability - Hearing]] || [[File:Language.gif|none|thumb|500px|Animation: User ability - Language]] | |||

|- | |||

| [[File:LinguisticCompetence.gif|none|thumb|500px|Animation: User ability - Linguistic competence]] || [[File:Memory.gif|none|thumb|500px|Animation: User ability - Memory]] | |||

|- | |||

| [[File:ModeOfAdress.gif|none|thumb|500px|Animation: User ability - Mode of address]] || [[File:MotorFunctions.gif|none|thumb|500px|Animation: User ability - Motor functions]]

|- | |||

| [[File:ProcessingPace.gif|none|thumb|500px|Animation: User ability - Processing pace]] || [[File:Sensitivity.gif|none|thumb|500px|Animation: User ability - Sensitivity]] | |||

|- | |||

| [[File:Sight.gif|none|thumb|500px|Animation: User ability - Sight]] || | |||

|} | |||

[[File:Illustrations_Website.jpg|none|thumb|900px|Illustrations: Website aspects with regards to user abilities]] | |||

===Conceptual assumptions=== | |||

The '''Wiki''' is a well-established interface for many users. In my mind, it works well for users who: | |||

* have used this interface before, | |||

* are comfortable thinking in hierarchical structures, | |||

* like to focus on specific content, | |||

* use assistive hardware or software. | |||

'''Globe''' is an intuitive 3-dimensional interface. In my mind, it works well for users who: | |||

* want to switch quickly between content, | |||

* want to get an overview of the whole subject, | |||

* are good at spatial thinking, | |||

* are playful and like to interact, | |||

* like to be more stimulated when taking in content. | |||

'''Fold Out''' is a compact and mobile-friendly interface. In my mind, it works well for users who: | |||

* access the website from their mobile phone, | |||

* want to get an overview of the whole subject, | |||

* like to be less stimulated when taking in content, | |||

* are comfortable thinking in hierarchical structures. | |||

'''UI Options''' is an easy to customise and distraction-free environment that remembers the user's preferences. In my mind, it works well for users who: | |||

* have special needs in terms of typography and colour contrasts, | |||

* are willing to take a few steps for a one-time adjustment on the website, | |||

* are overstimulated or distracted by moving content, | |||

* operate the website using only a keyboard (no mouse), | |||

* use assistive hardware or software. | |||

'''Print''' is a minimalist interface that converts selected content into a PDF. In my mind, it works well for users who: | |||

* want to take in content offline, | |||

* want to take in content in printed form, | |||

* prefer to take in content in PDF format, | |||

* want to pass on content to other people in analogue form who are not comfortable using digital technology. | |||

===Final Outcome=== | |||

Make Inclusive Websites is a web index that helps to create websites that work well for a variety of people with diverse abilities. It provides many references to helpful websites, such as texts, tutorials or tools. These are integrated into an environment that provides an easy entry into this complex topic. | |||

Make Inclusive Websites is also a speculative proposal for a different way of making web content accessible and an invitation to discuss it. | |||

The idea is to move away from a one-size-fits-all mentality, yet not put users in the responsibility of going through complex adaptation processes that may be barriers to some. | |||

Make Inclusive Websites proposes the collaborative creation of a variety of different interfaces that allow the same web content to be accessed by a variety of users without the need for much adaptation. | |||

{|

|- | |||

| [[File:MIW_Home.gif|none|thumb|500px|Final home (selection) screen of Make Inclusive Websites]] || [[File:MIW_Wiki.gif|none|thumb|500px|Final Wiki of Make Inclusive Websites]] | |||

|- | |||

| [[File:MIW_Globe.gif|none|thumb|500px|Final Globe interface of Make Inclusive Websites]] || [[File:MIW_FoldOut.gif|none|thumb|500px|Final Fold Out interface of Make Inclusive Websites]] | |||

|- | |||

| [[File:MIW_UIOptions.gif|none|thumb|500px|Final UI Options interface of Make Inclusive Websites]] || [[File:MIW_Print.gif|none|thumb|500px|Final Print interface of Make Inclusive Websites]] | |||

|} | |||

[[https://lehmannmax.de/Thesis/Thesis.html Thesis - Make Inclusive Websites]]

Latest revision as of 10:28, 21 June 2021

[Look at the project documentation here]

My Motivation

- My sister was born with Trisomy 21 (Down syndrome).

- My parents raised her to be self-determined.

- For as long as I can remember my mother worked to support development towards a more inclusive society.

- I went to a primary school that was integrative. I had classes in a group that included children without and some with disabilities.

- I believe that everyone is valuable and should have equal opportunities.

- In my previous studies (Communication Design) I worked on the concept of inclusive factbooks. [See here] [and here]

- I am convinced that equal access to information and knowledge for all people is a basic prerequisite for a just society.

[Read more about my motivation in my thesis]

First Year

Special Issue X: Input Output

Special Issue X - Documentation

For [Special Issue X] I built GLARE, a 16 voice polyphonic synthesizer instrument that is based on Arduino. GLARE module is controlled by gestures only and thereby works in a very intuitive way. This project is based on the approach of simplifying complex processes in such a way that they can be used by a wide range of people with different abilities without much prior knowledge. The sensors can be easily removed from the unit for storage or transport.

In this project, a group publication of 10 circuit boards was produced by the 10 students in vinyl record format. Together with 10 instructions for assembling the various modules, this publication is available from DePlayer in an original edition of 30. The project was also presented there.

[High resolution image of my publication contribution]

All modules could be connected with and reacted to each other.

I was involved in the design of the visual identity of this exhibition.

Special Issue XI: We have secrets to tell you

My full Special Issue XI Documentation is not accessible to the public at this moment.

In [Special Issue XI] I came into first contact with (Semantic) MediaWiki queries. Later in the project, I was mainly involved in coding the publicly accessible part of our web publication. This was the first time for me to code in Javascript. On the website, it is possible to filter content and generate a print document from selected content.

In this project, my focus was on breaking down the collectively used processes into tools and methods. For each collected item I wrote short texts that explain what we used them for. I also developed an icon for each collected item.

Special Issue XII: Radio Implicancies

File:Max Lehmann Special Issue XII notebook.pdf

In [Special Issue XII] I mainly created audio content for our collective weekly broadcast. I was also involved in coding one of the web broadcasting interfaces.

Second Year

Proposal

Concept development

Initial thoughts

- Speculative experimental approach to inclusive interface design

- Simple language vs. Complex language - In which areas is it not possible to use simple language and why? Language as a barrier, as a weapon, as protection...

- What is normal? A publication about the development of norms in societies. Prejudices, conformity... Technological norms and implications? (Queer Technologies) Reading Normal by Allen Frances. [Read more about this in my thesis.]

- Critical examination of the meaning of "normal" and problems that come with it. Is normal just an individual reflection of a perceived average of our surroundings? Normal is consistency in perception?

- Is there a definition of "normal", that is not exclusive towards minority groups? What will future norms be and how can they be changed?

- Interactive (game) website using 2D animation to explain what "normal" is and why

- Barrier-free calibration tool to make a website dynamically adjust to individual users

Inclusive web, user abilities and conditions

- Reduce barriers for web users - inclusive browser, (-plugin), website, wiki: Remove distraction, simplify, add illustration, layout...

- Access to what kinds of information is crucial for equal participation? Who decides?

- How inclusive is the web, especially with regard to websites on which all kinds of "important information" are available?

- Which user skills and conditions can affect equal access to information and knowledge?

Proposal (Summary)

For my master project, I am creating a website that allows for exploration of selected aspects of human diversity, due to which users or groups of users might be disadvantaged when information is presented to them, or they seek to access it.

This is to give an overview of possible necessary adaptations in the process of creating an inclusive publication in terms of design and content. It is also to inform about the human emotions that can be caused by facing a disadvantage in accessing information, maybe because of an individual special feature that has not been thought of in the creation process.

The website will consist of a spherical 3D map. On the 3D map selected aspects of human diversity will be arranged on the basis of their thematic proximity and interconnections. I will create animated illustrations to support the understanding of the information. Additionally, personal reports of various individuals will be provided, telling their experiences of exclusion. I chose the shape of the sphere, as its surface has no ends and no center.

The interface of the website will allow for certain adjustments of the website‘s appearance according to user preferences, like font-size, color-scheme, speed of moving content, audio playback, or language. All texts will either be written in simple language, or each text will be available in different levels of complexity, from which the user can choose according to preference.

I will speak about the different aspects of human diversity exclusively in the form of individual symptoms and refrain from naming diagnoses.

Read my full Project proposal

Illustration

Prototyping

I used the open-source software [Blender] to create a prototype of the interface and to create 2D animated illustrations.

Over time, I developed an understanding of various JavaScript libraries, especially [Three.js].

Over time I developed an interactive sphere interface on which information is arranged.

Check out my complete [Technical process index].

Workshop

Meet - Interface

Together with Avital, I developed a workshop in which we analyzed different websites according to criteria that would normally be used to examine people and social interactions. On the basis of this analysis, we played improvised theatre scenes and processed the insights gained as a group. Read the full [documentation page].

Resubmission

I was asked to resubmit my project proposal. The reasons for this were, as I understand it, that the scope of the proposed project was too large. I was advised to reduce the scope in order not to compromise the quality of my publication.

Accordingly, I narrowed the focus of the project. After analysing the possible channels for communicating information, I decided to focus on the inclusive creation of websites. This was an obvious choice, as the necessary material on this topic was particularly easy to research online. The field of web design also matched the development of my personal interests. Read more about this decision in my [Thesis].

Of the many aspects of human diversity, I decided to limit myself to disabilities because of my personal connection to this topic. Read more about this decision in my [Thesis].

New proposal

I will create an index of online references that can help with the creation of websites that work well for all people, including those with physical or mental disabilities. The references will be combined with individual testimonials of exclusion in this area, as well as illustrations and simple summaries. A wiki will serve both as the primary interface for accessing these contents and as a backend from which I will conduct interface experiments. In these experiments, I will write scripts that create websites by querying contents from the wiki.

Read my full Resubmission.

Check out my [Resubmission Presentation].

Process

Concept / Contents

I gathered a collection of references and tools linked to the topic of the inclusive creation of websites. In the beginning, I used Adobe Illustrator to collect and organize this content.

I settled on clear thematic areas and on the affiliations and connections between them. I relied on existing categorisations such as the Web Content Accessibility Guidelines, but sometimes expanded them with my own.

I did a lot of online research and created bookmarks with keywords for all the content that was relevant to my work. Later, I went through all the websites I had collected and embedded them in my structure.

I then listed these references in as uniform a scheme as possible (title, author, source for content and title, short description for tools) and revised them.

I wrote simple introductions to the different aspects of websites and revised them.

In order to collect the user stories as planned, I first developed a questionnaire in simple language, which I made public through various channels. The only response I received during the whole project came from one of my personal contacts.

I made the questionnaire available as an [online survey] using the free [survey.js library].

I realized that creating such stories in a respectful and authentic way takes time and direct collaboration with the individuals, which I was not able to provide. Due to lack of time and other circumstances, I decided not to make any further efforts to collect these stories. As far as possible, I replaced the missing stories with ones that I found online, some of which I shortened. Where I did not find any, I invite users to share their experiences through the online survey or in the [forum].

Design

The design was strongly adapted to the technical necessities and functionalities of this project.

Early on, I decided on two strongly contrasting colours (#FF014D, #477CE6) which, combined with grey tones, make up my very simple colour scheme. Red marks hierarchically superior elements, while subordinate elements use blue.
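Whether a colour pair contrasts strongly enough can also be checked numerically. The following is only a minimal sketch, using the relative luminance and contrast ratio definitions from WCAG 2.1; the function names are hypothetical and not part of the project code, and the comparison against white is an assumed example background.

```javascript
// Converts one 8-bit sRGB channel to its linear value (WCAG 2.1 definition).
function channelToLinear(value8bit) {
  const c = value8bit / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of a colour given as "#RRGGBB".
function relativeLuminance(hex) {
  const n = parseInt(hex.replace("#", ""), 16);
  const r = (n >> 16) & 0xff;
  const g = (n >> 8) & 0xff;
  const b = n & 0xff;
  return (
    0.2126 * channelToLinear(r) +
    0.7152 * channelToLinear(g) +
    0.0722 * channelToLinear(b)
  );
}

// Contrast ratio between two colours, from 1 (identical) to 21 (black on white).
function contrastRatio(hexA, hexB) {
  const a = relativeLuminance(hexA);
  const b = relativeLuminance(hexB);
  return (Math.max(a, b) + 0.05) / (Math.min(a, b) + 0.05);
}

// The two scheme colours, each compared against a white background (assumption).
for (const colour of ["#FF014D", "#477CE6"]) {
  console.log(colour, contrastRatio(colour, "#FFFFFF").toFixed(2));
}
```

The printed ratios can then be compared with the thresholds that WCAG defines for text of different sizes.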

I also developed a logo consisting of two circles of arrowheads around a broken circle and text, the title of my project. The middle circle, which does not obstruct the arrows, symbolises content that is to be made inclusive. The arrows that go from the outside to the inside point from different angles, without barriers, to the centre of the lettering, where the word "Inclusive" is written. This symbolises the removal of barriers to accessing content. The arrows pointing from the inside to the outside form a frame for the described system. They point from the content outwards, in different directions, reflecting the direction of thinking in my technical proposal and representing the collaborative approach.

The choice of fonts was based on the criterion of readability. I chose a sans-serif font (Century Gothic) for all body text and sub-headings and a slab-serif font (Arvo) for headings. The font sizes are mostly above 18pt.

The illustrations are simple and as minimalistic as possible. Here, too, I limit myself to very few colours. Where information would be communicated through colour, I additionally used textures. For the human figures, I have tried to give as few clues as possible to classify the faces into social groups.

Overall, I try to keep the design consistent in the different parts of the project and to create clarity and calmness through white space. Of course, the interfaces are responsive and, where appropriate, specially adapted for mobile. All buttons and other interactive elements are clearly recognisable and give distinct feedback.

I tried to get feedback on my project from an agency specialising in inclusive design. After the agency had agreed and I had sent the material, it cancelled because of its workload.

Wiki

I structured the content on [my Wiki] using, among other extensions, [Pageforms] and [Cargo]. I set up and published my Wiki, as well as the rest of my publication, on a personal Raspberry Pi.

On my Wiki I implemented Templates and Forms with Page-Forms and stored the information in Cargo tables.

{{References

|headline1=General

|coordhead1=10.000, 285.000, 0.000

|references1=

Use headings to convey meaning and structure;

Tips for Getting Started - W3C Web Accessibility Initiative;

https://www.w3.org/WAI/tips/writing/#use-headings-to-convey-meaning-and-structure;

Section Headings;

Understanding Web Content Accessibility Guidelines (WCAG) 2.1;

https://www.w3.org/WAI/WCAG21/Understanding/section-headings;

Headings and labels;

Understanding Web Content Accessibility Guidelines (WCAG) 2.1;

https://www.w3.org/WAI/WCAG21/Understanding/headings-and-labels.html

|headline2=Dividing Processes

|coordhead2=16.000, 285.000, 0.000

|references2=

Multi-page Forms;

Web Accessibility Tutorials - W3C Web Accessibility Initiative;

https://www.w3.org/WAI/tutorials/forms/multi-page/;

Progress Trackers in UX Design;

Nick Babich;

https://uxplanet.org/progress-trackers-in-ux-design-4319cef1c600;

Multi-step form design: Progress indicators and field labels;

Kristen Willis;

https://www.breadcrumbdigital.com.au/multi-step-form-design-part-1-progress-indicators-and-field-labels/

|linksto=

|belongsto=Complexity

|contains=

}}

Technical process

General

All interfaces retrieve their information from my Wiki through a Cargo query, which is requested in JSON format with the JavaScript "fetch" function.

let fetchhuman = (promise) => {

return fetch("/wiki/index.php?title=Special:CargoExport&tables=human%2C&&fields=human._pageName%2C+human.headline%2C+human.coordinates%2C+human.image1%2C&&order+by=%60cargo__human%60.%60_pageName%60%2C%60cargo__human%60.%60headline%60%2C%60cargo__human%60.%60coordinates__full%60+%2C%60cargo__human%60.%60image1%60&origin=*&limit=100&format=json").then(r=>r.json()).then(data=>{

...
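The long, hand-encoded query string above could also be assembled from its parts. The following is a minimal sketch and not the code used in the project: `buildCargoExportUrl` and `fetchCargoRows` are hypothetical helpers, and it assumes that Special:CargoExport accepts the same parameter names that appear in the URL above (`tables`, `fields`, `order by`, `limit`, `format`).

```javascript
// Hypothetical helper: builds a Special:CargoExport URL for one table.
function buildCargoExportUrl(table, fields, options = {}) {
  const params = new URLSearchParams({
    title: "Special:CargoExport",
    tables: table,
    // Prefix every field with its table name, e.g. "human.headline".
    fields: fields.map((field) => `${table}.${field}`).join(","),
    limit: String(options.limit ?? 100),
    format: "json",
  });
  if (options.orderBy) {
    // Passed through unchanged; the exact ordering syntax is an assumption.
    params.set("order by", options.orderBy);
  }
  return `/wiki/index.php?${params.toString()}`;
}

// Hypothetical helper: fetches the rows and fails on HTTP errors
// instead of trying to parse an error page as JSON.
async function fetchCargoRows(url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Cargo query failed with status ${response.status}`);
  }
  return response.json();
}

const humanUrl = buildCargoExportUrl("human", [
  "_pageName",
  "headline",
  "coordinates",
  "image1",
]);
console.log(humanUrl);
```

`URLSearchParams` takes care of the percent-encoding, so field lists can be changed without editing an encoded string by hand.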

Then all the contents of the query data are rendered into HTML elements.

for (let i = 0; i < data.length; i++) {

var content = data[i];

var div = document.createElement("div");

div.setAttribute("id", content._pageName);

document.body.appendChild(div);

var image1 = document.createElement("img");

image1.setAttribute("src", content.image1);

image1.setAttribute("alt", "Illustration of " + content.headline);

div.append(image1);

var temphead = document.createElement("h2");

temphead.innerHTML = content._pageName;

temphead.setAttribute("id", content.headline+"head");

div.appendChild(temphead);

...
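The rendering step can also be written as a small function that receives the document as a parameter, which makes it testable outside the browser. This is a sketch under assumptions: `renderTopic` is a hypothetical name, the field names are taken from the query above, and it uses `textContent` instead of `innerHTML` so that page names are always treated as plain text.

```javascript
// Hypothetical helper: renders one query row into a <div> with image and heading.
// `doc` is any object with a DOM-like createElement(); in the browser, pass `document`.
function renderTopic(doc, content) {
  const div = doc.createElement("div");
  div.setAttribute("id", content._pageName);

  const image = doc.createElement("img");
  image.setAttribute("src", content.image1);
  // The alt text describes the illustration for screen reader users.
  image.setAttribute("alt", "Illustration of " + content.headline);
  div.appendChild(image);

  const heading = doc.createElement("h2");
  // textContent inserts the page name as text, never as markup.
  heading.textContent = content._pageName;
  heading.setAttribute("id", content.headline + "head");
  div.appendChild(heading);

  return div;
}
```

In the browser, the result would be attached with `document.body.appendChild(renderTopic(document, content))`.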

Some parts of the data, like the references, are delivered as one flat list and need to be sorted into links, headlines and descriptions.

let references = content.references1;

// Pad the list so that every link lands on an index divisible by 3.
references.unshift("Empty");

let ref_link = references.filter(function(value, index) {

return index % 3 == 0;

});

// Remove the "Empty" placeholder again.
ref_link.shift();

// Indexes 1, 4, 7, ... hold the headlines.
let ref_head = references.filter(function(value, index) {

return index % 3 == 1;

});

// Indexes 2, 5, 8, ... hold the descriptions.
let ref_desc = references.filter(function(value, index) {

return index % 3 == 2;

});

for (let i = 0; i < ref_head.length; i++) {

if (ref_head[i] !== "") {

var ref_headline = ref_head[i];

var ref_description = ref_desc[i];

var ref_url = ref_link[i];

...
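The same sorting can be expressed without the placeholder entry by walking through the flat list in steps of three. This is only a sketch of an alternative, not the project's code: `groupReferences` is a hypothetical name, and it assumes that each reference is stored as headline, description and link, in that order, as in the template call shown in the Wiki section.

```javascript
// Hypothetical helper: turns [headline, description, link, headline, ...]
// into a list of objects and skips entries without a headline.
function groupReferences(flatList) {
  const references = [];
  for (let i = 0; i < flatList.length; i += 3) {
    // Missing trailing values default to an empty string.
    const [headline = "", description = "", link = ""] = flatList.slice(i, i + 3);
    if (headline !== "") {
      references.push({ headline, description, link });
    }
  }
  return references;
}

const example = groupReferences([
  "Section Headings",
  "Understanding Web Content Accessibility Guidelines (WCAG) 2.1",
  "https://www.w3.org/WAI/WCAG21/Understanding/section-headings",
]);
console.log(example[0].headline, example[0].link);
```

Unlike the version with `unshift`, this sketch leaves the original array unchanged, so the query data can be reused by other interfaces.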

I used the "belongsto" information in my Cargo tables to assign the subtopics to the corresponding parent topics.

var belongsto = content.belongsto[0];

var parent = document.getElementById(belongsto);

...

parent.appendChild(div);
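Independent of the DOM, the parent and child relation can be collected into a lookup table first. The sketch below assumes that `belongsto` arrives as an array, as in the line above, and that topics without a parent have an empty or missing value; `groupByParent` and the page name "Headings" are hypothetical.

```javascript
// Hypothetical helper: maps each parent topic to the page names of its subtopics.
function groupByParent(rows) {
  const childrenByParent = new Map();
  for (const row of rows) {
    const parent = Array.isArray(row.belongsto) ? row.belongsto[0] : undefined;
    if (!parent) {
      continue; // top-level topic, nothing to assign
    }
    if (!childrenByParent.has(parent)) {
      childrenByParent.set(parent, []);
    }
    childrenByParent.get(parent).push(row._pageName);
  }
  return childrenByParent;
}

const topics = groupByParent([
  { _pageName: "Complexity", belongsto: [] },
  { _pageName: "Headings", belongsto: ["Complexity"] },
]);
console.log(topics.get("Complexity"));
```

With such a table, a subtopic whose parent does not exist on the page can be detected before `getElementById` returns `null`.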

On this basis, the information is then processed according to the requirements of the different interfaces. Other elements, like the sidebar navigation, are also created from the query data.

//First query

var sidebar = document.getElementById("ulist");

var li = document.createElement("li");

sidebar.appendChild(li);

var listelem = document.createElement("A");

listelem.innerHTML = content._pageName;

listelem.setAttribute("href", "#"+content._pageName);

listelem.setAttribute("id", content._pageName+"_listelem");

li.appendChild(listelem);

var sublist = document.createElement("ul");

sublist.setAttribute("id", content._pageName+"_sublist");

li.appendChild(sublist);

//Second query

var listparent = document.getElementById(content.belongsto[0]+"_sublist");

var li = document.createElement("li");

listparent.appendChild(li);

var listelem = document.createElement("A");

listelem.innerHTML = content._pageName;

listelem.setAttribute("href", "#"+content._pageName);

listelem.setAttribute("id", content._pageName+"_listelem");

li.appendChild(listelem);

On all my interfaces, I have paid special attention to keyboard navigation.

For some interfaces, I wrote a function that allows navigation of the sidebar with the arrow keys.

var canvasKBcontrol = true;

$(document).keyup(function(e){

if ($(":focus").hasClass( "sidebar" )||$(":focus").hasClass( "sidebar_master" )) {

canvasKBcontrol = false;

} else {

canvasKBcontrol = true;

};

if (e.keyCode == 40 && canvasKBcontrol == false) {

var next0 = $(".listelem.sidebar.focused").next().find('a.listelem.sidebar').first();

var next1 = $(".listelem.sidebar.focused").parent().next().find('a.listelem.sidebar').first();