Prototyping/2019-2020/T3: Difference between revisions


| (257 intermediate revisions by 3 users not shown) | |||


Prototyping session Spring 2020, in the context of [[:Category:Implicancies|Special Issue #12: Radio Implicancies]]

{{underconstruction}} | |||

==01==

In the work for the Special Issue, we will employ a strategy of [[Implicancies Channels and Tools|working between systems]] | |||

The proposition is to publish prototyping work alongside other developments of the Special Issue in a rolling fashion, i.e. following an "early and often" approach to publishing.

Focus on using the wiki as a "cookbook" -- a place to record useful techniques for others. | |||

* Review setup with [[liquidsoap]] and [[icecast]] from the sandbox to the lurk server. | |||

* Review setup of [https://docs.gitea.io/en-us/webhooks/ webhook] connecting [https://git.xpub.nl/murtaugh/SI12-prototyping git repo] with [https://hub.xpub.nl/sandbox/12/ webpage on sandbox] | |||

* Example of adding a [[makefile]] to the workflow. Idea of separating sources from outputs (targets in make speak). | |||

* Connecting to the shared terminal with [[tmux]] | |||

So specifically: | |||

=== Connecting to the shared sandbox terminal === | |||

ssh ''sandbox'' | |||

tmux -S /tmp/radio attach -t radio -r | |||

Where "ssh sandbox" assumes you have a "sandbox" entry in your .ssh/config file. | |||

=== .gitignore === | |||

We will follow a "sources only" approach to using the git whereby only "source" files will get committed to a repo. The idea is that the tools are in place for anyone who clones the directory to "build" the other files using the makefile + tools. | |||

For this reason the .gitignore file contains: | |||

*.html | |||

So that if you try to: | |||

git add README.html | |||

You will see: | |||

The following paths are ignored by one of your .gitignore files: | |||

README.html | |||

Use -f if you really want to add them. | |||

=== Why .gitignore === | |||

* Lighter/smaller repos, faster to share, with less need for huge disk space just to change. | |||

* Fewer conflicts -- many "artificial" conflicts arise when files that get generated are also version controlled. It's simpler to track only the sources, and let programs like [[make]] take care of updating the rest from there.

=== more .gitignore === | |||

See the "live" [https://git.xpub.nl/murtaugh/SI12-prototyping/_edit/master/.gitignore .gitignore] | |||

* Also ignoring media files (use scp to place... manage differently) -- goal: keep the core repository as "light" as possible. Git is not ideal for managing big binary files (they don't tend to change in a way that lends itself to diff-ing).

* Temporary files (things like foo~) | |||

* Python derived files / cache (python generates __pycache__ folders as needed). | |||
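The ignore rules described above can be approximated in a few lines of Python. This is only a rough sketch: real .gitignore matching has more rules (directories, negation with "!", path anchoring), and the helper name <code>is_ignored</code> and the pattern list are assumptions made for illustration.

```python
from fnmatch import fnmatch

# Patterns mirroring the kinds of files mentioned above (illustrative list).
IGNORE_PATTERNS = ["*.html", "*~", "__pycache__"]


def is_ignored(filename, patterns=IGNORE_PATTERNS):
    """Return True if the bare filename matches any shell-style ignore pattern."""
    return any(fnmatch(filename, pattern) for pattern in patterns)


ignored_html = is_ignored("README.html")   # generated file
tracked_source = is_ignored("README.md")   # source file
ignored_backup = is_ignored("foo~")        # editor backup file
```

Files for which the function returns True are the ones git would refuse to add without -f.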

=== (why) make radio === | |||

Why use make? Makefiles are like "executable notebooks": a really effective (and time tested) way to document and share scripts, workflows, and workarounds using diverse tools.

What's the catch? Make comes from free software programming culture and was created to support programming work rather than publishing. However, as managing programmers is like "herding cats", the tool is extremely flexible and porous, and well integrated with the command-line and [[BASH]] scripting. As such it's compatible with the richness of command-line tools available. | |||
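The basic decision make takes for each target can be sketched in Python: a target is rebuilt when it is missing or older than any of its sources. This illustrates the idea only and is not how make itself is implemented; <code>needs_rebuild</code> and the file names are hypothetical.

```python
import os
import tempfile


def needs_rebuild(target, sources):
    """Return True if target is missing or older than any of its sources."""
    if not os.path.exists(target):
        return True
    target_mtime = os.path.getmtime(target)
    return any(os.path.getmtime(src) > target_mtime for src in sources)


# Small demonstration in a throwaway directory (hypothetical file names).
with tempfile.TemporaryDirectory() as tmp:
    source = os.path.join(tmp, "README.md")
    target = os.path.join(tmp, "README.html")
    with open(source, "w") as f:
        f.write("# hello\n")
    missing_target = needs_rebuild(target, [source])  # target not built yet
    with open(target, "w") as f:
        f.write("<h1>hello</h1>\n")
    # Set the timestamps explicitly so the result does not depend on clock resolution.
    os.utime(source, (1000, 1000))
    os.utime(target, (2000, 2000))
    fresh_target = needs_rebuild(target, [source])    # target newer than source
    os.utime(source, (3000, 3000))
    stale_target = needs_rebuild(target, [source])    # source edited after the build
```

This is why committing only sources works: anyone who clones the repo can regenerate the targets, and make skips the ones that are already up to date.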

* [https://zgp.org/static/scale12x/# git and make not just for code] presentation about using [[makefile]] and [[git]] in publishing workflows | |||

=== practical to do's === | |||

* Update issue.xpub.nl to point http://issue.xpub.nl/12/ to (redirect to?) the working space of SI12.

* Understand and make use of the git & make based workflow. | |||

* Familiarity using liquidsoap to create icecast streams. | |||

* Use liquidsoap to create flexible streams that can be interrupted | |||

* Use [[top]] to check the load on the sandbox | |||

* Use liquidsoap as an editing tool to assemble, mix, and filter audio | |||

* Explore some basic structural elements of liquidsoap (playlist, rotate, sequence). | |||

=== Make Noise === | |||

* the [[Sox#Generating_tones_or_noise|colors of noise]] (using sox to generate white, brown, and pink noise) | |||

=== Playlist === | |||

Liquidsoap's [https://www.liquidsoap.info/doc-1.4.2/reference.html#playlist playlist] | |||

Try... | |||

* reload | |||

* reload_mode | |||

Exercise: | |||

1. | |||

ssh to the sandbox | |||

cd | |||

cp /var/www/html/12/liquidsoap/playlist.liq . | |||

cp /var/www/html/12/liquidsoap/playlist.m3u . | |||

cp /var/www/html/12/liquidsoap/passwords.liq . | |||

Copy the whole folder somewhere | |||

cp -r /var/www/html/12/liquidsoap .

or | |||

git clone https://git.xpub.nl/XPUB/SI12-prototyping.git | |||

(read-only) | |||

Edit it with nano... | |||

Make sure you change the log file to be specific to you... | |||

set("log.file.path","/tmp/<script>_YOURNAME.log") | |||

and try to run it... | |||

liquidsoap -v playlist.liq | |||

2. Clone the repository to your laptop...

apt install liquidsoap | |||

brew install liquidsoap | |||

git clone ssh://git@git.xpub.nl:2501/XPUB/SI12-prototyping.git | |||

Change the playlist to list local audio files... (NB: they need to be stereo) | |||

Change "radioimplicancies.ogg" to whatever name you want... | |||

=== Live or Fallback === | |||

* liquidsoap fallbacks | |||

* mixing diverse sources of material... | |||

* working with silence | |||

* dynamics compression and loudness | |||

* test streaming to an interruptible mount point...

* BASIC cutting / format manipulation with [[ffmpeg]] such as [[ffmpeg#Extracting_a_specific_part_of_a_media_file | extracting chunks with a start / duration]] | |||

=== Mixing audio sources === | |||

* Filtering for radio/streams: | |||

* compression (as in [https://en.wikipedia.org/wiki/Dynamic_range_compression Dynamic range compression], which is different from [https://en.wikipedia.org/wiki/Data_compression#Audio audio data compression])

* "Loudness" and "Normalisation"... What is replaygain and why can it help? | |||

* [[liquidsoap]] for [[Liquidsoap#Sound_processing|sound processing]] | |||

=== Resources === | |||

* About the materiality of digital audio: samples, sampling rate, sample width, format, channels... See [[Sound and listening]] | |||

* Playing "[[Raw audio|raw]]" and non-sound data ... See [[Sonification]] | |||

* [https://freemusicarchive.org/genre/Field_Recordings field recordings @ freemusicarchive] | |||

* William Burroughs: [http://www.ubu.com/papers/burroughs_gysin.html cut-ups] + [https://hub.xpub.nl/bootleglibrary/book/70 The Electronic Revolution] | |||

* Example: [http://activearchives.org/wiki/Spectrum_sort spectrumsort] | |||

* [http://www.ubu.com/sound/ sound art on ubu] | |||

=== Assignment(s) for next week === | |||

* Make a "self-referential" '''radio program''' with liquidsoap... that is one where the content and the material of its transmission and its tools of production are reflected.

* Document your use of liquidsoap, or other tool(s) in the xpub wiki in the style of a (code) "Cookbook" recipe. | |||


=== Listen together === | |||

* Listen: [[Alvin Lucier]]'s [http://ubusound.memoryoftheworld.org/source/Lucier-Alvin_Sitting.mp3 I am Sitting in a room] | |||

* Listen: [[Janet Cardiff]] [http://ubusound.memoryoftheworld.org/cardiff_janet/slow_river/Cardiff-Janet_A-Large-Slow-River_2001.mp3 A Large Slow River] | |||

* Listen/watch: [http://www.ubu.com/film/logue_anderson.html Laurie Anderson microphone piece] | |||

* Listen/watch: [https://www.youtube.com/watch?v=23njMQx0UuQ&t=71s Gary Hill] | |||

Also for next week, check out [http://radio.aporee.org/ Radio Aporee]. | |||

== 02 ==

https://pad.xpub.nl/p/prototyping190520 | |||

Set of things to work on / research, make small groups, work on the tasks, report back | |||

* Play/testing an interruptible stream

* How do we archive a [[liquidsoap]] program (adding a file as output to the stream) | |||

* Render a waveform with ffmpeg | |||

* "Simple" interface (mapping timeupdate to SVG/canvas) | |||

[[tmux]] | |||

=== Exercise (afternoon) === | |||

try to type in the following, reading.. | |||

Using the "[https://git.xpub.nl/XPUB/SI12-prototyping/src/branch/master/player player] code" | |||

ssh sandbox | |||

cd | |||

mkdir -p public_html | |||

cd public_html | |||

look at: https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/ | |||

You should see your "homepage" on the sandbox (maybe empty)... | |||

Copy the player folder to your public_html... | |||

cp -r /var/www/html/12/player/ . | |||

cd player | |||

now you should see the files here: | |||

https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/player/ | |||

ls | |||

cat numbers.txt | |||

cat numbers.txt | bash -x speaklines.sh | |||

ls | |||

cat speaklines.sh | |||

(if you want to modify the numbers) | |||

nano numbers.txt | |||

then... | |||

cat numbers.txt | bash -x speaklines.sh | |||

again... | |||

LOOK at your folder from the browser: https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/player/ | |||

ogginfo speech01.ogg | |||

ogginfo speech02.ogg | |||

ls speech*.ogg | |||

ls speech*.ogg > counting.m3u | |||

cat counting.m3u | |||

cat counting.file.liq | |||

liquidsoap -v counting.file.liq | |||

Take a look at counting.ogg (in the browser) | |||

ogginfo counting.ogg | |||

Take a look at dream.html (open it in your browser).

Open the console and look at the debugging messages while you scroll around. The console is the browser's web developer console (in Firefox: Tools > Web Developer > Web Console). While the video is playing, you should see the subtitle text appear there.

(Shortcuts: ctrl-shift-I or ctrl-shift-K depending on your browser; cmd-option-J in Chrome on a Mac.)

ogginfo counting.ogg | python3 ogginfo-to-srt.py --json | |||

ogginfo counting.ogg | python3 ogginfo-to-srt.py | |||

ogginfo counting.ogg | python3 ogginfo-to-srt.py --vtt | |||

and finally | |||

ogginfo counting.ogg | python3 ogginfo-to-srt.py --vtt > counting.vtt | |||

cp dream.html counting.html | |||

Adjust the html to use counting.ogg and counting.vtt (no path, just the filename) | |||

Change the video tag to an audio tag... | |||
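The .vtt file produced in the steps above is plain text in the WebVTT format: a "WEBVTT" header followed by cues, each with a start and end time and a caption. As a minimal sketch of that format (this is not the ogginfo-to-srt.py script, whose internals are not shown here; the function names and cue data are hypothetical):

```python
def vtt_timestamp(seconds):
    """Format a time in seconds as a WebVTT timestamp, HH:MM:SS.mmm."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3600000)
    minutes, millis = divmod(millis, 60000)
    secs, millis = divmod(millis, 1000)
    return "{:02d}:{:02d}:{:02d}.{:03d}".format(hours, minutes, secs, millis)


def make_vtt(cues):
    """Build a WebVTT document from (start, end, text) tuples, times in seconds."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append("{} --> {}".format(vtt_timestamp(start), vtt_timestamp(end)))
        lines.append(text)
        lines.append("")  # a blank line separates cues
    return "\n".join(lines)


# Hypothetical cue data: one caption per spoken number.
cues = [(0.0, 1.5, "one"), (1.5, 3.25, "two")]
vtt = make_vtt(cues)
```

Writing the resulting string to a file such as counting.vtt gives something a track element in the player page can load.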

links: | |||

https://trac.ffmpeg.org/wiki/Waveform | |||

try | |||

ffmpeg -i counting.ogg -filter_complex "showwavespic=s=1024x120:colors=000000" -frames:v 1 -y counting.png | |||

=== Notes === | |||

* "Live elements" + ways to integrate (metadata) with player page. | |||

* "Radio programs" with liquidsoap... for instance, mixing a set of quotes, music or how might "jingles" function in a longer stream... What structural elements exist in liquidsoap to compose a sequence (like rotate, mix) | |||

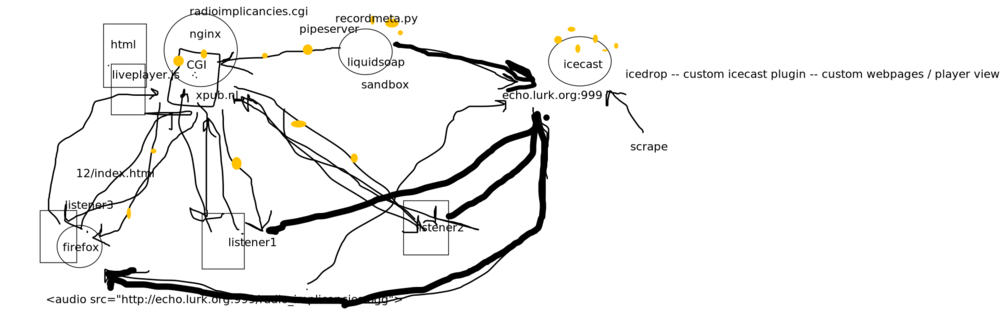

* Review / extend the between systems diagram -- Q: How to design a useful system for the metadata. | |||

* Frames (and iframes) ... a brief history? Window.postMessage | |||

* XSS/"Cross-site scripting": Using document.domain, CORS, cgi/php relays, JSONP | |||

* The difficult history of "shared whiteboards" ... [https://diversions.constantvzw.org/wiki/index.php?title=Eventual_Consistency Eventual consistency] http://www.processwave.org/ [https://medium.com/bpxl-craft/building-a-peer-to-peer-whiteboarding-app-for-ipad-2a4c7728863e recent ipad app] [https://online.visual-paradigm.com/diagrams/features/seamless-collaboration/ VP] [https://miro.com/ miro] might also look at alternatives like [http://hotglue.me hotglue] or [https://www.yourworldoftext.com/ YWOT] -- projects that are obscure (why? "artistic" nature/lack of entrepreneur-speak, lack of "cutting edge" technology, radical take on collaboration, ultimately trapped in proof of concept, but not really usable?) ... POC with [https://github.com/SVG-Edit/svgedit SVG editor] and using [https://try.gitea.io/api/swagger#/repository/repoUpdateFile gitea API] as backend ? (is there an API for committing files?)... granularity of collaboration ... "realtime" vs. "shared"... what if one at a time editing is OK, given a workable/fluid social protocol around it?

* authentication | |||

* Visualization "sound cloud" style of a rendered stream ... showing structure... | |||

== 03 == | |||

RADIO PROTOCOLS aka METADATA, or STREAMING TEXT | |||

https://pad.xpub.nl/p/prototyping260520 | |||

=== Radio Protocols === | |||

In one sense ("media"), radio protocols could be considered the conventions of a radio station, involving structural elements of "programming" in the sense of what gets played when. It involves "formats" (weather report, traffic report, requests), schedules (news bulletin on the hour), and programs (Jazz brunch).

In an informatics/computer science context, ''protocols'' are also social agreements made around technical formats (like HTML, ogg/mp3) often involved with how different programs / processes can communicate with each other, often in the context of a network. For instance [[HTTP]] is the protocol that occurs between web browser and web server to request and then transmit the contents of a web page. Streaming media has specialized protocols (like ...), though the Icecast server we use uses a [https://gist.github.com/ePirat/adc3b8ba00d85b7e3870 variation of HTTP]. Icecast also has related protocols called [https://cast.readme.io/docs/icy ICY] related to managing/requesting the metadata of a stream.

Protocols can range from "ad hoc" best practices / hacks, to highly formalized processes. | |||

Many protocols are based on simple exchanges of [https://en.wikipedia.org/wiki/Text-based_protocol text], typically formatted in lines (i.e. each line is a significant "unit" for transmitting and requesting information from a server).
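To make the idea of a line-based protocol concrete, here is a small Python sketch that reads an HTTP-style response head line by line: the first line is the status, each following line is a "Name: value" header, and an empty line ends the block. The sample text is made up for illustration and is not captured from the Icecast server.

```python
def parse_response_head(text):
    """Split a line-based response head into its status line and a dict of headers."""
    lines = text.splitlines()
    status_line = lines[0]
    headers = {}
    for line in lines[1:]:
        if not line:  # an empty line ends the header block
            break
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status_line, headers


# A made-up response head, for illustration only.
sample = "HTTP/1.0 200 OK\r\nContent-Type: application/ogg\r\nServer: example\r\n\r\n"
status, headers = parse_response_head(sample)
```

Because every unit is a line of text, such exchanges can also be read and filtered with ordinary command-line tools.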

Based on the question, "how might we share the terminal as part of the broadcast?"... We are going to be looking at how the textual output of the [[liquidsoap]] server could (1) be transmitted to listeners, and (2) be filtered and used as a novel protocol for the Radio Implicancies transmissions.

Consider searching for "[https://stackoverflow.com/questions/6115531/is-it-possible-to-get-icecast-metadata-from-html5-audio-element syncing metadata with icecast]"... | |||

=== preparing and testing a stream with replay gain === | |||

How might we make a [[Liquidsoap#Editing | test stream]] to hear smaller samples of each element in a playlist?

And here, a [https://blakeniemyjski.com/blog/how-to-normalize-home-volume-levels-with-node-red/ somewhat freaky example] of audio level normalization and home automation gone perhaps too far.

=== "Random Access" === | |||

Dynamic playlist(s) | |||

* Add (random) excerpts of "raw" materials ?! | |||

* How to combine one or more playlists, with randomness. | |||

* How to use jingles to "join" elements | |||

* How might "bumpers" function to mark beginnings and ends of "programs". | |||

* How might we make the program more inviting for outside/new listeners? (Meta programming?) | |||

=== regular expressions === | |||

* [[grep]] | |||

* [https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide/Regular_Expressions Regular Expressions in Javascript] | |||

=== pipeserver === | |||

Use cue_cut and annotation to prepare a test stream. | |||

* How might we filter the messages that can be sent to the stream (how to filter with commandline)

* Add metadata to the audio files | |||

* Add a metadata "reporter" to the liq | |||

* Run liq + pipe to pipeserver | |||

* Adjust pipeserver.html | |||
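The filtering step in the list above can be done with [[grep]]; the same idea as a Python sketch, using a regular expression to let through only some lines. The sample lines are invented for illustration and are not real liquidsoap output; <code>filter_lines</code> is a hypothetical helper.

```python
import re


def filter_lines(lines, pattern):
    """Yield only the lines that match the regular expression (like grep)."""
    regex = re.compile(pattern)
    for line in lines:
        if regex.search(line):
            yield line


# Invented example lines, not real liquidsoap output.
sample_log = [
    "debug: buffer ok",
    "meta: title=speech01",
    "debug: buffer ok",
    "meta: title=speech02",
]
meta_lines = list(filter_lines(sample_log, r"^meta:"))
```

In a pipeline the same function could be fed sys.stdin, printing each match with flush=True so that lines reach the next program immediately instead of waiting in a buffer.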

=== commandline calisthenics === | |||

ls *.ogg | wc -l | |||

ls *.ogg > playlist.m3u | |||

cat playlist.m3u | wc -l | |||

# multitasking commandline style | |||

nano foo.txt | |||

ctrl-z | |||

fg | |||

ctrl-z | |||

ps | |||

# PID are ''process ids'' Note which one is nano then... | |||

fg XXX | |||

ps aux | grep liquidsoap | |||

=== creating a custom pipeserver interface === | |||

cd | |||

cd public_html | |||

cp /home/mmurtaugh/pipeserver/pipeserver.html . | |||

nano pipeserver.html | |||

Add | |||

<audio controls src="http://echo.lurk.org:999/radioimplicancies_test.ogg"></audio> | |||

Change line 31 to look like: | |||

var ws_addr = 'ws://'+window.location.host+'/pipe', | |||

=== notes === | |||

* replaygain, Loudness normalization vs. Dynamic normalisation / compression: script to test first x sec each source... just liquidsoap? | |||

* metadata hook | |||

* [[liquidsoap]] server? / telnet? | |||

* countdown ... (say protocol, add?) | |||

* [http://manpages.ubuntu.com/manpages/artful/man1/vorbisgain.1.html vorbisgain] is a tool following the [[ReplayGain]] algorithm, that adds metadata to an OGG audio file describing the loudness of the file. It was created for music players to be able to keep a consistent audio level between different albums. | |||

* pipebot? | |||

* materiality of timed text ... epicpedia ... etherlamp... | |||

* chat archive to vtt | |||

* podcast feed ? | |||
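Regarding the ReplayGain note above: the loudness metadata is a gain expressed in decibels, and a player applies it by multiplying every sample by 10^(dB/20). A minimal sketch of that arithmetic, assuming a tag value written like "-6.02 dB" (the exact tag format is an assumption here, and the function names are hypothetical):

```python
def parse_gain(tag_value):
    """Parse a value such as "-6.02 dB" into a float number of decibels."""
    return float(tag_value.strip().split()[0])


def db_to_factor(gain_db):
    """Convert a gain in decibels to a linear amplitude factor."""
    return 10 ** (gain_db / 20.0)


def apply_gain(samples, gain_db):
    """Scale a list of sample values by the given gain in decibels."""
    factor = db_to_factor(gain_db)
    return [sample * factor for sample in samples]


gain = parse_gain("-6.02 dB")
quieter = apply_gain([1.0, -0.5, 0.25], gain)  # roughly half the amplitude
```

A gain of about -6 dB halves the amplitude, and 0 dB leaves the samples unchanged. Unlike dynamic range compression, this is one fixed factor for the whole file.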

=== broadcast notes === | |||

Set a log file on radio2: | |||

set("log.file",true) | |||

set("log.file.path","<script>.log") | |||

set("log.stdout",true) | |||

Follow the log with tail -f (follow) and pipe to the pipeserver: | |||

tail -f 12.2.livetest.log | pipeserver.py --host 0.0.0.0 | |||
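Judging only from the shell.html frontend documented on this page, the browser expects JSON messages of two shapes: a log line (src "stdin" with a "line" field) and a listener count (src "connect" with a "connections" field). The following sketch builds such messages; it is inferred from the frontend alone, and the actual pipeserver.py may work differently. The function names are hypothetical.

```python
import json


def stdin_message(line):
    """Wrap one line of piped text in the JSON shape the frontend displays."""
    return json.dumps({"src": "stdin", "line": line.rstrip("\n")})


def connect_message(connections):
    """Build the JSON message that reports the number of active connections."""
    return json.dumps({"src": "connect", "connections": connections})


line_msg = stdin_message("hello radio\n")
count_msg = connect_message(3)
```

Note that the frontend only displays a message when its "line" field is non-empty, so blank lines would not appear in the page.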

==== shell.html ==== | |||

aka pipeserver frontend...

<source lang="html4strict"> | |||

<!DOCTYPE html> | |||

<html> | |||

<head> | |||

<meta charset="utf-8"> | |||

<title>shell</title> | |||

<style> | |||

body { | |||

background: #888; | |||

} | |||

body.connected { | |||

background: white; | |||

} | |||

#shell { | |||

white-space: pre-wrap; | |||

height: 25em; | |||

width: 100%; | |||

font-family: monospace; | |||

color: white; | |||

font-size: 14px; | |||

background: black; | |||

overflow-y: auto; | |||

} | |||

</style> | |||

</head> | |||

<body> | |||

<p><span id="connections">0</span> active connections</p> | |||

<div id="shell"></div> | |||

<script> | |||

var ws_addr = 'wss://'+window.location.host+"/12/pipe/", | |||

sock = null, | |||

shell = document.getElementById("shell"), | |||

connections = document.getElementById("connections"); | |||

function connect () { | |||

sock = new WebSocket(ws_addr); | |||

sock.onopen = function (event) { | |||

console.log("socket opened"); | |||

document.body.classList.add("connected"); | |||

// sock.send(JSON.stringify({ | |||

// src: "connect", | |||

// })); | |||

}; | |||

sock.onmessage = function (event) { | |||

// console.log("message", event); | |||

if (typeof(event.data) == "string") { | |||

var msg = JSON.parse(event.data); | |||

// console.log("message JSON", msg); | |||

if (msg.src == "stdin" && msg.line) { | |||

// Show all lines | |||

var line = document.createElement("div"); | |||

line.classList.add("line"); | |||

line.textContent = msg.line; // textContent shows log output literally instead of parsing it as HTML

shell.appendChild(line); | |||

// scroll to bottom | |||

shell.scrollTop = shell.scrollHeight; | |||

} else if (msg.src == "connect") { | |||

connections.innerHTML = msg.connections; | |||

} | |||

} | |||

}; | |||

sock.onclose = function (event) { | |||

// console.log("socket closed"); | |||

connections.innerHTML = "?"; | |||

document.body.classList.remove("connected"); | |||

sock = null; | |||

window.setTimeout(connect, 2500); | |||

} | |||

} | |||

connect(); | |||

</script> | |||

</body> | |||

</html></source> | |||

==== live_interrupt_withmetadata.liq ==== | |||

"RADIO1" program, with a callback to recordmeta.py, a script that relays the data (via POSTing to a cgi script on (issue.)xpub.nl)

<source lang="bash"> | |||

# /var/www/radio/live_interrupt_withmetadata.liq | |||

set("log.file",false) | |||

# set("log.file.path","/tmp/<script>.log") | |||

set("log.stdout",true) | |||

%include "/srv/radio/passwords.liq" | |||

# Add the ability to relay live shows | |||

radio = | |||

fallback(track_sensitive=false, | |||

[input.http("http://echo.lurk.org:999/radioimplicancies_live.ogg"), | |||

single("brownnoise.mp3")]) | |||

################### | |||

# record metadata | |||

scripts="/var/www/html/radio/" | |||

def on_meta (meta) | |||

# call recordmeta.py with json_of(meta) | |||

data = json_of(compact=true,meta) | |||

system (scripts^"recordmeta.py --timestamp --post https://issue.xpub.nl/cgi-bin/radioimplicancies.cgi "^quote(data)) | |||

# PRINT (debugging) | |||

#print("---STARTMETA---") | |||

#list.iter(fun (i) -> print("meta:"^fst(i)^": "^snd(i)), meta) | |||

# data = string.concat(separator="\n",list.map(fun (i) -> fst(i)^":"^snd(i), meta)) | |||

#print ("---ENDMETA---") | |||

end | |||

radio = on_metadata(on_meta, radio) | |||

######################################## | |||

radio = mksafe(radio) | |||

output.icecast(%vorbis, | |||

host = ICECAST_SERVER_HOST, port = ICECAST_SERVER_PORT, | |||

password = ICECAST_SERVER_PASSWORD, mount = "radioimplicancies.ogg", | |||

radio) | |||

</source> | |||

==== recordmeta.py ==== | |||

<source lang="python"> | |||

#!/usr/bin/env python3 | |||

import sys, argparse, datetime, json | |||

import requests | |||

# todo: add timestamp | |||

ap = argparse.ArgumentParser("") | |||

ap.add_argument("data") | |||

ap.add_argument("--post") | |||

ap.add_argument("--logfile") | |||

ap.add_argument("--timestamp", default=False, action="store_true") | |||

args = ap.parse_args() | |||

data = json.loads(args.data) | |||

if args.timestamp: | |||

data['time'] = datetime.datetime.utcnow().isoformat() | |||

if args.logfile: | |||

with open (args.logfile, "a") as f: | |||

print (json.dumps(data), file=f) | |||

if args.post: | |||

r = requests.post(args.post, json=data) | |||

resp = r.content.decode("utf-8").strip() | |||

print ("POST response ({}): {}".format(r.status_code, resp)) | |||

</source> | |||

==== radioimplicancies.cgi ==== | |||
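The source of this script is not recorded on this page. As a purely hypothetical sketch (not the script running on issue.xpub.nl), a CGI consistent with recordmeta.py POSTing JSON and liveplayer.js fetching an array of rows might look like the following. The storage path, the RADIO_META_PATH variable, and all function names are assumptions.

```python
#!/usr/bin/env python3
import json
import os
import sys

# Hypothetical storage location: one JSON object per line.
DATA_PATH = os.environ.get("RADIO_META_PATH", "/tmp/radioimplicancies.jsonl")


def load_rows(path):
    """Read all stored metadata rows (an empty list if nothing is stored yet)."""
    if not os.path.exists(path):
        return []
    with open(path) as f:
        return [json.loads(line) for line in f if line.strip()]


def append_row(path, row):
    """Append one metadata row to the store."""
    with open(path, "a") as f:
        f.write(json.dumps(row) + "\n")


def handle(method, body, path):
    """Return the JSON text of the response for a GET or POST request."""
    if method == "POST":
        append_row(path, json.loads(body))
        return json.dumps({"ok": True})
    return json.dumps(load_rows(path))


if __name__ == "__main__":
    # CGI passes the request method and body length in environment variables.
    method = os.environ.get("REQUEST_METHOD", "GET")
    length = int(os.environ.get("CONTENT_LENGTH") or 0)
    body = sys.stdin.read(length) if method == "POST" else ""
    print("Content-Type: application/json")
    print()
    print(handle(method, body, DATA_PATH))
```

Returning every row on each GET is simple but grows over time; returning only the most recent rows would make the polling lighter.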

<source lang="python"> | |||

</source> | |||

==== liveplayer.js ==== | |||

<source lang="javascript"> | |||

function liveplayer (elt) { | |||

var last_timestamp, | |||

DELAY_TIME_SECS = 5; | |||

async function poll () { | |||

var rows = await (await fetch("/cgi-bin/radioimplicancies.cgi")).json(); | |||

// console.log("rows", rows); | |||

if (rows && rows.length) { // skip empty responses so rows[rows.length-1] is defined

// get the last (most recent) item | |||

var d = rows[rows.length-1]; | |||

if (d.time && d.time != last_timestamp) { | |||

last_timestamp = d.time; | |||

console.log("liveplayer: current metadata", d); | |||

window.setTimeout(function () { | |||

var old_nowplaying = elt.querySelector(".now_playing"); | |||

if (old_nowplaying) { | |||

old_nowplaying.classList.remove("now_playing"); | |||

} | |||

var nowplaying = elt.querySelector("#"+d.title); | |||

if (nowplaying) { | |||

console.log("liveplayer: nowplaying", nowplaying); | |||

nowplaying.classList.add("now_playing"); | |||

} else { | |||

console.log("liveplayer: warning no div matching title " + d.title); | |||

} | |||

}, DELAY_TIME_SECS*1000); | |||

} | |||

} | |||

} | |||

window.setInterval(poll, 1000); | |||

} | |||

</source> | |||

== 04 == | |||

https://pad.xpub.nl/p/prototyping-2020-06-02 | |||

xtended PLAYERS / TIME BASED TEXT(s) / REPLAYING (textual) stream | |||

FROM last time, form groups around the following... | |||

* Better way to edit metadata | |||

** [https://hub.xpub.nl/sandbox/12/man/vorbiscomment.pdf vorbiscomment] supports reading / writing from a file... | |||

** Ability to remake things differently in the future (future interfaces) | |||

vorbiscomment -l speech01.ogg > speech01.meta | |||

nano speech01.meta | |||

vorbiscomment -c speech01.meta -w speech01.ogg | |||
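The file written by vorbiscomment -l is plain text with one TAG=value pair per line, which makes it easy to edit by script as well as by hand. A minimal Python sketch of reading, changing, and writing back that format (the helper names and sample tags are hypothetical):

```python
def parse_comments(text):
    """Parse TAG=value lines into a list of (tag, value) pairs, keeping order."""
    pairs = []
    for line in text.splitlines():
        if "=" not in line:
            continue
        tag, _, value = line.partition("=")
        pairs.append((tag.strip(), value))
    return pairs


def set_tag(pairs, tag, value):
    """Replace the first tag with this name (case-insensitive) or append it."""
    updated = []
    found = False
    for existing_tag, existing_value in pairs:
        if not found and existing_tag.lower() == tag.lower():
            updated.append((existing_tag, value))
            found = True
        else:
            updated.append((existing_tag, existing_value))
    if not found:
        updated.append((tag, value))
    return updated


def format_comments(pairs):
    """Serialize (tag, value) pairs back into TAG=value lines."""
    return "".join("{}={}\n".format(tag, value) for tag, value in pairs)


# Hypothetical contents of speech01.meta.
sample_meta = "title=speech01\nartist=espeak\n"
pairs = set_tag(parse_comments(sample_meta), "TITLE", "one")
pairs = set_tag(pairs, "frame", "http://foo.bar/whatever")
new_meta = format_comments(pairs)
```

The resulting text can be saved and written back into the audio file with vorbiscomment -c ... -w as shown above.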

[[File:liveplayerDiagram02.png|1000px]] | |||

* liveplayer.js | |||

** [https://issue.xpub.nl/cgi-bin/radioimplicancies.cgi radioimplicancies.cgi] | |||

** [[Prototyping/2019-2020/T3#recordmeta.py | recordmeta.py]] | |||

** [https://git.xpub.nl/XPUB/issue.xpub.nl/src/branch/master/12/liveplayer.js liveplayer.js] | |||

* Links in metadata ... frame=http://foo.bar/whatever | |||

--- | |||

schedule ... | |||

copies TO PAD | |||

* Technotexts: Epicpedia | |||

* evt make radioimplicancies.cgi lighter (add lasttimestamp) + liveplayer.js | |||

* OGG+VTT | |||

** Archive of last week's program from the playlist => OGG/MP3 + VTT

* VIZ:loudness + freq | |||

** Image representation of stream + javascript (archive visualisation) / player | |||

** waveform + alternatives ... what other kinds of time based traces make sense... ( | |||

** (live) Audio visualization (HTML5 audio + canvas) (proxy the audio via xpub / nginx ?) | |||

* Temporal Text: LOG | |||

** ARCHIVE of the liquidsoap log: How to replay this text in sync with the archive? | |||

** Make a logplayer.js to play the log synced with audio recording. | |||

* Temporal Text: PAD | |||

** ARCHIVE of the etherpad https://pad.xpub.nl/p/radio_implicancies_12.3/export/etherpad | |||

** replaying the etherpad | |||

== 05 == | |||

* What happened last time? | |||

* Archiving past episodes (12.3, 12.4) | |||

* Time for experimentation with all the elements of the radio setup: [[liquidsoap]], [[vtt]], [[Python CGI|cgi?]] | |||

* Maybe some [[sox]] [https://hub.xpub.nl/sandbox/12/man/sox.pdf man sox] | |||

* The [https://www.liquidsoap.info/doc-1.3.7/complete_case.html complete case] uses some interesting elements of liquidsoap: | |||

** playlist | |||

** random | |||

** switch | |||

** add | |||

** request | |||

How to play around with these... | |||

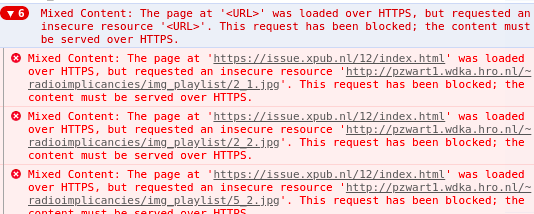

[[File:Mixedcontent.png]] | |||

== ... == | |||

LIVENESS | |||

* What possibilities exist in LIVE situations... (alternative representations of presence / writing systems / CHAT?) | |||

* Image representation of stream + javascript (archive visualisation) | |||

* Live audio visualization (HTML5 audio + canvas) (proxy the audio via xpub / nginx ?) | |||

== PLAYER == | |||

Radio webpage | |||

* Audio player (html/js) | |||

* META DATA display / COUNTDOWN | |||

* INTEGRATION with chat / etherpad ?? ... | |||

* iframes | |||

* guest book cgi??!! (socially determined captcha !) | |||

* Player: display metadata (Metadata from icecast? https://stackoverflow.com/questions/6115531/is-it-possible-to-get-icecast-metadata-from-html5-audio-element ) TRY! | |||

* Generate [[podcast]]/[[RSS]] with json + Template (template.py(python+jinja)/makefile)... [https://makezine.com/2008/02/29/how-to-make-enhanced-podc/ enhanced podcasts?]

* Stream access (scheduling) | |||

(meta/way of working Publishing via git/githook TRY! ) | |||

* random access media ?? (can an mp3 do a range request jump? or only ogg ??) depends on server, example of [http://ubusound.memoryoftheworld.org/cardiff_janet/slow_river/Cardiff-Janet_A-Large-Slow-River_2001.mp3 non-random access mp3] | |||

* ubu audio player as anti-pattern ?! | |||

* html5 media fragments, and javascript | |||

* synced visualisations ???... html triggered by stream / metadata... (or not ?!) | |||

== VOICE == | |||

* text to speech / speech synthesis

http://librivox.org

* [https://hacks.mozilla.org/2019/12/deepspeech-0-6-mozillas-speech-to-text-engine/ speech to text] | |||

* [https://m.soundcloud.com/amy_pickles/we-hope-this-email-finds-you-well-a-radio-drama-series-1 We Hope This Email Finds You Well] | |||

* festival provides *text2wave* which liquidsoap uses | |||

* microphones | |||

HRTF | |||

* ASMR - HRTF ... https://www.youtube.com/watch?v=3VOLkehNz-8 | |||

* Spatialisation of Audio: https://www.york.ac.uk/sadie-project/database.html, head-related transfer functions (HRTFs)

* CLI spatialisation of audio: ffmpeg headphone, sofalizer | |||

* https://trac.ffmpeg.org/wiki/AudioChannelManipulation#VirtualBinauralAcoustics | |||

== BOTS == | |||

* "posthuman" ... hayles | |||

Cross system / bots (2 parts?)

* mediawiki API (pull + push) .. example of epicpedia?

* etherpad API (pull + push?) .. example of etherlamp (make js version?)

* Example of live log of chat , replayed with video recording... | |||

See: https://vnsmatrix.net/projects/corpusfantastica-moo-and-lambda-projects

== LEAVE A MESSAGE / RECORDER ==

See [[Leave a message]] | |||

* https://mdn.github.io/web-dictaphone/ https://github.com/mdn/web-dictaphone/ (+ javascript looping / instrument) ... now how to save / submit ... ( | |||

* online recording interface... interface with CGI to save/serve files ? | |||

* https://developer.mozilla.org/en-US/docs/Web/API/MediaRecorder | |||

* https://github.com/mattdiamond/Recorderjs | |||

* Angeliki Diakrousi's http://radioactive.w-i-t-m.net/

* IDEA: Limit recordings to a fixed duration (data size)... | |||

== UNCATEGORIZED ;) == | |||

* [https://docs.gitea.io/en-us/webhooks/ gitea webhooks] | |||

* CGI to receive a JSON post | |||

* php to receive JSON post (example on gitea webhooks page) | |||

* CGI (making a "helloworld" / printenv with python) See [[CGI checklist]] | |||

* Don't forget "sudo a2enmod cgi" and "sudo systemctl restart apache2" to enable the cgi module in Apache... | |||

MIDI | |||

* David Cope https://computerhistory.org/blog/algorithmic-music-david-cope-and-emi/ http://artsites.ucsc.edu/faculty/cope/5000.html | |||

* Cornelius Cardew: [http://www.ubu.com/papers/cardew_ethics.html Towards an Ethic of Improvisation] [https://britishmusiccollection.org.uk/score/great-learning The Great Learning]

SUPERCUTS | |||

* Example of marking up moments of stream / media links (in etherpad ... or outside player ??)... POC: js to make media links... | |||

* Complementary [[ffmpeg]] commands to extract said portions... | |||

* EXAMPLES of making supercuts of material... | |||

* TEMPORAL TEXT: VTT | |||

* TEMPORAL TEXT: etherpad ... js player of "raw" etherpad... | |||

* EXERCISE: make COUNTDOWNS (with liquidsoap ?? + espeak) | |||

* work with schedules? timing/? | |||

* AI | |||

* ethercalc to organize things ... examples of export to JSON / CSV / HTML through template | |||

* NORMALIZATION test ... try some examples... | |||

* XMPP + links to images ?! | |||

* listener relationships... | |||

* TV! (picture ?! / visuals ??) how to broaden beyond voice... VISUAL component... | |||

* LIVE GUESTS / "call in" / voice mail, prompts, | |||

* Sockets / FIFO style pipeline bots ?? | |||

* archiving of the previous week... | |||

* listening to data(sets) | |||

* test say_metadata | |||

[[Category:Implicancies_12]] | |||

Latest revision as of 11:14, 15 March 2021

Prototyping session Spring 2020, in the context of Special Issue #12: Radio Implicancies


This page is currently being worked on.

01

In the work for the Special Issue, we will employ a strategy of working between systems

The proposition is to publish prototyping work alongside other developments of the Special Issue in a rolling fashion, i.e. following an "early and often" approach to publishing.

Focus on using the wiki as a "cookbook" -- a place to record useful techniques for others.

- Review setup with liquidsoap and icecast from the sandbox to the lurk server.

- Review setup of webhook connecting git repo with webpage on sandbox

- Example of adding a makefile to the workflow. Idea of separating sources from outputs (targets in make speak).

- Connecting to the shared terminal with tmux

So specifically:

ssh sandbox
tmux -S /tmp/radio attach -t radio -r

Where "ssh sandbox" assumes you have a "sandbox" entry in your .ssh/config file.

.gitignore

We will follow a "sources only" approach to using the git whereby only "source" files will get committed to a repo. The idea is that the tools are in place for anyone who clones the directory to "build" the other files using the makefile + tools.

For this reason the .gitignore file contains:

*.html

So that if you try to:

git add README.html

You will see:

The following paths are ignored by one of your .gitignore files:
README.html
Use -f if you really want to add them.

Why .gitignore

- Lighter/smaller repos, faster to share, with less need for huge disk space just to change.

- Fewer conflicts -- many "artificial" conflicts arise when files that get generated are also version controlled. It's simpler to track only the sources, and let programs like make take care of updating the rest from there.

more .gitignore

See the "live" .gitignore

- Also ignoring media files (use scp to place... manage differently) -- goal: keep the core repository as "light" as possible. Git is not ideal for managing big binary files (they don't tend to change in a way that lends itself to diff-ing).

- Temporary files (things like foo~)

- Python derived files / cache (python generates __pycache__ folders as needed).

(why) make radio

Why use make? Makefiles are like "executable notebooks": a really effective (and time-tested) way to document and share scripts, workflows, and workarounds using diverse tools.

What's the catch? Make comes from free software programming culture and was created to support programming work rather than publishing. However, as managing programmers is like "herding cats", the tool is extremely flexible and porous, and well integrated with the command-line and BASH scripting. As such it's compatible with the richness of command-line tools available.

- git and make not just for code: presentation about using makefiles and git in publishing workflows

practical to do's

- Update issue.xpub.nl to point http://issue.xpub.nl/12/ to (redirect to?) The working space of SI12.

- Understand and make use of the git & make based workflow.

- Familiarity using liquidsoap to create icecast streams.

- Use liquidsoap to create flexible streams that can be interrupted

- Use top to check the load on the sandbox

- Use liquidsoap as an editing tool to assemble, mix, and filter audio

- Explore some basic structural elements of liquidsoap (playlist, rotate, sequence).

Make Noise

- the colors of noise (using sox to generate white, brown, and pink noise)
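The notes use sox for this; as a complementary sketch (not the workshop's method), the difference between white and brown noise can be shown in a few lines of Python. White noise draws every sample independently, while brown noise is a random walk, so it drifts slowly and sounds darker. The function names, the step size and the 16-bit stereo format are assumptions chosen for illustration.

```python
import random
import struct
import wave


def white_noise(n, rng):
    """n samples in [-1, 1]; every sample is independent of the others."""
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def brown_noise(n, rng, step=0.02):
    """n samples of a random walk, clamped to [-1, 1] (step is an assumed value)."""
    out, x = [], 0.0
    for _ in range(n):
        x = max(-1.0, min(1.0, x + rng.uniform(-step, step)))
        out.append(x)
    return out


def pcm16(samples, channels=2):
    """Pack float samples as little-endian 16-bit PCM, copied to every channel."""
    return b"".join(struct.pack("<h", int(s * 32767)) * channels for s in samples)


def write_wav(path, samples, rate=44100, channels=2):
    """Write the samples to a WAV file (stereo by default)."""
    with wave.open(path, "wb") as w:
        w.setnchannels(channels)
        w.setsampwidth(2)  # 2 bytes = 16 bits per sample
        w.setframerate(rate)
        w.writeframes(pcm16(samples, channels))
```

For example, `write_wav("brown.wav", brown_noise(44100 * 5, random.Random()))` would write five seconds of stereo brown noise.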

Playlist

Liquidsoap's playlist

Try...

- reload

- reload_mode

Exercise:

1.

ssh to the sandbox

cd
cp /var/www/html/12/liquidsoap/playlist.liq .
cp /var/www/html/12/liquidsoap/playlist.m3u .
cp /var/www/html/12/liquidsoap/passwords.liq .

Copy the whole folder somewhere

cp -r /var/www/html/12/liquidsoap .

or

git clone https://git.xpub.nl/XPUB/SI12-prototyping.git

(read-only)

Edit it with nano... Make sure you change the log file to be specific to you...

set("log.file.path","/tmp/<script>_YOURNAME.log")

and try to run it...

liquidsoap -v playlist.liq

2. Clone the repository to your laptop...

apt install liquidsoap

brew install liquidsoap

git clone ssh://git@git.xpub.nl:2501/XPUB/SI12-prototyping.git

Change the playlist to list local audio files... (NB: they need to be stereo)

Change "radioimplicancies.ogg" to whatever name you want...

Live or Fallback

- liquidsoap fallbacks

- mixing diverse sources of material...

- working with silence

- dynamics compression and loudness

- test streaming to an interruptible mount point...

- BASIC cutting / format manipulation with ffmpeg such as extracting chunks with a start / duration
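As a sketch of the last point, extracting a chunk with a start and a duration comes down to ffmpeg's `-ss` (start) and `-t` (duration) options. The helper below only builds the command as a list, so it can be inspected before running; the function names and file names are hypothetical.

```python
import subprocess


def extract_cmd(src, dst, start, duration):
    """Build an ffmpeg command that cuts `duration` seconds starting at `start`.

    -y   overwrite the output file if it exists
    -ss  seek to the start position (given before -i, so applied to the input)
    -t   stop writing after this many seconds
    """
    return ["ffmpeg", "-y", "-ss", str(start), "-i", src, "-t", str(duration), dst]


def extract(src, dst, start, duration):
    """Run the command; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(extract_cmd(src, dst, start, duration), check=True)
```

Calling `extract("counting.ogg", "chunk.ogg", 90, 30)` would re-encode the thirty seconds starting at 1:30 into a new file, assuming ffmpeg is installed.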

Mixing audio sources

- Filtering for radio/streams:

- compression (as in dynamic range compression, which is different from audio data compression)

- "Loudness" and "Normalisation"... What is replaygain and why can it help?

- liquidsoap for sound processing
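To make the idea of "normalisation" concrete, here is a deliberately simplified sketch: measure the level of a file, work out the gain in decibels needed to reach a target, and apply that gain to every sample. ReplayGain itself uses a perceptual loudness model rather than plain RMS, so this only illustrates the principle; the target of -20 dB and all names are assumptions.

```python
import math


def rms(samples):
    """Root-mean-square level of float samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def to_db(level):
    """Convert a linear level to decibels relative to full scale."""
    return 20 * math.log10(level)


def gain_to_target(samples, target_db=-20.0):
    """Gain in dB that would bring the samples to the target level."""
    return target_db - to_db(rms(samples))


def apply_gain(samples, gain_db):
    """Scale every sample by the linear factor for gain_db."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]
```

The point of storing the gain as metadata (rather than rewriting the audio) is that the original samples stay untouched and the player decides whether to apply it.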

Resources

- About the materiality of digital audio: samples, sampling rate, sample width, format, channels... See Sound and listening

- Playing "raw" and non-sound data ... See Sonification

- field recordings @ freemusicarchive

- William Burroughs: cut-ups + The Electronic Revolution

- Example: spectrumsort

- sound art on ubu

Assignment(s) for next week

- Make a "self-referential" radio program with liquidsoap... that is one where the content and the material of its transmission and its tools of production are reflected.

- Document your use of liquidsoap, or other tool(s) in the xpub wiki in the style of a (code) "Cookbook" recipe.

Listen together

- Listen: Alvin Lucier's I am Sitting in a room

- Listen: Janet Cardiff A Large Slow River

- Listen/watch: Laurie Anderson microphone piece

- Listen/watch: Gary Hill

Also for next week, check out Radio Aporee.

02

https://pad.xpub.nl/p/prototyping190520

Set of things to work on / research, make small groups, work on the tasks, report back

- Play/testing an interruptible stream

- How do we archive a liquidsoap program (adding a file as output to the stream)

- Render a waveform with ffmpeg

- "Simple" interface (mapping timeupdate to SVG/canvas)

Exercise (afternoon)

try to type in the following, reading.. Using the "player code"

ssh sandbox
cd
mkdir -p public_html
cd public_html

look at: https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/

You should see your "homepage" on the sandbox (maybe empty)... Copy the player folder to your public_html...

cp -r /var/www/html/12/player/ .
cd player

now you should see the files here:

https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/player/

ls
cat numbers.txt
cat numbers.txt | bash -x speaklines.sh
ls

cat speaklines.sh

(if you want to modify the numbers)

nano numbers.txt

then...

cat numbers.txt | bash -x speaklines.sh

again...

LOOK at your folder from the browser: https://hub.xpub.nl/sandbox/~YOURSANDBOXUSERNAME/player/

ogginfo speech01.ogg
ogginfo speech02.ogg

ls speech*.ogg
ls speech*.ogg > counting.m3u
cat counting.m3u
cat counting.file.liq

liquidsoap -v counting.file.liq

Take a look at counting.ogg (in the browser)

ogginfo counting.ogg

Take a look at dream.html (open it in your browser).

Open the console and look at the debugging messages while you scroll around. In Firefox the console is under Tools > Web Developer > Web Console; while the video is playing, you should see the subtitle text appear there.

(ctrl-shift-I or ctrl-shift-k depending on your browser; cmd-option-j in Chrome on a Mac)

ogginfo counting.ogg | python3 ogginfo-to-srt.py --json
ogginfo counting.ogg | python3 ogginfo-to-srt.py
ogginfo counting.ogg | python3 ogginfo-to-srt.py --vtt

and finally

ogginfo counting.ogg | python3 ogginfo-to-srt.py --vtt > counting.vtt

cp dream.html counting.html

Adjust the html to use counting.ogg and counting.vtt (no path, just the filename) Change the video tag to an audio tag...
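The counting.vtt file produced in the steps above is plain text: a `WEBVTT` header followed by cues, each with a start and end time and the text to show. The source of ogginfo-to-srt.py is not reproduced on this page, so the following is not that script, only a minimal sketch of how a list of timed cues could be written out as WebVTT. The cue data in the usage note is made up.

```python
def vtt_time(seconds):
    """Format seconds as a WebVTT timestamp, hh:mm:ss.mmm."""
    ms = round(seconds * 1000)
    h, rem = divmod(ms, 3600000)
    m, rem = divmod(rem, 60000)
    s, ms = divmod(rem, 1000)
    return "{:02d}:{:02d}:{:02d}.{:03d}".format(h, m, s, ms)


def to_vtt(cues):
    """Turn (start, end, text) tuples into the text of a WebVTT file."""
    lines = ["WEBVTT", ""]
    for start, end, text in cues:
        lines.append("{} --> {}".format(vtt_time(start), vtt_time(end)))
        lines.append(text)
        lines.append("")  # a blank line closes each cue
    return "\n".join(lines)
```

For instance, `to_vtt([(0, 1.5, "one"), (1.5, 3.0, "two")])` gives two cues that a `<track>` element could load next to the audio.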

links:

https://trac.ffmpeg.org/wiki/Waveform

try

ffmpeg -i counting.ogg -filter_complex "showwavespic=s=1024x120:colors=000000" -frames:v 1 -y counting.png

Notes

- "Live elements" + ways to integrate (metadata) with player page.

- "Radio programs" with liquidsoap... for instance, mixing a set of quotes, music or how might "jingles" function in a longer stream... What structural elements exist in liquidsoap to compose a sequence (like rotate, mix)

- Review / extend the between systems diagram -- Q: How to design a useful system for the metadata.

- Frames (and iframes) ... a brief history? Window.postMessage

- XSS/"Cross-site scripting": Using document.domain, CORS, cgi/php relays, JSONP

- The difficult history of "shared whiteboards" ... Eventual consistency http://www.processwave.org/ recent iPad app VP miro might also look at alternatives like hotglue or YWOT -- projects that are obscure (why? "artistic" nature/lack of entrepreneur-speak, lack of "cutting edge" technology, radical take on collaboration, ultimately trapped in proof of concept, but not really usable?) ... POC with SVG editor and using gitea API as backend ? (is there an API for committing files?)... granularity of collaboration ... "realtime" vs. "shared"... what if one-at-a-time editing is OK, given a workable/fluid social protocol around it?

- authentication

- Visualization "sound cloud" style of a rendered stream ... showing structure...

03

RADIO PROTOCOLS aka METADATA, or STREAMING TEXT

https://pad.xpub.nl/p/prototyping260520

Radio Protocols

In one sense ("media"), radio protocols could be considered the conventions of a radio station, involving structural elements of "programming" in the sense of what gets played when. It involves "formats" (weather report, traffic report, requests), schedules (news bulletin on the hour), and programs (Jazz brunch).

In an informatics/computer science context, protocols are also social agreements made around technical formats (like HTML, ogg/mp3), often concerning how different programs / processes communicate with each other, typically over a network. For instance, HTTP is the protocol used between web browser and web server to request and then transmit the contents of a web page. Streaming media has specialized protocols (like ...), though the Icecast server we use uses a variation of HTTP. Icecast also supports a related protocol called ICY, used for managing/requesting the metadata of a stream.

Protocols can range from "ad hoc" best practices / hacks, to highly formalized processes.

Many protocols are based on simple exchanges of text, typically formatted in lines (ie each line is a significant "unit" for transmitting and requesting information from a server).
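As a small illustration of such a line-based exchange, the sketch below builds an HTTP-style request and splits a response into its status line, headers and body. The response text in the usage note is invented for the example (it is not captured from the Icecast server), and the function names are hypothetical.

```python
def build_request(path, host):
    """A minimal HTTP/1.0 request: one line per unit, a blank line to finish."""
    return "GET {} HTTP/1.0\r\nHost: {}\r\n\r\n".format(path, host)


def parse_response(raw):
    """Split a response into (status line, headers dict, body)."""
    head, _, body = raw.partition("\r\n\r\n")
    lines = head.split("\r\n")
    status = lines[0]
    headers = {}
    for line in lines[1:]:
        # each header line reads "Name: value"
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return status, headers, body
```

Given a made-up response such as `"HTTP/1.0 200 OK\r\nContent-Type: application/ogg\r\n\r\n"`, `parse_response` returns the status line, a dictionary with one `content-type` entry, and an empty body.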

Based on the question, "how might we share the terminal as part of the broadcast?", we are going to look at how the textual output of the liquidsoap server could (1) be transmitted to listeners, and (2) be filtered and used as a novel protocol for the Radio Implicancies transmissions.

Consider searching for "syncing metadata with icecast"...

preparing and testing a stream with replay gain

How might we make a test stream to hear smaller samples of each element in a playlist?

And here, a somewhat freaky example of audio level normalization and home automation gone perhaps too far.

"Random Access"

Dynamic playlist(s)

- Add (random) excerpts of "raw" materials ?!

- How to combine one or more playlists, with randomness.

- How to use jingles to "join" elements

- How might "bumpers" function to mark beginnings and ends of "programs".

- How might we make the program more inviting for outside/new listeners? (Meta programming?)

regular expressions

pipeserver

Use cue_cut and annotation to prepare a test stream.

- How might we filter the messages that can be sent to the stream (how to filter with commandline)

- Add metadata to the audio files

- Add a metadata "reporter" to the liq

- Run liq + pipe to pipeserver

- Adjust pipeserver.html
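For the filtering step, a regular expression can pick apart each log line and decide whether to pass it on. The sketch below assumes log lines of the form `date time [source:level] message`; that layout and the sample text in the usage note are assumptions to check against the real log before relying on it.

```python
import re

# Assumed line layout: 2020/05/26 14:00:01 [source:3] message
LOG_LINE = re.compile(
    r"^(?P<date>\d{4}/\d{2}/\d{2}) (?P<time>\d{2}:\d{2}:\d{2}) "
    r"\[(?P<source>[^\]]*):(?P<level>\d)\] (?P<message>.*)$"
)


def filter_lines(lines, pattern, max_level=3):
    """Yield "time message" for lines whose message matches pattern.

    Lines that do not fit the assumed layout, or whose level number is
    above max_level, are dropped.
    """
    wanted = re.compile(pattern)
    for line in lines:
        m = LOG_LINE.match(line.rstrip("\n"))
        if m and int(m["level"]) <= max_level and wanted.search(m["message"]):
            yield m["time"] + " " + m["message"]
```

Used on `sys.stdin`, a function like this could sit between `tail -f` and the pipeserver so that only selected messages reach listeners.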

commandline calisthenics

ls *.ogg | wc -l
ls *.ogg > playlist.m3u
cat playlist.m3u | wc -l

# multitasking commandline style

nano foo.txt
ctrl-z
fg

ctrl-z
ps
jobs

# PIDs are process ids (shown by ps); jobs lists the job numbers that fg understands. Note which job is nano, then...

fg %N

ps aux | grep liquidsoap

creating a custom pipeserver interface

cd
cd public_html
cp /home/mmurtaugh/pipeserver/pipeserver.html .

nano pipeserver.html

Add

<audio controls src="http://echo.lurk.org:999/radioimplicancies_test.ogg"></audio>

Change line 31 to look like:

var ws_addr = 'ws://'+window.location.host+'/pipe',

notes

- replaygain, Loudness normalization vs. Dynamic normalisation / compression: script to test first x sec each source... just liquidsoap?

- metadata hook

- liquidsoap server? / telnet?

- countdown ... (say protocol, add?)

- vorbisgain is a tool following the ReplayGain algorithm, that adds metadata to an OGG audio file describing the loudness of the file. It was created for music players to be able to keep a consistent audio level between different albums.

- pipebot?

- materiality of timed text ... epicpedia ... etherlamp...

- chat archive to vtt

- podcast feed ?

broadcast notes

Set a log file on radio2:

set("log.file",true)

set("log.file.path","<script>.log")

set("log.stdout",true)

Follow the log with tail -f (follow) and pipe to the pipeserver:

tail -f 12.2.livetest.log | pipeserver.py --host 0.0.0.0

shell.html

aka pipeserver frontend...

<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>shell</title>
<style>
body {
    background: #888;
}
body.connected {
    background: white;
}
#shell {
    white-space: pre-wrap;
    height: 25em;
    width: 100%;
    font-family: monospace;
    color: white;
    font-size: 14px;
    background: black;
    overflow-y: auto;
}
</style>
</head>
<body>
<p><span id="connections">0</span> active connections</p>
<div id="shell"></div>
<script>
var ws_addr = 'wss://'+window.location.host+"/12/pipe/",
    sock = null,
    shell = document.getElementById("shell"),
    connections = document.getElementById("connections");

function connect () {
    sock = new WebSocket(ws_addr);
    sock.onopen = function (event) {
        console.log("socket opened");
        document.body.classList.add("connected");
        // sock.send(JSON.stringify({
        //     src: "connect",
        // }));
    };
    sock.onmessage = function (event) {
        // console.log("message", event);
        if (typeof(event.data) == "string") {
            var msg = JSON.parse(event.data);
            // console.log("message JSON", msg);
            if (msg.src == "stdin" && msg.line) {
                // Show all lines
                var line = document.createElement("div");
                line.classList.add("line");
                line.innerHTML = msg.line;
                shell.appendChild(line);
                // scroll to bottom
                shell.scrollTop = shell.scrollHeight;
            } else if (msg.src == "connect") {
                connections.innerHTML = msg.connections;
            }
        }
    };
    sock.onclose = function (event) {
        // console.log("socket closed");
        connections.innerHTML = "?";
        document.body.classList.remove("connected");
        sock = null;
        window.setTimeout(connect, 2500);
    }
}
connect();
</script>
</body>
</html>
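The message handler in shell.html expects each WebSocket message to be a JSON object: `{"src": "stdin", "line": ...}` for a line of text, or `{"src": "connect", "connections": ...}` for the listener count. The source of pipeserver.py is not shown here, so the following is only a sketch of how the sending side might wrap lines into that shape; the function names are hypothetical.

```python
import json


def stdin_message(line):
    """Wrap one line of input in the shape shell.html displays."""
    return json.dumps({"src": "stdin", "line": line.rstrip("\n")})


def connect_message(connections):
    """Report the number of active connections."""
    return json.dumps({"src": "connect", "connections": connections})


def messages(lines):
    """Turn an iterable of lines (e.g. sys.stdin) into JSON messages."""
    for line in lines:
        yield stdin_message(line)
```

Note that the page tests `msg.line` for truthiness, so an empty line would be sent but not displayed.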

live_interrupt_withmetadata.liq

"RADIO1" program, with a callback to recordmeta.py, a script that relays the data (via POSTing to a cgi script on (issue.)xpub.nl)

# /var/www/radio/live_interrupt_withmetadata.liq
set("log.file",false)
# set("log.file.path","/tmp/<script>.log")
set("log.stdout",true)

%include "/srv/radio/passwords.liq"

# Add the ability to relay live shows
radio =
  fallback(track_sensitive=false,
    [input.http("http://echo.lurk.org:999/radioimplicancies_live.ogg"),
     single("brownnoise.mp3")])

###################
# record metadata
scripts="/var/www/html/radio/"

def on_meta (meta)
  # call recordmeta.py with json_of(meta)
  data = json_of(compact=true,meta)
  system (scripts^"recordmeta.py --timestamp --post https://issue.xpub.nl/cgi-bin/radioimplicancies.cgi "^quote(data))
  # PRINT (debugging)
  #print("---STARTMETA---")
  #list.iter(fun (i) -> print("meta:"^fst(i)^": "^snd(i)), meta)
  # data = string.concat(separator="\n",list.map(fun (i) -> fst(i)^":"^snd(i), meta))
  #print ("---ENDMETA---")
end

radio = on_metadata(on_meta, radio)

########################################
radio = mksafe(radio)

output.icecast(%vorbis,
  host = ICECAST_SERVER_HOST, port = ICECAST_SERVER_PORT,
  password = ICECAST_SERVER_PASSWORD, mount = "radioimplicancies.ogg",
  radio)

recordmeta.py

#!/usr/bin/env python3
import sys, argparse, datetime, json
import requests

# todo: add timestamp
ap = argparse.ArgumentParser("")
ap.add_argument("data")
ap.add_argument("--post")
ap.add_argument("--logfile")
ap.add_argument("--timestamp", default=False, action="store_true")
args = ap.parse_args()

data = json.loads(args.data)
if args.timestamp:
    data['time'] = datetime.datetime.utcnow().isoformat()
if args.logfile:
    with open (args.logfile, "a") as f:
        print (json.dumps(data), file=f)
if args.post:
    r = requests.post(args.post, json=data)
    resp = r.content.decode("utf-8").strip()
    print ("POST response ({}): {}".format(r.status_code, resp))

radioimplicancies.cgi

liveplayer.js

function liveplayer (elt) {
    var last_timestamp,
        DELAY_TIME_SECS = 5;

    async function poll () {
        var rows = await (await fetch("/cgi-bin/radioimplicancies.cgi")).json();
        // console.log("rows", rows);
        // skip when nothing has been recorded yet (empty list)
        if (rows && rows.length) {
            // get the last (most recent) item
            var d = rows[rows.length-1];
            if (d.time && d.time != last_timestamp) {
                last_timestamp = d.time;
                console.log("liveplayer: current metadata", d);
                // wait a little so the highlight roughly matches the stream delay
                window.setTimeout(function () {
                    var old_nowplaying = elt.querySelector(".now_playing");
                    if (old_nowplaying) {
                        old_nowplaying.classList.remove("now_playing");
                    }
                    // the title is used as an element id, so it must be a valid id
                    var nowplaying = elt.querySelector("#"+d.title);
                    if (nowplaying) {
                        console.log("liveplayer: nowplaying", nowplaying);
                        nowplaying.classList.add("now_playing");
                    } else {
                        console.log("liveplayer: warning no div matching title " + d.title);
                    }
                }, DELAY_TIME_SECS*1000);
            }
        }
    }
    window.setInterval(poll, 1000);
}

04

https://pad.xpub.nl/p/prototyping-2020-06-02

xtended PLAYERS / TIME BASED TEXT(s) / REPLAYING (textual) stream

FROM last time, form groups around the following...

- Better way to edit metadata

- vorbiscomment supports reading / writing from a file...

- Ability to remake things differently in the future (future interfaces)

vorbiscomment -l speech01.ogg > speech01.meta
nano speech01.meta
vorbiscomment -c speech01.meta -w speech01.ogg

- liveplayer.js

- Links in metadata ... frame=http://foo.bar/whatever
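The .meta file written by `vorbiscomment -l` is a plain list of `NAME=value` lines, which makes it easy to edit with a script instead of nano. The following sketch reads such a file, sets one field (for example the `frame` link mentioned above) and writes the lines back out; the function names are hypothetical, and field names are compared case-insensitively.

```python
def parse_comments(text):
    """Read NAME=value lines into a list of (name, value) pairs."""
    tags = []
    for line in text.splitlines():
        if "=" not in line:
            continue  # skip blank or malformed lines
        name, _, value = line.partition("=")
        tags.append((name.strip(), value))
    return tags


def set_tag(tags, name, value):
    """Return a new list where `name` has exactly one entry, at the end."""
    kept = [(n, v) for n, v in tags if n.lower() != name.lower()]
    return kept + [(name, value)]


def format_comments(tags):
    """Write the pairs back as NAME=value lines."""
    return "".join("{}={}\n".format(n, v) for n, v in tags)
```

The result of `format_comments` can be saved as speech01.meta and written into the audio file with the `vorbiscomment -c speech01.meta -w speech01.ogg` command shown above.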

---

schedule ... copies TO PAD

- Technotexts: Epicpedia

- evt make radioimplicancies.cgi lighter (add lasttimestamp) + liveplayer.js

- OGG+VTT

- Archive of last week's program from the playlist => OGG/MP3 + VTT

- VIZ:loudness + freq

- Image representation of stream + javascript (archive visualisation) / player

- waveform + alternatives ... what other kinds of time based traces make sense...

- (live) Audio visualization (HTML5 audio + canvas) (proxy the audio via xpub / nginx ?)

- Temporal Text: LOG

- ARCHIVE of the liquidsoap log: How to replay this text in sync with the archive?

- Make a logplayer.js to play the log synced with audio recording.

- Temporal Text: PAD

- ARCHIVE of the etherpad https://pad.xpub.nl/p/radio_implicancies_12.3/export/etherpad

- replaying the etherpad
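One possible starting point for replaying the log in sync with a recording is to convert each line's timestamp into an offset in seconds from the moment the recording started. The sketch below assumes every log line begins with a `YYYY/MM/DD HH:MM:SS` timestamp followed by a space; both that layout and the function name are assumptions, so check them against the archived log.

```python
from datetime import datetime

STAMP = "%Y/%m/%d %H:%M:%S"  # assumed timestamp layout, 19 characters long


def log_offsets(lines, recording_start):
    """Yield (seconds since recording_start, rest of the line).

    Lines without a readable timestamp, or from before the start,
    are skipped.
    """
    start = datetime.strptime(recording_start, STAMP)
    for line in lines:
        stamp, text = line[:19], line[20:].rstrip("\n")
        try:
            when = datetime.strptime(stamp, STAMP)
        except ValueError:
            continue
        offset = (when - start).total_seconds()
        if offset >= 0:
            yield offset, text
```

The resulting pairs could then be written out as VTT cues, or compared against the `currentTime` of an audio element by a player script.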

05

- What happened last time?

- Archiving past episodes (12.3, 12.4)

- Time for experimentation with all the elements of the radio setup: liquidsoap, vtt, cgi?

- Maybe some sox man sox

- The complete case uses some interesting elements of liquidsoap:

- playlist

- random

- switch

- add

- request

How to play around with these...

...

LIVENESS

- What possibilities exist in LIVE situations... (alternative representations of presence / writing systems / CHAT?)

- Image representation of stream + javascript (archive visualisation)

- Live audio visualization (HTML5 audio + canvas) (proxy the audio via xpub / nginx ?)

PLAYER

Radio webpage

- Audio player (html/js)

- META DATA display / COUNTDOWN

- INTEGRATION with chat / etherpad ?? ...

- iframes

- guest book cgi??!! (socially determined captcha !)

- Player: display metadata (Metadata from icecast? https://stackoverflow.com/questions/6115531/is-it-possible-to-get-icecast-metadata-from-html5-audio-element ) TRY!

- Generate podcast/RSS with json + Template (template.py(python+jinja)/makefile)... enhanced podcasts?

- Stream access (scheduling)

(meta/way of working Publishing via git/githook TRY! )

- random access media ?? (can an mp3 do a range request jump? or only ogg ??) depends on server, example of non-random access mp3

- ubu audio player as anti-pattern ?!

- html5 media fragments, and javascript

- synced visualisations ???... html triggered by stream / metadata... (or not ?!)
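For the podcast/RSS idea in the list above, here is a minimal sketch of turning a JSON description of episodes into an RSS 2.0 feed. It uses Python's built-in ElementTree instead of the jinja template mentioned in the notes, purely to stay self-contained; the JSON layout (`channel`, `episodes`, `bytes`, and so on) is invented for the example.

```python
import json
import xml.etree.ElementTree as ET


def make_feed(channel, episodes):
    """Build an RSS 2.0 document as a string."""
    rss = ET.Element("rss", {"version": "2.0"})
    ch = ET.SubElement(rss, "channel")
    for key in ("title", "link", "description"):
        ET.SubElement(ch, key).text = channel[key]
    for ep in episodes:
        item = ET.SubElement(ch, "item")
        ET.SubElement(item, "title").text = ep["title"]
        # the enclosure is what makes a feed item a podcast episode
        ET.SubElement(item, "enclosure", {
            "url": ep["url"],
            "length": str(ep["bytes"]),
            "type": ep["type"],
        })
    return ET.tostring(rss, encoding="unicode")


def feed_from_json(text):
    """Read the (hypothetical) JSON layout and return the feed."""
    data = json.loads(text)
    return make_feed(data["channel"], data["episodes"])
```

In a makefile-based workflow the JSON would be the source and the feed a generated target, in line with the "sources only" approach described earlier.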

VOICE

- text to speech / speech synthesis

Specifically the case of [MBROLA](https://github.com/numediart/MBROLA) and the "free" but not "libre" voices. https://github.com/numediart/MBROLA-voices

Espeak + MBrola https://github.com/espeak-ng/espeak-ng/blob/master/docs/mbrola.md

Projects spɛl ænd spik. hellocatfood (antonio roberts) https://www.hellocatfood.com/spell-and-speak/

"real" VOICES http://librivox.org

- speech to text

- We Hope This Email Finds You Well

- festival provides *text2wave* which liquidsoap uses

- microphones

HRTF

- ASMR - HRTF ... https://www.youtube.com/watch?v=3VOLkehNz-8

- Spatialisation of Audio: https://www.york.ac.uk/sadie-project/database.html, head-related transfer functions (HRTFs)

- CLI spatialisation of audio: ffmpeg headphone, sofalizer

- https://trac.ffmpeg.org/wiki/AudioChannelManipulation#VirtualBinauralAcoustics

BOTS

- "posthuman" ... hayles

Cross system / bots (2 parts?)

- infobot

- eliza

- mud/moo

- Bot / chatroom

- Radio stream / schedule

- mediawiki API (pull + push) .. example of epicpedia?

- etherpad API (pull + push?) .. example of etherlamp (make js version?)

- Example of live log of chat , replayed with video recording...

See: https://vnsmatrix.net/projects/corpusfantastica-moo-and-lambda-projects

LEAVE A MESSAGE / RECORDER

See Leave a message

- https://mdn.github.io/web-dictaphone/ https://github.com/mdn/web-dictaphone/ (+ javascript looping / instrument) ... now how to save / submit ...

- online recording interface... interface with CGI to save/serve files ?

- https://developer.mozilla.org/en-US/docs/Web/API/MediaRecorder

- https://github.com/mattdiamond/Recorderjs

- Angeliki Diakrousis http://radioactive.w-i-t-m.net/

- IDEA: Limit recordings to a fixed duration (data size)...

UNCATEGORIZED ;)

- gitea webhooks

- CGI to receive a JSON post

- php to receive JSON post (example on gitea webhooks page)

- CGI (making a "helloworld" / printenv with python) See CGI checklist

- Don't forget "sudo a2enmod cgi" and "sudo systemctl restart apache2" to enable the cgi module in Apache...
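As a sketch of the "CGI to receive a JSON post" item, the logic can be kept in a plain function that takes the request method and body and returns a status and a JSON response. This is not the radioimplicancies.cgi used for the broadcasts (its source is not reproduced on this page); the names, the in-memory `rows` list and the limit of 100 entries are assumptions. A real script would read `REQUEST_METHOD` and `CONTENT_LENGTH` from the environment, read the body from standard input, and keep the rows in a file.

```python
import json


def handle(method, body, rows, max_rows=100):
    """Process one request and return (status, response body).

    POST appends the posted JSON object to rows (keeping only the
    newest max_rows); any other method returns the rows as JSON.
    """
    if method == "POST":
        try:
            item = json.loads(body)
        except ValueError:
            return "400 Bad Request", json.dumps({"error": "invalid JSON"})
        rows.append(item)
        del rows[:-max_rows]  # drop the oldest entries beyond the limit
        return "200 OK", json.dumps({"ok": True})
    return "200 OK", json.dumps(rows)


def cgi_output(status, body):
    """Format what a CGI script prints: headers, a blank line, then the body."""
    return "Status: {}\r\nContent-Type: application/json\r\n\r\n{}".format(status, body)
```

Keeping the handling separate from the input and output makes it possible to test the script from the command line before enabling it in Apache.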

MIDI

- David Cope https://computerhistory.org/blog/algorithmic-music-david-cope-and-emi/ http://artsites.ucsc.edu/faculty/cope/5000.html

- Cornelius Cardew: Towards an Ethic of Improvisation The Great Learning

SUPERCUTS

- Example of marking up moments of stream / media links (in etherpad ... or outside player ??)... POC: js to make media links...

- Complementary ffmpeg commands to extract said portions...

- EXAMPLES of making supercuts of material...

- TEMPORAL TEXT: VTT

- TEMPORAL TEXT: etherpad ... js player of "raw" etherpad...

- EXERCISE: make COUNTDOWNS (with liquidsoap ?? + espeak)

- work with schedules? timing/?

- AI

- ethercalc to organize things ... examples of export to JSON / CSV / HTML through template

- NORMALIZATION test ... try some examples...

- XMPP + links to images ?!

- listener relationships...

- TV! (picture ?! / visuals ??) how to broaden beyond voice... VISUAL component...

- LIVE GUESTS / "call in" / voice mail, prompts,

- Sockets / FIFO style pipeline bots ??

- archiving of the previous week...

- listening to data(sets)

- test say_metadata