SPECIAL ISSUE 15 MARTIN DAILY BOARD

Overview

Links

- Radio Implicancies https://pzwiki.wdka.nl/mediadesign/Category:Implicancies

- Radio Implicancies Conference Room https://conf.domainepublic.net/radioimplicancies

- Implicancies Resources https://pzwiki.wdka.nl/mediadesign/Implicancies_Resources

- Implicancies Guests + contributors https://pzwiki.wdka.nl/mediadesign/Implicancies_Guests_%2B_contributors#Steve_Rushton

- Aesthetic Programming https://aesthetic-programming.net/

- Diffraction vs Reflection https://possiblebodies.constantvzw.org/files/implicancies/reflection-diffraction.pdf

- Music with Python https://wiki.python.org/moin/PythonInMusic (note: install GitHub)

Hotlines

- https://hotline.xpub.nl/grs2 Steve

- https://conf.domainepublic.net/radioimplicancies Femke

- https://hotline.xpub.nl/prototyping Manetta and Michael

Pads

- Week 1 Introduction https://pad.xpub.nl/p/15.1

- Week 2 Prototyping w/ Manetta https://pad.xpub.nl/p/15.2-prototyping-Monday

- Week 2 w/ Steve https://pad.xpub.nl/p/Mythodologies19-4-21

- Week 2 w/ Michael https://pad.xpub.nl/p/2021-04-21-prototyping

- Week 3 https://pad.xpub.nl/p/15.3

- Week 3 w/ Michael https://pad.xpub.nl/p/mythodologies11-5-21

- Week 3 w/ Jacopo and Nami https://pad.xpub.nl/p/15-group5

Keywords

- Implicancies vocabulary https://pad.constantvzw.org/p/implicanties

- Situated knowledges (Donna Haraway)

- Worlding (Donna Haraway)

_ The making of worlds: language, science and technology are forces that produce worlds. The world doesn't exist without language; language is an active part of the world.

- Poetics of Relation (Edouard Glissant)

- Entanglement (Karen Barad)

_ Entanglement assumes that there are different things being knotted together: the state of being knotted together.

_ Denise Ferreira Da Silva introduces "implicancies": things being co-response-able for each other, with particles as the starting point.

- Response-ability (Barad + Haraway)

- Implicancies (Denise Ferreira Da Silva)

_ The term "implicancies" brings together entanglement, response-ability and relations. That is why this SI is called Radio Implicancies.

- Techno-epistemologies:

_ Technological knowledge systems (metadata, how algorithms produce data, etc.)

- Techno-ontology: how social media puts a filter on the world. We know a lot about bodies through scanning technologies. In line with that, algorithms are part of the world while structuring it. So when people claim to work on "fair AI" or "fair algorithms", they place algorithms outside the worlding forces.

_ Donna Haraway's frames of reference: biology, US-based, 75 years old

https://www.youtube.com/watch?v=J2DcAf16zeI

_ The second person who is important for thinking through relations: Edouard Glissant, in the French context.

Resources

From https://pzwiki.wdka.nl/mediadesign/Implicancies_Resources

Watching / listening

- Ron Morrison (2018), Decoding space: Liquid infrastructures https://vimeo.com/showcase/5551892/video/306993793 + https://medium.com/digital-earth/when-the-history-repeats-itself-lithium-extraction-in-d-r-congo-b372090c4a5c

- Aimee Bahng (2017), Plasmodial Improprieties: Octavia E. Butler, Slime Molds, and Imagining a Femi-Queer Commons https://soundcloud.com/thehuntington/plasmodial-improprieties

- Martine Syms (2013), The Mundane Afrofuturist Manifesto https://www.kcet.org/shows/artbound/episodes/the-mundane-afrofuturist-manifesto

- Ursula K. Le Guin (1985), She Unnames Them http://ursulakleguinarchive.com/MP3s/BuffaloGals.html

- Edouard Glissant (Manthia Diawara, 2010) One World in Relation https://www.youtube.com/watch?v=aTNVe_BAELY

- Denise Ferreira Da Silva, Arjuna Neuman (2019), Four waters: deep implicancy https://hub.xpub.nl/sandboxradio/ingredients/4%20WATERS%20-%20DEEP%20IMPLICANCY.mp4

- Four Rooms – Denise Ferreira Da Silva (2020), on the Logics of Exclusion and Obliteration https://video.constantvzw.org/Four_rooms/Four%20Rooms,%20Denise%20Ferreira%20Da%20Silva,%20on%20the%20Logics%20of%20Exclusion%20and%20Oblitera...-152937289377315.mp4

- Natalie Jeremijenko + Kate Rich Track 12: The mutual synchronisation of coupled oscillators (This was one track from a CD released as an "insert" to Mute Magazine Vol 1, No. 21, September 2001). The other tracks are available as well. In the recording, Jeremijenko speaks; the audio production/remix was done by Rich. They worked together as the Bureau of Inverse Technology.

Reading

- Romi Ron Morrison (2019), Gaps between the digits. On the fleshy unknowns of the HUMAN https://benjamins.com/catalog/idj.25.1.05mor

- Oulimata Gueye (2019), No Congo, no technologies https://medium.com/digital-earth/no-congo-no-technologies-163ea2caec0a

- Martine Syms (2013), The Mundane Afrofuturist Manifesto http://thirdrailquarterly.org/martine-syms-the-mundane-afrofuturist-manifesto/

- Ramon Amaro (2019), Artificial intelligence: Warped, colorful forms and their unclear geometries https://research.gold.ac.uk/27052/1/SoU_AI%2C%20warped%2C%20colorful%20forms....pdf

- Sylvia Wynter (2015), On Being Human as Praxis -- interview with Katherine McKittrick

- Donna Haraway (2019), A Giant Bumptious Litter: Donna Haraway on Truth, Technology, and Resisting Extinction https://logicmag.io/nature/a-giant-bumptious-litter/

- Denise Ferreira Da Silva (2016), 'On difference without separability'

- Katherine McKittrick, Mathematics Black Life

- Zach Blas & micha cárdenas (2015), Imaginary computational systems: queer technologies and transreal aesthetics

- Noah Tsika (2016), CompuQueer: Protocological Constraints, Algorithmic Streamlining, and the Search for Queer Methods Online

- Anaïs Nony (2017), Technology of Neo-Colonial Epistemes

- Wendy Hui Kyong Chun (2009), Race and/as Technology; or, How to Do Things to Race

- Syed Mustafa Ali (2016), A brief introduction to decolonial computing

- Michael Murtaugh, Eventual consistency https://diversions.constantvzw.org/wiki/index.php?title=Eventual_Consistency

- Saidiya Hartman, The Plot of Her Undoing

- Alexis Pauline Gumbs and Julia Roxanne Wallace, Black Feminist Calculus Meets Nothing to Prove: A Mobile Homecoming Project Ritual toward the Postdigital

Sketch a musical composition

- Each of the two system login sounds, slowed down, as a starter

Legend Radio with Louisa

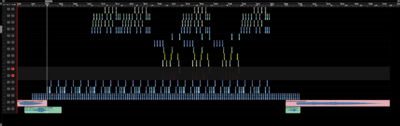

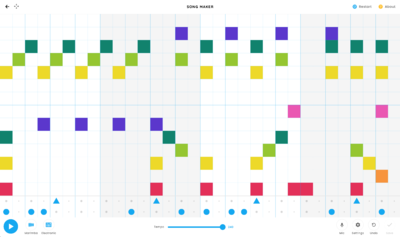

Louisa and I created a musical dialogue between the Microsoft Windows and Mac operating system sounds. To create this soundtrack, we first used Song Maker from Chrome Music Lab, a Google tool for making simple songs from visual patterns. In a second step, we tried to remake our song with EarSketch, a sound editor that works with Python. By referring to the Music Lab visual pattern, we could quite easily understand how to reconstruct our track in Python. Some of the screenshots below even show the visual links between the two!

# python code

# script_name:

#

# author:

# description:

#

from earsketch import *

init()

setTempo(115)

# Add Sounds

fitMedia(MARTINFOUCAUT_STARTUP_CUSTOM, 15, 1, 3)

fitMedia(MARTINFOUCAUT_START_UP_WINDOWS2, 16, 1.5, 4)

fitMedia(MARTINFOUCAUT_STARTUP20, 15, 19, 26)

fitMedia(MARTINFOUCAUT_EVERY_WINDOWS_STARTUP_SHUTDOWN_SOUND_TRIM, 16, 19, 20)

#fitMedia(MARTINFOUCAUT_LOOPE, 5, 20, 30)

#fitMedia(MARTINFOUCAUT_LOOPF, 6, 25, 35)

#fitMedia(MARTINFOUCAUT_LOOPG, 7, 30, 40)

#fitMedia(MARTINFOUCAUT_LOOPH, 8, 35, 45)

#fitMedia(YG_RNB_TAMBOURINE_1, 10, 1, 5)

#fitMedia(YG_FUNK_CONGAS_3, 11, 1, 5)

#fitMedia(YG_FUNK_HIHAT_2, 12, 5, 9)

#fitMedia(RD_POP_TB303LEAD_3, 13, 5, 9)

# Effects fade in

#setEffect(1, VOLUME, GAIN, -20, 5, 1, 10)

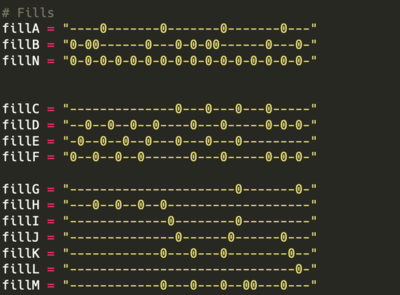

# Fills

fillA = "----0-------0-------0-------0---"

fillB = "0-00------0---0-0-00------0---0-"

fillN = "0-0-0-0-0-0-0-0-0-0-0-0-0-0-0-0-"

fillC = "--------------0---0---0---0-----"

fillD = "--0--0--0--0----0---0-----0-0-0-"

fillE = "-0--0--0--0---0---0---0---------"

fillF = "0--0--0--0------0---0-----0-0-0-"

fillG = "----------------------0-------0-"

fillH = "---0--0--0--0-------------------"

fillI = "-------------0--------0---------"

fillJ = "--------------0------0------0---"

fillK = "------------0---0---0--------0--"

fillL = "------------------------------0-"

fillM = "------------0---0---0--00---0---"

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 3, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 3, fillB)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 2, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 4, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 6, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 8, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 10, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 12, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 14, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 16, fillN)

makeBeat(MARTINFOUCAUT_SPEECH_MISRECOGNITION, 14, 18, fillN)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 4, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 4, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 6, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 6, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 8, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 8, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 10, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 10, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 12, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 12, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 14, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 14, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_RECYCLE2, 12, 16, fillA)

makeBeat(MARTINFOUCAUT_BASSO, 13, 16, fillB)

makeBeat(MARTINFOUCAUT_WINDOWS_DING4, 1, 6, fillC)

makeBeat(MARTINFOUCAUT_WINDOWS_DING3, 2, 6, fillD)

makeBeat(MARTINFOUCAUT_WINDOWS_DING2, 3, 6, fillE)

makeBeat(MARTINFOUCAUT_WINDOWS_DING1, 4, 6, fillF)

makeBeat(MARTINFOUCAUT_WINDOWS_DING4, 1, 8, fillC)

makeBeat(MARTINFOUCAUT_WINDOWS_DING3, 2, 8, fillD)

makeBeat(MARTINFOUCAUT_WINDOWS_DING2, 3, 8, fillE)

makeBeat(MARTINFOUCAUT_WINDOWS_DING1, 4, 8, fillF)

makeBeat(MARTINFOUCAUT_WINDOWS_DING4, 1, 12, fillC)

makeBeat(MARTINFOUCAUT_WINDOWS_DING3, 2, 12, fillD)

makeBeat(MARTINFOUCAUT_WINDOWS_DING2, 3, 12, fillE)

makeBeat(MARTINFOUCAUT_WINDOWS_DING1, 4, 12, fillF)

makeBeat(MARTINFOUCAUT_WINDOWS_DING4, 1, 16, fillC)

makeBeat(MARTINFOUCAUT_WINDOWS_DING3, 2, 16, fillD)

makeBeat(MARTINFOUCAUT_WINDOWS_DING2, 3, 16, fillE)

makeBeat(MARTINFOUCAUT_WINDOWS_DING1, 4, 16, fillF)

makeBeat(MARTINFOUCAUT_GLASS5, 5, 10, fillG)

makeBeat(MARTINFOUCAUT_GLASS, 6, 10, fillH)

makeBeat(MARTINFOUCAUT_GLASS2, 7, 10, fillI)

makeBeat(MARTINFOUCAUT_GLASS3, 8, 10, fillJ)

makeBeat(MARTINFOUCAUT_GLASS4, 9, 10, fillK)

makeBeat(MARTINFOUCAUT_GLASS7, 10, 10, fillL)

makeBeat(MARTINFOUCAUT_GLASS6, 11, 10, fillM)

makeBeat(MARTINFOUCAUT_GLASS5, 5, 12, fillG)

makeBeat(MARTINFOUCAUT_GLASS, 6, 12, fillH)

makeBeat(MARTINFOUCAUT_GLASS2, 7, 12, fillI)

makeBeat(MARTINFOUCAUT_GLASS3, 8, 12, fillJ)

makeBeat(MARTINFOUCAUT_GLASS4, 9, 12, fillK)

makeBeat(MARTINFOUCAUT_GLASS7, 10, 12, fillL)

makeBeat(MARTINFOUCAUT_GLASS6, 11, 12, fillM)

makeBeat(MARTINFOUCAUT_GLASS5, 5, 14, fillG)

makeBeat(MARTINFOUCAUT_GLASS, 6, 14, fillH)

makeBeat(MARTINFOUCAUT_GLASS2, 7, 14, fillI)

makeBeat(MARTINFOUCAUT_GLASS3, 8, 14, fillJ)

makeBeat(MARTINFOUCAUT_GLASS4, 9, 14, fillK)

makeBeat(MARTINFOUCAUT_GLASS7, 10, 14, fillL)

makeBeat(MARTINFOUCAUT_GLASS6, 11, 14, fillM)

finish()
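In the EarSketch script above, each `fill` string is a beat pattern for `makeBeat`: in EarSketch's convention each character is a sixteenth note, `0` triggers the sound, `-` is a rest and `+` extends the previous hit, so the 32-character fills span two measures. The plain-Python sketch below (no EarSketch needed; the helper names are hypothetical, and 4/4 time is an assumption) lists when each hit of a fill lands, in seconds, at the script's tempo of 115 BPM. For example, `fillA` hits on beats 2 and 4 of each of its two measures.

```python
from typing import List

# Hypothetical helpers, not part of the EarSketch API: they only mimic the
# timing convention of makeBeat() beat strings in plain Python.
TEMPO_BPM = 115          # value passed to setTempo() in the script
BEATS_PER_MEASURE = 4    # assumption: 4/4 time
STEPS_PER_BEAT = 4       # one character = one sixteenth note


def seconds_per_step(tempo_bpm: float = TEMPO_BPM) -> float:
    """Duration of one beat-string character (a sixteenth note) in seconds."""
    return 60.0 / tempo_bpm / STEPS_PER_BEAT


def length_in_measures(pattern: str) -> float:
    """Number of measures a beat string covers."""
    return len(pattern) / (BEATS_PER_MEASURE * STEPS_PER_BEAT)


def hit_times(pattern: str, measure: float, tempo_bpm: float = TEMPO_BPM) -> List[float]:
    """Start times (seconds, rounded to ms) of each '0' in a pattern placed at a 1-based measure.

    Only '0' counts as a hit here, because the script passes one sound per
    makeBeat() call; '-' (rest) and '+' (sustain) start nothing.
    """
    step = seconds_per_step(tempo_bpm)
    first_step = (measure - 1) * BEATS_PER_MEASURE * STEPS_PER_BEAT
    return [round((first_step + i) * step, 3)
            for i, char in enumerate(pattern) if char == "0"]


fillA = "----0-------0-------0-------0---"   # copied from the script

print(length_in_measures(fillA))   # 2.0
print(hit_times(fillA, 1))         # [0.522, 1.565, 2.609, 3.652]
```

Reading a Song Maker grid column by column gives the same kind of on/off sequence, which is one way to see why the Music Lab patterns translated so directly into these strings.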

Working with audio interpolations

What did I do?


Transforming a text into a question/answer text and having it read by a computer

Interpolating the text read aloud with the ambient soundtrack of Who Wants to Be a Millionaire?, the THX intro and Donna Summer's I Feel Love (a cappella)

Original

"Barry Popper’s cruel step-parents make him live in a skip in the driveway of their house. Barry’s stepsister is spoiled and fed cake and ice cream while Barry eats cold spaghetti and licks empty crisp bags. One day a fox delivers a telegram which informs Barry that he has a place in Stinkwort’s Academy. Barry is instructed to go to departure gate 27-and-a-half at Local Airport at such-and-such a time. On arrival he is escorted on to a helicopter by the crew of friendly badgers. On the plane he sits next to fellow Stinkworter, Ginger Boozy. 'Hello, you must be Barry,' says Ging..."

Retelling a Story

Question 1

Barry Popper’s cruel step-parents make him live in a skip in the driveway of?

Answer C.

their house.

Question 2

Barry’s stepsister is?

Answer A.

spoiled and fed cake and ice cream while

Question 3

Barry eats?

Answer A.

cold spaghetti and licks empty crisp bags.

Question 4

One day a fox delivers a telegram which informs Barry that?

Answer D.

he has a place in Stinkwort’s Academy.

Question 5

Barry is instructed to go to departure gate 27-and-a-half at?

Answer B.

Local Airport at such-and-such a time.

Question 6

On arrival he is escorted on to a helicopter by?

Answer B.

the crew of friendly badgers.

Question 7

On the plane he sits next to?

Answer A.

fellow Stinkworter, Ginger Boozy.
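The retelling above cuts the original paragraph into consecutive pieces that alternate between question and answer, so reading them in order gives back the whole story. A minimal Python sketch of that step, assuming the cut points are chosen by hand as phrases; the function name, the seed and the random answer letters are illustrative assumptions, not the method actually used:

```python
import random
from typing import List, Tuple


def retell_as_quiz(text: str, cuts: List[str], seed: int = 15) -> List[Tuple[str, str, str]]:
    """Split text at each cut phrase, in order; even pieces become questions, odd pieces answers.

    Returns (question, answer letter, answer) triples. The letter is random,
    like a multiple-choice game show, and does not refer to real options.
    A trailing question without an answer is dropped by zip().
    """
    pieces, pos = [], 0
    for phrase in cuts:
        idx = text.index(phrase, pos)      # raises ValueError if a cut phrase is missing
        pieces.append(text[pos:idx].strip())
        pos = idx
    pieces.append(text[pos:].strip())

    rng = random.Random(seed)
    quiz = []
    for question, answer in zip(pieces[0::2], pieces[1::2]):
        quiz.append((question.rstrip(",.") + "?", rng.choice("ABCD"), answer))
    return quiz


story = ("Barry's stepsister is spoiled and fed cake and ice cream while "
         "Barry eats cold spaghetti and licks empty crisp bags.")
cuts = ["spoiled", "Barry eats", "cold spaghetti"]

for n, (question, letter, answer) in enumerate(retell_as_quiz(story, cuts), start=1):
    print(f"Question {n}\n{question}\nAnswer {letter}.\n{answer}\n")
```

The printed text can then be passed to any text-to-speech tool to have it read by a computer.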

https://pad.xpub.nl/p/martinfoucaut_interpolation

Stretching audio space/time perception (with sample interpolation, overlap, looping and echoing)

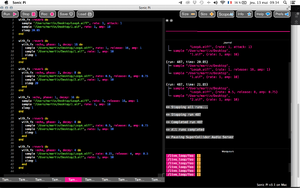

As part of a team with Nami and Jacopo, I am working with Sonic Pi on time perception: looping, overlapping and stretching a single track more and more until it becomes a single constant note.
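In Sonic Pi, the `rate:` option of `sample` changes speed and pitch together, so each halving of the rate doubles the playing time and drops the pitch by one octave (12 semitones); repeating this is what pushes a loop towards a single held note. The Python sketch below only does that arithmetic for a 20-second loop and the rates 1, 0.5, 0.25, 0.125 and 0.1 used for `LoopA.aiff` in the Broadcast 3 code. It ignores envelopes (`attack:`, `release:`) and assumes Sonic Pi's default 60 BPM, where one beat equals one second.

```python
import math

LOOP_SECONDS = 20.0                    # length of the looped sample
RATES = [1, 0.5, 0.25, 0.125, 0.1]     # rate: values used for LoopA.aiff


def stretched_duration(seconds: float, rate: float) -> float:
    """Playing time of a sample at a given playback rate (envelopes ignored)."""
    return seconds / rate


def semitone_shift(rate: float) -> float:
    """Pitch change caused by a playback rate; negative means lower."""
    return 12 * math.log2(rate)


for rate in RATES:
    print(f"rate {rate:<5} -> {stretched_duration(LOOP_SECONDS, rate):6.1f} s, "
          f"{semitone_shift(rate):+6.1f} semitones")
```

At rate 0.1 the 20-second loop lasts 200 seconds and sounds about 40 semitones lower, which is where it starts to blur into one sustained tone.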

As a reference for what I am doing, I could take this piece by William Basinski: https://www.youtube.com/watch?v=mjnAE5go9dI&list=LLfu-Fy4NjlpiIYJyE447UDA&index=27&t=2743s

"the recordings consist of tape loops that gradually deteriorated each time they passed the tape head, the unexpected result of Basinski's attempt to transfer his earlier recordings to digital format."

Here it is more about media deterioration than about stretching the perception of audio space, but the process is much the same, so I found it relevant to keep in mind.

Broadcast 3

Personal track description

For my sound piece, I worked on a 20-second looped sample of Beat Box (Diversion One) by Art of Noise (1984), which I recorded some years ago and more recently converted to 1 beat per second (60 BPM). With the help of Sonic Pi, I question how musical and/or sound echo, overlap and reverberation can affect the listener's mental perception of the space where the music or sounds are played.

#PLAY THE TRACK ONCE IN NORMAL MODE (NO ECHO, NO TIME-STRETCHING, ONLY THE REVERB FX) TO GIVE THE LISTENER AN IDEA OF THE MATERIAL I AM WORKING WITH

with_fx :reverb do

sample "/Users/martin/Desktop/LoopA.aiff", rate: 1, attack: 1

sample "/Users/martin/Desktop/1.aif", rate: 1, amp: 10

sleep 20.05

end

#PLAY THE TRACK ONCE (WITH DECAY AND RELEASE) AND OVERLAP IT WITH THE SAME TRACK PLAYED AT 50% SPEED, DECAY AND RELEASE ONE SECOND AFTER

with_fx :reverb do

with_fx :echo, phase: 1, decay: 16 do

sample "/Users/martin/Desktop/LoopA.aiff", rate: 1, release: 16, amp: 1

sample "/Users/martin/Desktop/1.aif", rate: 1, amp: 10

sleep 1

end

end

with_fx :reverb do

with_fx :echo, phase: 2, decay: 8 do

sample "/Users/martin/Desktop/LoopA.aiff", rate: 0.5, release: 8, amp: 0.75

sample "/Users/martin/Desktop/2.aif", rate: 1, amp: 10

sleep 20

end

end

#PLAY THE TRACK ONCE (WITH DECAY AND RELEASE) AND OVERLAP IT WITH THE SAME TRACK PLAYED AT 50% SPEED, DECAY AND RELEASE ONE SECOND AFTER AND ON AND ON AND ON AND ON (X5)

with_fx :echo, phase: 1, decay: 16 do

sample "/Users/martin/Desktop/LoopA.aiff", rate: 1, release: 16, amp: 1

sample "/Users/martin/Desktop/1.aif", rate: 1, amp: 10

sleep 1

end

with_fx :reverb do

with_fx :echo, phase: 2, decay: 8 do

sample "/Users/martin/Desktop/LoopA.aiff", rate: 0.5, release: 8, amp: 0.75

sample "/Users/martin/Desktop/2.aif", rate: 1, amp: 10

sleep 1

end

end

with_fx :reverb do

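# max_phase defaults to 2 beats and limits the echo delay, so raise it to let phase: 4 take effect

with_fx :echo, phase: 4, decay: 4, max_phase: 4 do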

sample "/Users/martin/Desktop/LoopA.aiff", rate: 0.25, release: 4, amp: 0.5

sample "/Users/martin/Desktop/3.aif", rate: 1, amp: 10

sleep 1

end

end

with_fx :reverb do

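# max_phase defaults to 2 beats and limits the echo delay, so raise it to let phase: 8 take effect

with_fx :echo, phase: 8, decay: 2, max_phase: 8 do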

sample "/Users/martin/Desktop/LoopA.aiff", rate: 0.125, release: 2, amp: 0.25

sample "/Users/martin/Desktop/4.aif", rate: 1, amp: 10

sleep 1

end

end

with_fx :reverb do

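# max_phase defaults to 2 beats and limits the echo delay, so raise it to let phase: 16 take effect

with_fx :echo, phase: 16, decay: 1, max_phase: 16 do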

sample "/Users/martin/Desktop/LoopA.aiff", rate: 0.1, release: 8, amp: 0.1

sample "/Users/martin/Desktop/5.aif", rate: 1, amp: 10

sleep 40

end

end
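The `with_fx :echo` blocks above move from a short `phase` with a long `decay` (1 and 16) to the reverse (16 and 1). Assuming Sonic Pi's echo behaves like a SuperCollider comb filter, where `decay` is the time for the echoes to fall by 60 dB, each repeat is scaled by 0.001^(phase/decay), and about decay/phase repeats sound within the decay time. The Python sketch below only does that arithmetic; the comb-filter model is an assumption, not taken from Sonic Pi's source.

```python
import math

# (phase, decay) pairs of the with_fx :echo blocks, in beats (1 beat = 1 s at 60 BPM)
ECHOES = [(1, 16), (2, 8), (4, 4), (8, 2), (16, 1)]


def echo_gain(phase: float, decay: float) -> float:
    """Amplitude factor applied at each repeat, assuming a -60 dB fall over `decay`."""
    return 0.001 ** (phase / decay)


def gain_db(gain: float) -> float:
    """Convert an amplitude factor to decibels."""
    return 20 * math.log10(gain)


def repeats_within_decay(phase: float, decay: float) -> int:
    """Repeats that start within the decay time (the last one at most 60 dB down)."""
    return math.floor(decay / phase)


for phase, decay in ECHOES:
    g = echo_gain(phase, decay)
    print(f"phase {phase:>2}, decay {decay:>2}: each repeat {gain_db(g):7.1f} dB, "
          f"{repeats_within_decay(phase, decay)} repeats within the decay time")
```

Under this model the first echo gives 16 repeats that each drop by only 3.75 dB, while in the last one (phase 16, decay 1) the single repeat is so far down that it is effectively silent, leaving the slowed-down sample almost dry.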

Nami/Pongie/Martin Description

0916653+1005344+1007629 In a system dominated by measures, demanding ever-increasing synchronization between individuals and their environments, we propose "Spacing in Time" as a way to escape predetermined variables. Through these audio experiments, we encounter new moments of experiencing different time flows. The radio turns into a time-perception box where various tools for measuring time and finding orientation are defragmented and rebooted. By creating audio environments with sound repetitions, reverbs and echoes, the experiments lead to a sense of physicality that allows full immersion in broadcast time travel. Shut down the clocks and de-synchronize yourself from the established recognition of time, while un-practicing your common perceptions.

Prototyping and Annotating

- https://earsketch.gatech.edu/earsketch2/ Editing/Importing/Composing/Transitioning/Randomizing with soundtracks recorded over many years.

- Random musical composition 1 https://musiclab.chromeexperiments.com/Song-Maker/song/6595644437823488

- Random musical composition 2 https://musiclab.chromeexperiments.com/Song-Maker/song/5504410688421888

Pads

Tools

- https://earsketch.gatech.edu/ Work with sound in Python or JavaScript

- https://www.beatsperminuteonline.com/ Get BPM manually

- https://musiclab.chromeexperiments.com/ Make songs from music-box patterns

- https://bpmdetector.knifftech.org/file/ Get BPM by importing audio file

- https://www.produitencroix.com/ How to get the right sound length to fit a tempo such as 120 BPM (new sound length = current sound length × current BPM / desired BPM)

- https://www.youtube.com/watch?v=vxz7mOu4XOM Python Sounds tutorial

- https://pypi.org/project/playsound/ Python playsound

- https://stackoverflow.com/questions/43904019/how-can-i-make-sound-with-frequency-in-python3 Sound frequency in Python
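The length conversion noted in the tools list follows from the fact that a sound's duration scales inversely with its tempo: new length = current length × current BPM / desired BPM. A minimal Python sketch; the function names are illustrative and the example numbers are made up, not measurements of any track:

```python
def stretched_length(current_length_s: float, current_bpm: float, desired_bpm: float) -> float:
    """Length a sound must have so that its tempo moves from current_bpm to desired_bpm."""
    if current_bpm <= 0 or desired_bpm <= 0:
        raise ValueError("BPM values must be positive")
    return current_length_s * current_bpm / desired_bpm


def stretch_ratio(current_bpm: float, desired_bpm: float) -> float:
    """Factor to apply to the length (> 1 means slower and longer)."""
    return current_bpm / desired_bpm


def beat_duration(bpm: float) -> float:
    """Seconds per beat, e.g. 60 BPM gives one beat per second."""
    return 60.0 / bpm


# Made-up example: an 8-second loop at 100 BPM, fitted to 120 BPM.
print(stretched_length(8.0, 100, 120))   # about 6.67 seconds
print(beat_duration(60))                 # 1.0
```

Speeding up to a higher BPM shortens the sound and slowing down lengthens it, so a loop converted to 60 BPM has beats exactly one second long.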

Individual Research

Links

- https://www.liveatc.net/search/?icao=klou Air Traffic Radio

- https://radiooooo.com/ Pick a country, a decade and a music style, then play

- http://radio.garden/ Explore live radios around the globe

- https://driveandlisten.herokuapp.com/ Radio in cars around the globe

- https://duuuradio.fr/ Duuu is a radio station dedicated to contemporary creation. Founded in 2012 by artists, it was born from the desire to make situations of reflection and work heard.

- https://ezekielaquino.com/

- https://boniver.withspotify.com/ Not exactly a radio, but an impressive ASCII-style visualiser using only the character "i"

Reading and Listening

- Wendy Chun (2017), Crisis + Habit = Update

- Syed Mustafa Ali (2018), ‘White Crisis’ and/as ‘Existential Risk'

- Melanie Feinberg (2014), A Story Without End: Writing the Residual into Information Infrastructure

- Romi (Ron) Morrison (2018), Decoding space: Liquid infrastructures

- Romi (Ron) Morrison (2019), Residual Black Data

- Aimee Bahng (2017), Plasmodial Improprieties: Octavia E. Butler, Slime Molds, and Imagining a Femi-Queer Commons

- Martine Syms (2013), The Mundane Afrofuturist Manifesto

- Ursula K. Le Guin (1985), She Unnames Them

- Edouard Glissant (Manthia Diawara, 2010), One World in Relation

- Denise Ferreira Da Silva, Arjuna Neuman (2019), Four waters: deep implicancy

- Four Rooms – Denise Ferreira Da Silva (2020), on the Logics of Exclusion and Obliteration

Reading

- Manthia Diawara, Edouard Glissant (2009), Edouard Glissant in Conversation with Manthia Diawara

- Wendy Hui Kyong Chun (2018), Queerying Homophily

- Orit Halpern (2014), Beautiful Data: A History of Vision and Reason since 1945

- Hope A. Olson (1998), Mapping Beyond Dewey’s Boundaries: Constructing Classificatory Space for Marginalized Knowledge Domains

- Dahlia S. Cambers, Mary Coffey, Måns Wrange / OMBUD (2014), The Law of Averages 1, 2 + 3

- Romi Ron Morrison (2019), Gaps between the digits. On the fleshy unknowns of the HUMAN

- Oulimata Gueye (2019), No Congo, no technologies

- Martine Syms (2013), The Mundane Afrofuturist Manifesto

- Ramon Amaro (2019), Artificial intelligence: Warped, colorful forms and their unclear geometries

- Donna Haraway (2019), A Giant Bumptious Litter: Donna Haraway on Truth, Technology, and Resisting Extinction

- Denise Ferreira Da Silva (2016), On difference without separability

- Katherine McKittrick, Mathematics Black Life

- Donna Haraway (1988), Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective

- Karen Barad, Adam Kleinman (2012), Intra-actions

- Karen Barad (2016), Diffracting diffraction + Diffraction vs Reflection (in: Meeting the Universe Halfway)

- Katherine McKittrick (2021), Dear Science (in: Dear Science and Other Stories)

- Zach Blas & micha cárdenas (2015), Imaginary computational systems: queer technologies and transreal aesthetics

- Noah Tsika (2016), CompuQueer: Protocological Constraints, Algorithmic Streamlining, and the Search for Queer Methods Online

- Wendy Hui Kyong Chun (2009), Race and/as Technology; or, How to Do Things to Race (introduction)

- Syed Mustafa Ali (2016), A brief introduction to decolonial computing

- Michael Murtaugh (2020), Eventual consistency

- Saidiya Hartman (2020), The Plot of Her Undoing