|

|


| ==6 steps of CG== | | ==The 6 steps of 3D Graphics== |

| 1. Pre-Production | | ===1. Pre-Production=== |

| In pre-production, the overall look of a character or environment is conceived. At the end of pre-production, finalized design sheets will be sent to the modeling team to be developed.

| | * Sketching |

| | * Defining color schemes |

| | * Storyboarding |

|

| |

|

| Every Idea Counts: Dozens, or even hundreds of drawings & paintings are created and reviewed on a daily basis by the director, producers, and art leads.

| | ===2. 3D Modelling=== |

|

| |

|

| Color Palette: A character's color scheme, or palette, is developed in this phase, but usually not finalized until later in the process.

| | Basic Modelling is made out of 2 types of objects: |

|

| |

|

| Concept Artists may work with digital sculptors to produce preliminary digital mock-ups for promising designs.

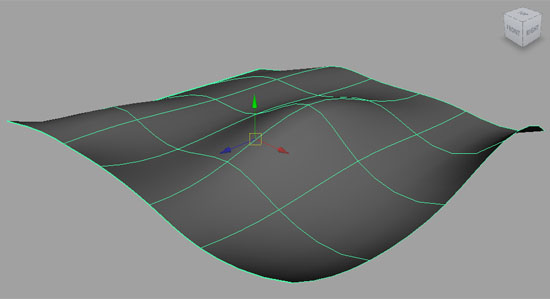

| | ====NURBS surface==== |

|

| |

|

| Character Details are finalized, and special challenges (like fur and cloth) are sent off to research and development.

| | A surface defined by two or more vector curves, which the software connects. It offers a high level of mathematical precision and produces smooth surfaces. |


| | [[File:Nurbs.jpeg|framed|right]] |


| | ====Polygon models==== |


| |

| 2. 3D Modeling | |

| With the look of the character finalized, the project is now passed into the hands of 3D modelers. The job of a modeler is to take a two dimensional piece of concept art and translate it into a 3D model that can be given to animators later on down the road.

| |

|

| |

|

| In today's production pipelines, there are two major techniques in the modeler's toolset: polygonal modeling & digital sculpting.

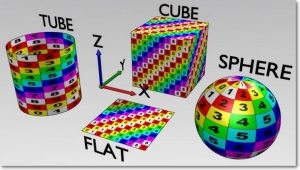

| | Polygon models start as a simple geometric shape, like a cube, sphere, or cylinder, which is then made more complex by modifying or adding: |

| | * Faces |

| | * Edges |

| | * Vertices |

|

| |

|

| Each has its own unique strengths and weaknesses, and despite being vastly different, the two approaches are quite complementary.

| | [[File:Edges-vertices.gif|framed|right]] |

|

| |

|

| Sculpting lends itself more to organic (character) models, while polygonal modeling is more suited for mechanical/architectural models.

| | ===3. Shading & Texturing=== |

| The subject of 3D modeling is far too extensive to cover in three or four bullet points, but it's something we'll continue covering in depth both in the blog and in the Maya Training series.

| |

|

| |

|

| 3. Shading & Texturing

| | Defining the look of an object. |

| The next step in the visual effects pipeline is known as shading and texturing. In this phase, materials, textures, and colors are added to the 3D model.

| | * Defining a material = giving the object different properties (called shaders), for instance color, transparency, or glossiness |

| | * Adding textures = projecting a two dimensional image onto the model |

| | Or a combination of the two |

| | [[File:BSOD-Materials-Textures-mapping4.jpg|thumbnail]] |

|

| |

|

| Every component of the model receives a different shader-material to give it an appropriate look.

| | ===4. Lighting=== |

|

| |

|

| Realistic materials: If the object is made of plastic, it will be given a reflective, glossy shader. If it is made of glass, the material will be partially transparent and refract light like real-world glass.

| | The key to realism! |

| | * Related to materials |

| | * Shadows |

|

| |

|

| Textures and colors are added by either projecting a two dimensional image onto the model, or by painting directly on the surface of the model as if it were a canvas. This is accomplished with special software (like ZBrush) and a graphics tablet.

| | ===5. Animation=== |

| 4. Lighting

| | * Motion Rigging |

| In order for 3D scenes to come to life, digital lights must be placed in the scene to illuminate models, exactly as lighting rigs on a movie set would illuminate actors and actresses. This is probably the second most technical phase of the production pipeline (after rendering), but there's still a good deal of artistry involved.

| | * Pose-to-Pose |

| | * Physics |

|

| |

|

| Proper lighting must be realistic enough to be believable, but dramatic enough to convey the director’s intended mood.

| | ===6. Rendering & Post-Production=== |

|

| |

|

| Mood Matters: Believe it or not, lighting specialists have as much, or even more control than the texture painters when it comes to a shot’s color scheme, mood, and overall atmosphere.

| | * Finalizing Lighting: Shadows and reflections must be computed for each object. |

| | * Special Effects: This is typically when effects like depth-of-field blurring, fog, smoke, and explosions would be integrated into the scene. |

| Back-and-Forth: There is a great amount of communication between lighting and texture artists. The two departments work closely together to ensure that materials and lights fit together properly, and that shadows and reflections look as convincing as possible.

| |

| 5. Animation

| |

| Animation, as most of you already know, is the production phase where artists breathe life and motion into their characters. Animation technique for 3D films is quite different than traditional hand drawn animation, sharing much more common ground with stop-motion techniques:

| |

| | |

| Rigged for Motion: 3D characters are controlled by means of a virtual skeleton or "rig" that allows an animator to control the model's arms, legs, facial expressions, and posture.

| |

| | |

| Pose-to-Pose: Animation is typically completed pose-to-pose. In other words, an animator will set a “key-frame” for both the starting and finishing pose of an action, and then tweak everything in between so that the motion is fluid and properly timed.
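
The pose-to-pose idea above can be sketched in a few lines. This is only an illustration, assuming a single animated value (a hypothetical elbow angle in degrees) and plain linear in-betweening; real animation packages typically use editable spline curves instead, and the names `interpolate_pose` and `elbow_keys` are made up for this example.

```python
from bisect import bisect_right

def interpolate_pose(keyframes, frame):
    """Linearly interpolate a value between sorted (frame, value) keyframes."""
    frames = [f for f, _ in keyframes]
    # Hold the first/last pose outside the keyed range.
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    # Find the pair of keyframes that surrounds the requested frame.
    i = bisect_right(frames, frame)
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)  # 0.0 at the first key, 1.0 at the second
    return v0 + t * (v1 - v0)

# Hypothetical elbow rotation: straight at frame 0, bent 90 degrees at frame 24.
elbow_keys = [(0, 0.0), (24, 90.0)]
halfway = interpolate_pose(elbow_keys, 12)
```

The animator would then adjust the timing between the two keys, which in this sketch corresponds to replacing the linear `t` with an easing curve.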

| |

| Jump over to our computer animation companion site for extensive coverage of the topic.

| |

| | |

| 6. Rendering & Post-Production

| |

| The final production phase for a 3D scene is known as rendering, which essentially refers to the translation of a 3D scene to a finalized two dimensional image. Rendering is quite technical, so I won’t spend too much time on it here. In the rendering phase, all the computations that cannot be done by your computer in real-time must be performed.

| |

| | |

| This includes, but is hardly limited to the following:

| |

| | |

| Finalizing Lighting: Shadows and reflections must be computed. | |

| | |

| Special Effects: This is typically when effects like depth-of-field blurring, fog, smoke, and explosions would be integrated into the scene. | |

| | |

| Post-processing: If brightness, color, or contrast needs to be tweaked, these changes would be completed in an image manipulation software following render time.
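
As a rough illustration of the post-processing step, a brightness and contrast tweak can be expressed per pixel. The sketch below assumes channel values in the range 0 to 1 and a simple "scale around mid-gray, then offset" formula; image editors may use different curves, and `adjust_pixel` is a hypothetical helper name.

```python
def adjust_pixel(value, brightness=0.0, contrast=1.0):
    """Adjust one color channel value in the 0..1 range.

    Contrast scales the value around mid-gray (0.5); brightness is a plain offset.
    """
    out = (value - 0.5) * contrast + 0.5 + brightness
    # Clamp so the result stays a valid channel value.
    return min(1.0, max(0.0, out))

# Apply the same tweak to every channel of a hypothetical RGB pixel.
pixel = (0.25, 0.5, 0.75)
brighter = tuple(adjust_pixel(c, brightness=0.25) for c in pixel)
```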

| |

| We've got an in-depth explanation of rendering here: Rendering: Finalizing the Frame

| |

| | |

| 7. Want to learn more?

| |

| Even though the computer graphics pipeline is technically complex, the basic steps are easy enough for anyone to understand. This article is not meant to be an exhaustive resource, but merely an introduction to the tools and skills that make 3D computer graphics possible.

| |

| | |

| Hopefully enough has been provided here to promote a better understanding of the work and resources that go into producing some of the masterpieces of visual effects we’ve all fallen in love with over the years.

| |

| | |

==A 3D Model==

| |

| | |

| What is a 3D Model?

| |

| | |

| A 3D model is a mathematical representation of any three-dimensional object (real or imagined) in a 3D software environment. Unlike a 2D image, 3D models can be viewed in specialized software suites from any angle, and can be scaled, rotated, or freely modified. The process of creating and shaping a 3D model is known as 3D modeling.

| |

| | |

| Types of 3D Models

| |

| | |

| There are two primary types of 3D models that are used in the film & games industry, the most apparent differences being in the way they're created and manipulated (there are differences in the underlying math as well, but that's less important to the end-user).

| |

| NURBS Surface: A non-uniform rational B-spline (NURBS) surface is a smooth surface model created through the use of Bezier-like spline curves (like a 3D version of the MS Paint pen tool). To form a NURBS surface, the artist draws two or more curves in 3D space, which can be manipulated by moving handles called control vertices (CVs) along the x, y, or z axis.

| |

| | |

| The software application interpolates the space between curves and creates a smooth mesh between them. NURBS surfaces have the highest level of mathematical precision, and are therefore most commonly used in modeling for engineering and automotive design.
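
To give a feel for how control vertices shape a curve, here is a minimal sketch. It is a simplification: full NURBS evaluation also involves knot vectors and per-vertex weights, so this example evaluates a plain Bezier curve with de Casteljau's algorithm instead. The names `lerp`, `bezier_point`, and `cvs` are illustrative only.

```python
def lerp(p, q, t):
    """Linear interpolation between two 3D points."""
    return tuple(a + t * (b - a) for a, b in zip(p, q))

def bezier_point(control_points, t):
    """Evaluate a Bezier curve at parameter t (0..1) with de Casteljau's algorithm."""
    pts = list(control_points)
    # Repeatedly interpolate neighbouring points until one point remains.
    while len(pts) > 1:
        pts = [lerp(p, q, t) for p, q in zip(pts, pts[1:])]
    return pts[0]

# Four hypothetical control vertices (CVs) forming an arch in the xy-plane.
cvs = [(0.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 0.0), (2.0, 0.0, 0.0)]
midpoint = bezier_point(cvs, 0.5)
```

Moving any CV along x, y, or z changes the points returned by `bezier_point`, which is the same push-and-pull interaction described above, just in its simplest form.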

| |

| | |

| | |

| Polygonal Model: Polygonal models or "meshes" as they're often called, are the most common form of 3D model found in the animation, film, and games industry, and they'll be the kind that we'll focus on for the rest of the article.

| |

| | |

| Polygonal models are very similar to the geometric shapes you probably learned about in middle school. Just like a basic geometric cube, 3D polygonal models are comprised of faces, edges, and vertices.

| |

| | |

| In fact, most complex 3D models start as a simple geometric shape, like a cube, sphere, or cylinder. These basic 3D shapes are called object primitives. The primitives can then be modeled, shaped, and manipulated into whatever object the artist is trying to create (as much as we'd like to go into detail, we'll cover the process of 3D modeling in a separate article).

| |

| | |

| The components of a polygonal model:

| |

| | |

| Faces: The defining characteristic of a polygonal model is that (unlike NURBS Surfaces) polygonal meshes are faceted, meaning the surface of the 3D model is comprised of hundreds or thousands of geometric faces.

| |

| | |

| In good modeling, polygons are either four sided (quads, the norm in character/organic modeling) or three sided (tris, used more commonly in game modeling). Good modelers strive for efficiency and organization, trying to keep polygon counts as low as possible for the intended shape.

| |

| | |

| The number of polygons in a mesh is called the poly-count, while polygon density is called resolution. The best 3D models have high resolution where more detail is required, such as a character's hands or face, and low resolution in low-detail regions of the mesh. Typically, the higher the overall resolution of a model, the smoother it will appear in a final render. Lower resolution meshes look boxy (remember Mario 64?).

| |

| | |

| Edges: An edge is any line on the surface of a 3D model where two polygonal faces meet.

| |

| | |

| Vertices: The point of intersection between three or more edges is called a vertex (pl. vertices). Manipulation of vertices on the x, y, and z-axes (affectionately referred to as "pushing and pulling verts") is the most common technique for shaping a polygonal mesh into its final shape in traditional modeling packages like Maya, 3ds Max, etc. (Techniques are very, very different in sculpting applications like ZBrush or Mudbox.)
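
The three components can be made concrete with a cube primitive. The sketch below assumes one common way of storing a mesh, a vertex list plus faces that index into it; actual file formats and packages differ, and every name here is illustrative.

```python
# Eight corner positions of a unit cube (the vertices).
cube_vertices = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),
]

# Six quad faces, each listing four vertex indices in order around the face.
cube_faces = [
    (0, 1, 2, 3),  # z = 0
    (4, 5, 6, 7),  # z = 1
    (0, 1, 5, 4),  # y = 0
    (1, 2, 6, 5),  # x = 1
    (2, 3, 7, 6),  # y = 1
    (3, 0, 4, 7),  # x = 0
]

def mesh_edges(faces):
    """Collect the unique edges: each pair of consecutive vertices around a face."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))  # order-independent key
    return edges

def triangulate(faces):
    """Split each polygon into triangles that fan out from its first vertex."""
    return [(f[0], f[i], f[i + 1]) for f in faces for i in range(1, len(f) - 1)]

poly_count = len(cube_faces)            # 6 quads
edge_count = len(mesh_edges(cube_faces))
tri_count = len(triangulate(cube_faces))
```

Each edge is shared by two faces, which is why the cube ends up with 12 edges rather than 24, and splitting the quads into tris doubles the poly-count from 6 to 12.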

| |

| There's one more component of 3D models that needs to be addressed:

| |

| | |

| Textures and Shaders:

| |

| | |

| Without textures and shaders, a 3D model wouldn't look like much. In fact, you wouldn't be able to see it at all. Although textures and shaders have nothing to do with the overall shape of a 3D model, they have everything to do with its visual appearance.

| |

| Shaders: A shader is a set of instructions applied to a 3D model that lets the computer know how it should be displayed. Although shading networks can be coded manually, most 3D software packages have tools that allow the artist to tweak shader parameters with great ease. Using these tools, the artist can control the way the surface of the model interacts with light, including opacity, reflectivity, specular highlight (glossiness), and dozens of others.
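
As a hedged illustration of what such a set of instructions might compute, the sketch below combines a Lambert diffuse term with a Blinn-Phong specular highlight for one surface point. This is one classic textbook shading model, chosen here as an assumption; production shaders are far more elaborate, and the function and parameter names (`shade`, `glossiness`, `specular_strength`) are hypothetical.

```python
import math

def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v))
    return v if length == 0 else tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(normal, to_light, to_camera, base_color,
          glossiness=32.0, specular_strength=0.5):
    """Return an RGB color for one surface point lit by a single white light."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_camera)
    # Diffuse term: surfaces facing the light are brighter.
    diffuse = max(0.0, dot(n, l))
    specular = 0.0
    if diffuse > 0.0:
        # Specular highlight: higher glossiness gives a tighter, sharper highlight.
        half = normalize(tuple(a + b for a, b in zip(l, v)))
        specular = specular_strength * max(0.0, dot(n, half)) ** glossiness
    return tuple(min(1.0, c * diffuse + specular) for c in base_color)

# Red surface facing straight up, with light and camera directly above it.
lit = shade((0, 0, 1), (0, 0, 1), (0, 0, 1), (1.0, 0.0, 0.0))
```

The sliders an artist tweaks in a material editor correspond to parameters like `base_color` and `glossiness` in this sketch.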

| |

| | |

| Textures: Textures also contribute greatly to a model's visual appearance. Textures are two dimensional image files that can be mapped onto the model's 3D surface through a process known as texture mapping. Textures can range in complexity from simple flat color textures up to completely photorealistic surface detail.
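
Texture mapping can be sketched as a lookup: each point on the surface carries a 2D coordinate into the image. The example assumes UV coordinates in the range 0 to 1 and nearest-neighbour sampling, the simplest possible scheme; renderers normally filter between texels. `sample_texture` and `checker` are made-up names.

```python
def sample_texture(texture, u, v):
    """Return the texel at UV coordinates (u, v), each in the range 0..1.

    `texture` is a list of rows; nearest-neighbour lookup, no filtering.
    """
    height, width = len(texture), len(texture[0])
    # Clamp so coordinates slightly outside 0..1 still hit a valid texel.
    u = min(1.0, max(0.0, u))
    v = min(1.0, max(0.0, v))
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]

# A tiny 2x2 checkerboard standing in for an image file.
checker = [
    ["black", "white"],
    ["white", "black"],
]
texel = sample_texture(checker, 0.75, 0.25)
```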

| |

| Texturing and shading are important aspects of the computer graphics pipeline, and becoming good at writing shader-networks or developing texture maps is a specialty in its own right. Texture and shader artists are just as instrumental in the overall look of a film or image as modelers or animators.

| |

| | |

| You made it!

| |

| | |

| ==Rendering==

| |

| Like Developing Film:

| |

| | |

| Rendering is the most technically complex aspect of 3D production, but it can actually be understood quite easily in the context of an analogy: much like a film photographer must develop and print his photos before they can be displayed, computer graphics professionals are burdened with a similar necessity.

| |

| | |

| When an artist is working on a 3D scene, the models he manipulates are actually a mathematical representation of points and surfaces (more specifically, vertices and polygons) in three-dimensional space.

| |

| | |

| The term rendering refers to the calculations performed by a 3D software package’s render engine to translate the scene from a mathematical approximation to a finalized 2D image. During the process, the entire scene’s spatial, textural, and lighting information are combined to determine the color value of each pixel in the flattened image.
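
One small piece of that translation is working out where a 3D point lands in the 2D image. The sketch below assumes a simple pinhole camera at the origin looking down the positive z-axis; the function name, the `focal_length` parameter, and the coordinate conventions are assumptions for illustration, not how any particular render engine does it.

```python
def project_point(point, focal_length, width, height):
    """Project a 3D camera-space point to pixel coordinates.

    Assumes the camera sits at the origin looking down +z. Returns None
    for points at or behind the camera.
    """
    x, y, z = point
    if z <= 0:
        return None
    # Perspective divide: distant points move toward the image centre.
    sx = focal_length * x / z
    sy = focal_length * y / z
    # Map from the -1..1 range to pixel coordinates (y grows downward on screen).
    px = (sx + 1.0) * 0.5 * width
    py = (1.0 - sy) * 0.5 * height
    return (px, py)

centre = project_point((0.0, 0.0, 5.0), 1.0, 640, 480)
```

A full renderer would then combine the texture, shader, and lighting information for whatever surface is visible at each of those pixel positions.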

| |

| | |

| Two Types of Rendering:

| |

| | |

| There are two major types of rendering, their chief difference being the speed at which images are computed and finalized.

| |

| | |

| Real-Time Rendering: Real-Time Rendering is used most prominently in gaming and interactive graphics, where images must be computed from 3D information at an incredibly rapid pace.

| |

| | |

| Interactivity: Because it is impossible to predict exactly how a player will interact with the game environment, images must be rendered in “real-time” as the action unfolds.

| |

| | |

| Speed Matters: In order for motion to appear fluid, a minimum of 18 - 20 frames per second must be rendered to the screen. Anything less than this and action will appear choppy.
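
The frame rates above translate directly into a time budget per frame. A small arithmetic sketch follows; the helper name is hypothetical.

```python
def frame_budget_ms(frames_per_second):
    """Milliseconds available to render one frame at the given frame rate."""
    return 1000.0 / frames_per_second

# At 20 frames per second the renderer has 50 ms per frame;
# at 60 frames per second it has under 17 ms.
budget_20 = frame_budget_ms(20)
budget_60 = frame_budget_ms(60)
```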

| |

| | |

| The methods: Real-time rendering is drastically improved by dedicated graphics hardware (GPUs), and by pre-compiling as much information as possible. A great deal of a game environment’s lighting information is pre-computed and “baked” directly into the environment’s texture files to improve render speed.

| |

| Offline or Pre-Rendering: Offline rendering is used in situations where speed is less of an issue, with calculations typically performed using multi-core CPUs rather than dedicated graphics hardware.

| |

| | |

| Predictability: Offline rendering is seen most frequently in animation and effects work where visual complexity and photorealism are held to a much higher standard. Since there is no unpredictability as to what will appear in each frame, large studios have been known to dedicate up to 90 hours render time to individual frames.

| |

| | |

| Photorealism: Because offline rendering occurs within an open ended time-frame, higher levels of photorealism can be achieved than with real-time rendering. Characters and environments are typically allowed higher polygon counts, and their textures can be 4k (or higher) resolution files.

| |

| Rendering Techniques:

| |

| | |

| There are three major computational techniques used for most rendering. Each has its own set of advantages and disadvantages, making all three viable options in certain situations.

| |

| Scanline (or rasterization): Scanline rendering is used when speed is a necessity, which makes it the technique of choice for real-time rendering and interactive graphics. Instead of rendering an image pixel-by-pixel, scanline renderers compute on a polygon by polygon basis. Scanline techniques used in conjunction with precomputed (baked) lighting can achieve speeds of 60 frames per second or better on a high-end graphics card.
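
The polygon-by-polygon idea can be sketched with a basic coverage test: take one triangle and find which pixels it covers. The example uses the edge-function approach common in rasterizers rather than a classic scanline fill, tests every pixel in a tiny image for clarity, and ignores depth and shading entirely. All names are illustrative.

```python
def edge(a, b, p):
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres fall inside a 2D triangle."""
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside if the point is on the same side of all three edges
            # (accepting either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered

# A right triangle covering the lower-left half of a 4x4 pixel image.
pixels = rasterize_triangle((0, 0), (4, 0), (0, 4), 4, 4)
```

A real rasterizer would restrict the loops to the triangle's bounding box and run this test in parallel on the GPU, which is where much of the speed comes from.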

| |

| | |

| Raytracing: In raytracing, for every pixel in the scene, one or more rays of light are traced from the camera to the nearest 3D object. The light ray is then passed through a set number of "bounces", which can include reflection or refraction depending on the materials in the 3D scene. The color of each pixel is computed algorithmically based on the light ray's interaction with objects in its traced path. Raytracing is capable of greater photorealism than scanline, but is considerably slower.
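
The first step of that process, finding the nearest object along a ray, can be sketched with spheres, which have a simple closed-form intersection test. The example assumes unit-length ray directions, handles only the primary ray (no bounces), and uses hypothetical names throughout.

```python
import math

def intersect_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit on a sphere, or None.

    `direction` is assumed to be unit length. Hits behind the ray origin
    (including when the origin is inside the sphere) are ignored here.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    b = 2.0 * sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    disc = b * b - 4.0 * c  # discriminant of the quadratic in t
    if disc < 0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None

def nearest_hit(origin, direction, spheres):
    """Return (distance, name) of the closest sphere hit by the ray, or None."""
    hits = []
    for name, center, radius in spheres:
        t = intersect_sphere(origin, direction, center, radius)
        if t is not None:
            hits.append((t, name))
    return min(hits) if hits else None

# Two hypothetical spheres in front of a camera at the origin.
scene = [("far", (0.0, 0.0, 10.0), 1.0), ("near", (0.0, 0.0, 5.0), 1.0)]
hit = nearest_hit((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
```

A full raytracer repeats this test for every pixel and again for each reflected or refracted bounce, which is why the cost grows so quickly with scene complexity and bounce depth.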

| |

| | |

| Radiosity: Unlike raytracing, radiosity is calculated independent of the camera, and is surface oriented rather than pixel-by-pixel. The primary function of radiosity is to more accurately simulate surface color by accounting for indirect illumination (bounced diffuse light). Radiosity is typically characterized by soft graduated shadows and color bleeding, where light from brightly colored objects "bleeds" onto nearby surfaces.
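
The bounced-light idea can be sketched as a small system of equations: each surface patch's brightness equals what it emits plus the fraction of light it reflects from every other patch. The example below assumes the form factors (how much each patch "sees" of the others) are already known and simply iterates until the values settle; computing form factors is the hard part and is not shown. The numbers and names are hypothetical.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=50):
    """Iteratively solve B[i] = E[i] + rho[i] * sum_j F[i][j] * B[j]."""
    n = len(emission)
    radiosity = list(emission)  # start with direct emission only
    for _ in range(iterations):
        # Each pass adds one more bounce of indirect light.
        radiosity = [
            emission[i] + reflectance[i] * sum(
                form_factors[i][j] * radiosity[j] for j in range(n)
            )
            for i in range(n)
        ]
    return radiosity

# Two patches facing each other: patch 0 is a light source, patch 1 is not.
emission = [1.0, 0.0]
reflectance = [0.5, 0.5]
form_factors = [[0.0, 0.5],
                [0.5, 0.0]]
result = solve_radiosity(emission, reflectance, form_factors)
```

Patch 1 emits nothing yet ends up with a non-zero value, which is the indirect illumination described above. Nothing in the calculation refers to a camera, matching the point that radiosity is view independent.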

| |

| | |

| In practice, radiosity and raytracing are often used in conjunction with one another, using the advantages of each system to achieve impressive levels of photorealism.

| |

| | |

| | |

| [[File:Texturing|thumbnail]]

| |