User:Bnstlv/transclusion-cards

Making cards: transcluding

https://pad.xpub.nl/p/Methods5Oct22

Project description

- Team: Stephen and Boyana

- The Why-How-What model

On Monday, October 3, we all gathered at the Research station to meet Wilma, a former librarian who is now the research station master.

We listened to her story and recorded it on Boyana's phone. Simon and Steve then gave us our assignment: edit the Vosk-transcribed text so that it is legible to an uninitiated reader.

Wednesday | October 5th

Working in pairs, we had to turn the recording into legible text ("cards"). We applied the Why-How-What model.

What (object of research): a community diff check, based on human-software interaction.

- Cards: a visual translation of a process and the result of the experiment.
- The card visualises the process, creates a key, and gives access to the full information.

We saved it as a PDF (web-to-print). PDF is a good format for documents because it is portable: the layout stays the same on any device.

How (methodology): we started with two computer-transcribed texts. We made one in vosk-transcriber and the other with the native app on Boyana's phone.

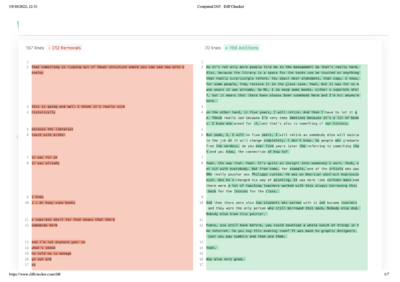

We assumed they had a common source, something transcluded in both of them, because we knew both came from the same audio recording. We selected a short section of each that seemed to cover the same point in the story and compared them on https://www.diffchecker.com.

Then we emphasised the identical (transcluded) elements. We inspected the page's code to find out which classes diffchecker used to display the identical parts on screen. Once we found them, we tweaked the CSS rules to change the appearance of the text: we blacked out (hid) the non-identical parts and thus highlighted the identical ones.

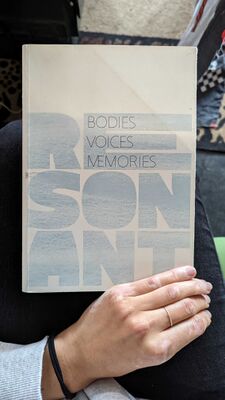
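The diffchecker step can be approximated in a few lines of Python. This is a minimal sketch, not how diffchecker.com works internally: it swaps in Python's standard `difflib.SequenceMatcher`, compares the two transcripts word by word, keeps the words that appear in both (the "transcluded" parts) and blacks out the rest with █ characters, mimicking the CSS tweak. The function name `transcluded_view` and the sample sentences are hypothetical, not taken from our actual transcripts.

```python
from difflib import SequenceMatcher

def transcluded_view(a: str, b: str, mask: str = "\u2588") -> str:
    """Return transcript `a` with every word not shared with `b` blacked out.

    Hypothetical helper: a word-level stand-in for the diffchecker + CSS step.
    """
    words_a = a.split()
    words_b = b.split()
    # autojunk=False so frequent words are not silently ignored in long texts
    matcher = SequenceMatcher(None, words_a, words_b, autojunk=False)
    out = []
    pos = 0
    # get_matching_blocks() ends with a dummy block (len(a), len(b), 0),
    # so the loop below always covers every word of `a`
    for block in matcher.get_matching_blocks():
        # words of `a` before this block have no counterpart in `b`: hide them
        for word in words_a[pos:block.a]:
            out.append(mask * len(word))
        # words inside the block appear in both transcripts: keep them visible
        out.extend(words_a[block.a:block.a + block.size])
        pos = block.a + block.size
    return " ".join(out)

if __name__ == "__main__":
    # Made-up sample lines standing in for the two transcriptions
    vosk_text = "she worked as a librarian for years"
    phone_text = "he worked as the librarian for ears"
    print(transcluded_view(vosk_text, phone_text))  # ███ worked as █ librarian for █████
```

Matching whole words rather than characters keeps each blacked-out gap the length of a word, which is closer to how the cards read; a character-level comparison could also keep fragments such as "ears" inside "years".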

We also planned to make the remaining text more legible by formatting it, adding punctuation, shortening it, and possibly adding words. By editing in this way, we hoped to reveal the source text (the story). We wanted to apply a concept Boyana had seen a few days earlier in the XML room, in the book "Resonant bodies, voices, memories", specifically in the story "House Pieces" by Gunndis Y. Finnbogadottir.

Throughout the process, Stephen and I realised that the card we were making was about transclusion, so we decided to make our card about "transcluding". He wrote the content of the card itself and drew a really good visualisation of our process in his notebook; here is a short digital version of it:

Here is the final result on diffchecker.com, and here it is in print.

Stephen and I ended up giving the end result (the cards) a performance-like "upgrade" by arranging the furniture.

Why (motivation and research question): by editing the text, we add meaning to it so that it forms Wilma's story, since the source of the text is her life story. We consider her a co-author of the text. We also want to keep a trace of Wilma, the missing character.

We wanted to explore software-human interaction and how humans and algorithms can interact to create stories: a conversation with Wilma, with the recording devices, with each other, and with diffchecker. We decided what this piece of software should do with the text, and we interpreted its digital output in physical space by arranging furniture and connectors.

The end. Salut, friends!