User:Manetta/thesis/thesis-outline: Difference between revisions

Revision as of 22:55, 14 January 2016

outline

intro

NLP

With 'i-could-have-written-that' i would like to look at technologies that process natural language (NLP). By regarding NLP software as cultural objects, i'll focus on the inner workings of their technologies: what are the mechanisms that systematize our natural language so that it can be understood by a computer?

NLP is a category of software packages concerned with the interaction between human language and machine language. NLP is mainly present in the fields of computer science, artificial intelligence and computational linguistics. On a daily basis people deal with services that contain NLP techniques: translation engines, search engines, speech recognition, auto-correction, chatbots, OCR (optical character recognition), license plate detection, data-mining. For 'i-could-have-written-that', i would like to place NLP software at the centre, not only as technology but also as a cultural object, to reveal how NLP software is constructed to understand human language, and what side-effects these techniques have.

knowledge discovery in data (data-mining)

For this year's graduation project, i would like to focus on the practice of data-mining.

hypothesis

The results of data-mining software are not mined; they are constructed.

How do data-mining elements allow for algorithmic agreeability?

project & thesis (merge)

voice: accessible for a wider public

needed: problem formulations that connect with day-to-day life

As 'i-could-have-written-that' is driven by textual research, it would feel quite natural to merge the practical and written (reflective) elements of the graduation procedure into one project. The format i have in mind at the moment, a publication series, could bring the two together. Alongside written reflections on the hypothesis of constructed results, i would like to work on hands-on prototypes with text-mining software.

As a working method, i would like to isolate and analyse different data-mining elements and test the hypothesis against each of them. The elements selected so far focus on: terminology (metaphors + history), software (data construction + ... ), and presentation of results.

data mining elements

- terminology ('mining', 'data')

- 'mining' → from 'mining' minerals to 'mining' data; (wiki-page)

- 'data' → data as autonomous entity; from: information, to: data science

- text-processing

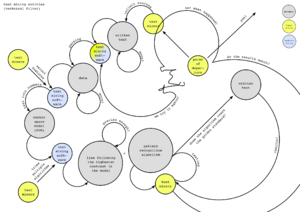

- workflow mining-software (e.g. Pattern, Weka); (software workflow diagram)

- from: able to check results with senses (OCR), to: intuition (data-mining)

- parsing, how text is treated: as n-grams, chunks, bag-of-words, characters

- presentation of results
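The parsing element listed above (text treated as n-grams, bag-of-words or characters) can be made concrete with a small sketch. It uses only the Python standard library; the function names and the sample sentence are hypothetical and not taken from Pattern or Weka. Chunking is left out, as it would need a part-of-speech tagger.

```python
from collections import Counter

PUNCTUATION = ".,;:!?'\"()"

def tokenize(text):
    # Naive tokenizer (an assumption of this sketch): lowercase,
    # split on whitespace, strip surrounding punctuation.
    words = (w.strip(PUNCTUATION) for w in text.lower().split())
    return [w for w in words if w]

def ngrams(tokens, n):
    # Sliding window of n consecutive tokens; local word order is kept.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bag_of_words(tokens):
    # Word frequencies only; word order is discarded.
    return Counter(tokens)

def characters(text):
    # Letter frequencies only; words themselves are discarded.
    return Counter(c for c in text.lower() if c.isalpha())

sentence = "The results are not mined, the results are constructed."
tokens = tokenize(sentence)

print(ngrams(tokens, 2))
print(bag_of_words(tokens))
print(characters(sentence))
```

Each representation keeps something different from the same sentence: the bag-of-words of a sentence and of its reversed word order are identical, whereas their bigrams are not. The choice of representation is therefore already a step in constructing the result.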

theory

- solutionism & techno optimism

- big-data, machine learning & data-mining criticism

research material

→ filesystem interface, collecting research related material (+ about the workflow)

→ wikipage for 'i-could-have-written-that' (list of prototypes & inquiries)

→ little glossary

mining as ideology

* from mining minerals to mining data

anthropomorphism

* anthropomorphic qualities of a computer (?)

* the photographic apparatus → the data apparatus (annotations)

* Joseph Weizenbaum's questions in Computer Power and Human Reason

text processing

* semantic math: averaging polarity rates in Pattern (text mining software package)

* notes on wordclouds

* automatic reading machines; from encoding-decoding to constructed-truths

* index of WordNet 3.0 (2006)
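The 'semantic math' note above concerns averaging polarity rates. As a minimal sketch of the general idea of lexicon-based averaging, and explicitly not Pattern's actual API or implementation: the lexicon, its scores and the function name below are invented for illustration.

```python
# Hypothetical lexicon: every word and score here is invented for this sketch.
LEXICON = {
    "good": 0.7,
    "great": 0.8,
    "bad": -0.7,
    "embarrassing": -0.6,
}

def average_polarity(text, lexicon=LEXICON):
    # Look up each known word and average the scores that were found.
    # Words missing from the lexicon are silently ignored.
    words = [w.strip(".,;:!?'\"()") for w in text.lower().split()]
    scores = [lexicon[w] for w in words if w in lexicon]
    if not scores:
        return 0.0  # no known words: reported as 'neutral'
    return sum(scores) / len(scores)

print(average_polarity("a good film with a bad ending"))
print(average_polarity("a film about mining"))
```

In this sketch a sentence with one positive and one negative word, and a sentence with no known words at all, both come out as 0.0: the number is shaped by what the lexicon contains and by the decision to average.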

data as autonomous entity

* knowledge driven by data - whenever i fire a linguist, the results improve

other

* (laughter) - it's embarrassing but these are the words

* call for a syntactic view; Florian Cramer & Benjamin Bratton (text)

* EUR PhD presentation 'Sentiment Analysis of Text Guided by Semantics and Structure' (13-11-2015)

* index of Roget's thesaurus (1805)

* comparing the classification of the word 'information' Thesaurus (1911) vs. WordNet 3.0 (2006)

annotations

- Alan Turing - Computing Machinery and Intelligence (1950)

- The Journal of Typographic Research - OCR-B: A Standardized Character for Optical Recognition (V1N2) (1967); → abstract

- Ted Nelson - Computer Lib & Dream Machines (1974);

- Joseph Weizenbaum - Computer Power and Human Reason (1976); → annotations

- Walter J. Ong - Orality and Literacy (1982);

- Vilem Flusser - Towards a Philosophy of Photography (1983); → annotations

- Christiane Fellbaum - WordNet, an Electronic Lexical Database (1998);

- Charles Petzold - Code, the hidden languages and inner structures of computer hardware and software (2000); → annotations

- John Hopcroft, Rajeev Motwani, Jeffrey Ullman - Introduction to Automata Theory, Languages, and Computation (2001);

- James Gleick - The Information, a History, a Theory, a Flood (2008); → annotations

- Matthew Fuller - Software Studies. A lexicon (2008);

- Language, Florian Cramer; → annotations

- Algorithm, Andrew Goffey;

- Marissa Mayer - the physics of data, lecture (2009); → annotations

- Matthew Fuller & Andrew Goffey - Evil Media (2012); → annotations

- Antoinette Rouvroy - All Watched Over By Algorithms - Transmediale (Jan. 2015); → annotations

- Benjamin Bratton - Outing A.I., Beyond the Turing test (Feb. 2015) → annotations

- Ramon Amaro - Colossal Data and Black Futures, lecture (Oct. 2015); → annotations

- Benjamin Bratton - On A.I. and Cities : Platform Design, Algorithmic Perception, and Urban Geopolitics (Nov. 2015);

bibliography (five key texts)

- Vilem Flusser - Towards a Philosophy of Photography (1983); → annotations

- Language, Florian Cramer (2008); → annotations

- Antoinette Rouvroy - All Watched Over By Algorithms - Transmediale (Jan. 2015); → annotations

- The Journal of Typographic Research - OCR-B: A Standardized Character for Optical Recognition (V1N2) (1967); → abstract