User:Manetta/i-could-have-written-that/little-glossary

little glossary

language (as a resource)

It is debatable whether language itself can be regarded as a technology. For my project I will follow James Gleick's statement in his book 'The Information: A History, a Theory, a Flood' [1], where he states: "Language is not a technology, (...) it is not best seen as something separate from the mind; it is what the mind does. (...) but when the word is instantiated in paper or stone, it takes on a separate existence as artifice. It is a product of tools and it is a tool."

in-between language / inter-language

(...)

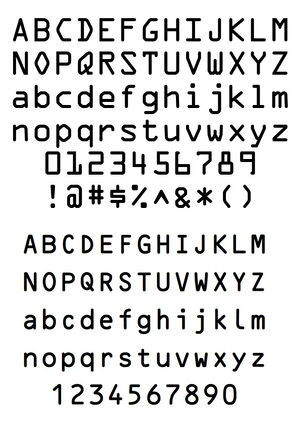

example: OCR-A: designed by American Type Founders (1968) + OCR-B: designed by Adrian Frutiger (1968)

writing as technology

...

alphabet as a tool

A very basic writing technology is the Latin alphabet. The main set of 26 characters is a toolbox that enables us to systemize language into characters, into words, into sentences. Considering these tools as technologies makes it possible to follow a line from natural language to a computer language that computers can take as input, via multiple forms of mediation.

natural language?

For this project I would like to look at 'natural language' from a perspective grounded in computer science, computational linguistics and artificial intelligence (AI), where the term 'natural language' is mostly used in the context of 'natural language processing' (NLP), a field of study that researches the transformation of natural (human) language into information(?), a format(?) that can be processed by a computer. (language-(...)-information)

the language that is not first invented and arranged technologically [2]

systemization

I'm interested in that moment of systemization: the moment that language is transformed into a model. These models are materialized in lexical datasets, text parsers, or data-mining algorithms. They reflect an aim of understanding the world through language (similar to how the invention of geometry made it possible to understand the shape of the earth).

language as information

...

In how far does there lie in the essence of language itself the vulnerability and the possibility for its transformation into technological language, i.e., into information? [2]

automation

Such linguistic models are needed to write software that automates reading processes, a more specific example of natural language processing. Such software aims to automate the steps of information processing, for example to generate new information (in data mining) or to perform processes on a bigger scale (as is the case for translation engines).

heteromation

...

reading (automatic reading machines)

In 1967, The Journal of Typographic Research already expressed high expectations of such 'automatic reading machines', as they would widen the bounds of the field of data processing. The 'automatic reading machine' they referred to used an optical reading process that would be optimized thanks to the design of a specific font (called OCR-B). The font was created to optimize reading both for the human eye and for the computer (using OCR software).

reading-writing (automatic reading-writing-machines)

An optical reading process starts by recognizing a written character by its form, and transforming it into its digital 'version'. This optical reading process could be compared to an encoding and decoding process (like Morse code), in the sense that the process could also be executed in reverse without changing the information. The translation process is a direct process.
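
A minimal sketch of such a direct, reversible process, using Morse code as the example. The table below is only a small subset of International Morse code (just the letters needed for the word 'language'), and the function names `encode` and `decode` are my own, chosen for illustration.

```python
# A small subset of International Morse code: only the letters
# needed to spell 'language' are included here.
MORSE = {
    "a": ".-",
    "e": ".",
    "g": "--.",
    "l": ".-..",
    "n": "-.",
    "u": "..-",
}

# The reverse table is derived mechanically from the forward table,
# which is exactly what makes the process 'direct'.
REVERSE = {code: letter for letter, code in MORSE.items()}


def encode(word):
    """Translate each letter into its Morse code, separated by spaces."""
    return " ".join(MORSE[letter] for letter in word)


def decode(signal):
    """Translate space-separated Morse codes back into letters."""
    return "".join(REVERSE[code] for code in signal.split(" "))


signal = encode("language")
print(signal)
print(decode(signal))
```

Because every letter maps to exactly one code and back, decoding the encoded word returns the same word: nothing is interpreted, added or lost along the way.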

But technologies like data mining process data less directly. Every result that has been 'read' by the algorithmic process is one version, one interpretation, one information process 'route' of the written text. Data mining can therefore not be called a 'read-only' process; it is better labeled as a 'reading-writing' process. Where does the data-mining process write?

tools (?)

the Pattern modules (pattern.en, pattern.vector, or pattern.graph);

technique (?)

Parsing, lemmatizing, TF-IDF (term frequency–inverse document frequency), KNN (k-nearest neighbours) and NB (naive Bayes) are all basic mining techniques, and are used in the broader field of data mining as well. But 'mining' and 'machine learning' are also techniques, which contain some of them. I also consider 'big data' a technique: although there is no fixed definition of the term, it is the technique of creating data from documents.
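
To make one of these techniques concrete, here is a minimal sketch of TF-IDF in plain Python. It is one common variant (relative term frequency multiplied by the natural logarithm of the inverse document frequency) and not necessarily the formula a particular package such as Pattern uses. The function name `tf_idf` and the three example sentences are assumptions made for illustration.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: score} dictionary per document.

    tf  = count of the term in the document / number of words in the document
    idf = log(number of documents / number of documents containing the term)
    """
    tokenized = [document.lower().split() for document in documents]
    n_documents = len(tokenized)

    # Document frequency: in how many documents does each term occur?
    document_frequency = Counter()
    for tokens in tokenized:
        document_frequency.update(set(tokens))

    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens))
            * math.log(n_documents / document_frequency[term])
            for term, count in counts.items()
        })
    return scores


# Three tiny example 'documents' (invented for this sketch).
documents = [
    "language is a tool",
    "language is information",
    "the alphabet is a tool",
]

for scores in tf_idf(documents):
    print(sorted(scores.items(), key=lambda item: item[1], reverse=True))
```

A word that occurs in every document (here 'is') gets a score of zero, while a word that occurs in only one document (like 'information') scores highest. The score is thus already an interpretation of the text: it depends on which other documents are in the collection.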

technology (?)

text mining as technology, a practice that includes more than only the code and scripts: i.e. the software development team, the applications of the software, the culture of writing software in a more general sense (hosting the package on GitHub, and a Google Group for feedback and discussions);

Technology can mean: the totality of the extant machines and apparatuses, merely as objects that are available or in operation. Technology can mean: the production of these objects, whose production is preceded by a project and calculation. Technology can also mean: the appertainment of what has been specified into one with the humans and the groups of humans who work in the construction, production, installation, service, and supervision of the whole system of machines and appliances. Yet, we do not experience what such broadly depicted technology really is with these pointers. [2]

data

(...)

document

(...)

workflow

(...)

data driven

(...) An example of a data-driven system is D3 (Data-Driven Documents), whose documents only 'happen' in the browser. D3 is therefore not ideal for publishing purposes.

document driven

(...) For example: MongoDB is called a document-oriented database, which probably refers to its flexibility (Mongo does not use relational tables of the kind we know from spreadsheets, for example). The term is confusing to me, though, as MongoDB doesn't 'take' document files at its elemental level.
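
A rough sketch of the difference, in plain Python and without using MongoDB itself. In MongoDB's terminology a 'document' is a record of field-value pairs, comparable to a JSON object, not a document file. The rows, field names and values below are hypothetical and only serve as illustration.

```python
import json

# Table-like data: every row has the same fixed columns (term, kind).
rows = [
    ("alphabet", "tool"),
    ("OCR-B", "font"),
]

# Document-like data: one record with nested and repeated fields.
# Another record in the same collection could have different fields.
document = {
    "term": "alphabet",
    "kind": "tool",
    "characters": 26,
    "related": ["writing", "systemization"],
    "reference": {"author": "James Gleick", "year": 2011},
}

# Written out as JSON text, the record can be stored and read back.
text = json.dumps(document, indent=2)
print(text)
print(json.loads(text) == document)
```

The 'document' here is a nested data structure, which is closer to a flexible record than to a text file one would write or publish.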

template

(...)

authoring

(...)

serialization

→ (for programmers:) a written form of something

→ to serialize = to put in order

Serialization is an in-between state, and therefore not a writing form. It contains the idea of semantically structured data, and of standardization, so that these structures can be used in between documents. Examples of serializations are XML, RDF and microdata.
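
A minimal sketch of this in-between state, using XML and Python's standard `xml.etree.ElementTree` module. The element names (`entry`, `term`, `definition`) and the helper functions `to_xml` and `from_xml` are assumptions made for this example, not part of any standard vocabulary.

```python
import xml.etree.ElementTree as ET

# A glossary entry as it lives inside a program.
entry = {
    "term": "serialization",
    "definition": "a written form of something",
}


def to_xml(entry):
    """Serialize the entry: put its fields in order, as XML text."""
    root = ET.Element("entry")
    for key, value in entry.items():
        ET.SubElement(root, key).text = value
    return ET.tostring(root, encoding="unicode")


def from_xml(text):
    """Read the XML text back into a dictionary."""
    root = ET.fromstring(text)
    return {child.tag: child.text for child in root}


serialized = to_xml(entry)
print(serialized)
print(from_xml(serialized) == entry)
```

The XML string is the in-between form: it is not meant to be read as a text, but it lets the same structure travel from one document or program to another.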

'mining'

references

- [1] James Gleick, The Information: A History, a Theory, a Flood (2011), via James Gleick's personal webpage.

- [2] Martin Heidegger, Überlieferte Sprache und Technische Sprache (1989), quoted from the English translation: Traditional Language and Technological Language (1999).