MedianStop/17.12.2024


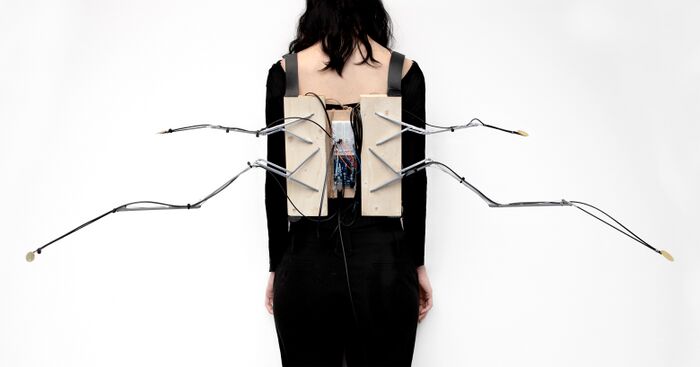


Revision as of 01:04, 17 December 2024

HEAD

This presentation is divided into two parts.

In the first part, I will introduce the projects I made in my first year and explain how they relate to and support my graduation project.

In the second part, I will present the concept of my graduation project, the references that inspired it, and the progress I’ve made so far.

ROOTS

Here are descriptions of some previous projects which have a direct relation to my graduation project:

SI22: Rain Receiver - a device that interacts with the weather/nature

The Rain Receiver project started with a simple question during a picnic: what if an umbrella could do more than just protect us from the rain? Imagined as a device to capture and convert rain into digital signals, the Rain Receiver is designed to archive nature's voice, capturing the interactions between humans and the natural world.

Inspiration for the project came from the "About Energy" skills classes, where I learned about ways to use natural energy—like wind power for flying kites. This got me thinking about how we could take signals from nature and use them to interact with our devices.

The Rain Receiver came together through experimenting with Max/MSP and Arduino. Using a piezo sensor to detect raindrops, I set it up to translate each drop into MIDI sounds. By sending these signals through Arduino to Max/MSP, I could automatically trigger the instruments and effects I had preset. Shifting away from an umbrella, I designed the Rain Receiver as a wearable backpack so people could experience it hands-free outdoors.
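As a rough illustration of the drop-to-MIDI step, the sketch below turns a single piezo reading into a MIDI note-on message. It is not the Rain Receiver's actual code, which ran on Arduino and Max/MSP. The threshold, the note number, and the 10-bit reading range are assumptions chosen for the example.

```python
# Hypothetical values: the threshold, note and 10-bit ADC scale are
# assumptions for illustration, not the Rain Receiver's real settings.
ADC_MAX = 1023          # a 10-bit analog reading spans 0..1023
HIT_THRESHOLD = 60      # readings below this are treated as noise
NOTE_ON = 0x90          # MIDI note-on status byte, channel 1


def drop_to_note_on(reading, note=60):
    """Return a 3-byte MIDI note-on for a raindrop hit, or None for noise."""
    if reading < HIT_THRESHOLD:
        return None
    reading = min(reading, ADC_MAX)
    # Scale the hit strength above the threshold to a velocity of 1..127.
    span = ADC_MAX - HIT_THRESHOLD
    velocity = 1 + (reading - HIT_THRESHOLD) * 126 // span
    return bytes([NOTE_ON, note, velocity])


if __name__ == "__main__":
    for reading in (12, 60, 500, 1023):
        print(reading, drop_to_note_on(reading))
```

A harder drop gives a higher velocity, so the strength of the rain shapes the dynamics of the triggered instrument.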

With help from Zuzu, we connected the receiver to a printer, creating symbols like "/" marks alongside apocalyptic-themed words to archive each raindrop as a kind of message. After fixing a few bugs, the Rain Receiver was showcased at WORM’s Apocalypse event, hinting at how this device could be a meaningful object in the future.

https://pzwiki.wdka.nl/mediadesign/Wang_SI22#Rain_Receiver

https://pzwiki.wdka.nl/mediadesign/Express_lane#Rain_receiver

SI23: Sound experimentation tools based on HTML

During my experiment with Tone.js, I found its sound effects were quite limited. This led me to explore other JavaScript libraries with a wider repertoire of sound capabilities, like Pizzicato.js. Pizzicato.js offers effects such as ping pong delay, fuzz, flanger, tremolo, and ring modulation, which allow for much more creative sound experimentation. Since everything is online, there’s no need to download software, and it also lets users experience sound with visual and interactive web elements.

Inspired by deconstructionism, I created a project that presents all these effects on one webpage, experimenting with ways to layer and combine them like a "sound quilt."

I also tried to make it playful, letting sound respond to play. One example is the "beer robber", a sequencer controlled by a flying helicopter, in which each beer level sets the pitch of a step.

To introduce the project and gather feedback, I hosted a workshop and a performance. I started a JavaScript Club to introduce sound-related JavaScript libraries and show people how to experiment with sound on the web. At the end of the workshop, in the form of an “Examination”, I gave out a zine hidden inside a pen called Script Partner. Each zine included unique code snippets for creating instruments or sound effects.

For the performance, I used an HTML interface I designed, displaying it on a large TV screen. The screen showed a wall of bricks, each brick linked to a frequency. By interacting with different bricks and sound effect sliders, the HTML could create a blend of sound and visuals, a strange, wobbly, and mysterious soundscape.

https://pzwiki.wdka.nl/mediadesign/Express_lane#Javasript

https://pzwiki.wdka.nl/mediadesign/JavaScriptClub/04

SI24: MIDI device

Rain Receiver is actually a MIDI controller, but one that uses only a sensor.

The basic logic of most MIDI controllers is to help users map their own values/parameters in the DAW, using potentiometers, sliders, buttons for sequencer steps (momentary), or buttons for notes (latching). The controls on these devices are usually laid out as a tight square grid of knobs, a common design for controllers intended for efficient control over parameters such as volume, pan, filters, and effects.

During a period of using pedals and MIDI controllers for performance, I organized most of my mappings into groups, with each group containing 3 or 4 parameters.

For example, the Echo group includes Dry/Wet, Time, and Pitch parameters, while the ARP group includes Frequency, Pitch, Fine, Send C, and Send D.

In such cases, a controller with a 3x8 grid of knobs is not suitable for mapping effects with 5 parameters, like the ARP. A tree-like or radial structure would be more suitable for the ARP's complex configuration.
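The mismatch between grouped parameters and a fixed grid can be sketched as a small data structure. The group names and parameters come from the text above. The CC numbers are hypothetical, and the check assumes the 3x8 grid is read as 8 columns of 3 knobs, with one group per column.

```python
# Groups taken from the text; the CC numbers assigned below are hypothetical.
GROUPS = {
    "Echo": ["Dry/Wet", "Time", "Pitch"],
    "ARP": ["Frequency", "Pitch", "Fine", "Send C", "Send D"],
}


def assign_cc(groups, first_cc=20):
    """Give every parameter its own CC number, keeping each group together."""
    mapping = {}
    cc = first_cc
    for group, params in groups.items():
        for param in params:
            mapping[(group, param)] = cc
            cc += 1
    return mapping


def fits_grid_column(groups, knobs_per_column=3):
    """Report which groups fit into a single column of the grid."""
    return {
        group: len(params) <= knobs_per_column
        for group, params in groups.items()
    }


if __name__ == "__main__":
    print(fits_grid_column(GROUPS))
    print(assign_cc(GROUPS))
```

In a tree-like layout, each group is a branch of any length, so a 5-parameter group like ARP does not have to be split across columns.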

Structures such as planets and satellites show how a large star can be radially mapped to an entire galaxy. A tiny satellite orbiting a planet may follow an elliptical path rather than a circular one, and comets move in irregular patterns. The logic behind all these phenomena is similar to the logic I use when producing music.

"Solar Beep" is the result of this search, built to elevate creative flow with a uniquely flexible design. It offers three modes—Mono, Sequencer, and Hold—alongside 8 knobs, a joystick, and a dual-pitch control for its 10-note button setup. With 22 LEDs providing real-time visual feedback, "Solar Beep" simplifies complex mappings and gives users an intuitive experience, balancing precision and adaptability for a more responsive and engaging production tool.

https://pzwiki.wdka.nl/mediadesign/Wang_SI24#Midi_Controller

Building Connections

From September to December, I have been trying to combine the work I made: I performed with my MIDI controller and HTML interface, and I hosted a JavaScript Club to share my sound experiments. I want my projects to stay connected to the idea of publications, but I also want to play with their form. For example, a publication hidden inside a pen, or a short 2-minute performance with a cocktail as its outcome. These ideas are helping me rethink the form and content of publications.

Performance at Klankschool (21.09)

At Klankschool, I performed using an HTML setup with sliders, each displaying a different image. Each brick represented a frequency, allowing multiple bricks to overlap pitches. I combined this with the Solar Beep MIDI controller I made.

The HTML setup has great potential, especially with MIDI controllers. For example, I mapped a MIDI controller to the bricks, using its hold function to toggle bricks or act as a sequencer. This combination saves effort by separating functions—letting a physical device handle sequencing instead of building it into the HTML.

JavaScript Club #4 (14.10)

During the JavaScript Club Session 4, I introduced JavaScript for sound, focusing on Tone.js and Pizzicato.js. I demonstrated examples of how I use these libraries to create sound experiments.

At the end, I provided a cheat pen with publications, each containing a script corresponding to a sound effect or tool.

https://pzwiki.wdka.nl/mediadesign/JavaScriptClub/04

Public Moment (04.11)

During the public event, the kitchen transformed into a Noise Kitchen. Using kitchen tools and a shaker, I made a non-alcoholic cocktail while a microphone overhead captured the sounds. These sounds were processed through my DAW to generate noise.

The event had four rounds of 2 minutes each, timed with the microwave, accommodating four participants. The outcome of each round was a non-alcoholic cocktail, made with ginger, lemon, apple juice, and milky oolong tea.

Colloquium Workshop (02.12)

https://pzwiki.wdka.nl/mediadesign/User:Wang_ziheng/BuildYourSoundB0x

Recycling is an interesting concept: it is about creatively using everyday tools and objects to form unique combinations.

For this workshop, I gathered items from daily life: tools, parts, door handles, lunch boxes, cans, egg beaters, hairpins, forks, chains, and more. Encouraging participants to bring their own materials was also a crucial element, as it created a diversity of possibilities.

To guide the process, I provided a selection of basic components, such as cutting boards, wooden boxes, jars, and iron cans. Initially, I considered preparing identical wooden boxes for each group, but I realized that the individuality of each finished product is a key part of this workshop's essence.

The workshop is also very relevant to my graduation project. Working with others to see these different combinations has been very inspiring for me.

GRADUATION PROJECT

In my past projects, I built a foundation for my current project by tackling technical challenges, gathering ideas, and doing relevant research.

- Rain Receiver was my first project, a wearable device that let sound interact with rain.

- Sound Quilt introduced me to web-based interaction using JavaScript and HTML.

- Solar Beep helped me gain hands-on experience with hardware development.

I want to combine the knowledge and ideas from all three projects to shape my graduation project.

For my graduation project, I want to build a device that captures natural dynamics, such as wind, tides, and direction, and converts them into either MIDI signals or oscillator sounds.

This device will connect the natural environment and digital sound/visual design, offering an experimental method for making sound and visual art.

By using sensors to detect wind or other natural elements, the device can control sound plugins or interact with web pages and software, providing a playful way to navigate sound and visuals.

By connecting directly to oscillators, it will generate dynamic, unstable sound forms, creating an experimental way of working with unpredictable natural elements.

This project connects to broader themes of environmental awareness and the fusion of technology with nature. By exploring the interplay between natural phenomena and digital soundscapes, it will utilize unstable elements from nature to engage with digital aspects. This could be an important way to enhance creativity in sound design.

I will build on my experiences with Special Issues 22–24, combining my technical skills and creative exploration from those projects. The process of researching, drafting, and debugging will support my development approach, allowing me to incorporate previous learnings into this new project and create a seamless integration of sound and nature.

Key question: How do we adapt to nature and transform the language of nature?

- Humans can accurately predict the regularity of tides and use the kinetic energy of waves.

- Humans use geomagnetism to determine direction and make compasses.

- Humans use wind energy to generate electricity and test wind direction and speed through different instruments.

We are constantly obtaining energy and dynamics from nature.

While we adapt to and use natural energy, we continue to produce and invent various machines to transform energy. This is a physical transformation of energy.

I want to apply this energy conversion method to this project to create an apparatus that controls digital devices, sounds, or web page interactions through these natural variables.

Pre-Research

In the first phase of the project, I did a lot of research on two topics: interaction with nature and data-based art. Examples include using algorithms to analyze the environmental values of trees and transform them into sound, or using the electricity generated by potatoes to produce sounds.

Moving Trees highlights the often-overlooked lives of trees by combining art and technology. Sensors installed in six significant trees in Arnhem reveal hidden data, such as trunk movements, CO2 and water flow, and photosynthesis activity. Wooden benches with QR codes provide access to soundscapes, visuals, and stories tied to the trees. This innovative installation transforms a simple walk into an opportunity to observe trees as dynamic, living beings, fostering a deeper connection with nature and its unseen processes.

https://arnhemsebomenvertellen.nl/T/uKFBL/data/7

https://arnhemsebomenvertellen.nl/

When I saw this project, the idea of using trees to generate sound based on data like temperature, light, and humidity seemed interesting, but I realized that the sounds created by this data didn’t really connect to the trees themselves. For example, using potatoes or plants to control sound may be an interesting way to change sounds, but it doesn’t actually reflect anything about the potato or plant. This made me question if using data to create sound can truly represent nature or if it just makes us aware of the difference between the natural world and the way we measure it.

As I thought more about the project, I wondered if the problem was with the way sound was being designed. It seems to lose the real connection to nature. For example, if there were field recordings of trees—like the sound of wind in the leaves—people might better connect the sounds to the trees. However, that would be a different approach since the main idea of the project is to show us things we can’t normally see, like changes in temperature or oxygen levels. I started to wonder if the sounds were intentionally not related to nature to make people think about how data can be different from real life, which is an interesting way of making people think.

This made me realize how hard sound design can be in projects like this. Turning data into sound that still feels like it’s connected to nature is tricky. Data is often invisible or hard to understand, so it’s not easy to create sounds that make people feel like they’re hearing something from nature. The sounds generated from temperature or oxygen levels don’t automatically make you think of trees or the outdoors. This made me think about how difficult it is to find the right balance—how to create sounds that still feel like they belong to nature, without just using obvious natural sounds like wind or birds.

Looking at this, I saw that my own project is a bit different. While both projects explore the relationship between nature and human interaction, my focus is not on representing nature directly through sound. Instead, I want to expand how people interact with things—moving from simple touches or clicks to more dynamic and unpredictable forms of interaction. The goal of my project isn’t to replicate or interpret nature through sound, but to explore new ways of interacting with the world. This allows for a more open and imaginative way of experiencing and exploring the environment.

Progress

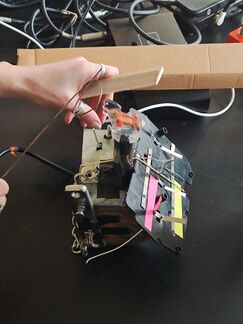

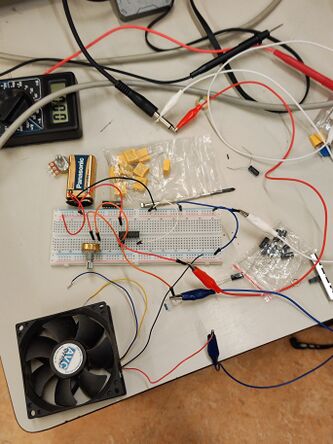

For the research of the graduation project, I think the work should start with sensors, which are also the most important part of the project. In the third semester (SI24), I solved most of the technical problems of MIDI.

Building a device is a very practical task, and every detail can lead development in a different direction. It is hard to determine at the start what I will make, because everything is constantly changing. This process involves continuous investigation, revision, reconstruction, and adjustment.

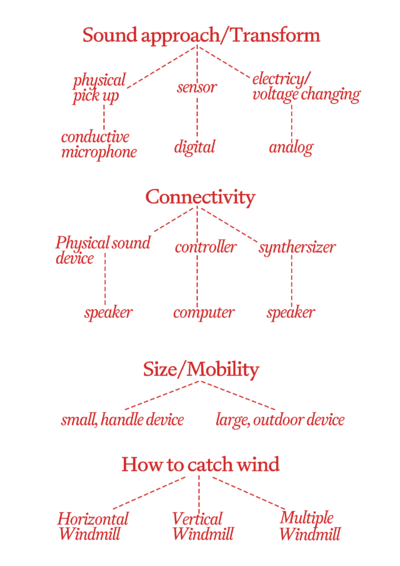

For example, during my research process with the wind device, I listed the main issues and challenges for building my project. I divided the project into four main parts and searched for references to support each part.

According to the protocol, it is important to decide the size and mobility of the device: whether it is handheld or wearable, using parts of the human body to create wind.

For each part, I will find relevant references to help me choose the most suitable approach.

Sound Approach/Transform

In terms of sound, I plan to try several experimental methods.

MIDI control via sensor data

A single MIDI device uses sensor data to control the corresponding software or parameters on the computer.
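A minimal sketch of this approach, assuming a 10-bit sensor reading and a hypothetical controller number (CC 20): the reading is smoothed, scaled to the 0..127 MIDI range, and a control-change message is produced only when the scaled value changes. The class name and the smoothing factor are illustrative, not part of an existing device.

```python
CONTROL_CHANGE = 0xB0   # MIDI control-change status byte, channel 1


def scale_to_midi(value, in_min=0, in_max=1023):
    """Clamp a raw reading and scale it linearly to 0..127."""
    value = max(in_min, min(in_max, value))
    return (value - in_min) * 127 // (in_max - in_min)


class SensorToCC:
    """Turn a stream of sensor readings into MIDI CC messages."""

    def __init__(self, controller=20, smoothing=0.5):
        self.controller = controller    # hypothetical CC number
        self.smoothing = smoothing      # weight of the newest reading
        self._smoothed = None
        self._last_sent = None

    def update(self, reading):
        """Return a 3-byte CC message if the value changed, else None."""
        if self._smoothed is None:
            self._smoothed = float(reading)
        else:
            a = self.smoothing
            self._smoothed = a * reading + (1 - a) * self._smoothed
        value = scale_to_midi(int(self._smoothed))
        if value == self._last_sent:
            return None
        self._last_sent = value
        return bytes([CONTROL_CHANGE, self.controller, value])


if __name__ == "__main__":
    wind = SensorToCC()
    for reading in (0, 200, 800, 800, 790):
        print(reading, wind.update(reading))
```

Sending only on change keeps an unstable sensor such as a wind vane from flooding the DAW with identical messages.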

Oscillator (OSC)-controlled waveform changes

An oscillator (OSC) device changes the waveform of the sound according to changes in the sensor's current.

In a simple prototype, I connected a CD40106 to a fan and a potentiometer. The potentiometer controls the frequency of the oscillator, and the rotation of the fan switches the oscillator on and off continuously.
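To see how a potentiometer can set the pitch of such an oscillator, the sketch below estimates the frequency of a Schmitt-trigger inverter wired as an RC relaxation oscillator. It assumes an ideal output that swings fully between 0 V and the supply voltage. The threshold voltages, capacitor, and resistor values are placeholder assumptions, not measurements from the prototype, and real thresholds vary from chip to chip.

```python
import math


def schmitt_oscillator_hz(r_ohms, c_farads, vdd=5.0, vt_plus=2.9, vt_minus=1.9):
    """Estimate the frequency of an RC Schmitt-trigger oscillator.

    The capacitor charges from vt_minus to vt_plus toward vdd, then
    discharges from vt_plus to vt_minus toward 0 V. The threshold values
    are assumed placeholders.
    """
    if not 0 < vt_minus < vt_plus < vdd:
        raise ValueError("thresholds must satisfy 0 < vt_minus < vt_plus < vdd")
    charge = math.log((vdd - vt_minus) / (vdd - vt_plus))
    discharge = math.log(vt_plus / vt_minus)
    period = r_ohms * c_farads * (charge + discharge)
    return 1.0 / period


def pot_to_hz(position, pot_max=100_000, r_fixed=1_000, c_farads=100e-9):
    """Frequency for a potentiometer position between 0.0 and 1.0."""
    position = max(0.0, min(1.0, position))
    return schmitt_oscillator_hz(r_fixed + position * pot_max, c_farads)


if __name__ == "__main__":
    for position in (0.0, 0.5, 1.0):
        print(position, round(pot_to_hz(position), 1), "Hz")
```

Turning the potentiometer up raises the resistance, which lengthens each charge cycle and lowers the pitch.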

Arduino-based digital sound device

Build a digital sound device in which the sensor drives sound generated digitally on the Arduino.

Physical pickups with electronic effects

Capture the original sound of physical vibrations with pickups such as piezo sensors, then add electronic components to create reverberation, distortion, and other effects.

https://folktek.com/collections/electro-acoustic/products/resonant-garden

The Resonant Garden is an electronic-acoustic hybrid instrument designed to create anything from beats and oddities to dense sound scapes.

The garden utilizes three Alter circuits each equipped with a mic pre-amp, and 4 sprout (stringed) panels for generating acoustics. By plucking, rubbing, tapping or even bowing, those micro-acoustic sounds become amplified and affected by the Alter in any number of ways. In essence, the garden is a large microphone designed to pick up vibrations.

Connectivity

Physical sound device

Digital Controller

Synthesizer

Size/Mobility

Small, handheld device

If it is a very small device that can be held in the hand, how does it connect to the computer? How does it stay connected while moving? If data is transmitted over WiFi, how do I solve the technical problems that come with it?

The Concertronica

http://www.crewdson.net/the-concertronica.html

https://www.vice.com/en/article/eccentric-handmade-instruments-marry-folk-tech-and-electronics/

The Concertronica is a controller instrument based around the design of a traditional Concertina. Each of the two ends has 10 momentary push buttons, whilst the ‘bellows’ action – rather than using air – uses strings on a pulley system that have been hacked out of some old gametrak playstation controllers. The 4 strings each give a distance measurement as well as an X/Y position, so 3 parameter readings for each string. The base of each string has a pair of RGB LEDs which makes for a really spacey light show when the instrument is being played! The analog signal is carried from one end to the other using a 26 D-sub connection which I have soldered a bespoke cable for, not something I would recommend doing to anyone else! The instrument is powered via a USB connection to an Arduino which is housed in one of the ends. I am using Max/Msp to convert the signal into midi which I am then sending to Ableton Live.

Mouth Factory

https://www.dezeen.com/2012/07/14/mouth-tools-by-cheng-guo/

A Wearable device - Mouth Factory by Cheng Guo

This piece features a small windmill mounted in front of the mouth, powered by the breath. As the user exhales, blows, or speaks, the airflow drives the windmill’s rotation, turning a basic bodily function into mechanical energy. The work playfully explores the intersection of the human body and machinery, reimagining the mouth as a tool for production and challenging traditional notions of labor and energy generation.

Large, outdoor device

Portability and usage scenarios: must it be used outdoors? If so, must it be connected to a computer? And could a storm carry the device away? This reminds me of an important challenge with the Rain Receiver: could it be struck by lightning when worn in a rainstorm? In a sense, the Rain Receiver looks like a lightning rod.

https://www.designboom.com/art/taiyi-yu-windmill-beach-rotates-sand-wip-in-play-10-16-2023/

Taiyi Yu’s inspiration for W.I.P. struck him upon arriving in the Netherlands, gathering an appreciation for the country’s robust winds and iconic windmills. ‘How have winds been industrialized?’ the designer questioned. This thought became the foundation of his research in which he explored the historical and contemporary appropriation of winds through the lenses of modernity and coloniality.

The windmill, both a concrete and metaphorical symbol of industrialization, underscores the dichotomy between humans and nature. It embodies a mechanistic worldview that views the natural world as a resource, shaping human-nature relationships, influencing daily life, and promoting resource exploitation through functional objects and infrastructure. Through a playful manipulation of this machine, the installation prompts a reflection on whether we can move away from the objectification of winds to foster a renewed and more harmonious relationship with the natural world.

How to catch the wind

When it comes to capturing wind energy, the first thing that comes to my mind is the windmill, which can be used to generate electricity, manage water, and serve other purposes. Looking at devices for measuring wind speed, I found two types of rotor: one horizontal and the other vertical. Both need to be mounted on a rod, but the orientation is decisive for my design, because it determines whether my device is flat or tower-shaped.

Horizontal Windmill

Vertical Windmill

Multiple Windmill

I investigated how wind speed can be used to change values. There are two reasonable ways. One is to connect a motor, so that the rotation of the fan changes the motor's current. The other is to install a Hall effect sensor and a magnet, so that the sensor registers every full rotation of the fan.

The fan can also be made from different materials, such as metal, wood, cloth, and paper.

During further research, I realized that what limits creativity is the fan itself. There is more room for creativity in the angle of the blades: each blade can have a different shape, material, and orientation. A whole fan can only affect/control one parameter. If I separate the blades, each blade can control its own parameter, which creates more randomness.
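The Hall effect approach and the idea of one parameter per blade can be sketched together. The example assumes each separated blade (or small rotor) has its own magnet and sensor, and that pulses are counted over a fixed time window. The CC numbers and the maximum RPM are hypothetical values for illustration.

```python
def rpm_from_pulses(pulse_count, window_seconds, magnets_per_turn=1):
    """Convert Hall sensor pulses counted in a time window to RPM."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    turns = pulse_count / magnets_per_turn
    return turns * 60.0 / window_seconds


def rpm_to_midi(rpm, max_rpm=600.0):
    """Scale RPM linearly to a MIDI value of 0..127, clamping at max_rpm."""
    rpm = max(0.0, min(max_rpm, rpm))
    return int(rpm * 127 / max_rpm)


def rotors_to_cc(pulse_counts, window_seconds, first_cc=20):
    """Map each rotor's pulse count to its own CC number and value."""
    return {
        first_cc + index: rpm_to_midi(rpm_from_pulses(count, window_seconds))
        for index, count in enumerate(pulse_counts)
    }


if __name__ == "__main__":
    # Three rotors counted over the same one-second window.
    print(rotors_to_cc([0, 5, 10], window_seconds=1.0))
```

Because blades with different shapes and materials catch the wind differently, each CC value would drift on its own, which is where the extra randomness comes from.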

Ten Rotors With Malachite

Ten Rotors With Malachite by George Rickey (1907–2002)

This kinetic sculpture by George Rickey features ten rotors mounted in a circular formation, each tipped with malachite. The sculpture is powered by natural air currents, with the rotors rotating gracefully in response to the breeze. As they spin, they catch and reflect light, creating shimmering patterns and transforming the environment. Rickey’s work explores movement and the interplay between sculpture, space, and light, using wind as a medium to animate the piece.

Wind-Driven Drawing Machine

Wind-Driven Drawing Machine (2007) by Cheng-Guo

https://concretewheels.com/drawingmachines/sculpture_wddm.htm

The Wind-Driven Drawing Machine is constructed using found objects like wire and sardine tins, assembled to create a device that responds to wind currents. The machine includes lightweight components that move in response to the breeze, causing the sardine tins to swing or rotate. As they move, they trace marks on the surface below, creating drawings through the gentle force of the wind. This method turns natural wind energy into a simple yet dynamic artistic expression, highlighting the unpredictability and beauty of nature’s influence on creativity.