User:Vitrinekast: Difference between revisions


* Aymeric talk about permacomputing

* Look mum no computer @paradiso

* Deleted visual studio code from my laptop, bye microsoft and bloated software!

* XPUB SI23 Launch: TL;DR

Spoke to Michael about the actual publicness of log files. To me, they seemed quite public, but as we use sudo galore in the classroom, I tend to forget that admin rights are not for everybody, which makes this quite an extraordinary publication. I’m similarly hesitant about publishing alllll of this text on the wiki, as it will be visible as soon as I’ve added it, and if I make a silly grammar mistake, it will forever be part of the wiki’s changelog. There is a reason why hardly any of my projects are actually open source. Since Microsoft took over GitHub and private repos became free, all my repos are private. I see code as a method of communication, as a way of making, not as a publication of its own. Especially the final bits and pieces of a project tend to get ugly, and I would not like future employers/collaborators/clients to be able to access these wrongly indented quick fixes.</li></ul> | |||

</li></ul> | </li></ul> | ||

<div class="toccolours mw-collapsible mw-collapsed" style="margin-left: 5rem"> | |||

'''Transclude from Peripheral Centers and Feminist Servers/TL;DR''' | |||

<div class="mw-collapsible-content"> | |||

{{:Peripheral Centers and Feminist Servers/TL;DR}} | |||

</div> | |||

</div> | |||

<span id="april"></span> | <span id="april"></span> | ||

Revision as of 09:37, 2 April 2024

pandoc --from markdown --to mediawiki -o ~/Desktop/{{file_name}} {{file_path:absolute}}

Overall, I think I can reduce the things I’m working with to the following verbs (or ’ings):

- HTML’ing

- Repair’ing (Or break’ing)

- Sound’ing

- Radio’ing

- Solder’ing

- Workshop’ing

- developing the interest in Permacomput’ing

- Server’ing

XPUB Changelog

September

- Start XPUB with a read-through of the manual

- SI22 is about radio, transmission

(Not to be confused with my best experience of the summer, Touki Delphine’s transmission (reference). Their work, a 20-minute audio/light experience performed by car parts, was mesmerising and super inspiring, as I am still trying to figure out ways of performing without actually being there.)

- Prototyping: making graphs with graphviz, blast from the past with installing chiplotle.

I used the thermal printer/plotter through chiplotle to create my letter for joining XPUB. At the time, installing chiplotle was such a drag that I wasn’t very motivated to redo it on this new machine. But it turns out it was way easier than I remember it being the first time!

The topics discussed by Ola Bonati were very recognisable, and something I’d love to explore further. The future of software is Nintendo DS! (Reference) Not very interested in the demoscene, in all honesty. Their response to the question “How is this related to permacomputing?” was “small file sizes”, and this contest of creating the most efficient line of code is not that appealing to me.

October

- Zine camp

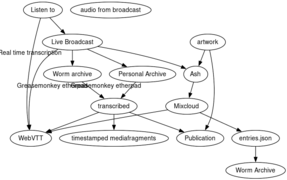

Earlier I made a node-based representation of the worm archive, on which Manetta gave the very good feedback of asking what it was other than a fancy visual (not exactly those words!), and she was very right about this. I just wanted to play around with the file structure. But the basis of this was (going to be) used during the presentation at Zine Camp. The screenshot below shows the updated version, which includes loads of metadata extracted from the files in the archive. Unfortunately, due to some personal things, I wasn’t able to join the presentation.

Transcluded wiki page of Granularchive

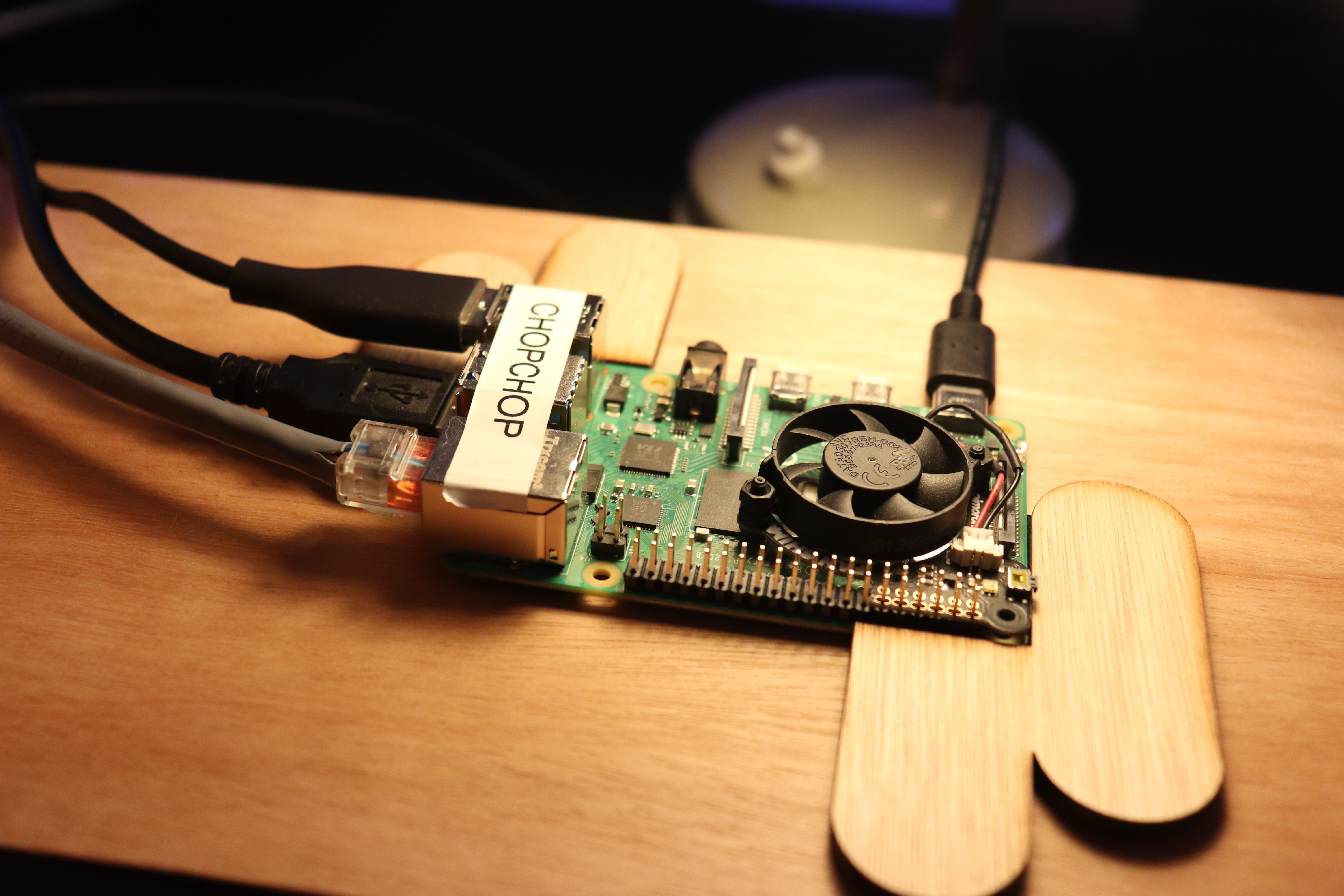

The Granularchive is a proposal to use as a tool during the presentation & radio broadcast of Zine Camp 2023, part of the Radio Worm: Protocols for an Active Archive Special Issue. Currently, the Granularchive can be visited via ChopChop.

The Granularchive previews all contents of an archive by making use of exiftool (which is already installed on ChopChop). The content is displayed by translating a JSON file into an interactive SVG graph using D3.js, an open-source JavaScript library.

Updating the Granularchive

- Upload new contents to the Granularchive folder on ChopChop via `/var/www/html/archive_non-tree` (→ name to be updated)

- Within this folder, run `exiftool -json -r . > exiftool.json`, which will generate exiftool.json

- Make sure this exiftool.json is placed at the root of `/var/www/archive_non-tree`, and overwrite the existing one. Now the tool is updated!

An extra step will be needed to also include all of the wordhole content into the granularchive

Example of exiftool.json

The data displayed below can be used to change the way the Granularchive is presented.

{

"SourceFile": "./hoi/Folder1/Radio_Show_2008_3.mp3",

"ExifToolVersion": 12.5,

"FileName": "Radio_Show_2008_3.mp3",

"Directory": "./hoi/Folder1",

"FileSize": "0 bytes",

"FileModifyDate": "2023:10:28 23:16:26+02:00",

"FileAccessDate": "2023:10:30 08:39:14+01:00",

"FileInodeChangeDate": "2023:10:28 23:17:20+02:00",

"FilePermissions": "-rw-r--r--",

"Error": "File is empty"

},
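The page does not show how the Granularchive turns exiftool.json into a graph, so the following is only a sketch of one possible step: grouping the flat exiftool records into a nested tree based on their `SourceFile` path. The function name `buildTree`, the `meta` property and the second record are made up for illustration; the `{ name, children }` shape is the one `d3.hierarchy()` reads by default.

```javascript
// Hypothetical helper: turn flat exiftool records into a nested tree
// of { name, children } nodes, one node per folder or file.
function buildTree(records) {
  const root = { name: ".", children: [] };
  for (const record of records) {
    // "SourceFile" is a relative path such as "./hoi/Folder1/show.mp3".
    const parts = record.SourceFile.split("/").filter((p) => p && p !== ".");
    let node = root;
    parts.forEach((part, index) => {
      let child = node.children.find((c) => c.name === part);
      if (!child) {
        child = { name: part, children: [] };
        node.children.push(child);
      }
      // The last path segment is the file itself: keep its metadata there.
      if (index === parts.length - 1) {
        child.meta = record;
      }
      node = child;
    });
  }
  return root;
}

// The first record is shortened from the example above,
// the second one is invented to show two files sharing a folder.
const tree = buildTree([
  { SourceFile: "./hoi/Folder1/Radio_Show_2008_3.mp3", FileSize: "0 bytes" },
  { SourceFile: "./hoi/Folder1/Radio_Show_2008_4.mp3", FileSize: "12 MB" },
]);
console.log(JSON.stringify(tree, null, 2));
```

Any of the other exiftool fields (such as `FileModifyDate` or `Error`) stay available on `meta`, so they could be used to change the way a node is drawn.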

- Created a mixer for Mixcloud (Wiki documentation).

Control the audio by moving the cursor. The snippet can be pasted into the console of a browser. I really enjoy this method of circuit bending existing webpages.

Transcluded wiki page of ReMixCloud

Copy-paste this into your console! (Right click -> inspect elements > console)

Remix Mixcloud

(copy paste it into the console of your browser when visiting mixcloud.com)

var remixCloud = function () {
  // Grab the player and the page elements that will be distorted.
  var audio = document.querySelector("audio");
  var svgs = document.querySelectorAll("svg");
  var h1s = document.querySelectorAll("h1, h2, h3, button, img");
  var as = document.querySelectorAll("a");
  var timeout;

  // Nothing to remix when the page has no audio element (yet).
  if (!audio) {
    return;
  }

  document.addEventListener("mousemove", function (e) {
    // Throttle: react to at most one mouse move every 100 ms.
    if (!timeout) {
      timeout = window.setTimeout(function () {
        timeout = false;
        // Vertical position sets the playback rate (0.25 at the top, 3.25 at the bottom).
        var r = 3 * (e.clientY / window.innerHeight) + 0.25;
        audio.playbackRate = r;
        // Horizontal position scrubs through the track, once its duration is known.
        if (isFinite(audio.duration)) {
          audio.currentTime = audio.duration * (e.clientX / window.innerWidth);
        }
        svgs.forEach((el) => {
          el.style.transform = `scale(${r})`;
        });
        as.forEach((el) => {
          el.style.transform = `rotate(${r * 20}deg)`;
        });
        document.body.style.transform = `rotate(${r * -4}deg)`;
        h1s.forEach((el) => {
          el.style.transform = `rotate(${r * 10}deg)`;
        });
      }, 100);
    }
  });
};

remixCloud();

Adding audio elements to index files

// select all links
document.querySelectorAll("a").forEach((link) => {
  // if it includes your audio format (regex)
  if (link.href.match(/\.(?:wav|mp3)$/i)) {
    // create an audio element and set its source to your link
    var audioEl = document.createElement("audio");
    audioEl.src = link.href;
    audioEl.controls = true;
    audioEl.style.display = "block";
    // place the player directly after the link
    link.insertAdjacentElement("afterend", audioEl);
  }
  // or an image
  if (link.href.match(/\.(?:jpg|png)$/i)) {
    var imgEl = document.createElement("img");
    imgEl.src = link.href;
    imgEl.style.display = "block";
    link.insertAdjacentElement("afterend", imgEl);
  }
});
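One thing to watch with the regex above: it is anchored to the end of the full `href`, so a link with a query string or a fragment (for example `show.mp3#t=1,2`) would not match. A possible variation, sketched below, looks only at the path of the URL. The function name `mediaKind` and the example.org links are made up for illustration, and the base URL is only there so that relative links can be parsed.

```javascript
// Hypothetical helper: decide what kind of preview a link deserves,
// based on the file extension in the path part of its URL.
function mediaKind(href) {
  // Parsing the URL keeps "?..." and "#..." out of the extension check.
  const pathname = new URL(href, "http://localhost/").pathname;
  if (/\.(?:wav|mp3)$/i.test(pathname)) return "audio";
  if (/\.(?:jpg|png)$/i.test(pathname)) return "image";
  return null;
}

console.log(mediaKind("https://example.org/archive/show.MP3"));
console.log(mediaKind("https://example.org/archive/show.mp3#t=1,2"));
console.log(mediaKind("https://example.org/archive/notes.txt"));
```

In the console snippet, `mediaKind(link.href)` could replace the two `match` calls.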

- Experimented with recording my found German wall clock. Not a lot of luck yet using pickups, but later I discovered that piezo discs could be something, and that I should take a look at the open source schematics around using piezos as triggers.

- Tried out SuperCollider

- Dealt with the broken laptop and spent way too much time in an apple store.

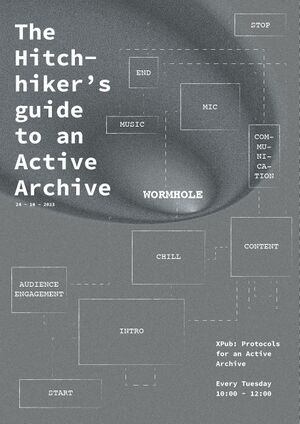

- Created The Hitchhiker’s Guide to an Active Archive! At Radio Worm! Almost forgot about the fact that I originally joined Fine Arts to make games. Oh how the times change.

Transcluded wiki page of The Hitchhiker’s Guide to an Active Archive

The Hitchhiker's Guide to an Active Archive is the sixth radio show (including the Holiday Special) of the radio program XPUB: Protocols for an Active Archive on Radio Worm. The show aired on 2023 - 10 - 24 and was part of Special Issue 22.

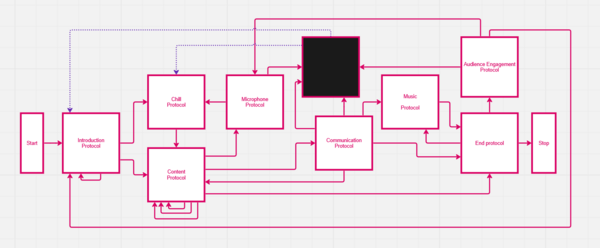

Flowchart

A recording of the broadcast

Links

EtherPad for communicating with the Caretakers during the show: https://pad.xpub.nl/p/SI22-shownotes-week5

How this Wiki was used during the show

The Hitchhiker's Guide to an Active Archive was a radio show broadcast on Radio Worm on 2023-10-24. It is an interactive radio play that was performed live by XPUB students Rosa, Anita and Thijs. They each voiced one of the three voices present in the script. The show could be recreated using these wiki pages and a fresh channel of communication (e.g. another EtherPad). Click 'START BROADCAST' to enter the beginning of the script. It is not linear: most sections allow for several follow-ups, depending on audience decisions, dice rolls, time requirements and performer intuition. The overall structure is captured in the flowchart above. Throughout the script, clickable links are placed where the narrative branches. Sound effects, audio fragments and musical clips are also inserted in the script at the place they would be played (often accompanied by an instruction).

References, inspiration and further reading / playing / listening

This show was made in response to / inspired by several works, some of which are presented below.

- The Hitchhiker's Guide to the Galaxy: a 1978 BBC radio play written by Douglas Adams, that was later adapted into books and a movie.

- Georges Perec, his works Die Maschine and The Art of Asking Your Boss for a Raise, and this text on these works by Hannah Higgins (chapter 2 of Mainframe Experimentalism)

Also, here are some follow-up links if this show resonated with you and you'd like to experience more choose your own adventure adventures, branching narratives, etc. These were also part of our conversations when preparing the show.

- Her Story: an interactive film video game in which the player sorts through video clips from a set of fictional police interviews to solve the case of a missing man.

- Heaven's Vault: a scifi branching narrative game about an archeologist deciphering an ancient language.

- In class we also briefly discussed text adventure games -- and CYOA books -- like 1976's Colossal Cave Adventure and 1977's ZORK. These then also evolved into MUDs (Multi User Dungeons), which in turn evolved into a plethora of other video game genres.

November

- Experimenting with the media fragments API after a recommendation of Michael.

Using metadata coming from the worm archive.

Transcluded wiki page of the media fragments API tests

This page looked so much better in markdown...

Example of a media fragment URI: http://www.example.org/video.ogv?t=60,100#t=20

Text below is from the Media Fragments URI 1.0 (basic)

The names and values can be arbitrary Unicode strings, encoded in UTF-8 and percent-encoded as per RFC 3986. Here are some examples of URIs with name-value pairs in the fragment component, to demonstrate the general structure:

- http://www.example.com/example.ogv#t=10,20

- http://www.example.com/example.ogv#track=audio&t=10,20

- http://www.example.com/example.ogv#id=Cap%C3%ADtulo%202

Media fragment URIs can be used on video, images, and audio(?) → although the spec seems to mention video only(?), but what is a video anyway...
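To get a feeling for the name-value structure of these fragments, here is a minimal sketch that splits the part after `#` into pairs and percent-decodes them. It is not a full implementation of the spec: the function names are made up, and `parseTimeRange` only understands plain seconds (no `npt:` prefix, no `hh:mm:ss`).

```javascript
// Hypothetical helper: read the name-value pairs from the fragment of a URI.
function parseMediaFragment(uri) {
  const result = {};
  const hashIndex = uri.indexOf("#");
  if (hashIndex === -1) return result;
  for (const pair of uri.slice(hashIndex + 1).split("&")) {
    const eq = pair.indexOf("=");
    if (eq === -1) continue; // skip anything that is not name=value
    const name = decodeURIComponent(pair.slice(0, eq));
    const value = decodeURIComponent(pair.slice(eq + 1));
    result[name] = value;
  }
  return result;
}

// Hypothetical helper: turn "10,20" into numbers. Plain seconds only.
function parseTimeRange(value) {
  const [start, end] = value.split(",");
  return {
    start: start === "" ? 0 : Number(start),
    end: end === undefined ? null : Number(end),
  };
}

const fragment = parseMediaFragment(
  "http://www.example.com/example.ogv#track=audio&t=10,20"
);
console.log(fragment.track, parseTimeRange(fragment.t));
console.log(
  parseMediaFragment("http://www.example.com/example.ogv#id=Cap%C3%ADtulo%202").id
);
```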

Used on image

The following URL should show a cropped image, but it does not appear to work on Chrome. http://placekitten.com/500#xywh=percent:5,25,50,50

https://caniuse.com mentions that all browsers that have implemented something of this spec, have actually only implemented support for the #t=n,n control for selecting a range of video, and possibly track=(name) & id=(name).
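Since the browser does not do the cropping, it helps to work out by hand what `xywh=percent:5,25,50,50` is supposed to select. The sketch below converts an `xywh` value into a pixel rectangle. The function name is made up, and the 500 by 500 image size is an assumption about what the placekitten URL returns.

```javascript
// Hypothetical helper: convert an xywh fragment value into a pixel rectangle.
// The unit prefix is optional in the spec and defaults to "pixel".
function xywhToPixels(value, imageWidth, imageHeight) {
  const match = /^(?:(pixel|percent):)?(\d+),(\d+),(\d+),(\d+)$/.exec(value);
  if (!match) return null;
  const unit = match[1] || "pixel";
  const [x, y, w, h] = match.slice(2).map(Number);
  if (unit === "pixel") {
    return { x: x, y: y, width: w, height: h };
  }
  // Percentages are relative to the width (x, w) and the height (y, h).
  return {
    x: Math.round((x / 100) * imageWidth),
    y: Math.round((y / 100) * imageHeight),
    width: Math.round((w / 100) * imageWidth),
    height: Math.round((h / 100) * imageHeight),
  };
}

// Assuming a 500 by 500 pixel image:
console.log(xywhToPixels("percent:5,25,50,50", 500, 500));
// prints { x: 25, y: 125, width: 250, height: 250 }
```

A rectangle like this could be applied with CSS or a canvas as a fallback while browsers ignore the spatial dimension.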

Used in Audio!

I've uploaded some files to chopchop to test with

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.3gp

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.flv

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.mp4

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.ogv

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.webm

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/song.mp3

- https://hub.xpub.nl/chopchop/~vitrinekast/videos/song.flac

The following URL should only play the video from 00:01 to 00:02!

https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.ogv#t=1,2

And... for mp3 it seems to work as well!!

https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.mp3#t=1,2

However, when inspecting the request, it does seem to load the full file, so the fragment does not reduce the amount of data being downloaded.

However 2: when throttling the request to Slow 3G, only 277 bytes are downloaded according to the network tab. Something to investigate further.

- Check with Charles to see if the number of bytes is actually correct, as a reduced number of bytes downloaded could be a positive result of using this to interact with an archive

- However, in terms of radio, m3u8 files are already broadcast in "blobs" → so maybe this is more relevant to look into

Further checking and reading the spec

A glossary from the spec

Media fragments support addressing the media along two dimensions (in the basic version):

temporal This dimension denotes a specific time range in the original media, such as "starting at second 10, continuing until second 20";

spatial this dimension denotes a specific range of pixels in the original media, such as "a rectangle with size (100,100) with its top-left at coordinate (10,10)";

Media fragments support also addressing the media along two additional dimensions (in the advanced version defined in [Media Fragments 1.0 URI (advanced)](https://www.w3.org/TR/media-frags/#mf-advanced)):

track this dimension denotes one or more tracks in the original media, such as "the english audio and the video track";

id this dimension denotes a named temporal fragment within the original media, such as "chapter 2", and can be seen as a convenient way of specifying a temporal fragment.

- Spatial Dimension does not seem to work in chrome → clipping the video frame

- I'm not entirely sure yet what id/track should do, and also how to set this metadata, if it is even metadata?

- I tested https://hub.xpub.nl/chopchop/~vitrinekast/videos/small.mp4#track=audio but this still loads the full video instead of only the audio track. That could be great otherwise for the archive.

next steps

- Further understand the Media Fragments URI spec and what its current state is

- Read about [OggKate - XiphWiki](https://wiki.xiph.org/OggKate) an open source codec which could be used for Karaoke, but essentially allows for attaching time based metadata (see example below)

event {

00:18:30,000 --> 00:22:50,000

meta "GEO_LOCATION" = "35.42; 139.42"

meta "DATE" = "2011-08-12"

}
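To connect this back to the media fragments above: the time range of such an event could, in principle, be rewritten as a `#t=` fragment. The sketch below only handles the timestamp format shown in the example (`hh:mm:ss,mmm`), and the function names are made up. It is an illustration, not something OggKate provides.

```javascript
// Hypothetical helper: "00:18:30,000" -> 1110 (seconds).
function timestampToSeconds(stamp) {
  const match = /^(\d+):(\d{2}):(\d{2}),(\d{3})$/.exec(stamp);
  if (!match) throw new Error(`Unrecognised timestamp: ${stamp}`);
  const [hours, minutes, seconds, millis] = match.slice(1).map(Number);
  return hours * 3600 + minutes * 60 + seconds + millis / 1000;
}

// Hypothetical helper: "start --> end" -> "#t=start,end" in seconds.
function rangeToFragment(range) {
  const [start, end] = range
    .split("-->")
    .map((part) => timestampToSeconds(part.trim()));
  return `#t=${start},${end}`;
}

console.log(rangeToFragment("00:18:30,000 --> 00:22:50,000"));
// prints "#t=1110,1370"
```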

- this2that:

Used graphviz together with riviera to figure out some small tools we could make for the worm archive.

- Machine voice workshop by Ahnjili ZhuParris

Super interesting workshop, enjoyed the usage of these codelabs as a method of (self-)documenting the code. I am a bit hesitant to experiment with tools like machine learning, AI, etc., as I personally find that they use too much computing power to justify my own experimentation (but I also know too little about this to make any actual statements). It does tickle my beatboxing bone: I might want to use my own voice more as a source sound in all of the sound device making.

December

- Hosted workshop Circuit Bending at Grafisch Lyceum

Grafisch Lyceum wants to make their Software Development MBO more “creative”, and therefore organises inspiration sessions to generate new ideas for courses in the teaching department. During the workshop, I shared a bit about my practice, but we mostly spent time doing some bending! I was pleasantly surprised by how much we got done, and how much fun it is to play your instrument over a big speaker.

- attended Workshop Permacomputing @ Fiber

A 2 day workshop called Networking with Nature | Connecting plants and second-hand electronics hosted by Michal Klodner & Brendan Howell. Super interesting workshop with very interesting and diverse attendees. For instance, there was one person that researched getting electricity out of mud.

- SI22: Listen Closely

Ultimately, the project didn’t fully work. But it almost did, and I think that already counts. It also resulted in my first XPUB all-nighter, where we took ChopChop and all of the audio interfaces home to continue working after the WH building closed. Together with the Factory we soldered together many radios, which would be used by visitors to listen to the various radio streams. If I were to create this project again, I think I wouldn’t use SuperCollider, mostly because I’m not familiar with it. And, more importantly, I would prepare a few more fallbacks. But YOLO.

Transcluded wiki page of Listen closely

Positioned around the space are FM radio transmitters broadcasting content from Special Issue #22. These were soldered together in the studio. The transmitters are connected to an audio interface which is itself connected to a Raspberry Pi. On the Pi, Open Sound Control (OSC) messages are sent back and forth between a Python application and Supercollider, an open-source, sound synthesis engine. OSC is a protocol which aims to standardise communication between audio applications.
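For a sense of what travels between the two applications, here is a minimal sketch of how a single OSC message is laid out as bytes: an address string, a type tag string and the arguments, each padded to a multiple of four bytes. The installation itself used Python and SuperCollider; this sketch is written in JavaScript (Node.js) purely as an illustration, and the address `/gain` and its value are made up, as the page does not list the actual messages.

```javascript
// OSC strings are ASCII, null-terminated and padded with nulls
// until their length is a multiple of 4 bytes.
function oscString(text) {
  const raw = Buffer.from(text, "ascii");
  const paddedLength = Math.ceil((raw.length + 1) / 4) * 4;
  const out = Buffer.alloc(paddedLength); // zero-filled
  raw.copy(out);
  return out;
}

// Hypothetical helper: build an OSC message carrying one 32-bit float.
function oscFloatMessage(address, value) {
  const argument = Buffer.alloc(4);
  argument.writeFloatBE(value, 0); // OSC numbers are big-endian
  return Buffer.concat([
    oscString(address), // e.g. "/gain"
    oscString(",f"), // type tag string: one float argument
    argument,
  ]);
}

const message = oscFloatMessage("/gain", 0.5);
console.log(message.length, message.toString("hex"));
```

A buffer like this is what would be sent over UDP to the port the receiving application listens on.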

Radio waves are agents for public commons. Resonating through communities tuning people together. It can reach far beyond where any human will ever go. By organizing multiple FM transmitters in a confined space and tuning them to the same frequency, an isolated ecosphere of electromagnetic waves is induced. To receive the hymn of the FM signals, equip yourself with a receiver. Let your movement in space be the interface in which you engage with the signals, and experience the proximity of the radio. So take your time, and listen closely.

- Started development of the web comic!

Together with Nik, we’ve started translating their comic “Writing down good reasons to freeze to death” to the web. The plan at the moment is to divide the comic into multiple chapters. Readers can sign up for a mailing list and will receive a new chapter every week(ish), to make it more of a slow-paced experience. We’re very inspired by the games Florence and What Remains of Edith Finch. We’ll be using sprite-based animations, audio and low-key gamification elements to tell the story. But it’ll take a long time before this is finished.

- Took the leap and, after being advised by someone at operator not to, decided to start my eurorack path.

January

- Joined the first JS club about jQuery

- Saw Soulwax in Paradiso. Who knew 3 drummers were better than one.

- Battle of the node_modules, inner debates around frameworks

- Using Pure data to create a granular synthesizer, and investigating further on how to do this on a raspberry Pi. I think it’s great to make use of the tools i’m already familiar with, such as pure data, instead of deep-diving in a new technique every three months.

- Made my first eurorack module from a kit, it doesn’t work. :(

- First attempt of installing PostmarketOS on an Android phone

The ongoing headache of getting an android phone to work as a webserver

This document follows the process of me trying to figure out how to set up a web server on various devices. Please note that this was really not a seamless experience, and every step of the way is just me trying things (over and over again). It’s more written like an ongoing conversation with myself to keep track of things.

It’s a Sony Xperia z5, and I’d like it to work as a webserver, as I already had it lying around and it would be an interesting alternative to a non-portable-server.

- Install documentation of postmarketOS Installation - postmarketOS

- I have to use pmbootstrap in order to install postmarketOS on the android phone

- I need linux in order to install it, so first installing linux through UTM on my macbook

No luck yet with installing, as the postmarketOS documentation is a bit all over the place. But I did find an article about running a webserver on the android device, which might be interesting: How to host a Static Website on your Android Phone

- install F-Droid

- install termux

- enable ssh

Unlock the bootloader via the Sony website instructions

Steps taken:

- check if bootloader is possible on the device

- In your device, open the dialer and enter `*#*#7378423#*#*` to access the service menu.

- Tap Service info > Configuration > Rooting Status. If Bootloader unlock allowed says Yes, then you can continue with the next step. If it says No, or if the status is missing, your device cannot be unlocked.

- Download the [platform tools](https://developer.android.com/tools/releases/platform-tools)

- enable USB debugging and the developer tools on the android device

- figure out the IMEI, needed to get the unlock code, by dialling `*#06#`

354187075829298

- fill in that number in the unlock code generator

E43DC85FEDAC79C5

- connect to fastboot

- turn off device

- press volume up on device

- keep it and plug into usb

- in the extracted platform tools folder, run `./fastboot devices`

- verify that there are no errors

- enter unlock key

now i ran

pmbootstrap flasher flash_rootfs
pmbootstrap flasher flash_kernel

Now it should work, but it is in a startup loop so i tried again

$ pmbootstrap build linux-sony-sumire
$ pmbootstrap build device-sony-sumire
$ pmbootstrap install --android-recovery-zip
$ pmbootstrap flasher --method=adb sideload

hmm. i did some undocumented things, to get out of the loop. ultimately i ran:

$ pmbootstrap initfs hook_add maximum-attention
$ pmbootstrap flasher boot

which took it into a loop, as documented in the wiki. So I removed the hook, and now it is loading postmarketOS for a little while, stating on the device’s screen that it’s resizing the partition. Now it’s loading…

screen is stuck… restarting the device

and its back in the sony loop

I’ve tried all of the above 2x, but keep getting the same result… This issue is documented on the postmarketOS wiki, so it might be unusable, unfortunately.

February

- JS club about Vue

- Read loads about DIY culture from the Bootleg Library

- Oud-Charlois PCB-Dérive workshop at Varia

- Continued investigation on how to install linux (or other webservers) on ipads, android phones and my old macbook. Only took 2 months to get the first linux installation going. Then discovered; what is the point of running a webserver if it’s just for yourself?

The ongoing headache of getting an android phone to work as a webserver


Debian

I’ve by now installed Debian 12, and need to install a package without internet. I’ve put it on a usb stick.

- to mount the usb stick to the mac

lsblk to list all devices
mkdir /media/usb-drive
mount /dev/{name} /media/usb-drive/

- now we need to install the deb file using dpkg

dpkg -i {package}.deb

don’t forget to sudo umount /media/usb-drive

but now i get

dpkg: warning: 'ldconfig' not found in PATH or not executable. dpkg: warning: 'start-stop-daemon' not found in PATH or not executable.

so i did

su -

The package now installs, but it depends on wget, which is another package. So lets try to get internet by downloading the networkmanager

which needed many many dependencies, dependant on dependencies…

oh, but apparently networkManager is already installed? After I ran systemctl status NetworkManager it showed as inactive, so i restarted it using restart.

systemctl enable NetworkManager

I’m now installing zlibnm0_1.46.0-1_amd64.deb, which is needed to run nmcli (NetworkManager?)

so, that helped. Now I can see that the wifi is on, but when running nmcli dev wifi list it’s still not showing any wifi networks. According to the internet I need to run sudo systemctl start wpa_supplicant.service. But of course, wpa_supplicant.service is not found…

This documentation is getting so chaotic

New day, new option: installed wireless tools

iwlist wlan0 scan

but this wireless chip doesn’t support scanning..?

And then I accidentally deleted the /usr folder, which broke the entire system. I couldn’t even ls anymore! Time to call it a day.

Michael left a usb stick on the laptop with a new installation of Debian 12. I’ve installed this one on the macbook, but keep running into the same issues. By now, I have gotten fast at mounting and unmounting USB sticks and manually downloading packages (that need packages and packages and packages).

Apparently I’m in need of specific non-free (free as in libre?) frameworks for the wifi card to work, but these honestly needed so many dependencies that needed to be manually downloaded one by one, that I gave up and went home.

Not the most seamless experience, and especially not the most empowering one (so far).

Ubuntu Server

Reverted so many things, installed Ubuntu Server via Michael’s USB → ethernet dongle, and now there is a server that can be ssh’d into! Ubuntu is supposed to be a bit more friendly out-of-the-box, so hopefully that will help me out. Time to finally get working on setting up the rest of the server, in more manageable, smaller steps:

Things needed from a web server

- ☐ the ip address keeps changing. how to make this stay the same? ATM it stays the same, to look into what to do if you’ve lost the ip address..

- ☒ how to get wifi with eduroam to work?

- ☐ Fix this warning: Permissions for /etc/netplan/50-cloud-init.yaml are too open. Netplan configuration should NOT be accessible by others.

- ☐ Set the correct timezone

- ☒ resize terminal text size

- ☐ webserver

- ☒ Changing the font-size of the macbooks display

- ☐ SSH from a different location

- ☐ Git integration?

- ☐ 404 pages

- ☐ FTP access

- ☐ being able to upload files

- ☐ Do not shut down when lid is closed - research other ways of lowering power consumption and extending shelf life.

- ☐ ehh how does DNS work?

- ☐ Think about whether you’d want to self-host Gitea.

- ☐ change password to something more secure
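About the netplan permissions warning in the checklist above: "accessible by others" refers to the group and other permission bits of the file. As a small sketch of what that check looks like, assuming (this is my reading of the warning, not something confirmed on this page) that the warning goes away once only the owner can read and write the file (mode 600):

```javascript
// Hypothetical helper: true when group or others have any access at all.
// 0o077 masks the group (0o070) and other (0o007) permission bits.
function isTooOpen(mode) {
  return (mode & 0o077) !== 0;
}

// Hypothetical helper: show the permission bits the way chmod writes them.
function describeMode(mode) {
  return (mode & 0o777).toString(8).padStart(3, "0");
}

console.log(describeMode(0o644), isTooOpen(0o644)); // prints "644 true"
console.log(describeMode(0o600), isTooOpen(0o600)); // prints "600 false"
```

On the server itself, the mode could be read with `fs.statSync("/etc/netplan/50-cloud-init.yaml").mode` and passed to `isTooOpen`.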

How do i get the wifi of eduroam to work?

according to this gist, i need to create /etc/netplan/50-cloud-init.yaml with the following setup

# This file is generated from information provided by the datasource. Changes
# to it will not persist across an instance reboot. To disable cloud-init's
# network configuration capabilities, write a file
# /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg with the following:
# network: {config: disabled}
network:
  ethernets:
    eth0:
      dhcp4: true
      optional: true
  wifis:
    wlan0:
      dhcp4: true
      access-points:
        "eduroam":
          auth:
            key-management: eap
            password: "your password"
            method: ttls
            identity: "number@hr.nl"
            phase2-auth: "MSCHAPV2"
  version: 2

and then run sudo netplan --debug apply to apply the setup. The debug output does show this warning, so I need to do something about it:

** (process:1657): WARNING **: 12:17:05.453: Permissions for /etc/netplan/50-cloud-init.yaml are too open. Netplan configuration should NOT be accessible by others.

According to the logs, wpa_supplicant is created. But then: `A dependency job for netplan-wpa-wlan0.service failed. See 'journalctl -xe' for details.` and the apply fails.

journalctl -xe returns

Mar 06 12:18:35 vitrinekast systemd[1]: sys-subsystem-net-devices-wlan0.device: Job sys-subsystem-net-devices-wlan0.device/start timed out.
Mar 06 12:18:35 vitrinekast systemd[1]: Timed out waiting for device /sys/subsystem/net/devices/wlan0.

Did some searching, and removed this whole thing to try something else

installed NetworkManager to be able to run nmcli d. It is so much better to do this with an internet connection!

rosa@vitrinekast:~$ nmcli d
DEVICE           TYPE      STATE         CONNECTION
wlp3s0           wifi      disconnected  --
enx00249c032885  ethernet  unmanaged     --
lo               loopback  unmanaged     --
rosa@vitrinekast:~$

then I turn the wifi on by nmcli r wifi on. listing the available networks by running nmcli d wifi list returns loads of Tesla wifi networks! But also some eduroam.

nmcli d wifi connect my_wifi password <password>

gives Error: Failed to add/activate new connection: Failed to determine AP security information

According to stack exchange i need to

nmcli connection add \
  type wifi con-name "MySSID" ifname wlp3s0 ssid "MySSID" -- \
  wifi-sec.key-mgmt wpa-eap 802-1x.eap ttls \
  802-1x.phase2-auth mschapv2 802-1x.identity "USERNAME"

Turns into

sudo nmcli connection add \
  type wifi con-name eduroam ifname wlp3s0 ssid eduroam -- \
  wifi-sec.key-mgmt wpa-eap 802-1x.eap ttls \
  802-1x.phase2-auth mschapv2 802-1x.identity 1032713@hr.nl

then you shouldn’t unplug the usb ethernet thing and expect it to work right away (s.o.) but rather sudo nmcli c up eduroam --ask and fill in the eduroam login details there.

But the wifi SSID apparently is incorrect? Let’s retrace some steps and try again.

I used a new netplan config:

# This is the network config written by 'subiquity'
network:
  ethernets:
    enx00249c032885:
      dhcp4: true
  version: 2
  wifis:
    wlp3s0:
      dhcp4: yes
      dhcp6: yes
      access-points:
        "eduroam":
          auth:
            password: "<mypassword>"
            key-management: "eap"
            method: "peap"
            phase2-auth: "MSCHAPV2"
            anonymous-identity: "@hr.nl"
            identity: "1032713@hr.nl"
        "Schuurneus Iphone":
          password: "p1111111"

And then

netplan apply

followed by iwconfig, ip add and ping google.com, to verify that I now have a working wifi connection! IP: 145.137.15.162

Changing the font size

The answer was to edit the file /etc/default/console-setup and enter:

FONTFACE="Terminus"
FONTSIZE="16x32"

then:

sudo update-initramfs -u

sudo reboot

OR (but the above already worked) using the setfont package

See the list of console fonts with ls /usr/share/consolefonts. You might need to install them using the package fonts-ubuntu-font-family-console. You can change the font system-wide, instead of putting it in your .profile, with dpkg-reconfigure console-setup.

Creating a web server

installing nginx

sudo apt update
sudo apt install nginx

Testing the web server by going to 145.137.15.162 which shows the nginx welcome page!

systemctl status nginx

The webserver serves /var/www/html and its contents
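What "serves /var/www/html and its contents" boils down to is a mapping from the path in the URL to a file inside that folder. The sketch below shows that mapping in its simplest form. It is not how nginx is implemented; the function name is made up, and using index.html for folders is an assumption (the index file names are set in the nginx configuration).

```javascript
// Hypothetical helper: map a request path to a file below the web root.
// Returns null when the path tries to climb out of the root with "..".
function resolveRequest(root, urlPath) {
  const pathOnly = urlPath.split("?")[0];
  const segments = [];
  for (const part of decodeURIComponent(pathOnly).split("/")) {
    if (part === "" || part === ".") continue;
    if (part === "..") {
      if (segments.length === 0) return null; // would leave the web root
      segments.pop();
    } else {
      segments.push(part);
    }
  }
  // A path ending in "/" points at a folder: serve its index file.
  if (pathOnly.endsWith("/")) segments.push("index.html");
  return [root, ...segments].join("/");
}

console.log(resolveRequest("/var/www/html", "/"));
console.log(resolveRequest("/var/www/html", "/sounds/clock.wav"));
console.log(resolveRequest("/var/www/html", "/../etc/passwd"));
```

So a file placed at /var/www/html/sounds/clock.wav would be reachable at the server's address followed by /sounds/clock.wav.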

- Made a plotter experiment regarding listening to servers (snooping)

- Aymeric talk about permacomputing

- Look mum no computer @paradiso

- Deleted visual studio code from my laptop, bye microsoft and bloated software!

- XPUB SI23 Launch: TL;DR

Network Listening

March

Transclude from Peripheral Centers and Feminist Servers/TL;DR

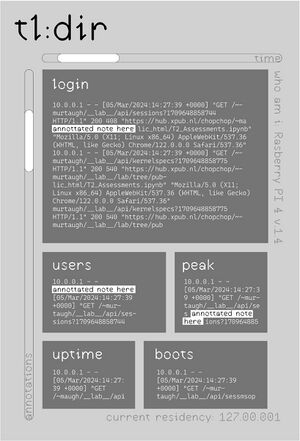

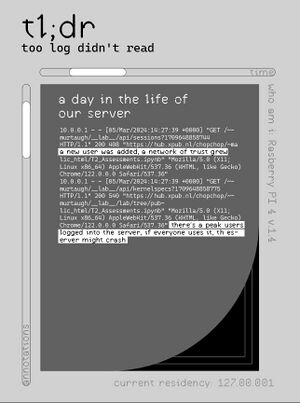

This page documents the TL;DR project part of Peripheral Centers and Feminist Servers

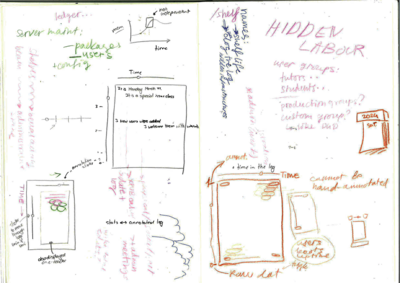

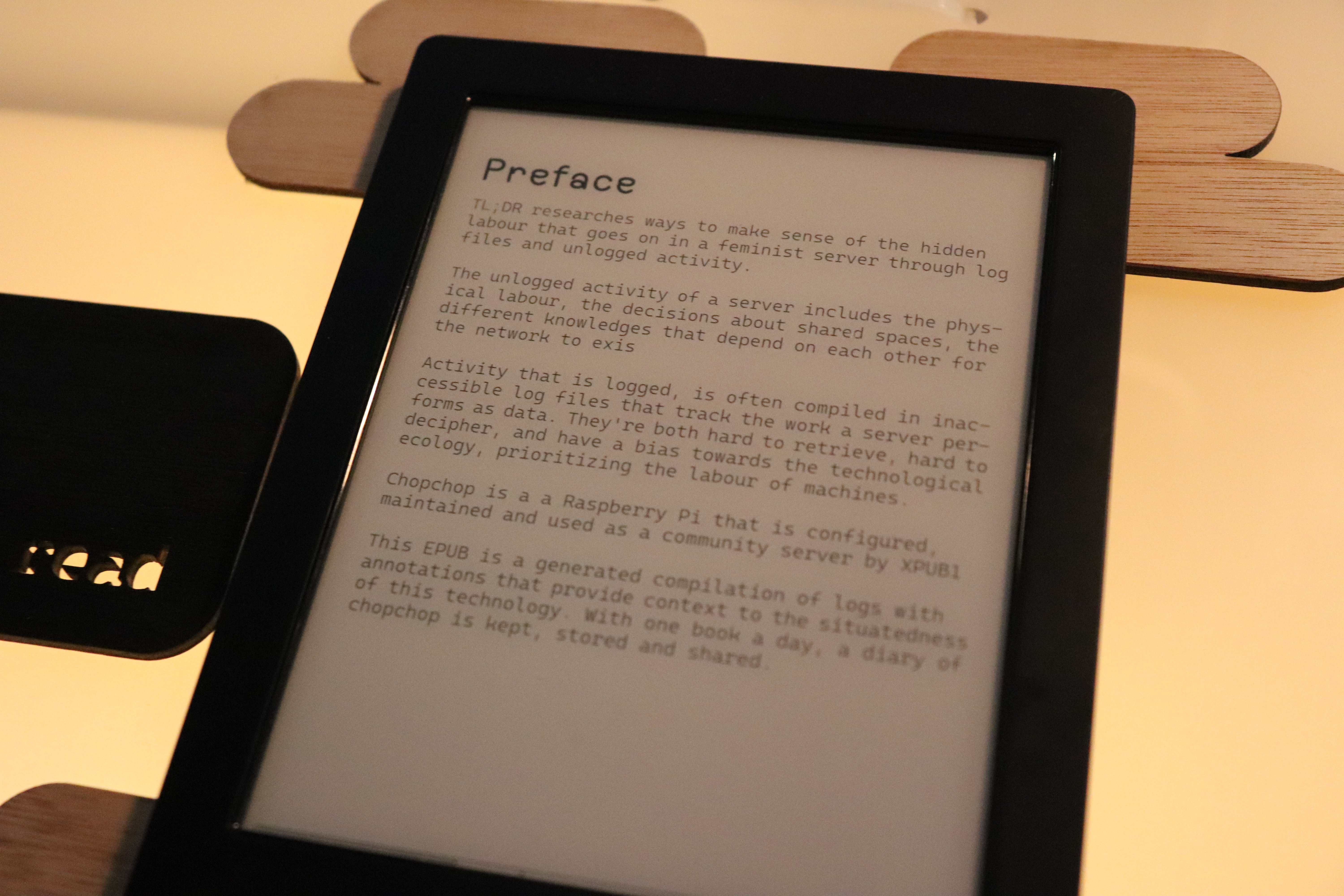

In a seamless world, awareness of techno-social infrastructure surfaces only when it's not working. But when you upload a photo, install an application, move a file, a technology serves, works, labours to execute what you've asked of it. Inaccessible files track this work as data. These files are inaccessible in two ways: they're hard to retrieve and hard to decipher. While these hidden files contain the not so hidden infrastructures of a server, they only manage to show a portion of it. After all, log files have a bias towards the technological ecology, prioritizing the labour of machines. The actual infrastructure consists of much more: the people maintaining (rebooting, organizing, meeting) for the tech to work. A feminist data center acknowledges and fosters the infrastructure surrounding this technology; the physical labour, the decisions about shared spaces, the different knowledges that depend on each other for the network to exist. TL;DR researches ways to make sense of the hidden labour that goes on in a feminist server through log files and unlogged effort / activity.

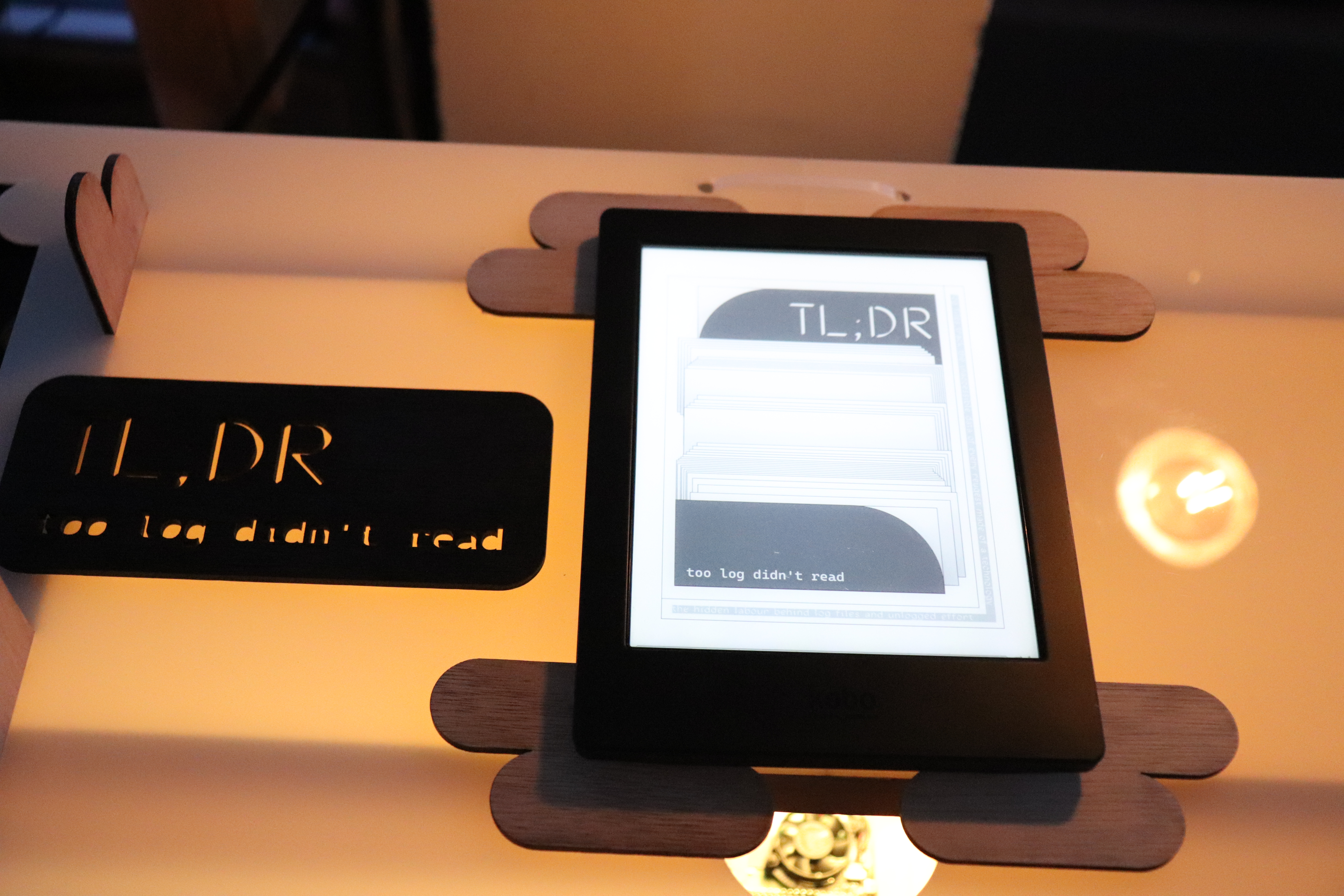

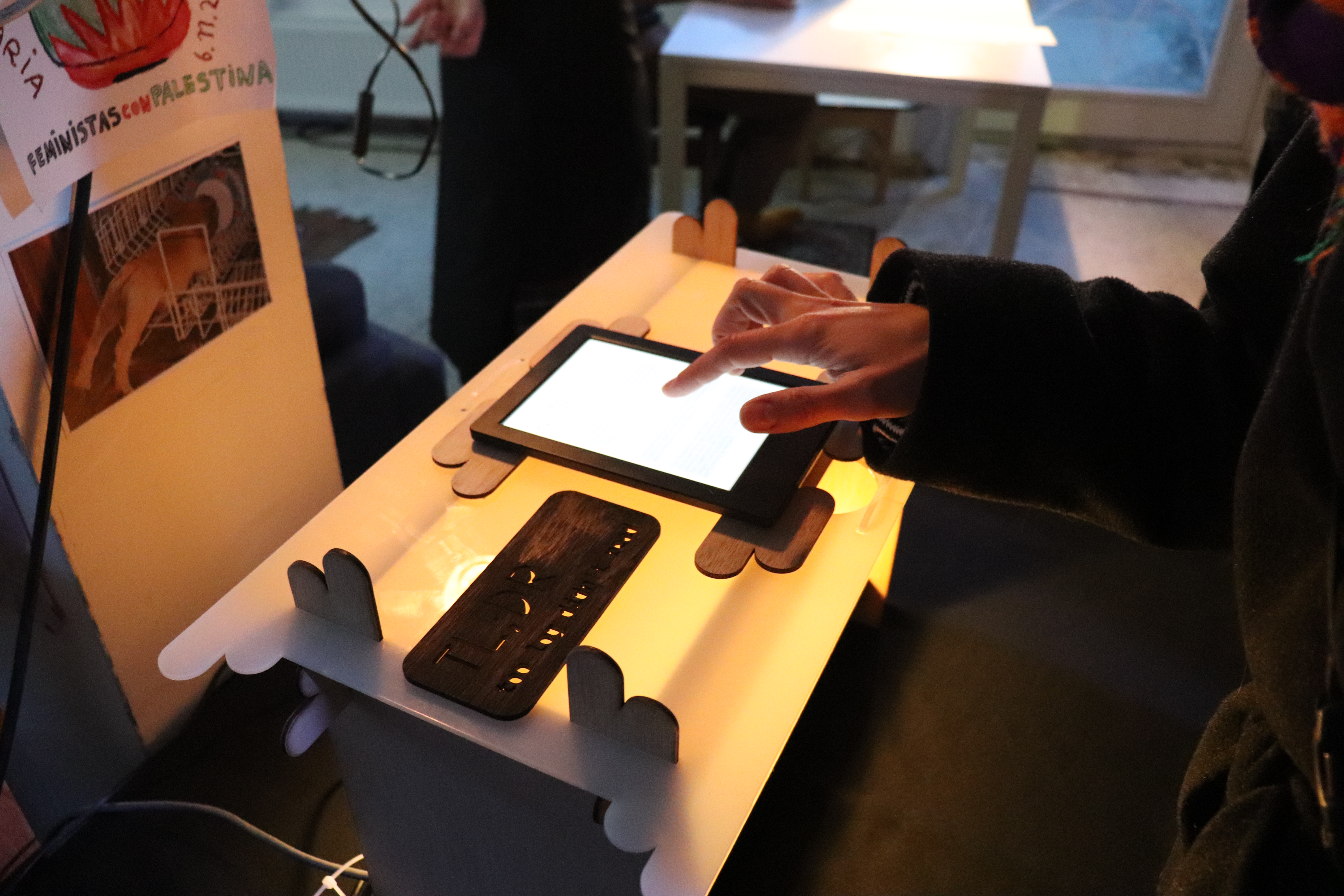

Project description

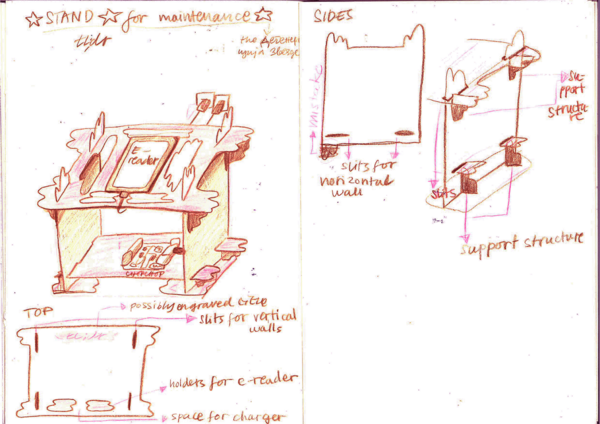

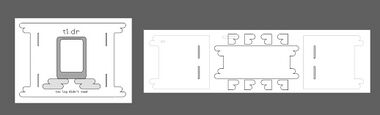

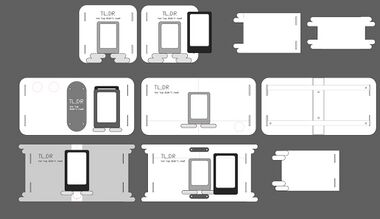

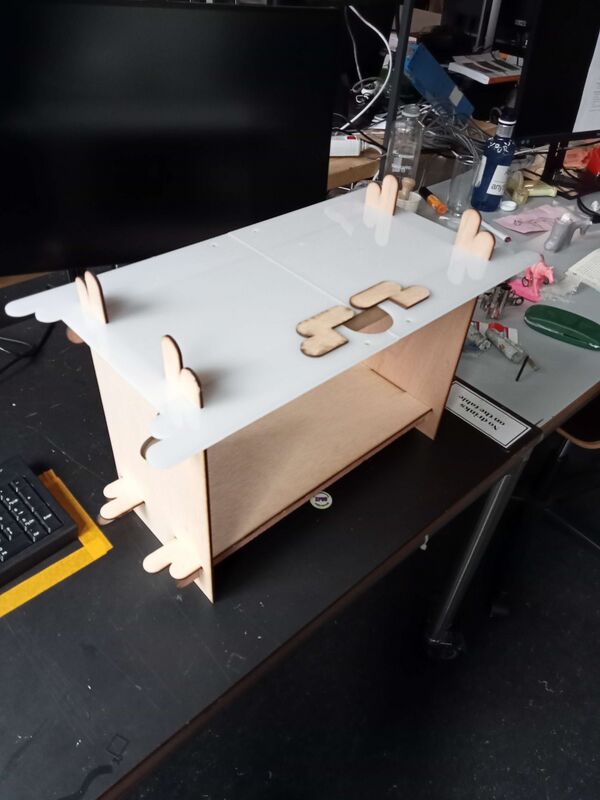

TL;DR is a project about showing the logged and unlogged activity of technologies we take for granted, in particular XPUB1's community server chopchop. A shelf-like installation piece holds both chopchop and an e-reader, with which a reader can browse annotated log files. It is part of the Peripheral Centers and Feminist Servers project that was the launch event of SI23.

Motivation and evolution

A typical scenario in which one interacts with log files -- the typical scenario in which we have interacted with log files while setting up and maintaining our community server chopchop -- is when something is not working. One must first locate these files, and then try to make sense of the gargantuan data compilations containing cryptic information stated so matter-of-factly that the matter of fact is lost on the reader.

These technologies are situated: there's a community using and maintaining, surrounding and being built on them. The same is true for chopchop. In our experience with this server, alongside our research on feminist servers, we have found several problems with the scenario described above:

- The files are inaccessible, unreadable, to many. This results in asymmetric responsibilities in maintenance and potentially introduces an implicit technosocial hierarchy in the community working with such a technology.

- The files are inaccessible, hidden away. This implies the server's internal workings are meant to be hidden: there's a seamless experience the image of which needs to be kept up.

- Both cases of inaccessibility increase the distance between the technology and the human, in an attempt to naturalize the first in the life of the latter. In this process, the awareness of the situatedness, the collaboration between tech and human, tends to get lost.

- The agency of accessing the files is with the human.

- These logs are biased towards technology. Again, they ignore the community surrounding it. This invisibilizes labour such as maintaining the technology.

Initial idea

In its conception, we wanted to shift the agency from user to technology. We played around with several ideas:

- Transforming the logs into an emoticon. After a preset time interval, this would be printed. This idea was a play on the emoticon-feedback-pillars in e.g. grocery stores and airports (see image).

- Logging the launch event itself, e.g. by counting the people coming in and out, and making an analogy with server components.

- Having a central view of certain (fictional) components of the server, that would need to be kept within certain thresholds to keep the event going. For example, a bucket that slowly fills over time, and needs to be discharged lest it overflows (an analogy for garbage collection). This would put some focus on the communal aspect of maintenance.

We decided to develop the first idea further.

Comments by Joseph and Manetta

We proposed the initial idea to Joseph and Manetta. They had two comments:

- Are you not creating even more bureaucracy?

- What actually makes this feminist? (Additionally, Manetta voiced concern about a feminine connotation with emojis.)

We felt the need to rethink the idea from the ground up, this time.

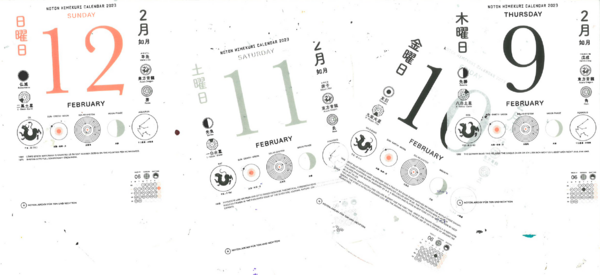

Updated idea: a diary

Senka showed a tear-off calendar they used. This calendar displays lots of information aside from the date: constellation visualizations, historical events related to the date, ... We felt inspired by its ability to compile varied, difficult information in an approachable, readable way. In addition, the idea of time that is associated with a calendar resonated. With this in mind, we workshopped more ideas.

What if chopchop kept a diary? A compilation of important data, reporting both on the technical as well as the non-technical aspects involved in being / maintaining / using a server. Visualized and written in an accessible way. Hosted online to make it visible to anyone.

With this idea, we hope to expose some of the invisible labour surrounding a server, and address the problems of inaccessibility with traditional logging.

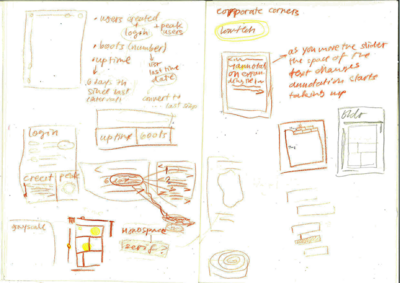

Design process

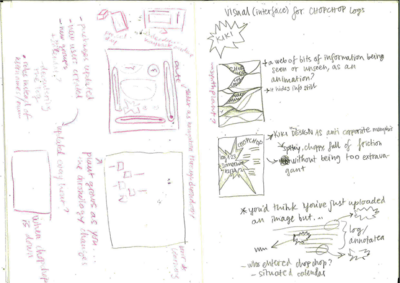

After multiple sketches and discussions, Senka drafted 2 versions of the design that would have multiple 'windows' in which different logs would be updated. The sketch was kept black and white, mostly because it would be displayed on an e-reader in the end. Two sliders were implemented to slide through time and through the annotation of the logs. Time would show different logs, presumably by day, ideally from the start of chopchop's life, while the annotation slider would display different layers / tiers of annotation of what the logs mean to all of us as a community of practice.

The fonts chosen are open-source and free. One is Ductus, a font by Amélie Dumont, and the other is a Microsoft font called Cascadia Code, which Senka accidentally stumbled upon while browsing the fonts on their laptop. Cascadia Code is a super decent monospace font, and a very good fit for imitating the terminal font, while having slightly better kerning and a very good italic version. Ductus is used for the annotations. The annotations have a 'highlighted' background to mimic highlights and analogue annotations.

After considering the actual size of an e-reader screen as well as the hierarchy of information these windows pose, the final sketch included all of the information in one 'window / page / screen'. The user is supposed to just scroll down to view as much as they want.

As TL;DR can be previewed on an e-reader at Varia, we'd like to test what we can do in terms of HTML / CSS / JavaScript with the e-reader. A test page was created that includes some scrollbars, some font stylings, some JavaScript scroll tracking, some animations, etc.

Template & annotations

The code has been merged and is now accessible via the Git repo, and this (temp) URL.

In order to get the logs visible to the public, we've created a script (script.py) that will run every X time using a cron job. The script renders an HTML page. To render the HTML page, we make use of Jinja (link to documentation), a templating engine that is very readable and only needs a little bit of code to get up and running. The template for the HTML page lives in templates/template.jinja. The Python script runs a bunch of commands and saves the outcomes of these commands in variables, which are then referenced in this template file.

Jinja allows for using if/else statements, loops, small calculations, etc. within the templates, which might come in handy when making the annotations. For a list of these variables, see below.
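The "run commands, keep the outcomes in variables" step could look roughly like the sketch below. This is not the actual script.py: the helper names `run` and `collect` are made up for illustration, and the assumption is that a failed or missing command should simply leave its variable empty, so that an `{% if variable %}` check in the template skips its annotation.

```python
import subprocess


def run(command):
    """Run a command (a list of arguments) and return its trimmed output.

    Returns an empty string when the command is missing or fails, so the
    template can treat the variable as "not set".
    """
    try:
        result = subprocess.run(command, capture_output=True, text=True)
    except OSError:
        return ""
    if result.returncode != 0:
        return ""
    return result.stdout.strip()


def collect(commands):
    """Map each template variable name to the output of its command."""
    return {name: run(command) for name, command in commands.items()}
```

Called as `collect({"since_last_boot": ["uptime", "-s"]})`, this gives a dictionary that could be handed to Jinja with `template.render(**variables)`.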

For the real-timeness, the plan was originally to use sockets, but as these require a node server to run (just another job that might not be needed), we're taking a bit more of a plakband (sticky tape) approach. The plan is that the HTML page will contain the date at which the visitor can expect the next update. We need to write some JavaScript that checks once in a while if this date has already passed. When the date has passed, we could even do a little check if the browser and/or chopchop are still "online", but ultimately do a refresh, since in the background the Python script has run and generated a new HTML page.
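The check itself is tiny. On the page it would be written in JavaScript; the sketch below only shows the logic in Python, which is also where the next-update date would be computed before it is written into the template. The function names and the 15-minute interval in the usage note are assumptions, since the cron interval is still "every X time".

```python
from datetime import datetime, timedelta


def next_update(last_run, interval_minutes):
    """Moment at which the cron job is expected to regenerate the page."""
    return last_run + timedelta(minutes=interval_minutes)


def should_refresh(now, expected_update):
    """True once the announced update time has passed."""
    return now >= expected_update
```

With a run at 12:00 and an assumed interval of 15 minutes, the page would announce 12:15, and a visitor checking at 12:10 would not refresh yet.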

A side note is that the sketches do include a reference to "time" but we still need to figure out how exactly this would work, and that could influence this setup.

About the template

In the template, we've got the following variables, corresponding with commands. For each variable, there is a small if statement. If a variable is set, we show a level 1 annotation, visible when updating the slider.

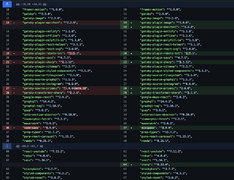

| variable | command |
|---|---|
| last_user_added | sudo journalctl _COMM=useradd -r -n 1 --output-fields=MESSAGE |
| users_created_today | sudo journalctl -S today _COMM=useradd -r -n 1 --output-fields=MESSAGE |
| list_active_services | sudo service --status-all |
| list_groups | getent group |
| since_last_boot | uptime -s |
| list_package_installs | grep "install " /var/log/dpkg.log |
| list_package_upgrade | grep "upgrade " /var/log/dpkg.log |
| list_package_remove | grep "remove " /var/log/dpkg.log |
| device_info | grep "Model" /proc/cpuinfo (pipe, but hey, wiki tables...) awk -F: '{ print $2}' |
| debian_version | cat /etc/debian_version |
| kernel_version | uname -a |
| hostname | hostname -i |
| ????file changes in specific directories for other projects of SI23? | find {path} -not -path '*/\.*' -atime 0 -readable -type f,d |
| ?Watching some kitchen service??What would happen there? | sudo journalctl -u kitchen-stove.service -b |
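The three dpkg.log greps could also be done in Python, which makes it easier to hand the template a list instead of raw text. A minimal sketch, assuming the usual dpkg.log layout of date, time, action, package and versions; the function name and the sample lines in the usage note are invented for illustration.

```python
def dpkg_actions(lines, action):
    """Return (timestamp, package) pairs for log lines with the given action.

    Matching on the third field (instead of searching the whole line) keeps
    "status half-installed ..." lines out of the "install" results.
    """
    matches = []
    for line in lines:
        fields = line.split()
        # Expected layout: date, time, action, package, old version, new version
        if len(fields) >= 4 and fields[2] == action:
            matches.append((f"{fields[0]} {fields[1]}", fields[3]))
    return matches
```

For a line like `2024-03-20 10:15:32 install nginx:amd64 <none> 1.22.1-9`, calling `dpkg_actions(lines, "install")` would give the timestamp together with `nginx:amd64`.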

Display of the project

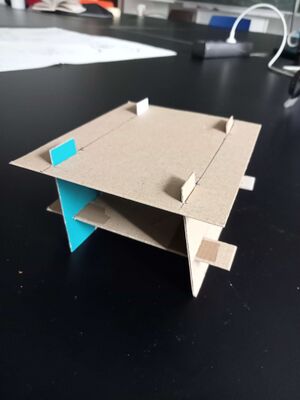

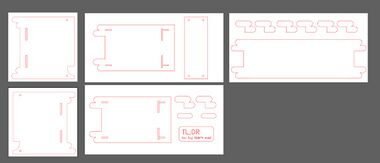

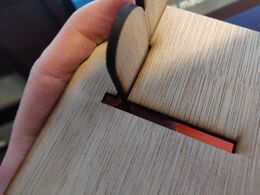

Senka made a few sketches and a small cardboard maquette to think about a way to display chopchop and the e-reader together. This idea was based on having "windows" or a porous structure that would show what is usually hidden in a black box.

The idea was to use this structure, laser cut it on thin plywood and assemble it. This way the structure can move and travel easily, and be modular.

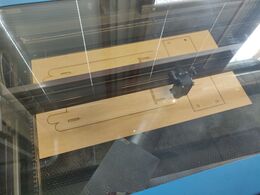

Lasercutting 2024-03-20

We booked the lasercutter one hour before class on Wednesday. This was ambitious: there were some setbacks. In particular, the thickness of the wood (bought in the WdKA store) is NOT the advertised 3.6mm. We managed to cut all of the wood parts, but not the plexiglass.

Lasercutting 2024-03-22

The fight with the lasercutter continues. Finally everything was successfully lasercut. Somehow the longer you lasercut wood on the largest lasercutter, the worse the quality of the cut gets. In my experience, this has not been the case with other materials, just the "3.6mm" wood from the WdKA store. The models can be assembled and disassembled, but with caution and care, as some parts fit more smoothly than others. The last bit that needs to be finished is the sanding of the edges (only slight sanding).

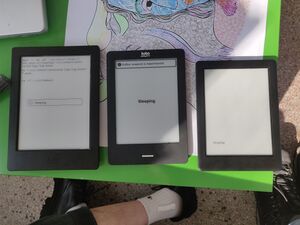

E-readers

From its conception, we wanted the diary idea to be displayed on an e-reader. Not only for its tactile, familiar experience and association with actual reading instead of skimming, but also because e-ink retains its final image. So, if chopchop were to die while connected to an e-reader, we would still be able to view the final log.

However, as e-readers have a low refresh rate and are generally a bit unreliable in their connectivity, interactivity is limited. While at first we had hoped to display a regular html page on the e-reader, this was later, by suggestion of Manetta, changed to generating epubs.

Different explorations / fallbacks

Monday March 26 we met at Varia. The SI launch draws near and we have a few options to continue with:

- The HTML option as presented above. We have come to find we cannot use this version on an e-reader (internet connection unstable, refresh rate too low, too risky in general), so this option would use a tablet instead. We dislike this. However, the webpage is ready (aside from the timeline function), so this is a fallback option.

- Using KoCloud, an open-source, community-driven project that allows an e-reader to be synced with a cloud service. This is currently set up and working with Google Drive. However, we do not like this. We could try to set up Nextcloud on chopchop, but feel like this is a decision that should be made with the whole group, as this would add a fundamentally new functionality to how we might use chopchop. And there's too little time for this decision. Besides, even if we got it working, it's still to be seen if the syncing would actually work.

- KOReader, e-reader software that allows the execution of shell scripts and ssh login. If it doesn't have wifi (yet to be tested), it cannot automatically update, and we would still need to set up the syncing scripts.

Talking about these options, we concluded the main technical bottleneck is synchronization between our logging efforts on chopchop and the device on which this will be displayed. We then decided that actually, no automatic synchronization is necessary: it is part of the seamless experience you might have come to expect from Big Cloud, but would hide the fact that a continuous channel of communication is required. With manual synchronization, we remain aware of this.

We decided to go for option 3. With manual syncing, we think this is feasible. The e-reader with KOReader (left) is also the one with the sleeping behaviour that best suits our needs: a little popup is shown, while the rest of the page is retained. This is preferable to the other e-readers, which wipe the whole screen when in sleep mode.

Cover Image

The cover image was designed with Inkscape. The visuals are meant to represent the endless logs and files stacked in different formations and quantities depending on the EPUB opened. Each EPUB, one for each day, would have a slightly different cover.

Thoughts on Inkscape: offers a lot of functionality, although it is not the most precise. A bit finicky to use...

Since we wanted the images to be selected at random, we added this to the bash script that was fetching all of the info for assembling the EPUB:

index=$(shuf -i 1-14 -n 1)

echo $index

Then this variable is later inserted into the code Rosa crafted:

pandoc /home/xpub/www/html/tl-dr/book.html -o /home/xpub/www/html/tl-dr/logged_book.epub -c print.css --metadata title="$1" --metadata author="/var/shelf" --epub-cover-image=assets/cover$index.jpg --epub-embed-font="fonts/Cascadia/ttf/CascadiaCode-Light.ttf" --epub-embed-font="fonts/Ductus/DuctusRegular.otf"

Preface

The preface was written and then re-written to have more of a format of a quick glossary that can explain some of the core elements of the project while being a distinctly separated piece of text that the public can skim through if they want to focus on the main body of the work.

Scripts

The Git repository for this project can be found here. We use several scripts to generate the annotated logs and feed them to the e-reader and quilt patch. In this section, we will discuss a few prominent ones.

The generation happens in a few stages:

- First, the logs are compiled with script.py on chopchop. This script uses several bash commands.

- Then, through the Python script, a Jinja template, book.jinja, is populated (see also this section)

- The resulting HTML file is then, again through the Python script, converted to EPUB using create_book.sh

- The EPUBs can then be fetched by the e-reader by running ereader_get_epubs.sh on it

create_book.sh

#!/bin/bash

today=`date '+%B_%dth_%Y'`;

filename="TD;DR_$today"

echo "$filename"

pandoc /home/xpub/www/html/tl-dr/book.html -o /home/xpub/www/html/tl-dr/logged_book.epub -c print.css --metadata title="$filename" --epub-cover-image=cover.jpg --epub-embed-font='fonts/Cascadia/ttf/CascadiaCode-Light.ttf' --epub-embed-font='fonts/Ductus/DuctusRegular.woff'

cp /home/xpub/www/html/tl-dr/logged_book.epub "/home/xpub/www/html/tl-dr/log-books/$filename.epub"

echo "did the overwrite"

ls log-books > /home/xpub/www/html/tl-dr/log-books/all.txt
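One detail in this script: the date format `%B_%dth_%Y` puts "th" after every zero-padded day, so the first of April becomes `April_01th_2024`. If matching suffixes are ever wanted, the naming could be done along these lines. This is a hedged sketch, not part of the project: the function names are invented and the `TD;DR_` prefix is copied from the script as it stands.

```python
from datetime import date


def ordinal(day):
    """Return the day with its English ordinal suffix, e.g. 1st, 22nd, 13th."""
    if 11 <= day % 100 <= 13:
        suffix = "th"
    else:
        suffix = {1: "st", 2: "nd", 3: "rd"}.get(day % 10, "th")
    return f"{day}{suffix}"


def book_name(day):
    """Build a name in the style of create_book.sh, with a matching suffix."""
    return f"TD;DR_{day.strftime('%B')}_{ordinal(day.day)}_{day.year}"
```

Changing the scheme would also change the names in all.txt, so an e-reader that already holds the older files would download the renamed ones again.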

ereader_get_epubs.sh

#!/bin/ash

if test -f "/mnt/onboard/tldr/all.txt"; then

echo "all.txt exists, removing it"

rm /mnt/onboard/tldr/all.txt

fi

wget -nc "https://hub.xpub.nl/chopchop/tl-dr/log-books/all.txt" -O /mnt/onboard/tldr/all.txt

while read -r line; do

if test -f "/mnt/onboard/tldr/$line"; then

echo "$line exists."

else

wget "https://hub.xpub.nl/chopchop/tl-dr/log-books/${line}" -O "/mnt/onboard/tldr/${line}"

fi

done < /mnt/onboard/tldr/all.txt
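The core of this script is a comparison between the listing in all.txt and what is already on the device. A small Python sketch of just that decision, with no network access; the function name is invented, and the actual downloading is left to wget as in the script.

```python
def files_to_fetch(listing, already_present):
    """Names from all.txt that are not yet on the device, in listing order."""
    present = set(already_present)
    wanted = []
    for raw in listing.splitlines():
        name = raw.strip()
        # Skip blank lines, files we already have, and duplicate entries
        if name and name not in present and name not in wanted:
            wanted.append(name)
    return wanted
```

Since all.txt is written into the log-books folder itself, it will likely appear in its own listing; because the e-reader has just downloaded it, it counts as present and is skipped.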

April

- Life after CMD talk at CMD

- Will visit robert henke’s performance at tivoli!

Questions for the rest of the future of XPUB:

- What is my ideal web-dev “stack” that I would like to explore further? Stack taken as wide as possible. What tools are in the toolbox? I’d like to continue to build a sustainable practice, from various perspectives, and this question can keep me up at night, in a professional field that keeps bloating its web presence with gigantic auto-playing videos and unnecessary JS frameworks that need to be maintained every 6 months, just to keep over-engineered product landing pages somewhat working. I’ve always presented myself as the lazy developer, and still use that every week as an argument to avoid making things that I deem a waste of MBs, but the land of JS frameworks is tempting, and there must be some cute little SSG tool that allows me to create a modular web thing? (Where, please, I do not have to write CSS in JS!)

- How can I relate the topics of making sound devices to the topics of XPUB (critiquing the socio-economic world through zines)?

- Or is becoming part of DIY hacker culture already a method?

- How can i prevent it all becoming about the stuffz?

- How can these researches be shared (and why)

- as performance kills me slowly

- To explore further

- Where do all these macbooks go?

- Musical notation (Scratch Orchestra, Cybernetic Serendipity)