User:Kiara/Special Issue 25: Difference between revisions


Since everything seems to be a ''mise en abyme'', this page is going to be all about protocols, in order to be meta and in harmony with this Special Issue's title.

[[Radio_WORM:_Protocols_for_Collective_Performance|General page of the trimester]]

===<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for a field recording</span>===


----

===<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for a first radio show</span>===

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">23-09-2024</span><br>

The very first show that took place at Radio WORM with [[User:Sevgi|Sevgi]], [[User:Wordfa|Fred]], [[User:Kim|Kim]] and [[User:Martina|Martina]].<br>

We used the field recordings, metadata and songs people put on their [[:Category:Field recording 2024|Field Recording]] wiki pages.<br>

[https://www.mixcloud.com/radiowormrotterdam/protocols-for-collective-performance-w-xpub-230924/ Listen to the show on Mixcloud!!]<br>

<gallery mode="nolines" heights="300 | |||

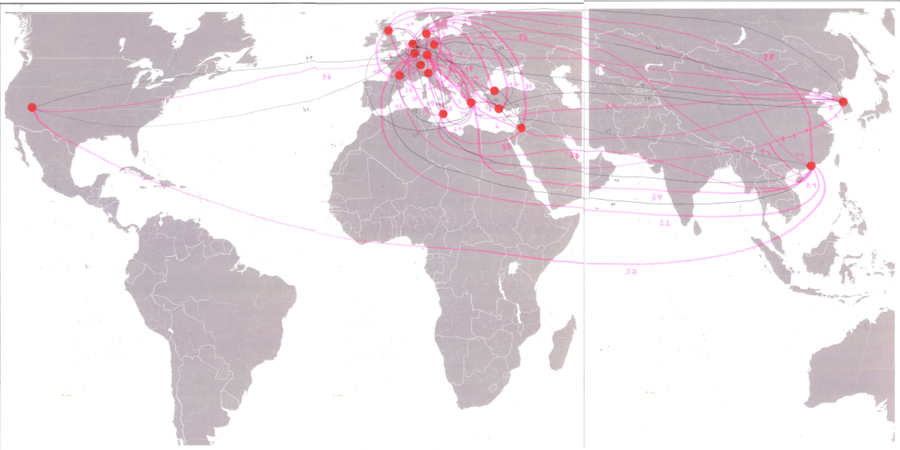

<p style="font-weight: bold; text-transform: uppercase; text-align: right;">Dedicated wiki page: [[SI25 Broadcast 1: Soundmapping]]</p>

<gallery mode="nolines" heights="300" widths="200" style="text-align:center;">

File:Worm23092024.jpg

File:Makingradio.jpg


----

===<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocols for a Jam Session</span>===

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">24-09-2024</span><br>

Jam Session with Joseph: creating instruments with unusual objects<br>

'''Jam 1''' {{audio|mp3=https://pzwiki.wdka.nl/mw-mediadesign/images/7/7a/Jam-full-1.mp3|style=width:100%; border-radius:25px;}}


=== <span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for Canon Music</span> ===

[[File:Clapping-music-sheet.jpg|frameless|right]]

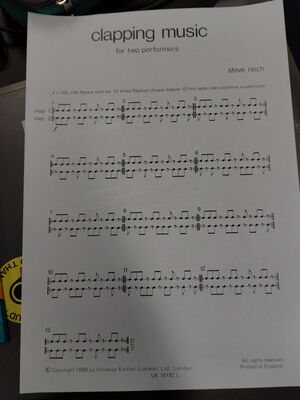

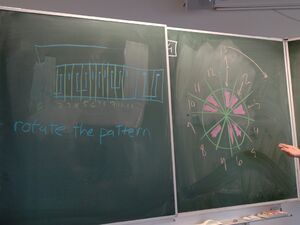

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">14-10-2024</span><br>

Session with Michael: reading and performing ''Clapping Music'' by Steve Reich.

The pattern is <code>3-2-1-2</code> and it's looping.<br>

''What happens when the second clapper enters and loops their part?''<br>


''What would be a better way of writing this score?''<br>

→ In a wheel!<br>

It feels like unveiling a secret hidden in the score: <span style="color: rgb(92, 58, 196); background-color: #F7FAFF;">like solving a riddle!</span>

[[File:Rotate-the-pattern.jpg|frameless|center]]
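The wheel idea can be sketched in a few lines of python. This is only an illustration, not something we used in the session: it assumes that <code>3-2-1-2</code> means groups of 3, 2, 1 and 2 claps, each followed by one rest (12 steps in total), and the names <code>build_pattern</code>, <code>rotate</code>, <code>show</code> and <code>canon</code> are made up for the example. Rotating the list is the same as turning the wheel; stacking the original loop and the rotated one gives the two clappers of the canon.

<syntaxhighlight lang=python>
# Hypothetical helpers for illustration: GROUPS, build_pattern, rotate, show, canon
GROUPS = [3, 2, 1, 2]  # claps per group; each group is followed by one rest


def build_pattern(groups):
    """Return the loop as a list of steps: True = clap, False = rest."""
    pattern = []
    for size in groups:
        pattern += [True] * size + [False]
    return pattern


def rotate(pattern, shift):
    """Turn the wheel: read the same loop starting `shift` steps later."""
    shift %= len(pattern)
    return pattern[shift:] + pattern[:shift]


def show(pattern):
    """Write a loop as text: 'x' for a clap, '.' for a rest."""
    return "".join("x" if clap else "." for clap in pattern)


def canon(groups, shift):
    """Clapper 1 keeps the pattern, clapper 2 plays it rotated by `shift` steps.

    Returns both parts as text and the number of steps where they clap together.
    """
    first = build_pattern(groups)
    second = rotate(first, shift)
    together = sum(a and b for a, b in zip(first, second))
    return show(first), show(second), together


if __name__ == "__main__":
    # One row per position of the wheel, from unison (0) back to unison (12)
    for shift in range(len(build_pattern(GROUPS)) + 1):
        first, second, together = canon(GROUPS, shift)
        print(f"shift {shift:2d}  {first}  {second}  claps together: {together}")
</syntaxhighlight>

Running it prints both parts side by side for every shift. At shift 12 the wheel has done a full turn and both clappers are back in unison, which is also where Reich's piece ends.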

<br><br>

=== <span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for a Delayed Radio Show</span>===

[[File:LostMusic InTheMaking.jpg|frameless|right|210x210px]]

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">04-11-2024</span><br>

with [[User:Chrissy|Chrissy]], [[User:aksellr|Tessa]], [[User:imina|Imina]], [[User:charlie|Charlie]] <br>

Today, we were supposed to host one of the best radio shows of the trimester. We worked super hard to make it perfect but... WORM faced technical issues and had to close down the radio for an unknown period of time.<br>

We really put our hearts into the making of this show: <br>

[[File:CD in the Wild (radio worm) (2).jpg|frameless|right|210x210px]]

:→ the theme is '''Lost Music''': we asked everybody to contribute by uploading songs from their teenage years onto [[SI25 Broadcast 8: Lost Music|this wiki page]]. A [https://open.spotify.com/playlist/3VQHx4phiwFA5Pf0KVoxVt?si=78b2d2fa51f04b12 Spotify playlist] also emerged from this!! <br>

:→ we burned CDs with the songs, according to the themes we agreed on; the CD cases contain a poster and stickers!<br>

:→ we made a web page hosting the playlist (downloadable)<br>

:→ we thought about copyright, piracy and music that gets lost through time because it only exists on CDs that people kept (as opposed to being available on streaming platforms); but also about the fact that with streaming platforms we don't own music anymore, and if the platform goes down, we lose the music...<br>

In the end, the show that was supposed to happen on Nov. 4th got pushed to Nov. 25th, and it was a <span style="font-weight: bold; font-family: 'Impact'; color: hotpink; font-size: 18px;">B L A S T</span>.

<p style="font-weight: bold; text-transform: uppercase; text-align: right;">Dedicated wiki page: [[SI25 Broadcast 8: Lost Music]]</p>

[[File:Lost Music Radio Group.jpg | center | frameless|265x265px]]

<br>

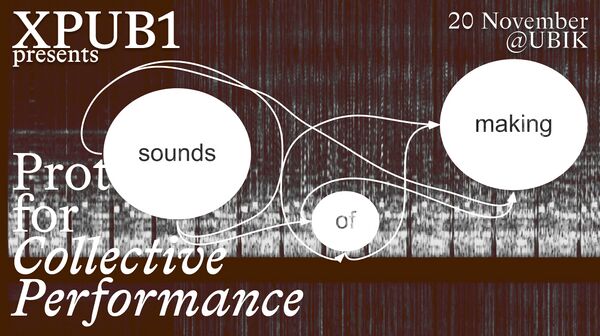

=== <span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for a Non-Radio Show</span>===

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">18-11-2024</span><br>

with [[User:queenfeline|Feline]], [[User:shoebby|Lexie]], [[User:eleni|Eleni]], [[User:chrissy|Chrissy]]<br>

This is the Monday right before the Special Issue Public Event; we are not in a planning mindset. We thus decide to <span style="font-family: cursive; background-color: #F7FAFF;">improvise</span>.<br>

We gather vinyls, readings and discussion topics. Lexie and Feline are DJing; Chrissy, Eleni and I are improvising a podcast. We will start by planning the radio show we are actually broadcasting (''mise en abyme'' hehe).<br>

<p style="font-weight: bold; text-transform: uppercase; text-align: right;">Dedicated wiki page: [[SI25 Broadcast 7: Improvisation]]</p>

<p style="font-family: 'Impact'; color: rgb(92, 58, 196); font-size: 25px; text-align: center;">S M O O T H</p>

[[File:Dave 3.jpg|center|500x500px]]

<br>

===<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for organizing an event</span>===

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">28-10-2024 to 03-11-2024</span><br>

We had 3 meetings in 2 days about task management, forming working groups and deciding what we do and how we do it.<br>


;My project idea for the event:Inspired by [https://sarahgarcin.com/workshops/an-underground-radio-to-print/toolkit.html Sarah Garcin's ''Radio to Print'' workshops] 🠶 there's a python dependency to transform speech into printed text.

;Mixed with Eleni's idea of having a spoken input transformed into music via VCV Rack -- in the end we get:

spoken input => becomes music for the listeners + gets printed live (thermal or fax machine)

;Adding Zuhui's idea:To engage visitors in this performance, they can become part of the foundational text/speech material for the auditory output. One or two writers will be present in the room, live scripting as they observe the event. This observation can focus on the visitors (or anyone in the room) and may include fictional elements depending on the writers' interpretations and creative choices. Ideally, this live-scripting will be projected on a screen, allowing visitors to watch as they’re being observed and transformed into text, then speech, which then becomes sound and text again (in VCV+python), all in real-time.

;Sevgi's idea:This script could also serve as part of the documentation (post-production) of the event, because it is a record of live observation made directly in the moment and setting of the performance.

; Whole public needed

;Works best if not time framed but could maybe work if the frame is around 2h

'''Remaining questions to work on:'''


- Work on how to connect all the different processes (python + vcv + livescript)

=====<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Personal documentation of the project</span>=====

* '''WHAT IT IS'''

''Lost in Narration'' is a performative installation. It is a Text-to-Speech-to-Text-to-Music-and-Print setup: the installation takes a written text which, once spoken into microphones, gets translated back into text and into music simultaneously, and the text is printed on a thermal printer.

<p style="text-align: center; font-family: monospace; padding: 3px 5px; border: solid 1px black;">Written Text ---> Speech in mics ---> Speech back to text ---> Music + Print</p>

* '''WHAT IT DOES'''

It questions the purposes and outcomes of communication and story-telling. A livescript of the event gets misinterpreted by a python script as it is spoken into microphones, telling a totally different story from the one written by the narrators.

* '''HOW IT WORKS'''

Two narrators sit in front of keyboards and livescript whatever is happening around them. They can decide to twist the actual events or communicate with each other through that narration. The script is projected on a separate screen, in front of which sit two speakers, each one voicing one of the narrators.

<p style="color: gray; font-style: italic; text-align: center;">---- Add a drawing! ----</p>

When the speakers talk into the microphones, three things happen simultaneously:

:- A python script tries to transcribe the words into written text, mostly messing it up <br>

:- The same script is also printing this written output to a thermal printer<br>

:- An additional computer uses a VCV Rack 2 setup to translate the spoken words into music/sound as they are spoken<br>

When the speakers are done speaking, they can press <code>C</code> on the keyboard to tell the printer to cut the paper (<code>CTRL+C</code> in the terminal stops the script).

* '''HOW IT IS MADE'''

:; Narration interface

Built in Unity: each narrator gets a font and a colour. Two keyboards are plugged into the same computer and both are used to write. <br>

Controls: keys to write, TAB to switch fonts/voices, ENTER to start a new line<br>

A <code>.txt</code> file containing all the written lines is created and kept updated during runtime; it acts as a log.<br>

[[File: Livescriptprogram.PNG]]
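The interface itself is a Unity project, so the snippet below is not its code. It is a rough python model of the controls described above, to show how the log can be kept: typed characters go into a buffer, TAB switches to the other narrator, ENTER appends the finished line to the <code>.txt</code> log. The class name <code>NarrationLog</code>, the narrator labels, the file name and the <code>[narrator] text</code> line format are all assumptions made for the example.

<syntaxhighlight lang=python>
from pathlib import Path


class NarrationLog:
    """Hypothetical model of the livescript controls: type, TAB to switch narrator, ENTER to commit."""

    def __init__(self, log_path, narrators=("Narrator A", "Narrator B")):
        self.log_path = Path(log_path)
        self.narrators = list(narrators)
        self.current = 0  # index of the narrator whose font/colour is active
        self.buffer = ""  # characters typed since the last ENTER

    def type_text(self, chars):
        """Keys to write: add characters to the current line."""
        self.buffer += chars

    def tab(self):
        """TAB: switch to the next narrator's font/voice."""
        self.current = (self.current + 1) % len(self.narrators)

    def enter(self):
        """ENTER: append the current line to the .txt log and start a new one."""
        line = f"[{self.narrators[self.current]}] {self.buffer}"
        # Append mode keeps everything written so far during runtime
        with self.log_path.open("a", encoding="utf-8") as log_file:
            log_file.write(line + "\n")
        self.buffer = ""
        return line


if __name__ == "__main__":
    log = NarrationLog("livescript_log.txt")  # hypothetical file name
    log.type_text("A visitor walks in with a red umbrella.")
    print(log.enter())
    log.tab()
    log.type_text("Nobody noticed that the umbrella was dry.")
    print(log.enter())
</syntaxhighlight>

Appending after every ENTER, rather than writing the file once at the end, means the log survives even if the program is closed abruptly during the performance.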

:; Text-to-Speech-to-Text-to-Print

Using python and python libraries.<br>

:: 1. Install and run the speech recognition (Windows)<br>

:::- create a dedicated folder on the computer, where you want to store the scripts and written files

:::- install sounddevice with <code>pip install sounddevice</code>

:::- install vosk with <code>pip install vosk</code>

:::- download [https://github.com/alphacep/vosk-api/blob/master/python/example/test_microphone.py this file] and put it in the folder

:::- <code>cd</code> into your folder in the terminal

:::- run <code>python test_microphone.py</code> <br>

:::- the program is stopped with <code>CTRL+C</code>

:::- (optional) you can modify <code>test_microphone.py</code> by commenting out lines 80 and 81. This prevents the program from printing the partial results of its attempts to recognize what's being said

:::- if you want to save the recognized content into a text file, run <code>python test_microphone.py >> stderrout.txt</code>. The <code>>></code> means that the new output is appended to the end of the file every time you run the program. If you want to overwrite the file every time, simply use <code>></code>. <br>
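<code>test_microphone.py</code> prints each recognized result as a small multi-line JSON object with a <code>"text"</code> field, so the file created with <code>>></code> also contains the header lines, empty results and (if lines 80 and 81 were not commented out) the partial results. A small helper can pull only the recognized sentences out of it. This is a sketch assuming the file holds the output of <code>test_microphone.py</code>; the function name <code>extract_transcript</code> and the file name <code>extract_transcript.py</code> are made up for the example. If the file was created from Windows PowerShell, it may be saved as UTF-16 rather than UTF-8, in which case the <code>encoding</code> argument has to be changed.

<syntaxhighlight lang=python>
import json
import sys


def extract_transcript(raw):
    """Return the non-empty "text" values of every JSON object found in `raw`.

    Anything that is not a JSON object (header lines, "Done", ...) is skipped,
    and so are partial results, which use a "partial" key instead of "text".
    """
    decoder = json.JSONDecoder()
    texts = []
    pos = raw.find("{")
    while pos != -1:
        try:
            obj, end = decoder.raw_decode(raw, pos)
        except json.JSONDecodeError:
            # Incomplete object (e.g. cut off when the program was stopped): try the next brace
            pos = raw.find("{", pos + 1)
            continue
        if isinstance(obj, dict) and obj.get("text"):
            texts.append(obj["text"])
        pos = raw.find("{", end)
    return texts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python extract_transcript.py stderrout.txt
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        for sentence in extract_transcript(f.read()):
            print(sentence)
</syntaxhighlight>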

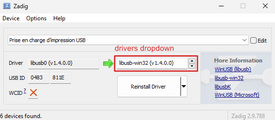

:: 2. Install the thermal printer

:::- install [https://github.com/walac/pyusb?tab=readme-ov-file pyusb] with <code>pip install pyusb</code> and download the libusb-win32 packages [https://sourceforge.net/projects/libusb-win32/ here]

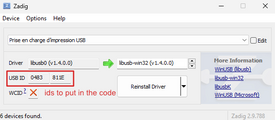

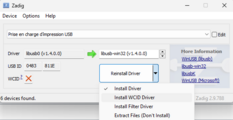

:::- install libusb and all the drivers through [https://zadig.akeo.ie/ Zadig]: in Options, select ''List All Devices'' and pick the printer device from the dropdown. Then, go through the list of drivers with the arrows and install the WCID Driver for each. '''IMPORTANT''': replace the printer's driver with the <code>libusb-win32</code> driver; this is what allows the connection. Zadig also shows the printer's USB IDs (vendor ID and product ID), which are needed in the <code>script.py</code> file we're creating later.

<gallery class="li-gallery" widths="350" style="text-align: center;" mode="nolines">

File:Zadig-og.png

File:Zadig-01.png

File:Zadig-02.png

File:Zadig-param-og.png

</gallery>

:::- install ESC/POS with <code>pip install python-escpos</code>

:::- using your code editor, create a new file called <code>script.py</code> in your folder and paste the code below; don't forget to change the IDs in the parentheses. Now you can run <code>python script.py</code> to see the magic happening!

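<syntaxhighlight lang=python>
from escpos.printer import Usb

# Replace 0x0483 (vendor ID) and 0x811E (product ID) with the IDs shown by Zadig.
# in_ep / out_ep are the USB endpoints used to read from / send data to the printer.
iprinter = Usb(0x0483, 0x811E, in_ep=0x81, out_ep=0x02)

iprinter.text("hello world\n")  # print a line of text
iprinter.qr("https://xpub.nl")  # print a QR code pointing to the URL
iprinter.cut()  # cut the paper
</syntaxhighlight>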
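If Zadig is not at hand, pyusb (installed above) can also list the USB devices it sees, with their vendor/product IDs and endpoint addresses, which is where values like <code>in_ep=0x81, out_ep=0x02</code> come from. This is a rough sketch, not part of our setup: the function names <code>split_endpoints</code> and <code>list_usb_devices</code> are made up, and on Windows it will probably only show details for devices that use a libusb driver. Endpoint addresses with bit 7 (<code>0x80</code>) set are IN endpoints (data going from the printer to the computer); the others are OUT endpoints (data going to the printer).

<syntaxhighlight lang=python>
def split_endpoints(addresses):
    """Split endpoint addresses into (IN, OUT) lists: bit 7 (0x80) set means IN."""
    ins = [a for a in addresses if a & 0x80]
    outs = [a for a in addresses if not a & 0x80]
    return ins, outs


def list_usb_devices():
    """Print the vendor ID, product ID and endpoints of every USB device pyusb can see."""
    import usb.core  # from the pyusb package; needs a libusb backend installed

    for dev in usb.core.find(find_all=True):
        addresses = []
        try:
            # Device -> configurations -> interfaces -> endpoints
            for cfg in dev:
                for intf in cfg:
                    for ep in intf:
                        addresses.append(ep.bEndpointAddress)
        except (usb.core.USBError, NotImplementedError, ValueError):
            pass  # some devices cannot be inspected without the right driver
        ins, outs = split_endpoints(addresses)
        print(
            f"vendor=0x{dev.idVendor:04X} product=0x{dev.idProduct:04X} "
            f"in_ep={[hex(a) for a in ins]} out_ep={[hex(a) for a in outs]}"
        )
</syntaxhighlight>

Calling <code>list_usb_devices()</code> prints one line per device; the printer's line gives the numbers to put in <code>Usb(...)</code>.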

:: 3. Merge the <code>test_microphone.py</code> and <code>script.py</code> scripts<br>

Here is the final code that listens to the voice, transcribes it and prints it:

<syntaxhighlight lang=python>
import argparse
import json
import queue
import sys

import keyboard  # pip install keyboard (used to detect the key that cuts the paper)
import sounddevice as sd
from escpos.printer import Usb
from vosk import Model, KaldiRecognizer


# Define the int_or_str function for argument parsing
def int_or_str(text):
    """Helper function for argument parsing to accept either an integer or string."""
    try:
        return int(text)
    except ValueError:
        return text


# Set up the queue for audio data
q = queue.Queue()


# Define callback to process audio in real-time
def callback(indata, frames, time, status):
    """Called by sounddevice (in a separate thread) for every block of audio."""
    if status:
        print(status, file=sys.stderr)
    q.put(bytes(indata))


# Argument parsing for devices, samplerate, etc.
parser = argparse.ArgumentParser(add_help=False)
parser.add_argument("-l", "--list-devices", action="store_true", help="show list of audio devices and exit")
args, remaining = parser.parse_known_args()

# List audio devices if requested
if args.list_devices:
    print(sd.query_devices())
    parser.exit(0)

parser = argparse.ArgumentParser(description="Vosk microphone to printer", formatter_class=argparse.RawDescriptionHelpFormatter, parents=[parser])
parser.add_argument("-f", "--filename", type=str, metavar="FILENAME", help="audio file to store recording to")
parser.add_argument("-d", "--device", type=int_or_str, help="input device (numeric ID or substring)")
parser.add_argument("-r", "--samplerate", type=int, help="sampling rate")
parser.add_argument("-m", "--model", type=str, help="language model; default is en-us")
args = parser.parse_args(remaining)

dump_fn = None
try:
    # Set up the model and samplerate
    if args.samplerate is None:
        device_info = sd.query_devices(args.device, "input")
        args.samplerate = int(device_info["default_samplerate"])

    if args.model is None:
        model = Model(lang="en-us")
    else:
        model = Model(lang=args.model)

    if args.filename:
        dump_fn = open(args.filename, "wb")

    # Set up USB printer (adjust device IDs as necessary)
    # --- This is the small black printer ---
    # iprinter = Usb(0x0483, 0x811E, in_ep=0x81, out_ep=0x02)
    # --- This is the big black printer ---
    iprinter = Usb(0x04B8, 0x0E28, timeout=0, profile="TM-T88III")

    # Start the raw input stream from the microphone
    with sd.RawInputStream(samplerate=args.samplerate, blocksize=4000, device=args.device, dtype="int16", channels=1, callback=callback):
        print("#" * 80)
        print("Press C to cut the paper, Ctrl+C to stop the recording")
        print("#" * 80)

        rec = KaldiRecognizer(model, args.samplerate)
        c_was_down = False  # previous state of the C key, so one press gives one cut
        while True:
            data = q.get()  # Get the next audio chunk
            if rec.AcceptWaveform(data):
                result_json = json.loads(rec.Result())
                text = result_json.get("text", "")
                if text:  # If recognized text exists, print it
                    print(f"Recognized: {text}")
                    iprinter.text(text + "\n")  # Send recognized text to the printer
            # Optional: You can also use PartialResult for real-time feedback, uncomment to use:
            # else:
            #     print(rec.PartialResult(), flush=True)

            # Checked on every audio chunk (not only after a recognized sentence),
            # so the paper is cut as soon as C is pressed
            c_down = keyboard.is_pressed("c")
            if c_down and not c_was_down:
                iprinter.cut()
            c_was_down = c_down

            if dump_fn is not None:
                dump_fn.write(data)

except KeyboardInterrupt:
    print("\nDone")
    parser.exit(0)
except Exception as e:
    parser.exit(type(e).__name__ + ": " + str(e))
finally:
    # Close the optional audio dump file whatever way the script ends
    if dump_fn is not None:
        dump_fn.close()
</syntaxhighlight>

:; VCV Rack 2

[[File: Vcv.png]]

* '''My part in the project'''

We collectively decided to merge our ideas into a super-project. We found that messing with story-telling and translation was very interesting and ''à propos'', since everyone in the class speaks a different native language. It questions the topics of documentation, accuracy and meaning.

To that end, I managed a large part of the project, making sure every part was working, and especially that the parts worked together. I made sure everyone had a role in the group.

* '''What I take from it'''

I tried my best to understand the python scripts, but couldn't understand them well enough to merge them. I need to step up and get better at this; python is cool!

I naturally tend to manage groups and ensure the harmony of the working process.

The project could benefit from a better workflow, so that maybe it could travel without needing 3 different laptops. How can we achieve that?...

<p style="font-weight: bold; text-transform: uppercase; text-align: right;">Dedicated wiki page: [[Lost in Narration]]</p>

<br>

===<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF;">Protocol for wrapping up the Special Issue 25</span>===

<span style="font-family: monospace; font-weight: bold; font-size: 15px;">25-11-2024 to ...</span><br>

We gathered as a team and discussed the outcome we want for the Special Issue 25.<br>


So we divided tasks to work efficiently:

* Design group: code + design

** Feline + Kiara + Kim + Tessa

* Archive group: gathering all wikis and pages, gathering and selecting pictures

** Wiki: Charlie + Claudio

** Pics: Chrissy + Zuhui

** Video editing: Wyn


[https://pad.xpub.nl/p/Post-Prod_Sounds-of-Making Link to the pad]

=====<span style="border: dotted 1.5px rgb(92, 58, 196); padding: 5px 7px; background-color: #F7FAFF; font-size: smaller;">21-03-2025 UPDATE</span>=====

In the end, we decided to build an entirely ''new'' website. It uses a script and the wiki API to fetch data from wiki pages and insert them as content.<br>

To do so, we took a very methodical approach: we went through all the wiki pages for the different Radio Shows and SI25 Projects to make sure they followed the same structure and the same naming conventions, and added HTML classes where needed. We also gathered them in new '''Content Pages''' (see below), either by linking them or by using the ''power of transclusion''.<br>

This way, we could also push the content into a paged.js template to produce a printed archive of the trimester.<br>

We are still in the styling process, as this method brought many bugs and required hacks to work properly.<br>

* '''HOW IT WORKS / HOW IT IS MADE'''

A [https://git.xpub.nl/kiara/Special-Issue-25/src/branch/main/js/view.js script] uses the Wiki API to fetch the content (see below) and put it into an HTML file.<br>

By adding classes and HTML tags inside the wiki pages, we can now target them in CSS to style the website and the print accordingly.

The print is generated using paged.js.

We use various stylesheets for the website, allowing us to work remotely and simultaneously without overwriting each other's work. Kim and I have been doing some stylesheet cleaning and restructuring lately.
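Our actual fetching is done by <code>view.js</code>, in JavaScript. As a rough illustration of the same idea in python, the MediaWiki Action API can return the rendered HTML of a page (templates and transcluded pages already expanded) through <code>action=parse</code>. The API address below is an assumption based on the wiki's <code>/mw-mediadesign/</code> path, and the function names, the User-Agent string and the output file name are made up for the example.

<syntaxhighlight lang=python>
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Assumption: the Action API endpoint sits next to the wiki's /mw-mediadesign/ path
API_URL = "https://pzwiki.wdka.nl/mw-mediadesign/api.php"


def build_parse_url(page, api_url=API_URL):
    """Build the API URL that asks MediaWiki to render `page` as HTML."""
    params = {
        "action": "parse",
        "page": page,
        "prop": "text",
        "format": "json",
        "formatversion": "2",  # version 2 returns the HTML as a plain string
    }
    return f"{api_url}?{urlencode(params)}"


def fetch_page_html(page):
    """Download the rendered HTML of a wiki page (needs network access)."""
    request = Request(build_parse_url(page), headers={"User-Agent": "si25-archive-sketch"})
    with urlopen(request) as response:
        data = json.load(response)
    return data["parse"]["text"]


def save_page(page, out_path):
    """Wrap the page HTML in a minimal HTML document and save it to out_path."""
    html = fetch_page_html(page)
    with open(out_path, "w", encoding="utf-8") as f:
        f.write(f"<!DOCTYPE html>\n<html>\n<body>\n{html}\n</body>\n</html>\n")
</syntaxhighlight>

For example, <code>save_page("Special Issue 25", "special-issue-25.html")</code> would write the homepage content to a local file. Because the classes added inside the wiki pages travel with the rendered HTML, a stylesheet linked from that file could target them the same way.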

'''Wiki Pages (Content)'''

:;[[Special Issue 25 | Website homepage]]

:;[[Special Issue 25 to print | The print content]]

:;[[SI25 Radio Shows | Radio Shows]]

:;[[SI25: Sounds of Making | Public Event and Pictures]] <br>


→ [[SI25 WIKI COLLECTION | The working spreadsheet]] made by Claudio and Charlie to make sure the content was up to date and ready to be pushed to the HTML source.

'''Preview Links'''<br>

:;[https://hub.xpub.nl/cerealbox/Special-Issue-25/view.html?pagename=Special%20Issue%2025 Website] (WIP)

:;[https://hub.xpub.nl/cerealbox/Special-Issue-25/print.html Print] (to be printed)

* '''MY PART IN THE PROJECT'''

I took care of the whole print. Some decisions were made collectively, such as whether or not to display the audio players (I voted yes...).

Then, once Claudio and Charlie had cleaned up the wiki pages, I did the whole print layout. I went back into the wiki pages to add some classes that were needed to hide content, and did some more cleaning (e.g. some image galleries had been left as tables...).

Once I was done, I went back to help with the website. We still have to finish it all and print the issue...

* '''WHAT I TAKE FROM IT'''

I am a paged.js advocate.

It is starting to look like a cult and I am a guru '-'

We had some difficulty keeping track of this project; everything was so chaotic in December...

Latest revision as of 14:38, 1 April 2025

▁▂▃Protocols for Collective Performance▃▂▁

Since everything seems to be a mise en abyme, this page is going to be all about protocols, in order to be meta and in harmony with this Special Issue's title.

General page of the trimester

Protocol for a field recording

Audio file taken from aporee.org near the Balma-Gramont metro station in Toulouse.

Listen to the audio on the website

Context

This precise place is actually one of the city's borders. It connects Toulouse with Balma, which lies to the north-east.

In the photo above, you can see the Hers[1] river and a bridge that the metro passes over (hear it at 01:49). Near this precise point on the map are a big commercial complex, lots of companies and an entrance to the highway. So this is obviously not the place where you would expect to hear birds. Yet, here they are! 🐦⬛

[1] pronounced “èrss” (or /ɛʁs/ according to the IPA audio chart and the IPA vowels audio chart)

Metadata

Memory from the place

A song that goes with the memory (kind of)

https://www.youtube.com/watch?v=AlwgC9yqNcw

This is a song from my childhood.

Permission granted to share all the audio recordings in the radio show for Sept. 23 2024

Protocol for mourning a database

See User:Kiara/mourning for pictures

- Choose a date in the calendar, maybe the day you lost the DB :(( and set it as a holiday.

- Tell your boss/clients/whoever you want that you're in grief and need mourning leave.

- Rebuild the DB when grief is over :((

- Learn to back up your DBs to prevent future griefs.

Protocol for a first radio show

23-09-2024

The very first show that took place at Radio WORM with Sevgi, Fred, Kim and Martina.

We used the field recordings, metadata and songs people put on their Field Recording wiki pages.

Listen to the show on Mixcloud!!

Dedicated wiki page: SI25 Broadcast 1: Soundmapping

We made a map tracing the different routes we took with the recordings!

Protocols for Collective Reading

See the pages referenced below:

- Alphabet Soup

- Bookclub

- The pads from Lidia's sessions listed in the XPUB 2024-2026 Survival Guide

Protocols for a Jam Session

24-09-2024

Jam Session with Joseph: creating instruments with unusual objects

Jam 1

Jam 2 (chaos)

Jam 3 (quiet)

Protocol for Canon Music

14-10-2024

Session with Michael: reading and performing Clapping Music by Steve Reich.

The pattern is 3-2-1-2 and it's looping.

What happens when the second clapper enters and loops their part?

→ It becomes a canon!

What would be a better way of writing this score?

→ In a wheel!

It feels like unveiling a secret hidden in the score: like solving a riddle!

Protocol for a Delayed Radio Show

04-11-2024

with Chrissy, Tessa, Imina, Charlie

Today, we were supposed to host one of the best radio shows of the trimester. We worked super hard to make it perfect but... WORM faced technical issues and had to close down the radio for an unknown period of time.

We really put our hearts into the making of this show:

- → the theme is Lost Music: we asked everybody to contribute by uploading songs from their teenage years onto this wiki page. A Spotify playlist also emerged from this!!

- → we burned CDs with the songs, according to the themes we agreed on; the CD cases contain a poster and stickers!

- → we made a web page hosting the playlist (downloadable)

- → we thought about copyright, piracy and music that gets lost through time because it only exists on CDs that people kept (as opposed to being available on streaming platforms); but also about the fact that with streaming platforms we don't own music anymore, and if the platform goes down, we lose the music...

In the end, the show that was supposed to happen on Nov. 4th got pushed to Nov. 25th, and it was a B L A S T.

Dedicated wiki page: SI25 Broadcast 8: Lost Music

Protocol for a Non-Radio Show

18-11-2024

with Feline, Lexie, Eleni, Chrissy

This is the Monday right before the Special Issue Public Event; we are not in a planning mindset. We thus decide to improvise.

We gather vinyls, readings and discussion topics. Lexie and Feline are DJing; Chrissy, Eleni and I are improvising a podcast. We will start by planning the radio show we are actually broadcasting (mise en abyme hehe).

Dedicated wiki page: SI25 Broadcast 7: Improvisation

S M O O T H

Protocol for organizing an event

28-10-2024 to 03-11-2024

We had 3 meetings in 2 days about task management, forming working groups and deciding what we do and how we do it.

I am on the documentation team, looking for ways to archive the whole event (websiteeeeeeeeee)

- My project idea for the event

- Inspired by Sarah Garcin's Radio to Print workshops 🠶 there's a python dependency to transform speech into printed text.

- Mixed with Eleni's idea of having a spoken input transformed into music via VCV Rack -- in the end we get

spoken input => becomes music for the listeners + gets printed live (thermal or fax machine)

- Adding Zuhui's idea

- To engage visitors in this performance, they can become part of the foundational text/speech material for the auditory output: One or two writers will be present in the room, live scripting as they observe the event. This observation can focus on the visitors —or anyone in the room— and may include fictional elements depending on the writers' interpretations and creative choices. Ideally, this live-scripting will be projected on a screen, allowing visitors to watch as they’re being observed and transformed into text, and then speech, which then becomes sound and then text again (in VCV+python) —all in real-time.

- Sevgi's idea

- This script could also serve as part of the documentation (post-production) of the event, because it is the written record of a live observation made in the very moment and setting of the performance.

- Whole public needed

- Works best without a fixed time frame, but could work within a frame of around 2h

Remaining questions to work on:

- Is it live radio or a recording during the day? After thinking about it: with Zuhui's addition to the pitch, maybe recording makes more sense? (open question)

- Ask Joseph/Manetta how to install the python dependencies, because I can't get them to work

- Work on how to connect all the different processes (python + vcv + livescript)

Personal documentation of the project

- WHAT IT IS

Lost in Narration is a performative installation: a Text-to-Speech-to-Text-to-Music-and-Print setup. The installation takes a written text input which, once spoken into microphones, gets translated back into text and into music simultaneously; the text is then printed on a thermal printer.

Written Text ---> Speech in mics ---> Speech back to text ---> Music + Print

- WHAT IT DOES

It questions the purposes and outcomes of communication and story-telling. A livescript of the event gets misinterpreted by a python script as it is spoken into microphones, telling a totally different story from the one written by the narrators.

- HOW IT WORKS

Two narrators sit in front of keyboards and livescript whatever is happening around them. They can decide to twist the actual events and communicate with each other through that narration. The script is projected on a separate screen, in front of which sit two speakers, each one reading out one narrator's lines.

---- Add a drawing! ----

When the speakers talk in the microphones, three things happen simultaneously:

- - A python script tries to transcribe the words into written text, mostly messing it up

- - The same script also prints this written output on a thermal printer

- - An additional computer uses a VCV Rack 2 setup to translate the spoken words into music/sound as they are spoken

When the speakers are done speaking, they press the C key to tell the printer to cut the paper (the script checks for the C key; CTRL+C stops the script itself).

- HOW IT IS MADE

- Narration interface

Built in Unity: each narrator gets a font and a colour. Two keyboards are plugged into the same computer and both write into the same interface.

Controls: keys to write, TAB to switch fonts/voices, ENTER to start a new line

A .txt file containing all the written lines is created and kept up to date during runtime; it acts as a log.

- Text-to-Speech-to-Text-to-Print

Using python and python libraries.

- 1. Install and run the speech recognition (Windows)

- - create a dedicated folder on the computer, where you want to store the scripts and written files

- - install sounddevice with: pip install sounddevice

- - install vosk with: pip install vosk

- - download this file (test_microphone.py) and put it in the folder

- - cd into your folder through the terminal

- - run: python test_microphone.py

- - the program is stopped with CTRL+C

- - (optional) you can modify test_microphone.py by commenting out lines 80 and 81. This prevents the program from printing the partial results of its attempts to recognize what's being said

- - if you want to save what is being recognized into a text file, run: python test_microphone.py >> stderrout.txt. The >> appends the new data to the end of the file, so the output of earlier runs is kept when you stop and restart the program. If you want to overwrite the file every time, simply use > instead.
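The difference between >> and > is the same as opening a file in append mode or in write mode. Here is a small Python sketch of that choice; the save_transcript helper is hypothetical and only illustrates the two modes, it is not part of test_microphone.py.

```python
from pathlib import Path


def save_transcript(path: Path, text: str, append: bool = True) -> None:
    """Write one recognized line to a text file.

    append=True behaves like `>>` (earlier content is kept),
    append=False behaves like `>` (the file is emptied first).
    """
    mode = "a" if append else "w"
    with path.open(mode, encoding="utf-8") as f:
        f.write(text + "\n")
```

Calling save_transcript twice with the default keeps both lines; calling it with append=False leaves only the last line in the file.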

- 2. Install the thermal printer

- - install pyusb with: pip install pyusb, and download the libusb-win32 packages here

- - install libusb and all the drivers through Zadig: in Options select List All Devices and from the dropdown select the printer device. Then, go through the list of drivers with the arrows and install the WCID Driver for each. IMPORTANT: replace the printer's driver with the libusb-win32 driver, as this is what allows the connection. Zadig will also give you the printer's ids, which are needed in the script.py file we're creating later.


- - install ESC/POS with: pip install python-escpos

- - create a new .py file in your folder via the code editor, call it script.py and put the code below in it; don't forget to change the ids in the parentheses. Now you can run python script.py to see the magic happening!


from escpos.printer import Usb

iprinter = Usb(0x0483, 0x811E, in_ep=0x81, out_ep=0x02) #here you replace 0483 and 811E with the ids provided by Zadig

iprinter.text("hello world")

iprinter.qr("https://xpub.nl")
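Zadig shows the vendor and product ids as hexadecimal strings (for the small printer, 0483 and 811E), while Usb() expects integers, which is why they are written with the 0x prefix in the script. If you copy the ids from Zadig as text, a small hypothetical helper like this one converts them:

```python
def parse_usb_ids(vendor: str, product: str) -> tuple[int, int]:
    """Convert hex id strings as displayed by Zadig (e.g. "0483", "811E") into ints."""
    # int(..., 16) accepts both "811E" and "0x811E"
    return int(vendor, 16), int(product, 16)


vendor_id, product_id = parse_usb_ids("0483", "811E")
print(hex(vendor_id), hex(product_id))  # prints: 0x483 0x811e
```

The two values can then be passed as Usb(vendor_id, product_id, in_ep=0x81, out_ep=0x02), exactly like the literal ids above.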

- 3. Merge the test_microphone.py and script.py scripts


Here is the final code that gets the voice, writes it and prints it:

import argparse
import json
import queue
import sys

import keyboard  # global key detection, install with: pip install keyboard
import sounddevice as sd
from escpos.printer import Usb
from vosk import Model, KaldiRecognizer


def int_or_str(text):
    """Helper function for argument parsing to accept either an integer or string."""
    try:
        return int(text)
    except ValueError:
        return text


# Queue that hands audio chunks from the audio thread to the main loop
q = queue.Queue()


def callback(indata, frames, time, status):
    """Called by sounddevice for every audio block: push the raw bytes to the queue."""
    if status:
        print(status, file=sys.stderr)
    q.put(bytes(indata))


# Argument parsing for devices, samplerate, etc.
parser = argparse.ArgumentParser(add_help=False)
parser.add_argument("-l", "--list-devices", action="store_true", help="show list of audio devices and exit")
args, remaining = parser.parse_known_args()

# List audio devices if requested
if args.list_devices:
    print(sd.query_devices())
    parser.exit(0)

parser = argparse.ArgumentParser(description="Vosk microphone to printer", formatter_class=argparse.RawDescriptionHelpFormatter, parents=[parser])
parser.add_argument("-f", "--filename", type=str, metavar="FILENAME", help="audio file to store recording to")
parser.add_argument("-d", "--device", type=int_or_str, help="input device (numeric ID or substring)")
parser.add_argument("-r", "--samplerate", type=int, help="sampling rate")
parser.add_argument("-m", "--model", type=str, help="language model; default is en-us")
args = parser.parse_args(remaining)

dump_fn = None
try:
    # Set up the samplerate and the model
    if args.samplerate is None:
        device_info = sd.query_devices(args.device, "input")
        args.samplerate = int(device_info["default_samplerate"])
    model = Model(lang=args.model or "en-us")

    if args.filename:
        dump_fn = open(args.filename, "wb")

    # Set up USB printer (adjust device IDs as necessary)
    # --- This is the small black printer ---
    # iprinter = Usb(0x0483, 0x811E, in_ep=0x81, out_ep=0x02)
    # --- This is the big black printer ---
    iprinter = Usb(0x04B8, 0x0E28, timeout=0, profile="TM-T88III")

    # Start the raw input stream from the microphone
    with sd.RawInputStream(samplerate=args.samplerate, blocksize=4000, device=args.device, dtype="int16", channels=1, callback=callback):
        print("#" * 80)
        print("Press C to cut the paper, Ctrl+C to stop the recording")
        print("#" * 80)

        rec = KaldiRecognizer(model, args.samplerate)
        c_was_pressed = False

        while True:
            data = q.get()  # Get the next audio chunk
            if rec.AcceptWaveform(data):
                # A full utterance was recognized: read the "text" field of the JSON result
                text = json.loads(rec.Result()).get("text", "")
                if text:
                    print(f"Recognized: {text}")
                    iprinter.text(text + "\n")  # Send recognized text to the printer
            # Optional: real-time feedback with PartialResult, uncomment to use:
            # else:
            #     print(rec.PartialResult(), flush=True)

            # Check the C key on every chunk (not only after a result), cutting once per press
            c_pressed = keyboard.is_pressed("c")
            if c_pressed and not c_was_pressed:
                iprinter.cut()
            c_was_pressed = c_pressed

            if dump_fn is not None:
                dump_fn.write(data)

except KeyboardInterrupt:
    print("\nDone")
    parser.exit(0)
except Exception as e:
    parser.exit(type(e).__name__ + ": " + str(e))
finally:
    if dump_fn is not None:
        dump_fn.close()
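To test the print logic without a microphone or printer attached, the recognize/print/cut part could be pulled out into a function that takes Vosk-style JSON results and any object with text() and cut() methods. This is a hypothetical refactor, not the code used during the event: route_results, FakePrinter and the "<cut>" marker (standing in for a press of the C key) are illustrative names. It relies on Vosk's Result() returning JSON with a "text" field, which is empty when nothing was recognized.

```python
import json
from typing import Iterable, Protocol


class Printer(Protocol):
    """The two python-escpos methods the script relies on."""
    def text(self, txt: str) -> None: ...
    def cut(self) -> None: ...


def route_results(results: Iterable[str], printer: Printer, cut_marker: str = "<cut>") -> list[str]:
    """Print every non-empty final result and cut the paper when cut_marker arrives."""
    printed = []
    for item in results:
        if item == cut_marker:
            printer.cut()
            continue
        text = json.loads(item).get("text", "")
        if text:  # skip empty results (silence or noise)
            printer.text(text + "\n")
            printed.append(text)
    return printed


class FakePrinter:
    """Records calls instead of talking to a USB printer."""
    def __init__(self) -> None:
        self.calls: list[tuple[str, str]] = []

    def text(self, txt: str) -> None:
        self.calls.append(("text", txt))

    def cut(self) -> None:
        self.calls.append(("cut", ""))
```

Feeding it ['{"text": "hello world"}', '{"text": ""}', "<cut>"] with a FakePrinter records one text call followed by one cut, so the routing can be checked before plugging in the real Usb printer.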

- VCV Rack 2

- My part in the project

We collectively decided to merge our ideas to make a super-project. We found that messing with story-telling and translation was very interesting and à propos since everyone in the class speaks a different native language. It questions the topics of documentation, accuracy and meaning.

To that end, I managed a lot of the project, making sure every part was working and, above all, that the parts worked together. I also made sure everyone had a role in the group.

- What I take from it

I tried my best to understand the python scripts, but couldn't grasp them well enough to merge them. I need to step up and get better at this, python is cool!

I naturally tend to manage groups and ensure harmony in the working process.

The project could benefit from a better workflow, so that maybe it could travel without needing 3 different laptops. How can we achieve that?...

Dedicated wiki page: Lost in Narration

Protocol for wrapping up the Special Issue 25

25-11-2024 to ...

We gathered as a team and discussed the outcome we want for the Special Issue 25.

The idea is the following:

- event documentation

- reflection on the radio, what we did in those 3 months

For this, we decided to make a printable website that displays all the information about the radio shows and the Nov. 20th event.

The website will have two distinct sections, one for the radio and one for the event, built upon the already existing website.

So we divided tasks to work efficiently:

- Design group: code + design

- Feline + Kiara + Kim + Tessa

- Archive group: gathering all the wiki pages, then gathering and selecting pictures

- Wiki: Charlie + Claudio

- Pics: Chrissy + Zuhui

- Video editing: Wyn

21-03-2025 UPDATE

In the end, we decided to build an entirely *new* website. It uses a script and the wiki API to fetch data from wiki pages and insert them as content.

To do so, we took a very methodical approach: we went through all the wiki pages for the different Radio Shows and SI25 Projects to make sure they followed the same structure and the same naming procedure, and added HTML classes where needed. We also gathered them in new Content Pages (see below), either by linking them or by using the power of transclusion.

This way, we could also push the content into a paged.js template to produce a printed archive of the trimester.

We are still in the styling process, as this method introduced many bugs and required hacks to work properly.

- HOW IT WORKS / HOW IT IS MADE

A script uses the Wiki API to fetch the content (see below) and put it in an HTML file.
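The fetch step can be sketched with the MediaWiki Action API's parse module, which returns the rendered HTML of a page. The endpoint below is a placeholder (the real script's endpoint is not given here), and build_parse_url, extract_html and fetch_page_html are hypothetical helpers. With formatversion=2 the rendered HTML comes back as a plain string under parse.text.

```python
import json
import urllib.parse
import urllib.request

# Placeholder endpoint: replace with the wiki's own api.php URL.
API_URL = "https://wiki.example.org/api.php"


def build_parse_url(page: str, api_url: str = API_URL) -> str:
    """URL asking MediaWiki to render one page to HTML (action=parse)."""
    params = {
        "action": "parse",
        "page": page,
        "prop": "text",
        "format": "json",
        "formatversion": "2",  # parse.text becomes a plain HTML string
    }
    return api_url + "?" + urllib.parse.urlencode(params)


def extract_html(response: dict) -> str:
    """Pull the rendered HTML out of a formatversion=2 parse response."""
    return response["parse"]["text"]


def fetch_page_html(page: str, api_url: str = API_URL) -> str:
    """Network call: fetch one wiki page and return its rendered HTML."""
    with urllib.request.urlopen(build_parse_url(page, api_url)) as resp:
        return extract_html(json.load(resp))
```

Because the classes added inside the wiki pages survive in the rendered HTML, the returned string can go straight into the website template and be targeted by CSS.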

By adding classes and HTML tags inside the wiki pages, we can now target them in CSS to style the website and the print accordingly.

The print is generated using paged.js.

We use various stylesheets for the website, allowing us to work remotely and simultaneously without overwriting each other's work. Kim and I have been doing some stylesheet cleaning and restructuring lately.
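Turning the fetched content into a document that paged.js can lay out for print might look roughly like this. The sketch wraps HTML sections (for example one for the radio and one for the event) in a single document, links several stylesheets so different people can edit different files, and loads the paged.js polyfill from unpkg as shown in the paged.js documentation. The function name, section ids and stylesheet names are hypothetical.

```python
from html import escape

# The paged.js polyfill paginates the document in the browser for print.
PAGEDJS_POLYFILL = "https://unpkg.com/pagedjs/dist/paged.polyfill.js"


def build_print_document(title: str, sections: dict[str, str], stylesheets: list[str]) -> str:
    """Assemble one HTML document from {section_id: html} pairs.

    Each section gets its own <section id=...> so CSS can target it.
    Section HTML is inserted as-is, since it comes already rendered from the wiki API.
    """
    links = "\n".join(f'  <link rel="stylesheet" href="{escape(href)}">' for href in stylesheets)
    body = "\n".join(
        f'<section id="{escape(sid)}">\n{content}\n</section>' for sid, content in sections.items()
    )
    return (
        "<!DOCTYPE html>\n<html>\n<head>\n"
        '  <meta charset="utf-8">\n'
        f"  <title>{escape(title)}</title>\n"
        f"{links}\n"
        f'  <script src="{PAGEDJS_POLYFILL}"></script>\n'
        "</head>\n<body>\n"
        f"{body}\n"
        "</body>\n</html>\n"
    )
```

Stylesheets are linked in list order, so a later file (say a print-only one) can override rules from an earlier shared one.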

Wiki Pages (Content)

→ The working spreadsheet made by Claudio and Charlie to make sure the content was up to date and ready to be pushed to the html source.

Preview Links

- MY PART IN THE PROJECT

I took care of all of the print. Some decisions were made collectively, such as choosing whether or not to display the audio players (I voted yes...). Then, once Claudio and Charlie had cleaned up the wiki pages, I did the whole print layout. I went back into the wiki pages to add some classes that were needed to hide content, and did some more cleaning (e.g. some image galleries had been left as tables...).

Once I was done, I went back to help with the website. We still have to finish it all and print the issue...

- WHAT I TAKE FROM IT

I am a paged.js advocate.

It starts to look like a cult and I am a guru '-'

We had some difficulty keeping track of this project; everything was so chaotic in December...