User:Markvandenheuvel/prototyping hackpacts

| (35 intermediate revisions by the same user not shown) | |||

| Line 37: | Line 37: | ||

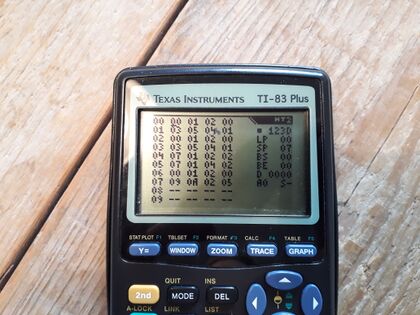

I came across an open-source project that makes it possible to 'hack' old Texas Instruments calculators and turn them into 1-bit music composing tool (instrument). The program ([[https://irrlichtproject.de/houston/ Houston Tracker)]] is still not being used so much and remains quite obscure. HT2 converts the binary output of the TI-calculator to generate 1-bit sound. What I think is really interesting is that 1-bit sound represents the on/off binary basics of computing. The 1-bit sound is therefore close to the inner process. | I came across an open-source project that makes it possible to 'hack' old Texas Instruments calculators and turn them into 1-bit music composing tool (instrument). The program ([[https://irrlichtproject.de/houston/ Houston Tracker)]] is still not being used so much and remains quite obscure. HT2 converts the binary output of the TI-calculator to generate 1-bit sound. What I think is really interesting is that 1-bit sound represents the on/off binary basics of computing. The 1-bit sound is therefore close to the inner process. | ||

[[File:20201103 140137.jpg| | [[File:20201103 140137.jpg|420px]] | ||

https://youtu.be/ | [[https://youtu.be/Ktyi1d2ohAY See it work]] | ||

===Key points=== | ===Key points=== | ||

| Line 74: | Line 74: | ||

: 1-bit synthesis techniques: https://phd.protodome.com/#anchor-pulse-width-sweep\ | : 1-bit synthesis techniques: https://phd.protodome.com/#anchor-pulse-width-sweep\ | ||

: Graphlink cable'''for converting binary data to sound: https://www.amazon.com/Texas-Instruments-94327-Graphlink-USB/dp/B00006BXBS | : Graphlink cable'''for converting binary data to sound: https://www.amazon.com/Texas-Instruments-94327-Graphlink-USB/dp/B00006BXBS | ||

=KCS: digital data standard explorations= | =KCS: digital data standard explorations= | ||

| Line 164: | Line 163: | ||

[https://hub.xpub.nl/sandbox/~markvandenheuvel/results/t.wav Listen to this image ] | |||

https://hub.xpub.nl/sandbox/~markvandenheuvel/results/t.wav | |||

====playful ideas & potentials to further explore:==== | ====playful ideas & potentials to further explore:==== | ||

| Line 190: | Line 188: | ||

[[File:6100c610fbf73c210905eef0d538f9af.png|500px]] | [[File:6100c610fbf73c210905eef0d538f9af.png|500px]] | ||

===Tutorial with David Moroto | ===Tutorial with David Moroto === | ||

====What is your content?==== | ====What is your content?==== | ||

| Line 198: | Line 196: | ||

: dept! (true for me, fiction for other) | : dept! (true for me, fiction for other) | ||

: 'conversational tool' between the analog and digital | : 'conversational tool' between the analog and digital | ||

: tool of mediation | : tool of mediation | ||

==Inspiration== | ==Inspiration== | ||

| Line 342: | Line 339: | ||

:: ''- "You just looked outside through a window, staring at the screen again, you think about the past."'' | :: ''- "You just looked outside through a window, staring at the screen again, you think about the past."'' | ||

:: ''- "Lately, I often got asked if I am a robot. Are a robot. Are you a robot?"'' | :: ''- "Lately, I often got asked if I am a robot. Are a robot. Are you a robot?"'' | ||

:: ''- " | :: ''- "Listen closely to this image!"'' | ||

====Output==== | ====Output==== | ||

| Line 349: | Line 346: | ||

====Inspiration taken from tape recordings of:==== | ====Inspiration taken from tape recordings of:==== | ||

*'Alfa Training' course | *'Alfa Training' motivational audio course about 'self-programming', regaining autonomy and guidance in making life and work decisions [https://youtu.be/sZSq1MqnVIw Listen here] | ||

* software (detail: music snippets of | * software on tape! (fun detail: there are music snippets of ABBA in between) | ||

* | * Recording of computer course: 'How to get online?' (mechanical typing sounds, registration, silence to do an exercise) | ||

* guide/course: leader/character, bumpers and theme songs and scores, silence for executing | * guide/course: leader/character, bumpers and theme songs and scores, silence for executing | ||

* blending in personal experiences | * low key blending in personal stories and experiences | ||

* use metaphors and figure of speech from 'alpha training' | |||

Important input: | |||

: Donna Harraway: viewpoints "cyborg" (some parts are hard to digest) | |||

: Sun Ra: creating myths, "sonic fiction" (planetary importance) | |||

: Radical software: knowledge, sharing, community building | |||

: Erkki Kurenniemi / Computer eats Art (misusing technology to 'keep control' / in between formats) | |||

<pre> | <pre> | ||

| Line 363: | Line 369: | ||

(_,...'(_,.`__)/'.....+ | (_,...'(_,.`__)/'.....+ | ||

</pre> | </pre> | ||

===Feedback(w/Manetta 12.02.2021)=== | |||

'''On digital text and the development of the Word processor:''' | |||

*[https://www.youtube.com/watch?v=nN9wNvEnn-Q Secret Life Of Machines - The Word Processor] | |||

*[https://monoskop.org/images/f/f9/Cramer_Florian_Anti-Media_Ephemera_on_Speculative_Arts_2013.pdf Anti Media - Florian Cramer (- 'literate programming' (code is an esthetic!)] | |||

*[https://vvvvvvaria.org/curriculum/In-the-Beginning-...-Was-the-Commandline/READER.html Exploring computer text interfaces] | |||

'''Research as publication:''' | |||

* Situationist Times: http://vandal.ist/thesituationisttimes/ | |||

* Publishing: https://www.woodstonekugelblitz.org/ | |||

* http://lists.artdesign.unsw.edu.au/pipermail/empyre/2020-December/011211.html | |||

* streaming the 'sound' of streaming: http://anarchaserver.org:8000/ | |||

'''Practical tools/tips:''' | |||

: Turn a Git repo into a collection of interactive notebooks: https://mybinder.org/ | |||

: jupyter nbconvert | |||

: /var/log/ for (accessing logs of processes) | |||

'''potential idea's:''' | |||

*instagrain' (insstvagram) broadcasting platform (SSTV) | |||

- a website you can visit which constantly broadcasting images you can capture with your phone | |||

* text feed (Twitter) to audio and storing on cassette tape (KCS) | |||

- a 'service' to capture text and store it on cassette tape (and decode it as well) | |||

:::''Mini task: Think about what substantive questions and observations regarding content arise?'' <br> | |||

:::'fluxus' / system / continuity / analog vs digital / etc | |||

standalone: | |||

- /dev/zero | |||

- /zero/null | |||

- RM optie | |||

> server (hub) | |||

===Feedback(w/Michael 12.02.2021)=== | |||

TAKEN FROM SOUNDSTUDIES READER: | |||

'''Semantic Listening''' | |||

I call semantic listening that which refers to a code or a language to interpret a message: spoken language, of course, as well as Morse and other such codes. This | |||

mode of listening, which functions in an extremely complex way, has been the object | |||

of linguistic research and has been the most widely studied. One crucial fi nding is that | |||

it is purely differential. A phoneme is listened to not strictly for its acoustical | |||

properties but as part of an entire system of oppositions and differences. Thus | |||

semantic listening often ignores considerable differences in pronunciation (hence in | |||

sound) if they are not pertinent differences in the language in question. Linguistic | |||

listening in both French and English, for example, is not sensitive to some widely | |||

varying pronunciations of the phoneme a. | |||

Obviously one can listen to a single sound sequence employing both the causal | |||

and semantic modes at once. We hear at once what someone says and how they say it. | |||

In a sense, causal listening to a voice is to listening to it semantically as perception of | |||

the handwriting of a written text is to reading it.2 | |||

'''On Background Listening''' | |||

In early 2009, Jay Rosen, a professor of journalism at New York University, posed a | |||

question to his 12,000 followers on the micro-blogging service Twitter. He wanted to | |||

know why they twittered. Of the almost 200 responses that he initially received via | |||

his blog and Twitter account, he noticed an important similarity. ‘Surprise fi nding | |||

from my project’, he wrote on Twitter on 8 January, ‘is how often I wound up with | |||

radio as a comparison.’ | |||

The comparison may have been unexpected, but it is provocative. Twitter is a | |||

social networking service where users send and receive text-based updates of up to | |||

140 characters. They can be delivered and read via the web, instant messaging clients | |||

or mobile phone as text messages. Unlike radio, which is a one-to-many medium, | |||

Twitter is many-to-many. People choose whom they will follow, which may be a small | |||

group of intimates, or thousands of strangers – they then receive all updates written | |||

by those people. Perhaps the most obvious difference from radio is that there is no | |||

sound broadcast on Twitter. It is simply a network of people scanning, reading and | |||

occasionally posting written messages. Yet the radio analogy persists. As MSN editor | |||

Jane Douglas writes, ‘I see Twitter like a ham radio for tuning into the world’ (cited | |||

in Rosen 2009). | |||

The keynote sounds of a landscape are those created by its geography and climate: | |||

water, wind, forests, plains, birds, insects and animals. Many of these sounds may | |||

possess archetypal signifi cance; that is, they may have imprinted themselves so deeply | |||

on the people hearing them that life without them would be sensed as a distinct | |||

impoverishment. They may even affect the behavior or life style of a society, though | |||

for a discussion of this we will wait until the reader is more acquainted with the | |||

matter. | |||

Signals are foreground sounds and they are listened to consciously. In terms of | |||

the psychologist, they are fi gure rather than ground. Any sound can be listened to | |||

consciously, and so any sound can become a fi gure or signal, but for the purposes of | |||

our community-oriented study we will confi ne ourselves to mentioning some of | |||

those signals which must be listened to because they constitute acoustic warning | |||

devices: bells, whistles, horns and sirens. Sound signals may often be organized into | |||

quite elaborate codes permitting messages of considerable complexity to be | |||

transmitted to those who can interpret them. Such, for instance, is the case with the | |||

cor de chasse, or train and ship whistles, as we shall discover. | |||

The term soundmark is derived from landmark and refers | |||

Latest revision as of 12:35, 28 January 2021

Hackpacts: "home school prototyping"

Prototypes and experiments so far:

- 1-Bit sound synthesis (making music on a 'hacked' TI calculator)

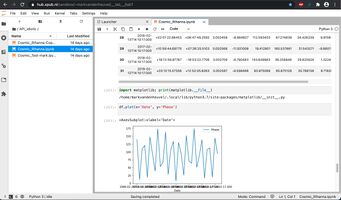

- KCS workflow: txt input > generated text > KCS audio > HTML

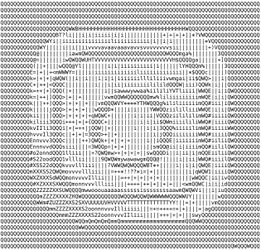

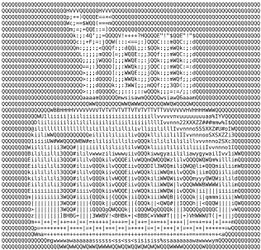

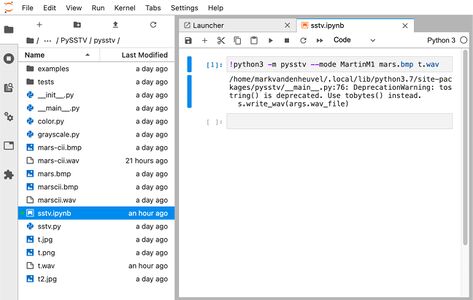

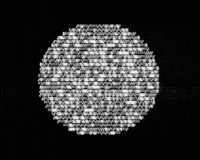

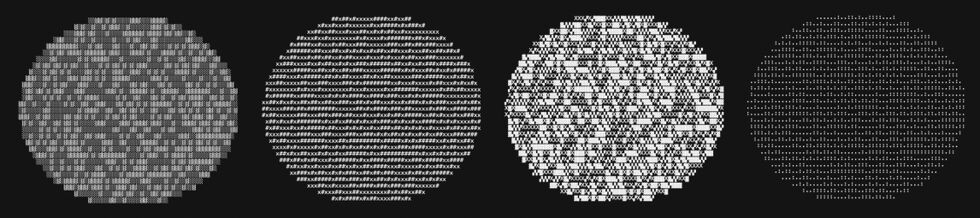

- AALIB experiments + ASCII (quilting): images converted to ASCII via a generator in Python

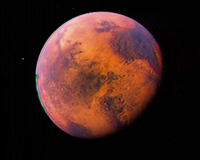

- SSTV workflow tests in Python: modulating images (sending/receiving – encoding/decoding, storing on tape)

- IRC bot experiments: (Urzaloid Franklin)

- API scraping (planetary NASA data mixed with other sources)

- A)PART to de(PART PY.RATE.CHNC workshop: collaborative tape loop creation and recording (materiality of magnetic tape, deconstruction of sound, sonic fiction)

- interactive fiction + text-based interface: writing and generating text based on input.

__ __ ______ ___ ___ _______ ________ ______ __ __ ______ ______ ___

/" | | "\ / " \ |" \ /" | /" "| /" )/" _ "\ /" | | "\ / " \ / " \ |" |

(: (__) :) // ____ \ \ \ // |(: ______)(: \___/(: ( \___)(: (__) :) // ____ \ // ____ \ || |

\/ \/ / / ) :)/\\ \/. | \/ | \___ \ \/ \ \/ \/ / / ) :)/ / ) :)|: |

// __ \\(: (____/ //|: \. | // ___)_ __/ \\ // \ _ // __ \\(: (____/ //(: (____/ // \ |___

(: ( ) :)\ / |. \ /: |(: "| /" \ :)(: _) \ (: ( ) :)\ / \ / ( \_|: \

\__| |__/ \"_____/ |___|\__/|___| \_______)(_______/ \_______) \__| |__/ \"_____/ \"_____/ \_______)

_______ _______ ______ ___________ ______ ___________ ___ ___ __ _____ ___ _______

| __ "\ /" \ / " \(" _ ")/ " \(" _ ")|" \/" ||" \ (\" \|" \ /" _ "|

(. |__) :)|: | // ____ \)__/ \\__/// ____ \)__/ \\__/ \ \ / || | |.\\ \ |(: ( \___)

|: ____/ |_____/ )/ / ) :) \\_ / / / ) :) \\_ / \\ \/ |: | |: \. \\ | \/ \

(| / // /(: (____/ // |. | (: (____/ // |. | / / |. | |. \ \. | // \ ___

/|__/ \ |: __ \ \ / \: | \ / \: | / / /\ |\| \ \ |(: _( _|

(_______) |__| \___) \"_____/ \__| \"_____/ \__| |___/ (__\_|_)\___|\____\) \_______)

1-bit music: TI-83+ calculator experiments

I came across an open-source project that makes it possible to 'hack' old Texas Instruments calculators and turn them into a 1-bit music composing tool (an instrument). The program (Houston Tracker: https://irrlichtproject.de/houston/) is still not widely used and remains quite obscure. HT2 uses the binary output of the TI calculator to generate 1-bit sound. What I find really interesting is that 1-bit sound represents the on/off binary basics of computing. The 1-bit sound is therefore close to the machine's inner process.
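To make the on/off idea concrete, here is a minimal Python sketch of a 1-bit pulse wave. It is not how HT2 works internally; the sample rate, the function names and the 8-bit WAV output are assumptions chosen for illustration. The only thing it shows is that every sample is either 0 or 1, and that pitch and timbre come purely from when the output switches.

```python
import wave

SAMPLE_RATE = 44100  # assumed playback rate, not taken from HT2


def one_bit_pulse(freq_hz, duty, seconds, sample_rate=SAMPLE_RATE):
    """Return a list of 0/1 values: the only two states a 1-bit output has."""
    period = sample_rate / freq_hz          # samples per cycle
    total = int(seconds * sample_rate)
    # The output is 'on' for the first `duty` fraction of every cycle.
    return [1 if (i % period) < duty * period else 0 for i in range(total)]


def write_wav(path, bits, sample_rate=SAMPLE_RATE):
    """Store the bit stream as 8-bit mono audio (0 -> lowest level, 1 -> highest)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(1)                 # 8-bit unsigned samples
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(255 if b else 0 for b in bits))


tone = one_bit_pulse(freq_hz=440, duty=0.25, seconds=0.5)
# write_wav("one_bit.wav", tone)  # uncomment to listen
```

Changing `duty` while keeping `freq_hz` fixed alters the timbre without changing the pitch, which is the basis of the pulse-width techniques described in the 1-bit synthesis resources.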

Key points

- 1-bit music programming and playback (using Houston Tracker II by UTZ)

- materializing binary data + sound of the CPU (on/off)

- recording on cassette tape: analog processing of digital information

- the benefits of working with limitations

- 'Zombie media': reappropriating obsolete tech and exploring its potential instead of discarding it: what does it mean?

- implement it in today's workflow (audio/visual, programming, etc)

playful ideas & potentials to further explore:

- 1-bit sound publication

- implementing graphics: bitmaps

- How to embed this in a modern context? What's the use?

- BASIC programming language

- 1-bit sound publication

To spread both this project and the music, I thought about making a publication/release/demo in one.

- write a 4 track album for it and release it on a TI-83.

- People that buy it would receive a TI with the tracks on it (collected from thrift store / Marktplaats)

- mail it to people

- a way to get started 'hands-on'

- seeing how the tracks were produced might get people started

- enlarge interest, spread the word & expand community?

This way, the public can not only listen but also directly engage and get their hands dirty if they prefer. What I also find interesting is that, in contrast to making music with the sound chips of obsolete gaming consoles, this is much further detached from retro aesthetics. It focuses much more on the technology and on thinking about how to use this device differently.

links & Resources

- TILP: open source program for memory flashing on TI

- Houston Tracker 2: https://www.irrlichtproject.de/houston/houston1/index.html

- DOORS GUI: https://dcs.cemetech.net/index.php/Doors_CS_7_Scratchwork

- graphics: https://www.ticalc.org/pub/win/graphics/

- 1-bit synthesis paper: https://www.gwern.net/docs/cs/2020-troise.pdf

- 1-bit synthesis techniques: https://phd.protodome.com/#anchor-pulse-width-sweep

- Graphlink cable for converting binary data to sound: https://www.amazon.com/Texas-Instruments-94327-Graphlink-USB/dp/B00006BXBS

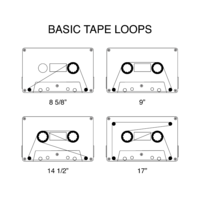

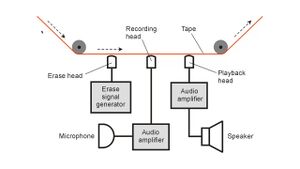

KCS: digital data standard explorations

KCS, or Kansas City Standard, was developed to convert data (in the form of ASCII text) into sound so it could be stored on media such as magnetic tape; it also proved suitable for broadcasting over radio. This made the data more easily interchangeable. KCS is still used today to restore large quantities of archival material that was stored on magnetic tape. It was an attempt to standardize among several closely related methods: the Commodore 64, for instance, had its own method, which was very prone to errors.
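A minimal sketch of how KCS turns text into sound, assuming the commonly documented 300 baud variant: a 0 bit is 4 cycles of 1200 Hz, a 1 bit is 8 cycles of 2400 Hz, and every byte is framed by one start bit (0) and two stop bits (1). The 9600 Hz sample rate, the square wave and the function names are assumptions made to keep the arithmetic simple; this is not the notebook code used in the workflow.

```python
SAMPLE_RATE = 9600   # assumed: chosen so every bit is exactly 32 samples
BAUD = 300           # KCS data rate: 300 bits per second


def frame_byte(value):
    """One KCS frame: start bit 0, 8 data bits (least significant first), 2 stop bits 1."""
    data = [(value >> i) & 1 for i in range(8)]
    return [0] + data + [1, 1]


def bit_to_samples(bit):
    """A 0 is 4 cycles of 1200 Hz, a 1 is 8 cycles of 2400 Hz (square wave, +1/-1)."""
    freq, cycles = (2400, 8) if bit else (1200, 4)
    half = SAMPLE_RATE // (2 * freq)        # samples per half cycle
    return ([1] * half + [-1] * half) * cycles


def kcs_encode(text):
    """Encode ASCII text as a flat list of +1/-1 samples."""
    samples = []
    for byte in text.encode("ascii"):
        for bit in frame_byte(byte):
            samples.extend(bit_to_samples(bit))
    return samples


signal = kcs_encode("A")
```

Each character costs 11 bits, so at 300 baud roughly 27 characters fit in one second of audio, which is where the 'slow' quality of the transmission comes from.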

Key Points

- KCS workflow setup in Python (Jupyter Notebook) (text input > generated text > KCS audio > HTML)

- slow data transmission / images arise line by line

- materiality of data via sound (physical connection, embodiment of a process)

- deconstruction: encoding/decoding

- storage and playback (on audio cassette)
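The decoding half of the encoding/decoding point can be sketched in a few lines of Python. The sketch assumes a clean, perfectly aligned signal of +1/-1 samples at 9600 Hz (32 samples per bit at 300 baud) and tells the two tones apart by counting sign changes; a recording played back from real cassette tape would need filtering and resynchronisation that are left out here. All names are hypothetical.

```python
SAMPLES_PER_BIT = 32   # assumed: 9600 Hz sample rate at 300 baud


def read_bit(window):
    """Count sign changes: few changes means 1200 Hz (0), many means 2400 Hz (1)."""
    changes = sum(1 for a, b in zip(window, window[1:]) if a != b)
    return 1 if changes > 11 else 0


def kcs_decode(samples):
    """Turn +1/-1 samples back into text, assuming clean, aligned 11-bit frames."""
    bits = [read_bit(samples[i:i + SAMPLES_PER_BIT])
            for i in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT)]
    chars = []
    for start in range(0, len(bits) - 10, 11):
        frame = bits[start:start + 11]
        if frame[0] != 0 or frame[9:] != [1, 1]:
            raise ValueError("framing error: expected start bit 0 and stop bits 1, 1")
        # Data bits arrive least significant bit first.
        value = sum(bit << i for i, bit in enumerate(frame[1:9]))
        chars.append(chr(value))
    return "".join(chars)
```

The threshold of 11 sits halfway between the 7 sign changes of a 1200 Hz bit and the 15 of a 2400 Hz bit at this sample rate.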

playful ideas & potentials to further explore

- bot that outputs encoded texts via audio

- bot that outputs encoded ASCII art (ASCII IMAGES) via audio

- printing out text on a receipt printer

- creating a modern Flexi Disc 'Floppy ROM': https://en.wikipedia.org/wiki/Kansas_City_standard#/media/File:FloppyRom_Magazine.jpg

resources

- KCS standard: https://en.wikipedia.org/wiki/Kansas_City_standard

- Storing files on an audio cassette: https://www.instructables.com/Storing-files-on-an-audio-cassette/

- future of data storage: https://spectrum.ieee.org/computing/hardware/why-the-future-of-data-storage-is-still-magnetic-tape

- Data Files on Tape (A Modern Attempt) https://youtu.be/muJDUonIOz8

AALIB + ASCII generating experiments

ASCII art is a graphic design technique that uses computers for presentation and consists of pictures pieced together from the 95 printable (from a total of 128) characters defined by the ASCII Standard from 1963 and ASCII compliant character sets with proprietary extended characters (beyond the 128 characters of standard 7-bit ASCII). The term is also loosely used to refer to text-based visual art in general. ASCII art can be created with any text editor, and is often used with free-form languages. Most examples of ASCII art require a fixed-width font (non-proportional fonts, as on a traditional typewriter) such as Courier for presentation.

AAlib is a software library that allows applications to automatically convert still and moving images into ASCII art. It was released by Jan Hubicka as part of the BBdemo project in 1997.
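The basic idea behind image-to-ASCII conversion can be sketched without any library: each pixel's brightness picks a character from a ramp that runs from 'empty' to 'dense'. This is not AAlib's algorithm (AAlib matches character shapes far more carefully); the character ramp and the hand-made pixel grid below are assumptions for illustration.

```python
RAMP = " .:-=+*#%@"   # assumed character ramp, from dark (space) to bright (@)


def to_ascii(pixels):
    """Map a grid of grayscale values (0-255) to one character per pixel."""
    lines = []
    for row in pixels:
        lines.append("".join(RAMP[value * (len(RAMP) - 1) // 255] for value in row))
    return "\n".join(lines)


# A tiny hand-made 'image': a bright disc on a dark background.
planet = [
    [0,   60, 120,  60,   0],
    [60, 200, 255, 200,  60],
    [0,   60, 120,  60,   0],
]
print(to_ascii(planet))
```

With a real image, the grid would come from a resized grayscale version of the picture, and because characters are taller than they are wide the image is usually squeezed vertically first.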

ASCII planet renders in Python

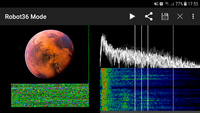

SSTV (Slow Scan Television) experiments

SSTV, or Slow Scan Television, is a protocol for sending images over audio frequencies. It was originally developed as an analog method in the late 1950s and became accessible to the public in the early '90s, when the custom radio equipment was replaced by PC software. The sound holds the information about which color to place where, line by line, so the image is slowly built up as the audio is decoded in real time. The method is still used today by the amateur (ham) radio community for sending and collecting images, and the International Space Station (ISS) still sends images to Earth this way. The way SSTV generates images is closely related to the material process of digital printing on paper.
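How 'the sound holds the color' can be sketched in Python: in SSTV, brightness is carried as frequency, with 1500 Hz for black, 2300 Hz for white and 1200 Hz reserved for sync pulses. The line layout below is only loosely modelled on the Martin M1 mode (green, blue, then red for each line); the timing values and function names are assumptions, and porch and separator pulses are left out. For real encoding, the PySSTV package from the links is the practical route.

```python
BLACK_HZ = 1500   # SSTV: darkest pixel value
WHITE_HZ = 2300   # SSTV: brightest pixel value
SYNC_HZ = 1200    # SSTV: line sync pulse


def pixel_to_freq(value):
    """Map one 8-bit colour channel value (0-255) to an audio frequency in Hz."""
    return BLACK_HZ + (WHITE_HZ - BLACK_HZ) * value / 255


def line_to_tones(rgb_row, ms_per_pixel):
    """Describe one scan line as (frequency, duration_ms) pairs.

    Assumed layout, loosely after Martin M1: a sync pulse, then the green,
    blue and red channels of the row one after another.
    """
    tones = [(SYNC_HZ, 4.862)]
    for channel in (1, 2, 0):               # green, blue, red
        for pixel in rgb_row:
            tones.append((pixel_to_freq(pixel[channel]), ms_per_pixel))
    return tones


row = [(255, 0, 0), (0, 0, 0), (255, 255, 255)]   # red, black, white
tones = line_to_tones(row, ms_per_pixel=0.4576)
```

Because every pixel of every line gets its own slice of time, a full image takes on the order of minutes to send, and a decoder can paint it row by row while listening.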

SSTV Workflow experiments

Signal published on website:

https://hub.xpub.nl/sandbox/~markvandenheuvel/

Key points

- modulation: protocol/standard to send images over radio

- workflows and experiments using Python3 and bash in Jupyter Notebook

- exploring the materiality of data via sound

- 'slow' data transmission (in contrast with invisible processes and speed)

- encoding /decoding: deconstruction of data

- both interests combined: lo-tech graphics & sound!

SSTV Workflow in use: Image to SSTV signal received by phone

SSTV workflow and decoding via app

LISTEN

- Sound file of Mars image (mode: MartinM1): File:Sstv mars.mp3

- Sound file of Mars ascii image (mode: MartinM1) File:Sstv ascii mars.mp3

Instagram to SSTV: Instagrain Broadcasting

playful ideas & potentials to further explore:

- broadcasting images over Spotify (or 'invade' existing platforms with hidden content?)

- music & SSTV data combined? https://www.youtube.com/watch?v=tJ2X6HmW49E

links & resources:

- broadcasting software: https://www.qsl.net/kd6cji/downloads.html

- Python scripts to convert images to audio: https://pypi.org/project/PySSTV/

- general resources: http://users.belgacom.net/hamradio/sat-info.htm

- RXSSTV: http://users.belgacom.net/hamradio/rxsstv.htm

- SSTV tools (encoding/decoding) http://www.dxatlas.com/sstvtools/

- recent SSTV project: https://hsbp.org/rpi-sstv

- Pictures On Cassette: https://www.youtube.com/watch?v=c38dLDQoRtM

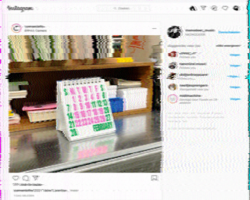

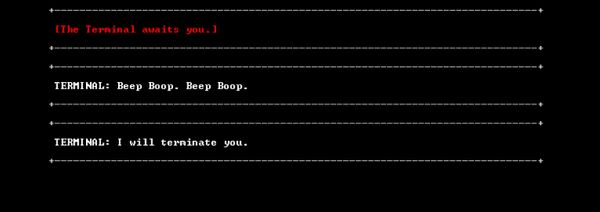

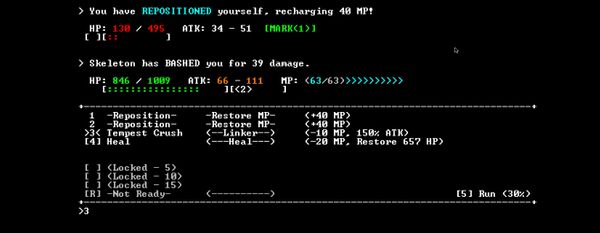

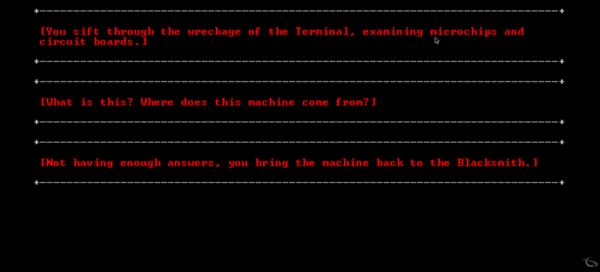

Interactive Fiction/'Text-based' adventure

Hypertext fiction is a genre of electronic literature, characterized by the use of hypertext links that provide a new context for non-linearity in literature and reader interaction. The reader typically chooses links to move from one node of text to the next, and in this fashion arranges a story from a deeper pool of potential stories. Its spirit can also be seen in interactive fiction.

Interactive fiction, often abbreviated IF, is software simulating environments in which players use text commands to control characters and influence the environment. Works in this form can be understood as literary narratives, either in the form of interactive narratives or interactive narrations. These works can also be understood as a form of video game, either in the form of an adventure game or role-playing game. In common usage, the term refers to text adventures, a type of adventure game where the entire interface can be "text-only"; however, graphical text adventures still fall under the text adventure category if the main way to interact with the game is by typing text.

Due to their text-only nature, they sidestepped the problem of writing for widely divergent graphics architectures.
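The command loop at the heart of a text adventure is small enough to sketch in Python. The two rooms, their texts and the verbs `look` and `go` are placeholders invented for this example; a real piece would have a richer parser and world model.

```python
# Hypothetical two-room world; names and texts are placeholders.
ROOMS = {
    "home": {"text": "Welcome to my home. You are here.", "exits": {"north": "window"}},
    "window": {"text": "You look outside through a window.", "exits": {"south": "home"}},
}


def step(room, command):
    """Return (new_room, reply) for one typed command."""
    words = command.lower().split()
    if not words:
        return room, "Type something."
    if words[0] == "look":
        return room, ROOMS[room]["text"]
    if words[0] == "go" and len(words) > 1:
        target = ROOMS[room]["exits"].get(words[1])
        if target:
            return target, ROOMS[target]["text"]
        return room, "You cannot go that way."
    return room, "I do not understand that."


room = "home"
for typed in ["look", "go north", "go west"]:
    room, reply = step(room, typed)
    print(">", typed)
    print(reply)
```

Keeping `step` free of `input()` and `print()` means the same function could sit behind a terminal, a web page or an IRC bot.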

Tutorial with David Moroto

What is your content?

- speaks to my heart (fiction)

- source: emotional investment

- solve the coldness of the medium!

- depth! (true for me, fiction for others)

- 'conversational tool' between the analog and digital

- tool of mediation

Inspiration

SanctuaryRPG - (Classic Text Adventure Game)

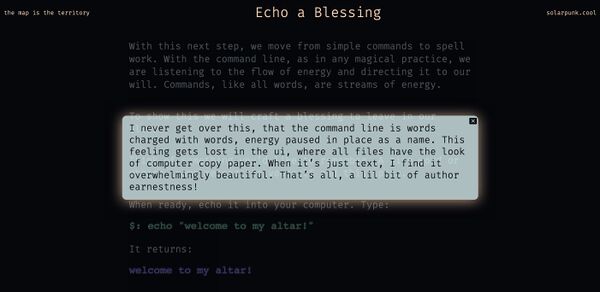

Inspiration: Solarpunk: The map is the territory

Inspiration regarding tone of voice: Shadow Wolf Zine (ASCII zine by musician Legowelt about various topics)

Resources & links

- Shadow Wolf ASCII Zine by Legowelt

- The map is the territory: Command Line instruction by Solar Punk

- https://en.wikipedia.org/wiki/Interactive_fiction

- https://en.wikipedia.org/wiki/Hypertext_fiction

- https://en.wikipedia.org/wiki/Text-based_game

- https://en.wikipedia.org/wiki/Ergodic_literature

PI: Selfhosting IRC + bot + API scraping

https://pythonspot.com/building-an-irc-bot/

Urzaloid Franklin: a bot based on Ursula Franklin's Wikipedia page: https://en.wikipedia.org/wiki/Ursula_Franklin
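An IRC bot is mostly a loop that reads text lines from a socket and sometimes writes one back. The Python sketch below covers only that line handling, without opening a connection; the nickname, the `#xpub` channel, the placeholder sentences and the way a sentence is picked are all assumptions, not the actual Urzaloid Franklin code.

```python
def login_lines(nick, channel):
    """The three commands a client sends after connecting (each ends with CRLF on the wire)."""
    return [f"NICK {nick}", f"USER {nick} 0 * :{nick}", f"JOIN {channel}"]


def respond(line, nick, quotes):
    """Return the line the bot should send back, or None to stay silent."""
    if line.startswith("PING"):
        # Servers drop clients that do not echo the PING token back.
        return "PONG" + line[4:]
    parts = line.split(" ", 3)
    if len(parts) == 4 and parts[1] == "PRIVMSG" and nick.lower() in parts[3].lower():
        channel = parts[2]
        # Pick a sentence deterministically from the length of the message.
        return f"PRIVMSG {channel} :{quotes[len(parts[3]) % len(quotes)]}"
    return None


# Placeholder lines; the real bot would draw on the Wikipedia page text.
QUOTES = ["placeholder sentence one", "placeholder sentence two"]
reply = respond(":mark!m@host PRIVMSG #xpub :hello urzaloid", "urzaloid", QUOTES)
```

In a running bot these functions would be wrapped in a socket loop that sends `login_lines(...)` once and then passes every received line through `respond`.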

Sandbox as a publication

- showing where it is! (this is where you are!)

- what happens when you visit (exposing a process with the emphasis on materiality)

resources:

- shell: showing processes! https://github.com/jupyter/terminado

- https://pythonspot.com/building-an-irc-bot/

- https://almanac.computer/

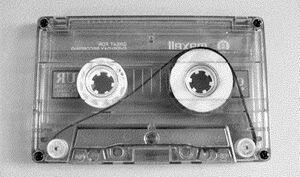

Tape + analog sound/recording experiments

Deconstruction workshop: materializing data over sound

- recording sound of a deconstruction process: transfer to the physical carrier (analog tape)

- recording data of images: broadcast SSTV signal

- material combined in audio-visual performance

>WORKSHOP: A)PART_to_de(PART (In collaboration with Tisa Neža and Ioana Tomici)

Performance:

>>>>>>>C0/\/\/\/\0N F/\CT0R<<<<<<<

Related:

- 'wacky tech' (low and obsolete tech processes )

- what meaning emerges when low-tech meets high-tech

- regaining autonomy and the value of misusing technology

- revealing inner workings (through sonification of a process)

- obsolete systems as a method (not retro!)

- the affordances of not emulating

- "sonic fiction"

- meaning that occurs when going between formats

general links:

- https://www.westminsterpapers.org/articles/10.16997/wpcc.209/print/

- https://www.electronicdesign.com/industrial-automation/article/21808186/sending-data-over-sound-how-and-why

- https://spectrum.ieee.org/computing/hardware/why-the-future-of-data-storage-is-still-magnetic-tape

- http://screenl.es/slow.html

Project content: interim conclusion

_ (_)

____ ____ ___ ____ _ _ ____ ____ ___ ____| |_ _ ___ ____

/ _ | / ___) _ \| _ \ | | / _ )/ ___)___)/ _ | _)| |/ _ \| _ \

( ( | | ( (__| |_| | | | \ V ( (/ /| | |___ ( ( | | |__| | |_| | | | |

\_||_| \____)___/|_| |_|\_/ \____)_| (___/ \_||_|\___)_|\___/|_| |_|

_ ___

| | _ / __) _

| | _ ____| |_ _ _ _ ____ ____ ____ | |__ ___ ____ ____ ____| |_ ___

| || \ / _ ) _) | | |/ _ ) _ ) _ \ | __) _ \ / ___) \ / _ | _) /___)

| |_) | (/ /| |_| | | ( (/ ( (/ /| | | | | | | |_| | | | | | ( ( | | |__|___ |

|____/ \____)\___)____|\____)____)_| |_| |_| \___/|_| |_|_|_|\_||_|\___|___/

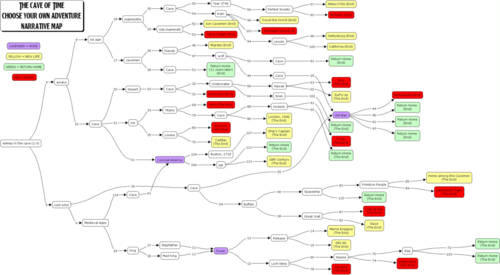

Project contents

- inner workings and processes of data modulation explained

- hyperfocus on detailed information regarding processes mixed with fictional elements (metaphors and inspiration from a 'guide')

- user can interact: sending > receiving information (real-time encoding / decoding)

Practical

- text-based / command-line style interface: to create a focus on other senses

- ASCII as image, code, sound: in between formats

- website, Python, on a Raspberry Pi.

Tone of voice of the interface:

- interactive fiction and text-based games (imagination!)

- tone of voice: speaking on a personal level

- Sonic Fiction / Matters Fiction: notion of 'planetary importance'

- - "Welcome to my home. You are here:" <showing pi in my house>

- - "You traveled a long way to get here in a very short time"

- - "You just looked outside through a window, staring at the screen again, you think about the past."

- - "Lately, I often got asked if I am a robot. Are a robot. Are you a robot?"

- - "Listen closely to this image!"

Output

- website hosted on a Raspberry Pi as a publication

- part of the workflow will be used for audio/visual performance based on modulation of data (magnetic tape)

Inspiration taken from tape recordings of:

- 'Alfa Training' motivational audio course about 'self-programming', regaining autonomy and guidance in making life and work decisions. Listen here: https://youtu.be/sZSq1MqnVIw

- software on tape! (fun detail: there are music snippets of ABBA in between)

- Recording of computer course: 'How to get online?' (mechanical typing sounds, registration, silence to do an exercise)

- guide/course: leader/character, bumpers and theme songs and scores, silence for executing

- low key blending in personal stories and experiences

- use metaphors and figures of speech from 'Alfa Training'

Important input:

- Donna Haraway: viewpoints on the "cyborg" (some parts are hard to digest)

- Sun Ra: creating myths, "sonic fiction" (planetary importance)

- Radical software: knowledge, sharing, community building

- Erkki Kurenniemi / Computer eats Art (misusing technology to 'keep control' / in between formats)

|\----/|

| . . |

\_><_/-..----.

___/ ` ' ,""+ \

(__...' __\ |`.___.';

(_,...'(_,.`__)/'.....+

Feedback(w/Manetta 12.02.2021)

On digital text and the development of the Word processor:

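- Secret Life Of Machines - The Word Processor: https://www.youtube.com/watch?v=nN9wNvEnn-Q

- Anti-Media - Florian Cramer ('literate programming': code is an aesthetic!): https://monoskop.org/images/f/f9/Cramer_Florian_Anti-Media_Ephemera_on_Speculative_Arts_2013.pdf

- Exploring computer text interfaces: https://vvvvvvaria.org/curriculum/In-the-Beginning-...-Was-the-Commandline/READER.html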

Research as publication:

- Situationist Times: http://vandal.ist/thesituationisttimes/

- Publishing: https://www.woodstonekugelblitz.org/

- http://lists.artdesign.unsw.edu.au/pipermail/empyre/2020-December/011211.html

- streaming the 'sound' of streaming: http://anarchaserver.org:8000/

Practical tools/tips:

- Turn a Git repo into a collection of interactive notebooks: https://mybinder.org/

- jupyter nbconvert

- /var/log/ (for accessing logs of processes)

potential ideas:

- 'instagrain' (insstvagram) broadcasting platform (SSTV)

- a website you can visit that constantly broadcasts images you can capture with your phone

- text feed (Twitter) to audio and storing on cassette tape (KCS)

- a 'service' to capture text and store it on cassette tape (and decode it as well)

- Mini task: Think about what substantive questions and observations regarding content arise?

- 'fluxus' / system / continuity / analog vs digital / etc

standalone:

- /dev/zero

- /dev/null

- rm option

> server (hub)

Feedback(w/Michael 12.02.2021)

TAKEN FROM SOUNDSTUDIES READER:

Semantic Listening

I call semantic listening that which refers to a code or a language to interpret a message: spoken language, of course, as well as Morse and other such codes. This mode of listening, which functions in an extremely complex way, has been the object of linguistic research and has been the most widely studied. One crucial finding is that it is purely differential. A phoneme is listened to not strictly for its acoustical properties but as part of an entire system of oppositions and differences. Thus semantic listening often ignores considerable differences in pronunciation (hence in sound) if they are not pertinent differences in the language in question. Linguistic listening in both French and English, for example, is not sensitive to some widely varying pronunciations of the phoneme a.

Obviously one can listen to a single sound sequence employing both the causal and semantic modes at once. We hear at once what someone says and how they say it. In a sense, causal listening to a voice is to listening to it semantically as perception of the handwriting of a written text is to reading it.

On Background Listening

In early 2009, Jay Rosen, a professor of journalism at New York University, posed a question to his 12,000 followers on the micro-blogging service Twitter. He wanted to know why they twittered. Of the almost 200 responses that he initially received via his blog and Twitter account, he noticed an important similarity. ‘Surprise finding from my project’, he wrote on Twitter on 8 January, ‘is how often I wound up with radio as a comparison.’

The comparison may have been unexpected, but it is provocative. Twitter is a social networking service where users send and receive text-based updates of up to 140 characters. They can be delivered and read via the web, instant messaging clients or mobile phone as text messages. Unlike radio, which is a one-to-many medium, Twitter is many-to-many. People choose whom they will follow, which may be a small group of intimates, or thousands of strangers – they then receive all updates written by those people. Perhaps the most obvious difference from radio is that there is no sound broadcast on Twitter. It is simply a network of people scanning, reading and occasionally posting written messages. Yet the radio analogy persists. As MSN editor Jane Douglas writes, ‘I see Twitter like a ham radio for tuning into the world’ (cited in Rosen 2009).

The keynote sounds of a landscape are those created by its geography and climate: water, wind, forests, plains, birds, insects and animals. Many of these sounds may possess archetypal significance; that is, they may have imprinted themselves so deeply on the people hearing them that life without them would be sensed as a distinct impoverishment. They may even affect the behavior or life style of a society, though for a discussion of this we will wait until the reader is more acquainted with the matter.

Signals are foreground sounds and they are listened to consciously. In terms of the psychologist, they are figure rather than ground. Any sound can be listened to consciously, and so any sound can become a figure or signal, but for the purposes of our community-oriented study we will confine ourselves to mentioning some of those signals which must be listened to because they constitute acoustic warning devices: bells, whistles, horns and sirens. Sound signals may often be organized into quite elaborate codes permitting messages of considerable complexity to be transmitted to those who can interpret them. Such, for instance, is the case with the cor de chasse, or train and ship whistles, as we shall discover.

The term soundmark is derived from landmark and refers