User:Alexander Roidl/algorithmicthesis: Difference between revisions

No edit summary |

|||

| (19 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

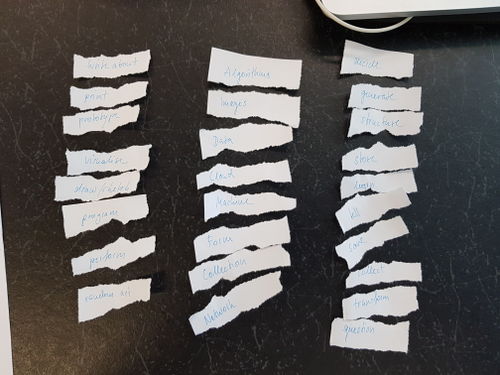

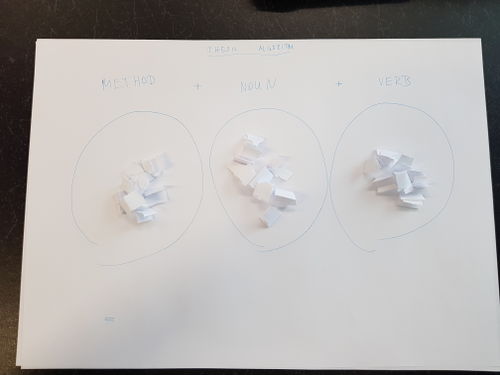

Set of experiments on generating the thesis topic by a set of rules / with a game. | Set of experiments on generating the thesis topic by a set of rules / with a game. | ||

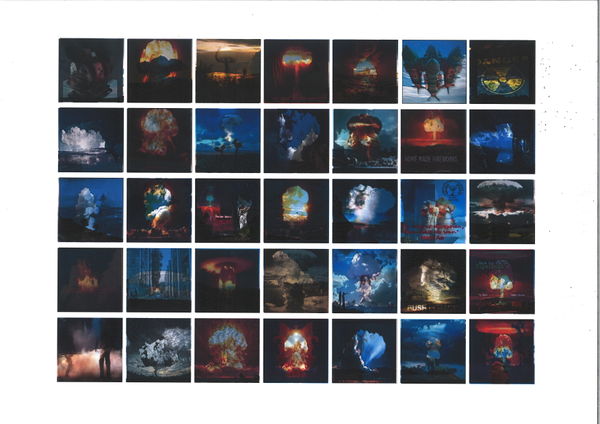

[[File:20181010 143308.jpg|500px|frameless]] | |||

[[File:20181010 143714.jpg|500px|frameless]] | |||

==Method: Print | What: Collection | Action: transform== | ==Method: Print | What: Collection | Action: transform== | ||

| Line 8: | Line 13: | ||

I picked a random set of images: | I picked a random set of images: | ||

A visible mass of water or ice particles suspended at a considerable altitude | ''Clouds & sky | 1399 pictures | ||

A visible mass of water or ice particles suspended at a considerable altitude'' | |||

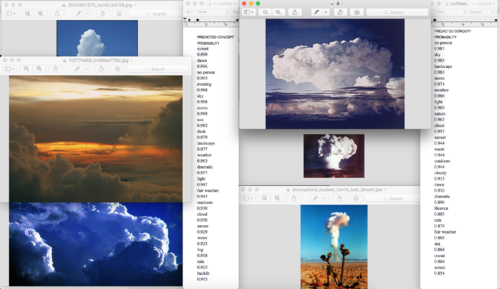

[[File:Screen Shot 2018-10-10 at 16.32.48.png|thumbnail]] | [[File:Screen Shot 2018-10-10 at 16.32.48.png|thumbnail]] | ||

| Line 19: | Line 27: | ||

Interestingly the set also features some cloudy explosions. | Interestingly the set also features some cloudy explosions. | ||

So I decided to run it through | As a first attempt to transform I overprinted explosion images with those of clouds. | ||

[[File:Scan MFP-529 0915 001.jpg|600px|frameless]] | |||

But I wondered if transformation can also happen on a more cognitive level. | |||

By putting this images into a certain context. | |||

So I decided to run it through an algorithm, an image detection software. | |||

As I result I figured out that the software would predict quite similar values. | As I result I figured out that the software would predict quite similar values. | ||

| Line 26: | Line 41: | ||

This is interesting as in my personal (human) opinion these images feature | This is interesting as in my personal (human) opinion these images feature | ||

radical different objects. | radical different objects. | ||

[[File:Screen Shot 2018-10-10 at 16.56.20.png|500px|frameless]] | |||

[[File:Screen Shot 2018-10-10 at 16.56.41.png|500px|frameless]] | |||

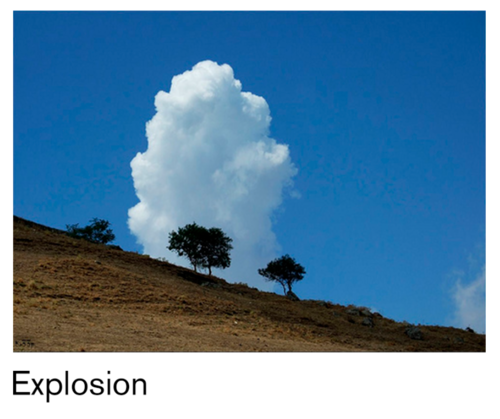

So what would the machine see if I labeled the images myself. | |||

It didn’t change its opinion a lot, but still the image detection wouldn’t output the same result | |||

PREDICTED CONCEPT

PROBABILITY

| |||

nature

0.989

landscape

0.988

sky

0.987

desert

0.962

travel

0.952

outdoors

0.942

no person

0.933

summer

0.923

volcano

0.922

sun

0.917

sand

0.916

tree

0.913

soil

0.909

cloud

0.907

fair weather

0.902

hill

0.893

hot

0.891

mountain

0.884

heat

0.875

eruption

0.863

| |||

PREDICTED CONCEPT

PROBABILITY | |||

nature

0.993

landscape

0.991

sky

0.990

travel

0.954

desert

0.951

summer

0.948

outdoors

0.943

sand

0.940

soil

0.939

cloud

0.935

volcano

0.935

tree

0.926

sun

0.921

fair weather

0.916

mountain

0.915

hill

0.911

rock

0.910

no person

0.899

eruption

0.885

lava

0.882

| |||

===Thoughts and questions on print, collection, transform=== | |||

What do I see when I look at something? | |||

Why is an explosion so different from a cloud and why are both connected for a machine. What do they have in common (outdoors, no person, dramatic) | |||

Also these labels like no person, remind me little bit of fortune tellers that tell you things that most probably fit to every person, but are still relatable to your personal situation. That there is no person in this image is something I wouldn’t have thought of when looking at this image. | |||

Thinking about: who classifies those images? How does the algorithm classify the images? | |||

> So this took somewhat far from printing :D | |||

==Method: Program | What: Data | Action: generate== | |||

So how do you generate Data? | |||

Program that generates random data: | |||

<pre> | |||

from random import randint | |||

f= open("file.txt","w+") | |||

for i in range(100000): | |||

f.write("{}".format(randint(0,255))) | |||

f.close() | |||

</pre> | |||

This generates random numbers between 0 and 255 and appends it to a file. | |||

To make it readable I extend .txt, so it is readable by any text-editor. | |||

You can generate GB of data with this scripts and crash your computer – so quite powerful lines of code. | |||

[[File:Screen Shot 2018-10-10 at 18.31.37.png|800px|frameless]] | |||

[[File:Screen Shot 2018-10-10 at 18.10.39.png|500px|frameless]] | |||

But what does this mean? | |||

To visualize it I mapped all these values to pixels, so this random data looks like the following: | |||

[[File:Result image.png|500px|frameless]] | |||

Outputting it as a gif: | |||

[[File:Random.gif|500px]] | |||

Compress: | |||

[[File:Compress copy 2.jpg|500px]] | |||

Crop & resize: | |||

[[File:Detail.png|500px]] | |||

==Method: Write | What: Algorithm & Image | Action: question== | |||

The algorithmic image & unalgorithmic imagination | |||

[[File:GANGeneration.jpeg|200px]] | |||

Think of a flower, any flower. | |||

[[File:Download (1).jpeg|200px]] | |||

Think of a bird, a bird flying in the sky. | |||

What you might have in your head is an image. But still – | |||

is it an image? Only you can see it, but as soon as you try to see it, it changes. It is not a single image, it is a series. Is it moving? What materiality does it have? | |||

If it is not an image, is it a thought or a chain of thoughts? | |||

[[File:Download (2).jpeg|200px]] | |||

Imagine a face, but not of a person you know. A new person that might exist, but probably doesn’t. | |||

When I think of a bird, I most probably think of a very different one than you do. But still we would be able to agree upon an image showing a bird. | |||

Why are we trying to use an algorithm for everything? What would be un-algorithmic? Does imagination follow a certain pattern? | |||

(to be continued) | |||

Images generated based on the text with: http://t2i.cvalenzuelab.com/ | |||

Latest revision as of 18:10, 10 October 2018

Set of experiments on generating the thesis topic by a set of rules / with a game.

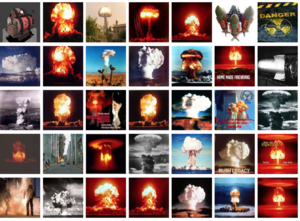

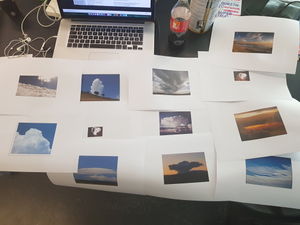

Method: Print | What: Collection | Action: transform

There is a huge collection of images at http://image-net.org/. It is an attempt to classify and categorize images in a huge database.

I picked a random set of images:

Clouds & sky | 1399 pictures

A visible mass of water or ice particles suspended at a considerable altitude

Interestingly the set also features some cloudy explosions.

As a first attempt at transformation, I overprinted explosion images with those of clouds.

But I wondered whether transformation can also happen on a more cognitive level, by putting these images into a certain context.

So I decided to run them through an algorithm: image-detection software.

As a result, I found that the software predicts quite similar values for the cloud images and the explosion images.

This is interesting because in my personal (human) opinion these images feature radically different objects.

So what would the machine see if I labeled the images myself?

It didn’t change its opinion much, but the image detection still didn’t output exactly the same result:

| Predicted concept | Probability |
|---|---|
| nature | 0.989 |
| landscape | 0.988 |
| sky | 0.987 |
| desert | 0.962 |
| travel | 0.952 |
| outdoors | 0.942 |
| no person | 0.933 |
| summer | 0.923 |
| volcano | 0.922 |
| sun | 0.917 |
| sand | 0.916 |
| tree | 0.913 |
| soil | 0.909 |
| cloud | 0.907 |
| fair weather | 0.902 |
| hill | 0.893 |
| hot | 0.891 |
| mountain | 0.884 |
| heat | 0.875 |
| eruption | 0.863 |

| Predicted concept | Probability |
|---|---|
| nature | 0.993 |
| landscape | 0.991 |
| sky | 0.990 |
| travel | 0.954 |
| desert | 0.951 |
| summer | 0.948 |
| outdoors | 0.943 |
| sand | 0.940 |
| soil | 0.939 |
| cloud | 0.935 |
| volcano | 0.935 |
| tree | 0.926 |
| sun | 0.921 |
| fair weather | 0.916 |
| mountain | 0.915 |
| hill | 0.911 |
| rock | 0.910 |
| no person | 0.899 |
| eruption | 0.885 |
| lava | 0.882 |
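To see how close the two results really are, the two lists of predictions can be compared directly. The following is only a sketch: the probabilities are copied from the two result lists, while the names `first`, `second` and `compare` are made up for this illustration and are not part of the image-detection software.

```python
# Probabilities copied from the two prediction lists in this section.
first = {
    "nature": 0.989, "landscape": 0.988, "sky": 0.987, "desert": 0.962,
    "travel": 0.952, "outdoors": 0.942, "no person": 0.933, "summer": 0.923,
    "volcano": 0.922, "sun": 0.917, "sand": 0.916, "tree": 0.913,
    "soil": 0.909, "cloud": 0.907, "fair weather": 0.902, "hill": 0.893,
    "hot": 0.891, "mountain": 0.884, "heat": 0.875, "eruption": 0.863,
}
second = {
    "nature": 0.993, "landscape": 0.991, "sky": 0.990, "travel": 0.954,
    "desert": 0.951, "summer": 0.948, "outdoors": 0.943, "sand": 0.940,
    "soil": 0.939, "cloud": 0.935, "volcano": 0.935, "tree": 0.926,
    "sun": 0.921, "fair weather": 0.916, "mountain": 0.915, "hill": 0.911,
    "rock": 0.910, "no person": 0.899, "eruption": 0.885, "lava": 0.882,
}


def compare(a, b):
    """Return shared concepts, concepts unique to each list, and the
    change in probability (b minus a) for every shared concept."""
    shared = sorted(set(a) & set(b))
    only_a = sorted(set(a) - set(b))
    only_b = sorted(set(b) - set(a))
    diffs = {concept: round(b[concept] - a[concept], 3) for concept in shared}
    return shared, only_a, only_b, diffs


shared, only_first, only_second, diffs = compare(first, second)

# The shared concept whose probability moved the most between the two lists.
biggest = max(diffs, key=lambda concept: abs(diffs[concept]))

print(len(shared), "shared concepts")
print("only in first list:", only_first)
print("only in second list:", only_second)
print("largest change:", biggest, diffs[biggest])
```

Of the 20 concepts in each list, 18 appear in both. Only "hot" and "heat" are unique to the first list, and "rock" and "lava" to the second. The largest shift among the shared concepts is "no person", which drops by 0.034.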

Thoughts and questions on print, collection, transform

What do I see when I look at something?

Why is an explosion so different from a cloud, and why are the two connected for a machine? What do they have in common (outdoors, no person, dramatic)?

Also, labels like “no person” remind me a little bit of fortune tellers, who tell you things that most probably fit every person but still feel relatable to your personal situation. That there is no person in this image is something I wouldn’t have thought of when looking at it.

Thinking about: who classifies those images? How does the algorithm classify the images?

> So this took me somewhat far from printing :D

Method: Program | What: Data | Action: generate

So how do you generate data?

A program that generates random data:

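```python
from random import randint

# Write 100,000 random integers (0-255) back to back into a text file.
# The `with` block closes the file automatically when the loop is done.
with open("file.txt", "w+") as f:
    for i in range(100000):
        f.write("{}".format(randint(0, 255)))
```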

This generates random numbers between 0 and 255 and writes them to a file one after another. To make the output readable, I gave the file a .txt extension, so any text editor can open it. You can generate gigabytes of data with this script and crash your computer, so these are quite powerful lines of code.
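How far is the script from producing gigabytes? A rough estimate, assuming each digit is stored as one byte (plain ASCII text) and that `randint` picks every value from 0 to 255 with equal probability. The variable names are chosen just for this sketch.

```python
# Number of characters each value 0..255 takes when written as text:
# 0-9 take one character, 10-99 take two, 100-255 take three.
digits = [len(str(n)) for n in range(256)]
avg_chars = sum(digits) / len(digits)       # average characters per number

numbers_written = 100000                    # loop count used in the script
approx_bytes = avg_chars * numbers_written  # expected file size in bytes

# Loop iterations needed for roughly one gigabyte (10**9 bytes).
iterations_for_1gb = round(10**9 / avg_chars)

print("average characters per number:", avg_chars)
print("expected size for 100000 numbers:", approx_bytes, "bytes")
print("iterations for about 1 GB:", iterations_for_1gb)
```

With 100,000 iterations the file comes out at around 257 KB on average. Reaching a gigabyte would take roughly 390 million iterations, which means changing nothing but the number in `range(...)`.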

But what does this mean? To visualize it I mapped all these values to pixels, so this random data looks like the following:
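How the values were mapped to pixels is not documented here. One possible mapping, as a minimal sketch: treat every value as the brightness of one grayscale pixel and write the result as a plain-text PGM image, a simple format that many image viewers can open. The function name `values_to_pgm`, the image width of 400 and the file name `random.pgm` are assumptions made for this example. The values are generated in memory, because the script above writes its digits without separators, so they cannot be read back from `file.txt` unambiguously.

```python
from random import randint


def values_to_pgm(values, width, path):
    """Write integers in 0..255 as a grayscale image in plain PGM (P2) format.

    Each value becomes one pixel: 0 is black, 255 is white.
    Values that do not fill a complete row are dropped.
    """
    height = len(values) // width
    pixels = values[: width * height]
    with open(path, "w") as f:
        # Header: magic number, width and height, maximum gray value.
        f.write("P2\n{} {}\n255\n".format(width, height))
        # One pixel value per line keeps every line short.
        f.write("\n".join(str(v) for v in pixels) + "\n")
    return width, height


# Same amount of random data as in the script above.
values = [randint(0, 255) for _ in range(100000)]
size = values_to_pgm(values, 400, "random.pgm")
print("image size:", size)
```

With 100,000 values and a width of 400 pixels, this gives a 400 × 250 image of grayscale noise.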

Outputting it as a gif:

Compress:

Crop & resize:

Method: Write | What: Algorithm & Image | Action: question

The algorithmic image & unalgorithmic imagination

Think of a flower, any flower.


Think of a bird, a bird flying in the sky.


What you might have in your head is an image. But still – is it an image? Only you can see it, but as soon as you try to see it, it changes. It is not a single image, it is a series. Is it moving? What materiality does it have? If it is not an image, is it a thought or a chain of thoughts?

Imagine a face, but not of a person you know. A new person that might exist, but probably doesn’t.


When I think of a bird, I most probably think of a very different one than you do. But still we would be able to agree upon an image showing a bird. Why are we trying to use an algorithm for everything? What would be un-algorithmic? Does imagination follow a certain pattern?

(to be continued)

Images generated based on the text with: http://t2i.cvalenzuelab.com/