User:Tash/Special Issue 06: Difference between revisions

(Created page with "==My research and contribution to XPPL== === Brainstorm 23.04.2018=== Interface: How do you visualize that which is UNSTABLE? Serendipity? Missing data? Uncertainty? Dissent?...") |

|||

| (17 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

= | =Research and contribution to XPPL= | ||

=== Research questions === | |||

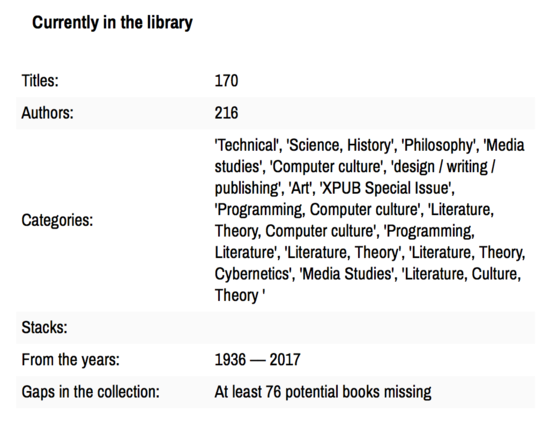

[[File:Xppl-potential2.png|600px|frameless|right]] | |||

on interface: | |||

* How do you engage with UNSTABLE libraries? Can we design reading / searching interfaces that are able to represent uncertainty, locate outsides, explore provenances? | |||

on sociality and access: | |||

* What modes of sociality can we embed into a library interface? Can we devise new ways to talk back to the data? | |||

* How to hero the enunciative materiality of digital libraries? Prioritize ecosystems and interactions instead of objects? | |||

* What is the current state of media piracy in the West vs in Asia? In both contexts, who is the pirate downloader and the outlaw uploader? | |||

'''More research [https://pzwiki.wdka.nl/mediadesign/User:Tash/RW%26RM_03 here]. | |||

''' | |||

=== Brainstorm 23.04.2018=== | === Brainstorm 23.04.2018=== | ||

Interface: How do you visualize that which is UNSTABLE? Serendipity? Missing data? Uncertainty? Dissent? Multiple views? | Interface: How do you visualize that which is UNSTABLE? Serendipity? Missing data? Uncertainty? Dissent? Multiple views? | ||

| Line 6: | Line 16: | ||

HOW can you GET data that's MISSING ?! E.G. from LibGen: where is the UPLOAD DATA? what could we do with it? | HOW can you GET data that's MISSING ?! E.G. from LibGen: where is the UPLOAD DATA? what could we do with it? | ||

Simple test to highlight absent information: | Simple test to highlight absent information: <br> | ||

In LibGen's catalogue CSV there are rows without titles. | |||

How to search for blanks? | How to search for blanks? | ||

| Line 22: | Line 33: | ||

Advanced search: https://archive.org/advancedsearch.php ghost in the mp3 | Advanced search: https://archive.org/advancedsearch.php ghost in the mp3 | ||

== Project description == | |||

{{User:Tash/RW&RM_03}} | |||

== Search functionality (Concept) == | |||

Goals: | |||

* Intervene in the process of 'finding' known-items | |||

* make the search a more social space, make more visible the ecosystem which allows for pirate librarianship (and the time between uploading, downloading) | |||

* functional tool | |||

* show outsides of data: where are the gaps, where is the potential? | |||

Considerations: | |||

* How to bring forward the distributed and enunciative materiality of the library? This means being more transparent about labour, traces | |||

* Interface design which challenges the user-as-consumer model: | |||

** "Sustained interpretative engagement, not efficient completion of tasks, would be the desired outcome." http://www.digitalhumanities.org/dhq/vol/7/1/000143/000143.html | |||

* Upload form and search form are two sides of the same coin. Classification informs query and vice versa. So how to insert new questions into this process? | |||

** "Searching in a collection of [amassed/assembled] [tangible] documents (ie. bookshelf) is different from searching in a systematically structured repository (library) and even more so from searching in a digital repository (digital library). Not that they are mutually exclusive. One can devise structures and algorithms to search through a printed text, or read books in a library one by one. They are rather [models] [embodying] various [processes] associated with the query. These properties of the query might be called [the sequence], the structure and the index. If they are present in the ways of querying documents, and we will return to this issue, are they persistent within the inquiry as such?" https://monoskop.org/Talks/Poetics_of_Research | |||

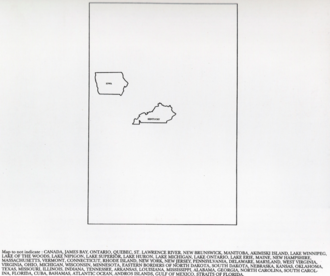

* On hierarchical systems of classification and how they rely on cultural assumptions: https://journals.ala.org/index.php/lrts/article/view/4913 | |||

** "Sameness and difference as guiding principles of classification seem obvious, but are actually fundamental characteristics specifically related to Western culture. [...] Classifications such as the DDC set up pigeonholes for certain samenesses. Dewey himself recognized that dividing all of knowledge into tens is absurd in any theoretical sense, but asserted that because it is efficient in practice – like pigeonholes on a nineteenth-century businessman's desk– it is justifiable." | |||

[[File:Classification olson.png|1000px|center]] | |||

* Showing what is included & excluded, remembering feminist methodologies: Interesting project on the politics of the search engine: http://www.feministsearchtool.nl/ | |||

== | == Search functionality (Tech) == | ||

Using Flask-WTForms and SQLAlchemy to create a search engine which queries the SQL database. | |||

Using Flask-WTForms to create a search which queries the SQL database. | |||

Links: https://pythonhosted.org/Flask-Bootstrap/forms.html and https://programfault.com/flask-101-how-to-add-a-search-form/ | Links: https://pythonhosted.org/Flask-Bootstrap/forms.html and https://programfault.com/flask-101-how-to-add-a-search-form/ | ||

| Line 195: | Line 155: | ||

</source> | </source> | ||

==== | === Flask-WhooshAlchemy === | ||

[[ | Flask-Whoosh-Alchemy is a Flask extension that integrates the text-search functionality of `Whoosh <https://bitbucket.org/mchaput/whoosh/wiki/Home>`_ with the ORM of `SQLAlchemy <http://www.sqlalchemy.org/>`_ for use in `Flask <http://flask.pocoo.org/>`_ applications. I'm using it to allow for a 'search all' function - next to search by title, search by category etc. | ||

<br> | |||

Link: https://pypi.org/project/Flask-WhooshAlchemy/ | |||

To get started: | |||

# Set a WHOOSH_BASE to the path for whoosh to create its index files. If not set, it will default to a directory called 'whoosh_index' in the directory from which the application is run. | |||

# Add a __searchable__ field to the model which specifies the fields (as str s) to be indexed. | |||

So, in __init__.py I added the lines: | |||

<source lang= python> | |||

import flask_whooshalchemyplus | |||

# set the location for the whoosh index | |||

app.config['WHOOSH_BASE'] = 'path/to/whoosh/base' | |||

flask_whooshalchemyplus.init_app(app) # initialize | |||

</source> | |||

Then in models.py, you have to specify the searchable fields whoosh will index: | |||

<source lang= python> | |||

class Book(db.Model): | |||

__tablename__ = 'books' | |||

__searchable__ = ['title', 'category', 'fileformat'] # these fields will be indexed by whoosh | |||

</source> | |||

And in the views.py, you need to call 'whoosh_search' when querying: | |||

<source lang= python> | |||

@app.route('/search/<searchtype>/<query>/', methods=['POST', 'GET']) | |||

def search_results(searchtype, query): | |||

search = SearchForm(request.form) | |||

random_order=Book.query.order_by(func.random()).limit(10) | |||

results=Book.query.filter(Book.title.contains(query)).all() | |||

if searchtype == 'Title': | |||

results=Book.query.filter(Book.title.contains(query)).all() | |||

if searchtype == 'Category': | |||

results=Book.query.filter(Book.category.contains(query)).all() | |||

if searchtype== 'All': | |||

results=Book.query.whoosh_search(query).all() | |||

if not results: | |||

upload_form = UploadForm(title= query, author='') | |||

return render_template('red_link.html', form=upload_form, title=query) | |||

if request.method == 'POST': | |||

query = search.search.data | |||

results = [] | |||

return redirect((url_for('search_results', searchtype=search.select.data, query=search.search.data))) | |||

return render_template('results.html', form=search, books=results, books_all=random_order, searchtype=search.select.data, query=query) | |||

</source> | |||

===Troubleshooting=== | |||

There were a lot of errors when integrating Whoosh-Alchemy to our set-up. | |||

First, Whoosh-Alchemy is not fully supported in Python3. So I found a different fork: [[https://github.com/Revolution1/Flask-WhooshAlchemyPlus WhooshAlchemyPlus]] | |||

Next, the problem was indexing an existing database. Default operations require you to commit changes to the database via the SQLite / RQLite interface. | |||

But since we have an external import_csv option, we need to tell Whoosh to rebuild its indices manually. WhooshAlchemyPlus had an 'index_all()' function but it threw up a lot of errors. | |||

So into the internet we go, to find: | |||

[[ | [[https://gist.github.com/davb5/21fbffd7a7990f5e066c Rebuild-search-indices.py] | ||

< | Hallelujah. | ||

<br> | |||

[[File:Xppl-search1.png|600px|thumbnail|center] | |||

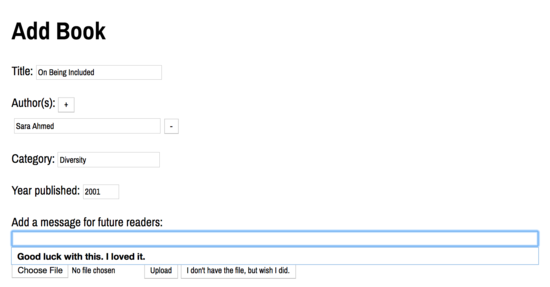

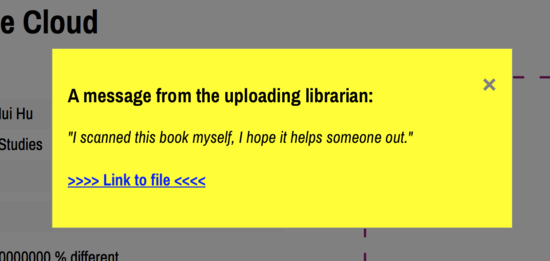

== Upload form == | |||

* Getting metadata which makes explicit the cultural act of uploading and downloading | |||

* Giving form to the current collection | |||

[[File:Screen Shot 2018-06-15 at 07.43.38.png|550px|thumbnail|left]][[File:Xppl-upload1.png|550px|thumbnail|right]] | |||

<br clear=all> | |||

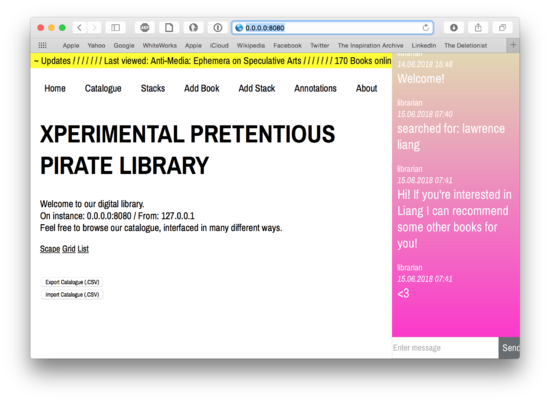

== Sociality of the interface == | |||

* Connecting the search form to the chat form | |||

* Add opportunities for contact between pirates, which works together with the annotations layer | |||

[[File:Xppl-search2.png|550px|thumbnail|left]] | |||

[[File:Screen Shot 2018-06-15 at 07.47.10.png|550px|thumbnail|right]] | |||

<br clear=all> | |||

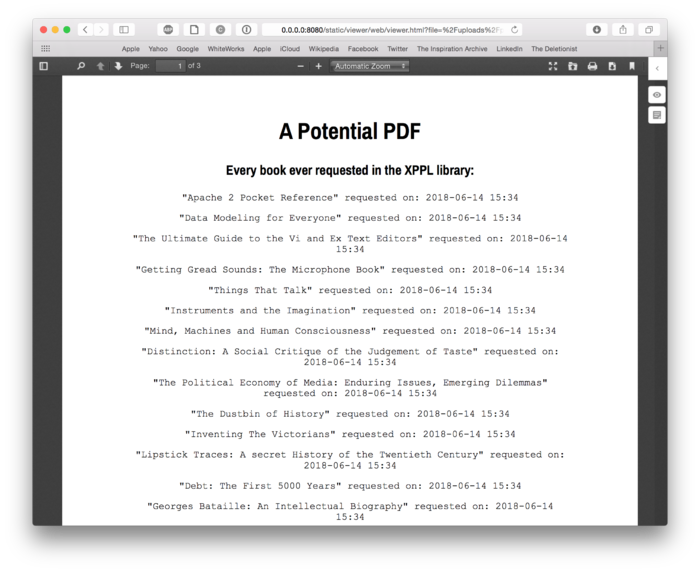

== The 'Potential PDF' == | |||

* Traces of the 'gaps' in the collection | |||

* Producing a new item which can be fed back into the collection | |||

* Using Flask to render an HTML page, SQLalchemy to track requests in the database, and Weasyprint to write it to PDF | |||

[[File:Xppl-potential.png|700px|thumbnail|center]] | |||

==Things to work on == | |||

* expand the search form to reflect and play with metadata | |||

* connect search form more closely with annotations and stacks | |||

* uploading only by stacks? | |||

* categorization | |||

* database --> how to make it stable enough to spread?? | |||

* installation --> how to make it more user friendly? | |||

Latest revision as of 07:27, 15 June 2018

Research and contribution to XPPL

Research questions

on interface:

- How do you engage with UNSTABLE libraries? Can we design reading / searching interfaces that are able to represent uncertainty, locate outsides, explore provenances?

on sociality and access:

- What modes of sociality can we embed into a library interface? Can we devise new ways to talk back to the data?

- How to hero the enunciative materiality of digital libraries? Prioritize ecosystems and interactions instead of objects?

- What is the current state of media piracy in the West vs in Asia? In both contexts, who is the pirate downloader and the outlaw uploader?

More research here: https://pzwiki.wdka.nl/mediadesign/User:Tash/RW%26RM_03

Brainstorm 23.04.2018

Interface: How do you visualize that which is UNSTABLE? Serendipity? Missing data? Uncertainty? Dissent? Multiple views?

On data provenance and feminist visualization: https://civic.mit.edu/feminist-data-visualization

HOW can you GET data that's MISSING ?! E.G. from LibGen: where is the UPLOAD DATA? what could we do with it?

Simple test to highlight absent information:

In LibGen's catalogue CSV there are rows without titles.

How to search for blanks?

something like:

csvgrep -c Title -m "" content.csv

^ this solution matches spaces but doesn't look for empty state cells.

csvgrep -c Author -r "^$" content.csv

^ this solution finds rows with empty state cells in the 'Author' column
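The same check can be sketched in Python with the standard csv module. This is only a rough sketch and not part of the XPPL code: the function name rows_with_blank and the sample rows are made up for illustration, and unlike the "^$" regex it also counts cells that hold nothing but whitespace as blank.

```python
import csv
import io

def rows_with_blank(csv_text, column):
    """Return (row_number, row) pairs whose cell in `column` is empty or only whitespace."""
    reader = csv.DictReader(io.StringIO(csv_text))
    blanks = []
    for number, row in enumerate(reader, start=1):
        # a missing trailing cell comes back as None, so normalise it to ""
        value = row.get(column) or ""
        if value.strip() == "":
            blanks.append((number, row))
    return blanks

# hypothetical sample standing in for content.csv
sample = "Title,Author\nThe Library,Borges\n,Anonymous\nUntitled, \n"

for number, row in rows_with_blank(sample, "Author"):
    print(number, row)
```

Row numbers count data rows only, so the header line is not included.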

andre's exciting explorations of the archive.org api search: Internet Archive Advanced search: https://archive.org/advancedsearch.php ghost in the mp3

Project description

The default web interface of the library is a space for researching as well as reading. Here users can choose to navigate through the entire X-LIB catalogue, or through various stacks. Unlike most search engines, X-LIB's is designed to prioritize ecosystems and interactions instead of results or objects. Multiple queries for non-existent items in the collection are tracked and automatically made into red links, which are visualised and placed back into the library. In this way the collection is always represented in relation to its own limits, outsides and peripheries. Making these 'wishlists' visible also offers context: we get to know our fellow researchers, situate our own knowledge with theirs. Within the core network, pirate downloaders and outlaw uploaders can interact more directly with each other. When you see an empty item, either in the full catalogue or in someone's stack, you can choose to upload to it. You can create an entire stack of wished-for items and wait for others who may have the file to help you to complete it. The search engine also offers more playful orderings like randomization or by reading time.

The search engine can be made using HTML, Python and CGI scripts. The files would be stored in separate directories and JSON files which can be called and created via a web interface. Another option is to use the Semantic MediaWiki platform, which already has built-in functions like the automatic creation of red links, categories and tags for archiving, and also supports the maintenance of these files. To research further: how each of these platforms will deal with user accounts / anonymity / interactivity.

We want this library to exist in the space between researching and the act of downloading/uploading. Piracy is necessary for studying – but it is not just about file sharing. It is also about learning what it means to be a librarian, to pass on information and to explore questions of data provenance. In this way it is important that the default interface of X-LIB explores more social modes of reading and searching.

This project continues my research into feminist ways of representing data, of making visible what is included and what is excluded in the archive. My research into the social aspects of the digital library is also relevant to the concept of enunciative materiality, which we started to explore last trimester.

Search functionality (Concept)

Goals:

- Intervene in the process of 'finding' known-items

- make the search a more social space, make more visible the ecosystem which allows for pirate librarianship (and the time between uploading, downloading)

- functional tool

- show outsides of data: where are the gaps, where is the potential?

Considerations:

- How to bring forward the distributed and enunciative materiality of the library? This means being more transparent about labour, traces

- Interface design which challenges the user-as-consumer model:

- "Sustained interpretative engagement, not efficient completion of tasks, would be the desired outcome." http://www.digitalhumanities.org/dhq/vol/7/1/000143/000143.html

- Upload form and search form are two sides of the same coin. Classification informs query and vice versa. So how to insert new questions into this process?

- "Searching in a collection of [amassed/assembled] [tangible] documents (ie. bookshelf) is different from searching in a systematically structured repository (library) and even more so from searching in a digital repository (digital library). Not that they are mutually exclusive. One can devise structures and algorithms to search through a printed text, or read books in a library one by one. They are rather [models] [embodying] various [processes] associated with the query. These properties of the query might be called [the sequence], the structure and the index. If they are present in the ways of querying documents, and we will return to this issue, are they persistent within the inquiry as such?" https://monoskop.org/Talks/Poetics_of_Research

- On hierarchical systems of classification and how they rely on cultural assumptions: https://journals.ala.org/index.php/lrts/article/view/4913

- "Sameness and difference as guiding principles of classification seem obvious, but are actually fundamental characteristics specifically related to Western culture. [...] Classifications such as the DDC set up pigeonholes for certain samenesses. Dewey himself recognized that dividing all of knowledge into tens is absurd in any theoretical sense, but asserted that because it is efficient in practice – like pigeonholes on a nineteenth-century businessman's desk– it is justifiable."

- Showing what is included & excluded, remembering feminist methodologies: Interesting project on the politics of the search engine: http://www.feministsearchtool.nl/

Search functionality (Tech)

Using Flask-WTF (WTForms) and SQLAlchemy to create a search engine which queries the SQL database.

Links: https://pythonhosted.org/Flask-Bootstrap/forms.html and https://programfault.com/flask-101-how-to-add-a-search-form/

in forms.py

- simple string search field

<source lang= python>
class SearchForm(FlaskForm):
    search = StringField('', validators=[InputRequired()])
</source>

in views.py

- putting search bar on home page

- routing results.html, setting up redirect and error message

<source lang= python>
@app.route('/', methods=['GET', 'POST'])
def home():
    """Render website's home page."""
    #return render_template('home.html')
    search = SearchForm(request.form)
    if request.method == 'POST':
        return search_results(search)
    return render_template('home.html', form=search)

## search
@app.route('/results', methods= ['GET'])
def search_results(search):
    results = []
    search_string = search.data['search']
    if search_string:
        results=Book.query.filter(Book.title.contains(search_string)).all()
    if not results:
        flash('No results found!')
        return redirect('/')
    else:
        # display results
        return render_template('results.html', books=results)
</source>
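For reference, Book.title.contains(...) is a substring match, which SQLAlchemy expresses as a SQL LIKE with wildcards on both sides. A minimal sketch of the equivalent query with the standard sqlite3 module, assuming a stripped-down books table with made-up rows (the real XPPL schema has more columns, and search_titles is a hypothetical helper name):

```python
import sqlite3

def search_titles(conn, search_string):
    """Substring search on the title column, similar in effect to Book.title.contains()."""
    cursor = conn.execute(
        "SELECT id, title FROM books WHERE title LIKE '%' || ? || '%' ORDER BY id",
        (search_string,),
    )
    return cursor.fetchall()

# in-memory database with a few invented titles
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE books (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO books (title) VALUES (?)",
    [("Poetics of Research",), ("The Power to Name",), ("Research Methods",)],
)

print(search_titles(conn, "Research"))
print(search_titles(conn, "piracy"))  # empty list: the case that triggers the flash message
```

In SQLite, LIKE ignores case for ASCII letters, and a % or _ typed into the search box acts as a wildcard, so a search for "power" also finds "The Power to Name".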

in results.html

- template page for showing results, same as show_books.html

{% extends 'base.html' %}

{% block main %}

<div class="container">

<h1 class="page-header">Search Results</h1>

{% with messages = get_flashed_messages() %}

{% if messages %}

<div class="alert alert-success">

<ul>

{% for message in messages %}

<li>{{ message }}</li>

{% endfor %}

</ul>

</div>

{% endif %}

{% endwith %}

<table style="width:100%">

<tr>

<th>Cover</th>

<th>Title</th>

<th>Author</th>

<th>Filetype</th>

<th>Tag</th>

</tr>

{% for book in books %}

<tr>

<td><img src="../uploads/cover/{{ book.cover }}" width="80"></td>

<td><a href="books/{{ book.id }}">{{ book.title }}</a></td>

<td> {% for author in book.authors %}

<li><a href="{{url_for('show_author_by_id', id=author.id)}}">{{ author.author_name }}</a> </li>

{% endfor %}</td>

<td>{{ book.fileformat }}</td>

<td>{{ book.tag}}</td>

</tr>

{% endfor %}

</table>

</div>

{% endblock %}

Flask-WhooshAlchemy

Flask-WhooshAlchemy is a Flask extension that integrates the text-search functionality of Whoosh (https://bitbucket.org/mchaput/whoosh/wiki/Home) with the ORM of SQLAlchemy (http://www.sqlalchemy.org/) for use in Flask (http://flask.pocoo.org/) applications. I'm using it to allow for a 'search all' function, next to search by title, search by category etc.

Link: https://pypi.org/project/Flask-WhooshAlchemy/

To get started:

- Set a WHOOSH_BASE to the path for whoosh to create its index files. If not set, it will default to a directory called 'whoosh_index' in the directory from which the application is run.

- Add a __searchable__ field to the model which specifies the fields (as strings) to be indexed.

So, in __init__.py I added the lines:

import flask_whooshalchemyplus

# set the location for the whoosh index

app.config['WHOOSH_BASE'] = 'path/to/whoosh/base'

flask_whooshalchemyplus.init_app(app) # initialize

Then in models.py, you have to specify the searchable fields whoosh will index:

class Book(db.Model):
    __tablename__ = 'books'
    __searchable__ = ['title', 'category', 'fileformat'] # these fields will be indexed by whoosh

And in the views.py, you need to call 'whoosh_search' when querying:

@app.route('/search/<searchtype>/<query>/', methods=['POST', 'GET'])
def search_results(searchtype, query):
    search = SearchForm(request.form)
    random_order=Book.query.order_by(func.random()).limit(10)
    # default: search by title
    results=Book.query.filter(Book.title.contains(query)).all()

    if searchtype == 'Title':
        results=Book.query.filter(Book.title.contains(query)).all()

    if searchtype == 'Category':
        results=Book.query.filter(Book.category.contains(query)).all()

    if searchtype== 'All':
        # full-text search across the __searchable__ fields
        results=Book.query.whoosh_search(query).all()

    if not results:
        # nothing found: offer the upload form, pre-filled with the query
        upload_form = UploadForm(title= query, author='')
        return render_template('red_link.html', form=upload_form, title=query)

    if request.method == 'POST':
        query = search.search.data
        results = []
        return redirect((url_for('search_results', searchtype=search.select.data, query=search.search.data)))

    return render_template('results.html', form=search, books=results, books_all=random_order, searchtype=search.select.data, query=query)
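The branching in this view can be tried out without Flask or a database. The sketch below is a plain-Python stand-in, not the XPPL code: BOOKS, find_books and respond are invented names, the books are made up, and the 'All' branch uses a simple substring match across fields, whereas Whoosh does proper tokenised full-text search.

```python
BOOKS = [
    {"title": "The Power to Name", "category": "classification", "fileformat": "pdf"},
    {"title": "Shadow Libraries", "category": "piracy", "fileformat": "epub"},
]
SEARCHABLE = ["title", "category", "fileformat"]  # mirrors __searchable__ in models.py

def find_books(searchtype, query, books=BOOKS):
    """Return matching books; an empty list is what leads to the red link page."""
    needle = query.lower()
    if searchtype == "Category":
        fields = ["category"]
    elif searchtype == "All":
        fields = SEARCHABLE
    else:
        # 'Title' and any unknown search type fall back to a title search
        fields = ["title"]
    return [b for b in books if any(needle in b[f].lower() for f in fields)]

def respond(searchtype, query):
    """Pick the template the view would render for this search."""
    results = find_books(searchtype, query)
    if not results:
        return ("red_link.html", query)
    return ("results.html", [b["title"] for b in results])

print(respond("All", "epub"))
print(respond("Title", "epub"))
```

The second call finds nothing in the titles, so it ends up at the red link template with the query carried along as the wished-for title.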

Troubleshooting

There were a lot of errors when integrating Whoosh-Alchemy into our set-up. First, Whoosh-Alchemy is not fully supported in Python 3. So I found a different fork: WhooshAlchemyPlus (https://github.com/Revolution1/Flask-WhooshAlchemyPlus)

Next, the problem was indexing an existing database. Default operations require you to commit changes to the database via the SQLite / RQLite interface. But since we have an external import_csv option, we need to tell Whoosh to rebuild its indices manually. WhooshAlchemyPlus had an 'index_all()' function but it threw up a lot of errors.

So into the internet we go, to find: Rebuild-search-indices.py (https://gist.github.com/davb5/21fbffd7a7990f5e066c)

Hallelujah.
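Why the rebuild is needed can be shown with a toy example. This is not the Whoosh API and not the gist linked above: ToyIndex, commit_via_app and import_csv are hypothetical stand-ins that only illustrate how an index that is updated on commit goes stale when rows arrive by another route.

```python
from collections import defaultdict

class ToyIndex:
    """A tiny inverted index standing in for the Whoosh index (not the Whoosh API)."""

    def __init__(self):
        self.postings = defaultdict(set)

    def add(self, book_id, text):
        for word in text.lower().split():
            self.postings[word].add(book_id)

    def search(self, word):
        return sorted(self.postings.get(word.lower(), set()))

    def rebuild(self, rows):
        """Throw the index away and re-add every row currently in the database."""
        self.postings.clear()
        for book_id, text in rows.items():
            self.add(book_id, text)

database = {}  # stands in for the books table
index = ToyIndex()

def commit_via_app(book_id, title):
    """Normal path: the row is saved and indexed in the same step."""
    database[book_id] = title
    index.add(book_id, title)

def import_csv(rows):
    """External import: rows land in the database, but the index never hears about them."""
    database.update(rows)

commit_via_app(1, "Shadow Libraries")
import_csv({2: "Pirate Libraries"})
before = index.search("libraries")  # only the book added through the app
index.rebuild(database)
after = index.search("libraries")   # both books, once the index is rebuilt
print(before, after)
```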

[[File:Xppl-search1.png|600px|thumbnail|center]]

Upload form

- Getting metadata which makes explicit the cultural act of uploading and downloading

- Giving form to the current collection

Sociality of the interface

- Connecting the search form to the chat form

- Add opportunities for contact between pirates, which works together with the annotations layer

The 'Potential PDF'

- Traces of the 'gaps' in the collection

- Producing a new item which can be fed back into the collection

- Using Flask to render an HTML page, SQLalchemy to track requests in the database, and Weasyprint to write it to PDF
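A rough sketch of the tracking and rendering steps, using only the standard library so it runs on its own. It is not the XPPL implementation: a Counter stands in for the database table that tracks requests, the function names record_miss and potential_page are invented, and the queries are made up.

```python
from collections import Counter
from html import escape

missed = Counter()  # stands in for the table that tracks requests

def record_miss(query):
    """Count a search that returned nothing, ignoring case and stray spaces."""
    missed[query.strip().lower()] += 1

def potential_page(counter):
    """Render the wished-for titles as a small HTML page, most requested first."""
    items = "\n".join(
        f"<li>{escape(title)} (requested {count}x)</li>"
        for title, count in counter.most_common()
    )
    return f"<html><body><h1>Potential library</h1>\n<ul>\n{items}\n</ul></body></html>"

for q in ["The Undercommons", "the undercommons ", "Glitch Feminism"]:
    record_miss(q)

page = potential_page(missed)
print(page)
```

The resulting HTML string is the kind of input WeasyPrint accepts, for example weasyprint.HTML(string=page).write_pdf("potential.pdf"); that step is left out here so the sketch has no dependencies.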

Things to work on

- expand the search form to reflect and play with metadata

- connect search form more closely with annotations and stacks

- uploading only by stacks?

- categorization

- database --> how to make it stable enough to spread??

- installation --> how to make it more user friendly?