User:Angeliki/Ttssr-Speech Recognition Iterations

No edit summary |

No edit summary |

||

| (28 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

__NOTOC__ | |||

<div style=' | <div style=' | ||

width: 80%; | width: 80%; | ||

| Line 6: | Line 8: | ||

margin:0px; | margin:0px; | ||

float: left; | float: left; | ||

'> | '> | ||

:::::::::{{#Widget:Audio|mp3=https://pzwiki.wdka.nl/mw-mediadesign/images/4/49/Ttssr-audio1-varia.mp3}} | |||

<span style="font-size:120%; letter-spacing:4px;">Any one is one having been that one Any one is such a one.</span> | <span style="font-size:120%; letter-spacing:4px;">Any one is one having been that one Any one is such a one.</span> | ||

'''ttssr-loop-human-only''' | |||

<div style="background:#FFFFFF"> | |||

My collection of texts ''From Tedious Tasks to Liberating Orality: Practices of the Excluded on Sharing Knowledge'', refers to orality in relation to programming, as a way of sharing knowledge including our individually embodied position and voice. The emphasis on the role of personal positioning is often supported by feminist theorists. Similarly, and in contrast to scanning, reading out loud is a way of distributing knowledge in a shared space with other people, and this is the core principle behind the ''ttssr-> Reading and speech recognition in loop'' software. Using speech recognition software and python scripts I propose to the audience to participate in a system that highlights how each voice bears the personal story of an individual. In this case the involvement of a machine provides another layer of reflection of the reading process. | |||

<br /> | |||

In the context of the present available technologies | :::::::::::::'''The story behind''' | ||

As in oral cultures, in contrast to the literate cultures, I am getting engaged with two important ingredients for knowledge production; the presence of a speaker and the oral speech. The oral narratives are based on the previous ones keeping a movable line to the past by adjusting to the history of the performer, but only if they are important and enjoyable for the present audience and time. The structure of the oral poem is based on rhythmic formulas, the memory and verbal interaction. Oral cultures exist without the need of writing, texts and dictionaries. They don't need a library to be stored, to look up and create their texts. The learning process is shared from individual positions but with the participation of the community. | |||

This | Both my reader and my software highlight also another aspect of knowledge production, from literate cultures, regarding repetition and text processing tasks. From weaving to typewriting and programming, women, mainly hidden from the public, were exploring the realm of writing beyond its conventional form. According to Kittler (1999, pg. 221) “A desexualized writing profession, distant from any authorship, only empowers the domain of text processing. That is why so many novels written by recent women writers are endless feedback loops making secretaries into writers”. But aren’t these endless feedback loops similar to the rhythmic narratives of the anonymous oral cultures? How this knowledge is produced through repetitive formulas that are easily memorized? | ||

In the context of the present available technologies, like speech recognition software, and in relation with other projects, using the technology of their time, these questions can be explored on a more practical base. With the aid and the errors of [https://en.wikipedia.org/wiki/CMU_Sphinx Pocketsphinx], I aim to create new oral and reading experiences. My work derives from the examples of two other projects coming from 80s: [https://www.youtube.com/watch?v=8z32JTnRrHc Boomerang (1974)] and [https://www.youtube.com/watch?v=fAxHlLK3Oyk I Am Sitting In A Room (1981)]. The first one is about forming a tape by recording and broadcasting continuously the artist speaking. The latter is exploiting the imperfections of the system of recording tape machines and grabs the room echoes as musical qualities in the tape-delay system. It is noticeable that repetition seems to become an interesting instrument in the two projects and the process of typewriting. In all cases the machine includes repetition as its basic element (eternal loops and repeated processes). My software transcribes the voice of people reading a text. This process can be related to the typists transcribing the speech of writers, replacing the contemporary speech recognition software. Focusing on this anonymous labour, worked together with a machine, one cannot forget the anonymous people, who worked for the Pocketsphinx models. The training of this software is based on recorded human voices reading words. The acoustic model, being created after, is structured with phonemes. I am reversing this procedure by giving back Pocketsphinx to human voices. | |||

</div> | |||

*''Description'': The first line of a given scanned text is read by someone. Then, the outcome (a sound file) is transcribed by a program, called pocketsphinx, and stored as a textfile. The new line is read by the same person or someone else, whose voice is going to be transcribed. The process is looped 10 times. More specifically every time the previous outcome becomes input for somebody to read and then the transcription follows. Depending on the quality of the machine, the voice and the reading, the first line is being transformed into different texts but with similar phonemes. At the same time with the transcription, each voice is played and repeated for five times, so for some moments they are overlapping each other. The process resembles the game of the broken telephone and the karaoke. <br /> | *''Description'': | ||

:The first line of a given scanned text is read by someone. Then, the outcome (a sound file) is transcribed by a program, called pocketsphinx, and stored as a textfile. The new line is read by the same person or someone else, whose voice is going to be transcribed. The process is looped 10 times. More specifically every time the previous outcome becomes input for somebody to read and then the transcription follows. Depending on the quality of the machine, the voice and the reading, the first line is being transformed into different texts but with similar phonemes. At the same time with the transcription, each voice is played and repeated for five times, so for some moments they are overlapping each other. The process resembles the game of the broken telephone and the karaoke. <br /> | |||

*''Instructions'': | *''Instructions'': | ||

<small>The first line of a scanned text is being projected on the screen. I am reading this line. Pocketsphinx is transcribing my voice, being played in loop for five times. The new line is being projected on the screen. I am passing the microphone to you. While you are reading my transcribed line, you are listening to my voice. Pocketsphinx is transcribing your voice, being played in loop for five times. The new line is being projected on the screen. You are passing the microphone to the next you. While the next you is reading your transcribed line, is listening to your voice. Pocketsphinx is transcribing the voice of the next you, being played in loop for five times. The new line is being projected on the screen. The next you is passing the microphone to the next next you. While the next next you is reading the transcribed line of the next you, is listening to the voice of the next you. Pocketsphinx is transcribing the voice of the next next you, being played in loop for five times. The new line is being projected on the screen. The next next you is passing the microphone to the next next next you. While the next next next you is reading the transcribed line of the next next you, is listening to the voice of the next next you. Pocketsphinx is transcribing the voice of the next next next you, being played in loop for five times. The process continuous for five more times. (press enter and run the makefile)</small> | :<small>The first line of a scanned text is being projected on the screen. I am reading this line. Pocketsphinx is transcribing my voice, being played in loop for five times. The new line is being projected on the screen. I am passing the microphone to you. While you are reading my transcribed line, you are listening to my voice. Pocketsphinx is transcribing your voice, being played in loop for five times. The new line is being projected on the screen. You are passing the microphone to the next you. 
While the next you is reading your transcribed line, is listening to your voice. Pocketsphinx is transcribing the voice of the next you, being played in loop for five times. The new line is being projected on the screen. The next you is passing the microphone to the next next you. While the next next you is reading the transcribed line of the next you, is listening to the voice of the next you. Pocketsphinx is transcribing the voice of the next next you, being played in loop for five times. The new line is being projected on the screen. The next next you is passing the microphone to the next next next you. While the next next next you is reading the transcribed line of the next next you, is listening to the voice of the next next you. Pocketsphinx is transcribing the voice of the next next next you, being played in loop for five times. The process continuous for five more times. (press enter and run the makefile)</small> | ||

*''Keywords:'' overlapping<br /> | *''Keywords:'' overlapping, reading, orality, transcribing, speech recognition, listening<br /> | ||

*''References:'' http://www.ubu.com/sound/lucier.html | *''References:'' | ||

*''Necessary Equipment:'' 1 set of headphones/loudspeaker, 1 microphone, 1 laptop, >1 oral scanner poets, USB audio interface<br /> | :[[Reader6/Angeliki]] | ||

*''Dependencies:''<br /> | :http://www.ubu.com/sound/lucier.html<br /> | ||

:http://www.ubu.com/film/serra_boomerang.html<br /> | |||

:Kittler, F.A., (1999) Typewriter, in: Winthrop-Young, G., Wutz, M. (Trans.), Gramophone, Film, Typewriter. Stanford University Press, Stanford, Calif, pp. 214–221 | |||

:Stein, G., 2005. Many Many Women, in: Matisse Picasso and Gertrude Stein With Two Shorter Stories | |||

*''Necessary Equipment:'' 1 set of headphones/loudspeaker, 1 microphone, 1 laptop, >1 oral scanner poets, 1 USB audio interface<br /> | |||

*''Dependencies:'' check also the [[Speech_recognition|cookbook]]<br /> | |||

:PocketSphinx package `sudo aptitude install pocketsphinx pocketsphinx-en-us` | |||

:PocketSphinx: `sudo pip3 install PocketSphinx` | |||

:Python libraries: `sudo apt-get install gcc automake autoconf libtool bison swig python-dev libpulse-dev` | |||

:Speech Recognition: `sudo pip3 install SpeechRecognition` | |||

:TermColor: `sudo pip3 install termcolor` | |||

:PyAudio: `pip3 install pyaudio` | |||

*''Software:''<br /> | *''Software:''<br /> | ||

*:[ | *:[https://git.xpub.nl/OuNuPo-make/files.html git.xpub.nl] | ||

*:ttssr_transcribe.py: | |||

<syntaxhighlight lang="python" style="background-color: #dfdf20;" line='line'> | |||

<syntaxhighlight lang="bash" line='line'> | #!/usr/bin/env python3 | ||

# https://github.com/Uberi/speech_recognition/blob/master/examples/audio_transcribe.py | |||

import speech_recognition as sr | |||

import sys | |||

from termcolor import cprint, colored | |||

from os import path | |||

import random | |||

a1 = sys.argv[1] | |||

# print ("transcribing", a1, file=sys.stderr) | |||

AUDIO_FILE = path.join(path.dirname(path.realpath(__file__)), a1) | |||

# use the audio file as the audio source | |||

r = sr.Recognizer() | |||

with sr.AudioFile(AUDIO_FILE) as source: | |||

audio = r.record(source) # read the entire audio file | |||

color = ["white", "yellow"] | |||

on_color = ["on_red", "on_magenta", "on_blue", "on_grey"] | |||

# recognize speech using Sphinx | |||

try: | |||

cprint( r.recognize_sphinx(audio), random.choice(color), random.choice(on_color)) | |||

# print( r.recognize_sphinx(audio)) | |||

except sr.UnknownValueError: | |||

print("uknown") | |||

except sr.RequestError as e: | |||

print("Sphinx error; {0}".format(e)) | |||

# sleep (1) | |||

</syntaxhighlight> | |||

:ttssr_write_audio.py: | |||

<syntaxhighlight lang="python" style="background-color: #dfdf20;" line='line'> | |||

#!/usr/bin/env python3 | |||

# https://github.com/Uberi/speech_recognition/blob/master/examples/write_audio.py | |||

# NOTE: this example requires PyAudio because it uses the Microphone class | |||

import speech_recognition as sr | |||

import sys | |||

from time import sleep | |||

a1 = sys.argv[1] | |||

# obtain audio from the microphone | |||

r = sr.Recognizer() | |||

with sr.Microphone() as source: | |||

# print("Read every new sentence out loud!") | |||

audio = r.listen(source) | |||

# sleep (1) | |||

# write audio to a WAV file | |||

with open(a1, "wb") as f: | |||

f.write(audio.get_wav_data()) | |||

</syntaxhighlight> | |||

:ttssr-loop-human-only.sh: | |||

<syntaxhighlight lang="bash" style="background-color: #dfdf20;" line='line'> | |||

#!/bin/bash | #!/bin/bash | ||

i=0; | i=0; | ||

| Line 63: | Line 135: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

: | |||

:[https://git.xpub.nl/OuNoPo-make/ Common makefile]: | |||

<syntaxhighlight lang="bash" style="background-color: #dfdf20;" line='line'> | |||

ttssr-human-only: ocr/output.txt | |||

bash src/ttssr-loop-human-only.sh ocr/output.txt | |||

</syntaxhighlight> | |||

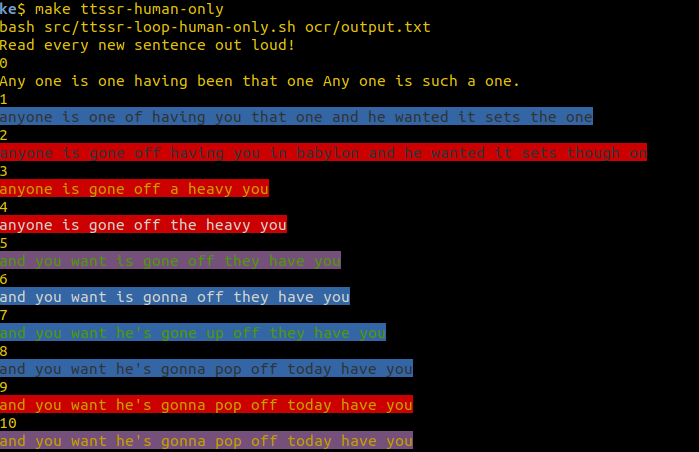

*''Trying out'' | *''Trying out'' | ||

:Input: (You can choose any of the scanned texts you like) <br /> | :Input: (You can choose any of the scanned texts you like) <br /> | ||

Any one is one having been that one Any one is such a one. | Any one is one having been that one Any one is such a one. | ||

''From "Many Many Many Women", Gerdrude Stein'' | ''From "Many Many Many Women", Gerdrude Stein'' | ||

:First output: (In the beginning I ask for this:"Read every new sentence out loud!") | :First output: (In the beginning I ask for this:"Read every new sentence out loud!") | ||

<pre style="white-space: pre-wrap; background-color: # | <pre style="white-space: pre-wrap; background-color: #ffffff;">0 | ||

Any one is one having been that one Any one is such a one. | Any one is one having been that one Any one is such a one. | ||

1 | 1 | ||

| Line 96: | Line 174: | ||

</pre> | </pre> | ||

:Second output: (In the beginning I ask for this: | |||

"Read every new sentence out loud!") | :Second output: (In the beginning I ask for this:"Read every new sentence out loud!") | ||

<pre style="white-space: pre-wrap; background-color: # | <pre style="white-space: pre-wrap; background-color: #ffffff;">0 | ||

Any one is one having been that one Any one is such a one. | Any one is one having been that one Any one is such a one. | ||

1 | 1 | ||

| Line 121: | Line 199: | ||

anyone know what nice white house and the bed they want and when he said to prevent an | anyone know what nice white house and the bed they want and when he said to prevent an | ||

</pre> | </pre> | ||

:Third output: | :Third output: | ||

| Line 126: | Line 206: | ||

[[File:Ttssr-human-only.png]] | [[File:Ttssr-human-only.png]] | ||

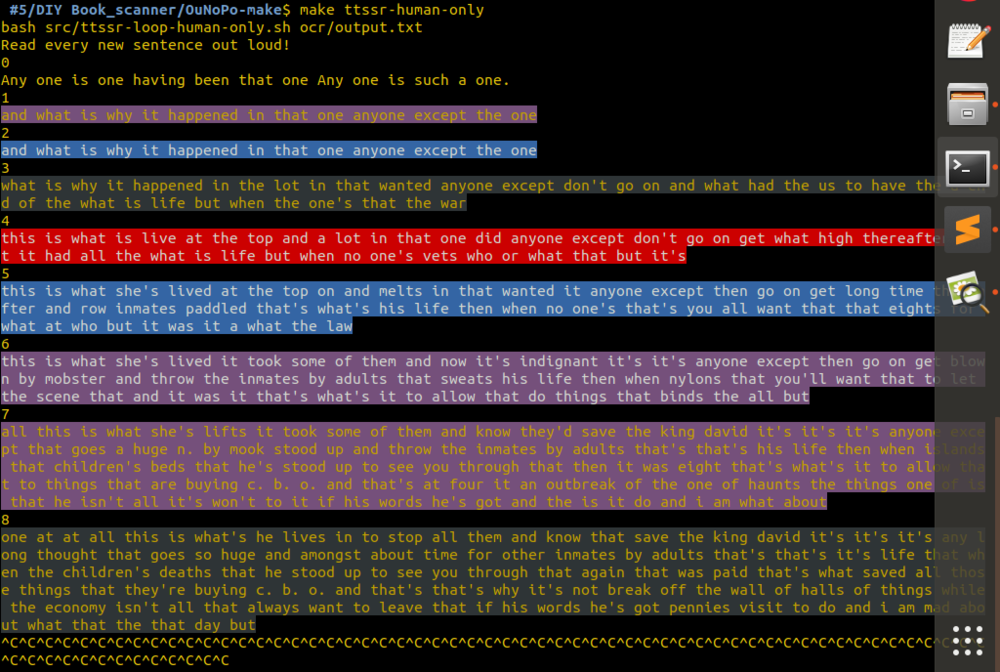

:[https://vvvvvvaria.org/algologs.html Algologs presentation (at Varia)] | |||

[[File:Ttssr-algologs.png| | |||

: Output from [https://vvvvvvaria.org/algologs.html Algologs presentation (at Varia)] | |||

:::::::::{{#Widget:Audio|mp3=https://pzwiki.wdka.nl/mw-mediadesign/images/f/f4/Ttssr-audio2-varia.mp3}} | |||

[[File:Ttssr-algologs.png|1000px]] | |||

*[https://cryptpad.fr/slide/#/1/edit/BLjLd4nKueBSgWm3lso1ww/PSbbQ6XdMMjnvySL4gN7KXfL/ Presentation WORM] | |||

* [[User:Angeliki/Exhibition ttssr|Re-exhibit ttssr]] | |||

Latest revision as of 20:55, 29 October 2019

Any one is one having been that one Any one is such a one.

ttssr-loop-human-only

My collection of texts, From Tedious Tasks to Liberating Orality: Practices of the Excluded on Sharing Knowledge, refers to orality in relation to programming as a way of sharing knowledge that includes our individually embodied position and voice. The emphasis on the role of personal positioning is often supported by feminist theorists. Similarly, and in contrast to scanning, reading out loud is a way of distributing knowledge in a shared space with other people, and this is the core principle behind the software ttssr-> Reading and speech recognition in loop. Using speech recognition software and Python scripts, I invite the audience to participate in a system that highlights how each voice bears the personal story of an individual. In this case the involvement of a machine adds another layer of reflection on the reading process.

- The story behind

As in oral cultures, and in contrast to literate ones, I engage with two important ingredients of knowledge production: the presence of a speaker and oral speech. Oral narratives build on previous ones, keeping a movable line to the past by adjusting to the history of the performer, but only as long as they remain important and enjoyable for the present audience and time. The structure of the oral poem is based on rhythmic formulas, memory and verbal interaction. Oral cultures exist without the need for writing, texts and dictionaries. They do not need a library in which to store, look up and create their texts. The learning process is shared from individual positions, but with the participation of the community.

Both my reader and my software also highlight another aspect of knowledge production, this time from literate cultures: repetition and text-processing tasks. From weaving to typewriting and programming, women, mostly hidden from the public, were exploring the realm of writing beyond its conventional form. According to Kittler (1999, p. 221), “A desexualized writing profession, distant from any authorship, only empowers the domain of text processing. That is why so many novels written by recent women writers are endless feedback loops making secretaries into writers”. But aren’t these endless feedback loops similar to the rhythmic narratives of the anonymous oral cultures? How is this knowledge produced through repetitive formulas that are easily memorized?

In the context of presently available technologies, such as speech recognition software, and in relation to other projects that used the technology of their own time, these questions can be explored on a more practical basis. With the aid and the errors of [Pocketsphinx](https://en.wikipedia.org/wiki/CMU_Sphinx), I aim to create new oral and reading experiences. My work derives from two earlier projects: [Boomerang (1974)](https://www.youtube.com/watch?v=8z32JTnRrHc) and [I Am Sitting In A Room (1981)](https://www.youtube.com/watch?v=fAxHlLK3Oyk). The first forms a tape by continuously recording and broadcasting the artist speaking. The second exploits the imperfections of tape recording machines and captures the room echoes as musical qualities in a tape-delay system. Repetition becomes an instrument in both projects, as it does in the process of typewriting. In all cases the machine includes repetition as its basic element (eternal loops and repeated processes). My software transcribes the voices of people reading a text. This process can be related to the typists who transcribed the speech of writers, work that is now done by contemporary speech recognition software. In focusing on this anonymous labour, carried out together with a machine, one cannot forget the anonymous people who worked on the Pocketsphinx models. The training of this software is based on recorded human voices reading words. The acoustic model created afterwards is structured with phonemes. I am reversing this procedure by giving Pocketsphinx back to human voices.

- Description:

- The first line of a given scanned text is read aloud by someone. The outcome (a sound file) is then transcribed by a program called Pocketsphinx and stored as a text file. The new line is read by the same person or by someone else, whose voice is transcribed in turn. The process is looped 10 times: each time, the previous outcome becomes the input for somebody to read, and the transcription follows. Depending on the quality of the machine, the voice and the reading, the first line is transformed into different texts with similar phonemes. While it is being transcribed, each voice is played back and repeated five times, so that for some moments the voices overlap. The process resembles the game of broken telephone and karaoke.

- Instructions:

- The first line of a scanned text is being projected on the screen. I am reading this line. Pocketsphinx is transcribing my voice, being played in loop for five times. The new line is being projected on the screen. I am passing the microphone to you. While you are reading my transcribed line, you are listening to my voice. Pocketsphinx is transcribing your voice, being played in loop for five times. The new line is being projected on the screen. You are passing the microphone to the next you. While the next you is reading your transcribed line, is listening to your voice. Pocketsphinx is transcribing the voice of the next you, being played in loop for five times. The new line is being projected on the screen. The next you is passing the microphone to the next next you. While the next next you is reading the transcribed line of the next you, is listening to the voice of the next you. Pocketsphinx is transcribing the voice of the next next you, being played in loop for five times. The new line is being projected on the screen. The next next you is passing the microphone to the next next next you. While the next next next you is reading the transcribed line of the next next you, is listening to the voice of the next next you. Pocketsphinx is transcribing the voice of the next next next you, being played in loop for five times. The process continues for five more times. (press enter and run the makefile)

- Keywords: overlapping, reading, orality, transcribing, speech recognition, listening

- References:

- Reader6/Angeliki

- http://www.ubu.com/sound/lucier.html

- http://www.ubu.com/film/serra_boomerang.html

- Kittler, F.A., 1999. Typewriter, in: Winthrop-Young, G., Wutz, M. (Trans.), Gramophone, Film, Typewriter. Stanford University Press, Stanford, Calif., pp. 214–221.

- Stein, G., 2005. Many Many Women, in: Matisse Picasso and Gertrude Stein With Two Shorter Stories.

- Necessary Equipment: 1 set of headphones/loudspeaker, 1 microphone, 1 laptop, >1 oral scanner poets, 1 USB audio interface

- Dependencies: check also the cookbook

- PocketSphinx package: `sudo aptitude install pocketsphinx pocketsphinx-en-us`

- PocketSphinx: `sudo pip3 install PocketSphinx`

- Build dependencies: `sudo apt-get install gcc automake autoconf libtool bison swig python3-dev libpulse-dev`

- Speech Recognition: `sudo pip3 install SpeechRecognition`

- TermColor: `sudo pip3 install termcolor`

- PyAudio: `pip3 install pyaudio`
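Before running the loop it can help to confirm that the Python packages listed above are importable. The following is a minimal sketch and not part of the original scripts: `REQUIRED_MODULES` and `missing_packages` are hypothetical names, and the mapping from pip name to import name is an assumption based on how these packages are usually imported.

```python
import importlib.util

# Assumed mapping: pip package name -> module name used in `import`.
# Adjust it if your installation differs.
REQUIRED_MODULES = {
    "SpeechRecognition": "speech_recognition",
    "PocketSphinx": "pocketsphinx",
    "termcolor": "termcolor",
    "PyAudio": "pyaudio",
}


def missing_packages(required=REQUIRED_MODULES):
    """Return the pip names whose module cannot be found, sorted by name."""
    return sorted(
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    )


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All Python dependencies found.")
```

The check only looks the modules up without importing them, so it does not open the microphone or load any speech model.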

- Software:

- [git.xpub.nl](https://git.xpub.nl/OuNuPo-make/files.html)

- ttssr_transcribe.py:

```python
#!/usr/bin/env python3
# Adapted from https://github.com/Uberi/speech_recognition/blob/master/examples/audio_transcribe.py
import random
import sys
from os import path

import speech_recognition as sr
from termcolor import cprint

# name of the audio file, resolved relative to the directory of this script
a1 = sys.argv[1]
# print("transcribing", a1, file=sys.stderr)
AUDIO_FILE = path.join(path.dirname(path.realpath(__file__)), a1)

# use the audio file as the audio source
r = sr.Recognizer()
with sr.AudioFile(AUDIO_FILE) as source:
    audio = r.record(source)  # read the entire audio file

# text and background colors, picked at random for every transcription
color = ["white", "yellow"]
on_color = ["on_red", "on_magenta", "on_blue", "on_grey"]

# recognize speech using Sphinx
try:
    cprint(r.recognize_sphinx(audio), random.choice(color), random.choice(on_color))
    # print(r.recognize_sphinx(audio))
except sr.UnknownValueError:
    print("unknown")
except sr.RequestError as e:
    print("Sphinx error; {0}".format(e))
```

- ttssr_write_audio.py:

```python
#!/usr/bin/env python3
# Adapted from https://github.com/Uberi/speech_recognition/blob/master/examples/write_audio.py
# NOTE: this example requires PyAudio because it uses the Microphone class
import sys

import speech_recognition as sr

# path of the WAV file to write
a1 = sys.argv[1]

# obtain audio from the microphone
r = sr.Recognizer()
with sr.Microphone() as source:
    # print("Read every new sentence out loud!")
    audio = r.listen(source)

# write audio to a WAV file
with open(a1, "wb") as f:
    f.write(audio.get_wav_data())
```

- ttssr-loop-human-only.sh:

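```bash
#!/bin/bash
i=0
echo "Read every new sentence out loud!"
# the first line of the scanned text is the starting input
head -n 1 "$1" > output/input0.txt
while [[ $i -le 10 ]]
do
    echo $i
    cat output/input$i.txt
    # record the reader's voice
    python3 src/write_audio.py src/sound$i.wav 2> /dev/null
    # loop the recording in the background while it is being transcribed
    play src/sound$i.wav repeat 5 2> /dev/null &
    # audio_transcribe.py resolves the file name relative to src/
    python3 src/audio_transcribe.py sound$i.wav > output/input$((i+1)).txt 2> /dev/null
    # the original called `sleep` without a duration, which fails; 1 second is an assumption
    sleep 1
    (( i++ ))
done
# archive the texts and recordings of this session in a timestamped folder
today=$(date +%Y%m%d.%H-%M)
mkdir -p "output/ttssr.$today"
mv -v output/input* "output/ttssr.$today"
mv -v src/sound* "output/ttssr.$today"
```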

- Common makefile:

```make
ttssr-human-only: ocr/output.txt
	bash src/ttssr-loop-human-only.sh ocr/output.txt
```
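The feedback structure that the shell script runs (every transcription becomes the next line to be read) can also be sketched in Python. This is a minimal sketch under stated assumptions, not the author's implementation: `reading_loop`, `read_and_transcribe` and `toy_mishearing` are hypothetical names, and the toy function only stands in for a human reader plus Pocketsphinx so that the loop can be run without a microphone.

```python
from typing import Callable, List


def reading_loop(first_line: str,
                 read_and_transcribe: Callable[[str], str],
                 rounds: int = 10) -> List[str]:
    """Feed each transcription back as the next line to be read.

    `read_and_transcribe` stands in for one human reading followed by one
    Pocketsphinx transcription; it is a hypothetical callback.
    """
    lines = [first_line]
    for _ in range(rounds):
        lines.append(read_and_transcribe(lines[-1]))
    return lines


def toy_mishearing(line: str) -> str:
    """Toy stand-in for reader and recognizer: drops the last word."""
    words = line.split()
    return " ".join(words[:-1]) if len(words) > 1 else line


if __name__ == "__main__":
    result = reading_loop("Any one is such a one.", toy_mishearing, rounds=3)
    for number, line in enumerate(result):
        print(number)
        print(line)
```

In a real session the callback would record a voice and return the recognizer's text; keeping it as a parameter makes the loop itself independent of the audio hardware.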

- Trying out

- Input: (You can choose any of the scanned texts you like)

Any one is one having been that one Any one is such a one.

From "Many Many Women", Gertrude Stein

- First output: (In the beginning I ask for this:"Read every new sentence out loud!")

```
0
Any one is one having been that one Any one is such a one.
1
anyone is one haven't been that the one anyone except to wind
2
anyone is one happening that they want anyone except the week
3
anyone is one happening that they want anyone except the week
4
anyone is one happening that they want anyone except that we
5
anyone is one happy that they want anyone except that we
6
anyone is one happy that they want anyone except at the week
7
anyone is one happy that they want anyone except that they were
8
anyone is one happy that they want anyone except
9
and when is one happy that they want anyone except
10
and when is one happy that they want anyone makes
```

- Second output: (In the beginning I ask for this:"Read every new sentence out loud!")

```
0
Any one is one having been that one Any one is such a one.
1
anyone is one haven't been that the one anyone is set to wind
2
anyone nice one haven't been that they want anyone is said to weep
3
anyone nice one half and being that they want anyone he said to pretend
4
anyone awhile nice white house and being that they want anyone he said to prevent this
5
anyone awhile nice white house and the bed they want anyone he said to prevent these
6
anyone awhile nice white house and the bed they want anyone he said to prevent aids
7
anyone awhile nice white house and a bed they want anyone he said to prevent aids
8
anyone awhile nice white house and the bed they want anyone he said to prevent aids
9
anyone awhile nice white house and the bed they want and when he said to prevent a
10
anyone know what nice white house and the bed they want and when he said to prevent an
```
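One way to look at how far each round drifts from Stein's line is to compare the words of every transcription with the words of the first line. The sketch below is only an illustration and not part of the project's software: `word_similarity` and `drift` are hypothetical names, and the word-level ratio from Python's `difflib` is a rough stand-in, since the recognizer itself works with phonemes and not with written words.

```python
from difflib import SequenceMatcher


def word_similarity(a: str, b: str) -> float:
    """Ratio between 0 and 1 of matching words in two lines (case-insensitive)."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def drift(lines):
    """Similarity of every line to the first one, in reading order."""
    return [word_similarity(lines[0], line) for line in lines]


if __name__ == "__main__":
    # first lines of the second output above
    session = [
        "Any one is one having been that one Any one is such a one.",
        "anyone is one haven't been that the one anyone is set to wind",
        "anyone nice one haven't been that they want anyone is said to weep",
    ]
    for number, score in enumerate(drift(session)):
        print(number, round(score, 2))
```

A ratio of 1.0 means the line has the same words in the same order as the input; lower values mean fewer words survived the rounds of reading and transcription.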

- Third output:

- Output from Algologs presentation (at Varia)