User:Francg/expub/specialissue2/dev2: Difference between revisions

No edit summary |

No edit summary |

||

| (11 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<div style="float: left; color:#00FF40; margin: 0 15px 0 0; width: | <div style="float: left; color:#00FF40; margin: 0 15px 0 0; width: 850px; font-size:120%; line-height: 1.3em; letter-spacing: 0.8px;"> | ||

<br>Synapse + Kinect | <br>Synapse + Kinect | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/1/11/2_Synapse-Kinect.png | |||

<br> | <br> | ||

| Line 12: | Line 12: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/1/18/Ableton.png | |||

<br> | <br> | ||

| Line 55: | Line 55: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/e/e5/Vide-help.png | |||

<br> | <br> | ||

| Line 64: | Line 64: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/1/11/Motion-detection.png | |||

<br> | <br> | ||

| Line 82: | Line 82: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/0/0e/Grey-rgba.png | |||

<br> | <br> | ||

| Line 91: | Line 91: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/5/5d/Audiofeedback-voice.png | |||

<br> | <br> | ||

<br> | <br> | ||

| Line 112: | Line 112: | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/c/ce/Audio-recording.png | |||

<br> | <br> | ||

<br> | <br> | ||

<br> | <br> | ||

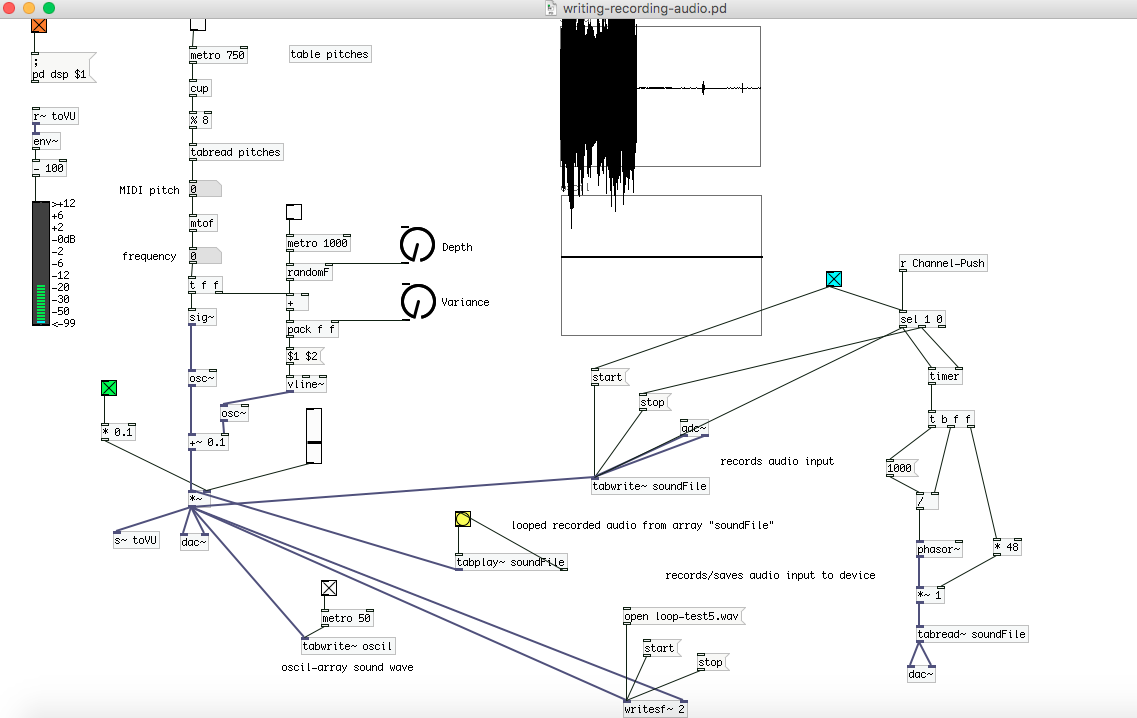

Audio recording [tabwrite~] + [tabplay~] looped + [r Channel-Push] | |||

<br> | <br> | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/4/4c/Audio-feedback1.png | |||

<br> | |||

<br> | |||

https://pzwiki.wdka.nl/mw-mediadesign/images/e/e7/Audio-feedback2.png | |||

<br> | |||

<br> | |||

<br> | |||

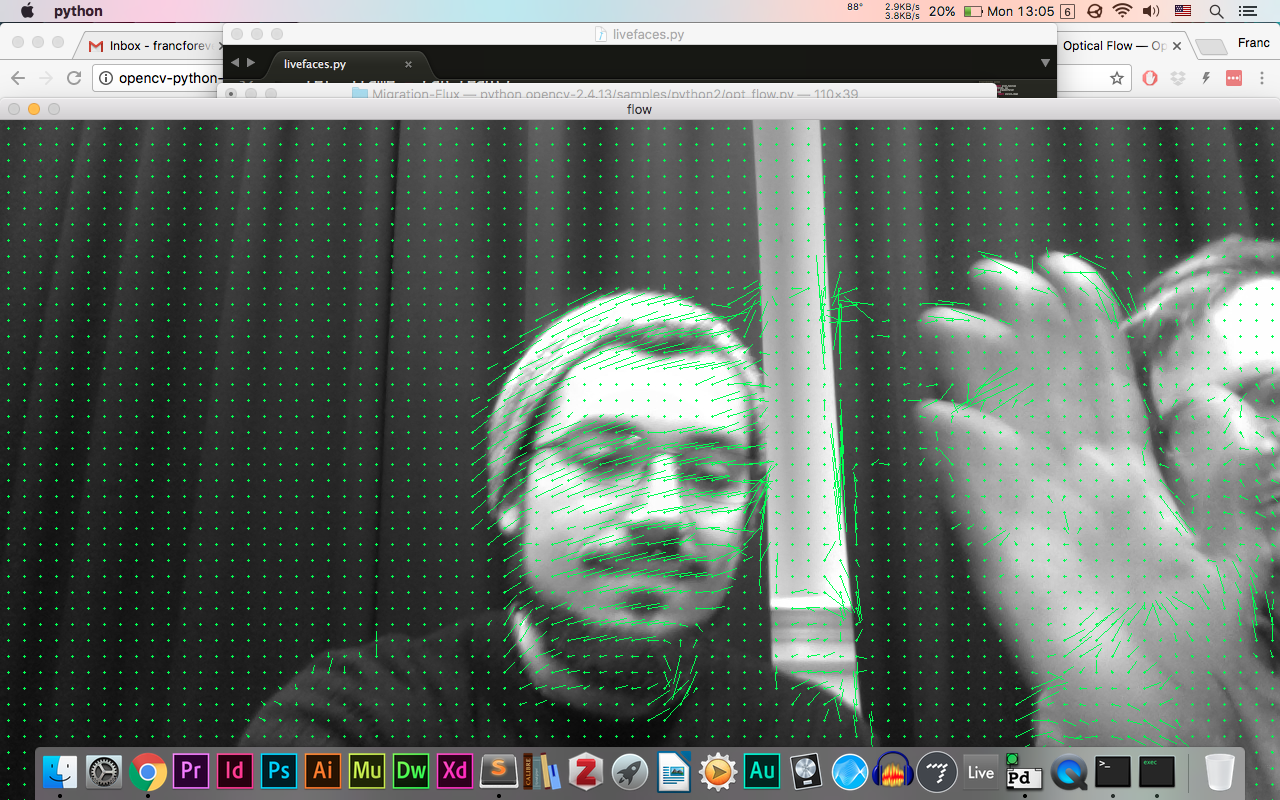

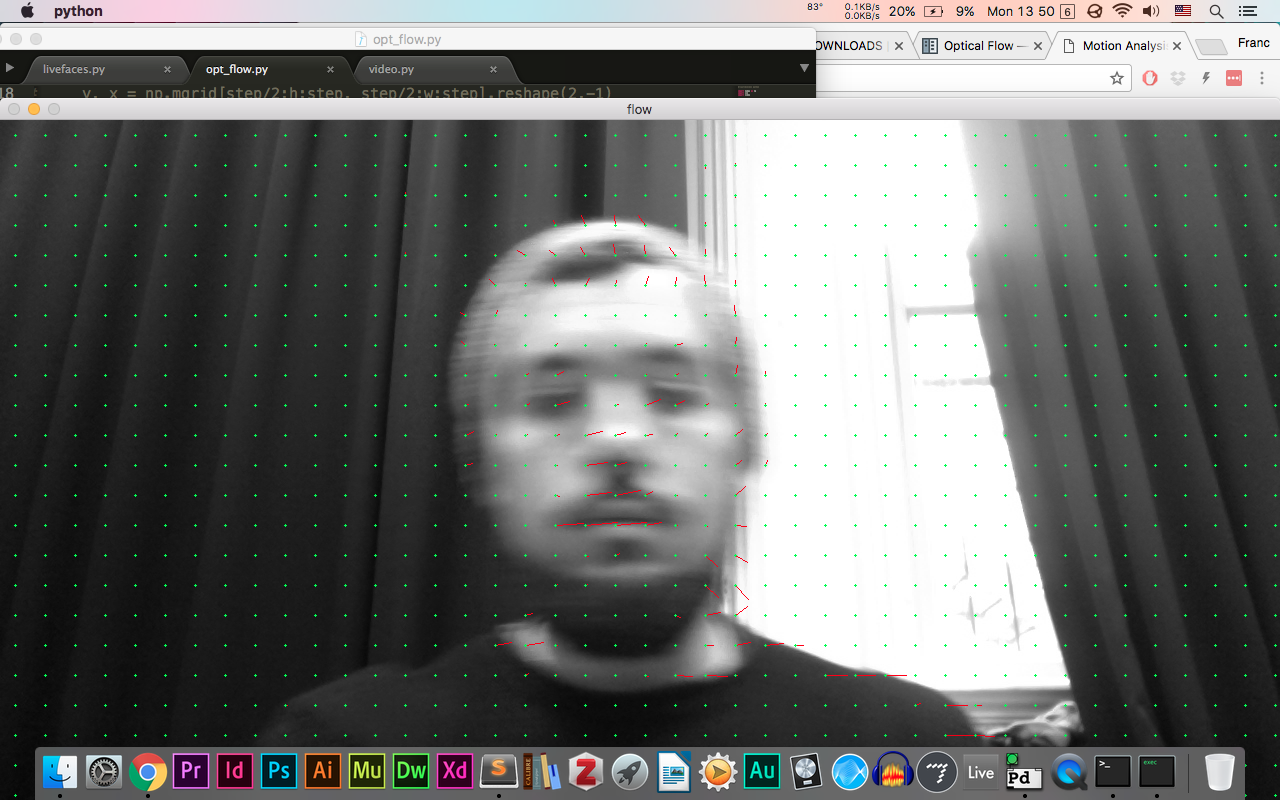

Python Opt_flow_py + video input <br> | |||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/a/a2/Opt-flow-py-3.png<br> | |||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/e/e9/Opt-flow-py.png | |||

<br> | <br> | ||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/e/e1/Opt-flow-py-2.png | |||

<br> | <br> | ||

<br> | <br> | ||

<br> | <br> | ||

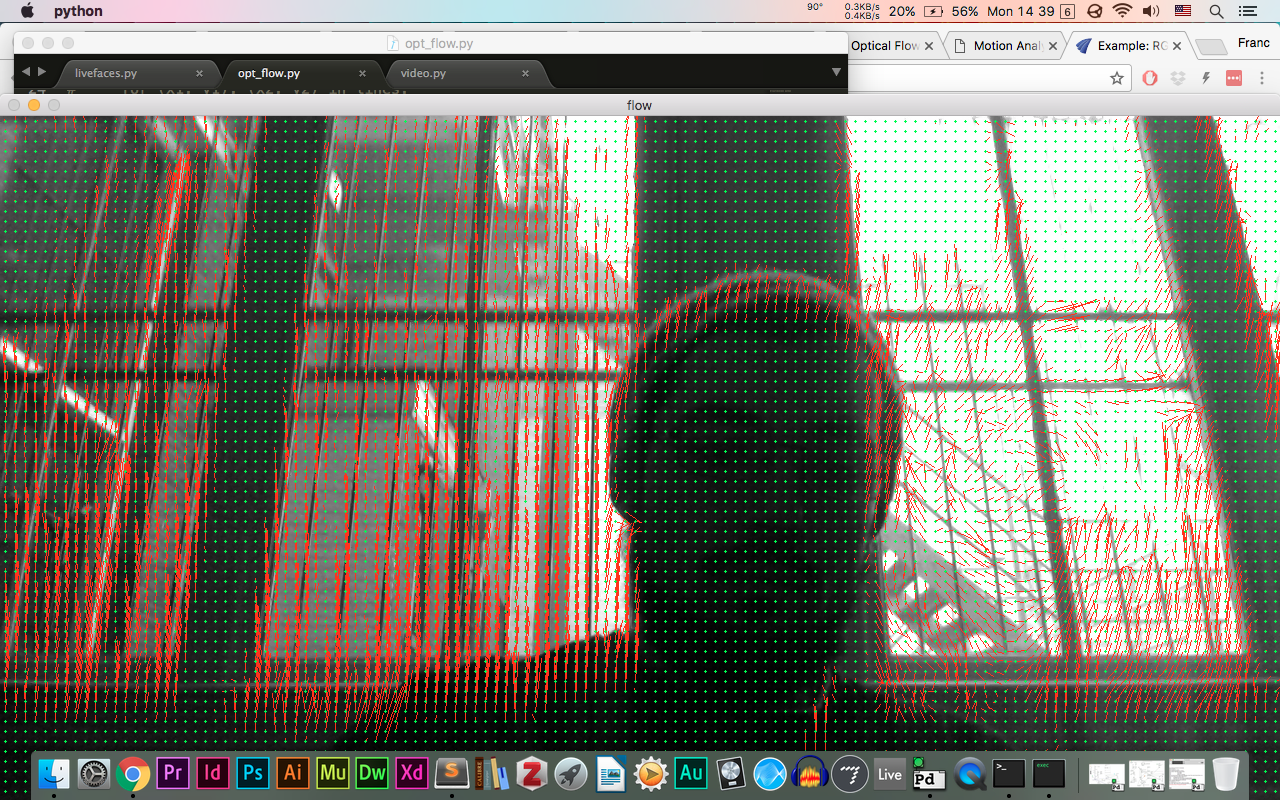

Python Opt_flow_py + OSC + Pd + tabwrite + tabplay <br> | |||

<br> | <br> | ||

https://pzwiki.wdka.nl/mw-mediadesign/images/7/7e/Osc_recorder-2.png | |||

https://pzwiki.wdka.nl/mw-mediadesign/images/0/0e/Osc_recorder.png | |||

</div> | </div> | ||

Latest revision as of 15:56, 7 March 2017

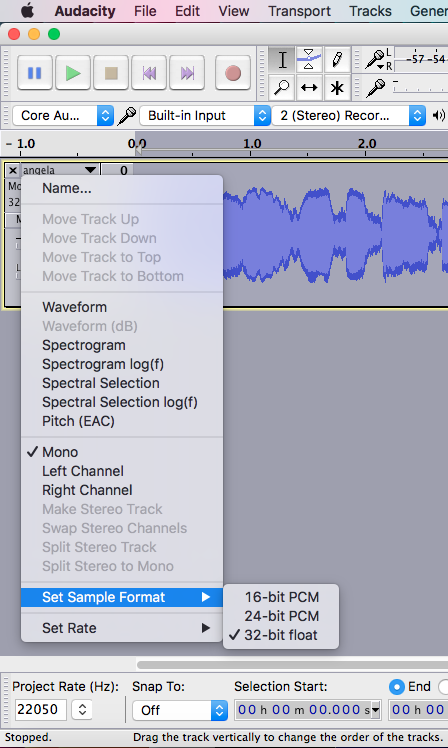

Synapse + Kinect

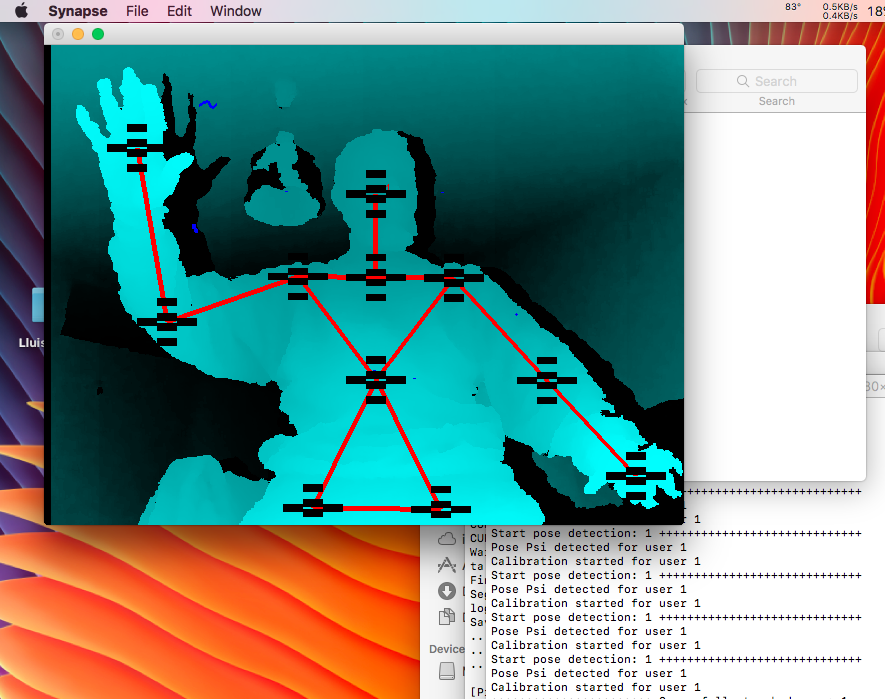

Synapse + Kinect + Ableton + patches to merge and synchronize Ableton's audio samples with the body's limbs

* * Meeting notes / Feedback * *

- How can a body be represented as a score?

- "Biovision Hierarchy" (BVH) = a file format for motion capture data.

- Femke Snelting reads the Biovision Hierarchy Standard

- Systems of notations and choreography - Johanna's thesis in the wiki
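To make the "body as score" question a little more concrete, here is a minimal sketch that lists the joints and channels declared in the HIERARCHY section of a BVH file. The sample skeleton and the function name `list_joints` are invented for illustration. It is not a full BVH parser: it ignores offsets, nesting and the MOTION data.

```python
# A tiny, made-up skeleton in BVH syntax (illustrative only).
SAMPLE_BVH = """HIERARCHY
ROOT Hips
{
  OFFSET 0.0 0.0 0.0
  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation
  JOINT LeftUpLeg
  {
    OFFSET 3.9 0.0 0.0
    CHANNELS 3 Zrotation Xrotation Yrotation
    End Site
    {
      OFFSET 0.0 -18.0 0.0
    }
  }
}
MOTION
Frames: 1
Frame Time: 0.033333
0 0 0 0 0 0 0 0 0
"""


def list_joints(bvh_text):
    """Return [(joint_name, [channel, ...]), ...] in file order."""
    joints = []
    for line in bvh_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "MOTION":
            break  # the hierarchy ends where the motion data starts
        if tokens[0] in ("ROOT", "JOINT"):
            joints.append((tokens[1], []))
        elif tokens[0] == "CHANNELS" and joints:
            count = int(tokens[1])
            joints[-1] = (joints[-1][0], tokens[2:2 + count])
    return joints


for name, channels in list_joints(SAMPLE_BVH):
    print(name, channels)
```

Each frame line in the MOTION section then holds one value per declared channel, in the same order, which is what makes the file readable as a kind of score.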

Raspberry Pi *

- Floppy disk: contains a patch from Pd.

- Box: Floppy Drive, camera, mic...

- Server: Documentation such as images, video, prototypes, resources...

- There are two different research paths, each of which could be explored further on its own:

1 - on one hand, * motion capture * with tools/software such as "Kinect", the "Synapse" app, "Max MSP", "Ableton", etc.

2 - on the other hand, data / information reading. * This can be further developed and simplified. * However, motion capture using Pd and an ordinary webcam to make audio effects could be efficiently linked to this path.

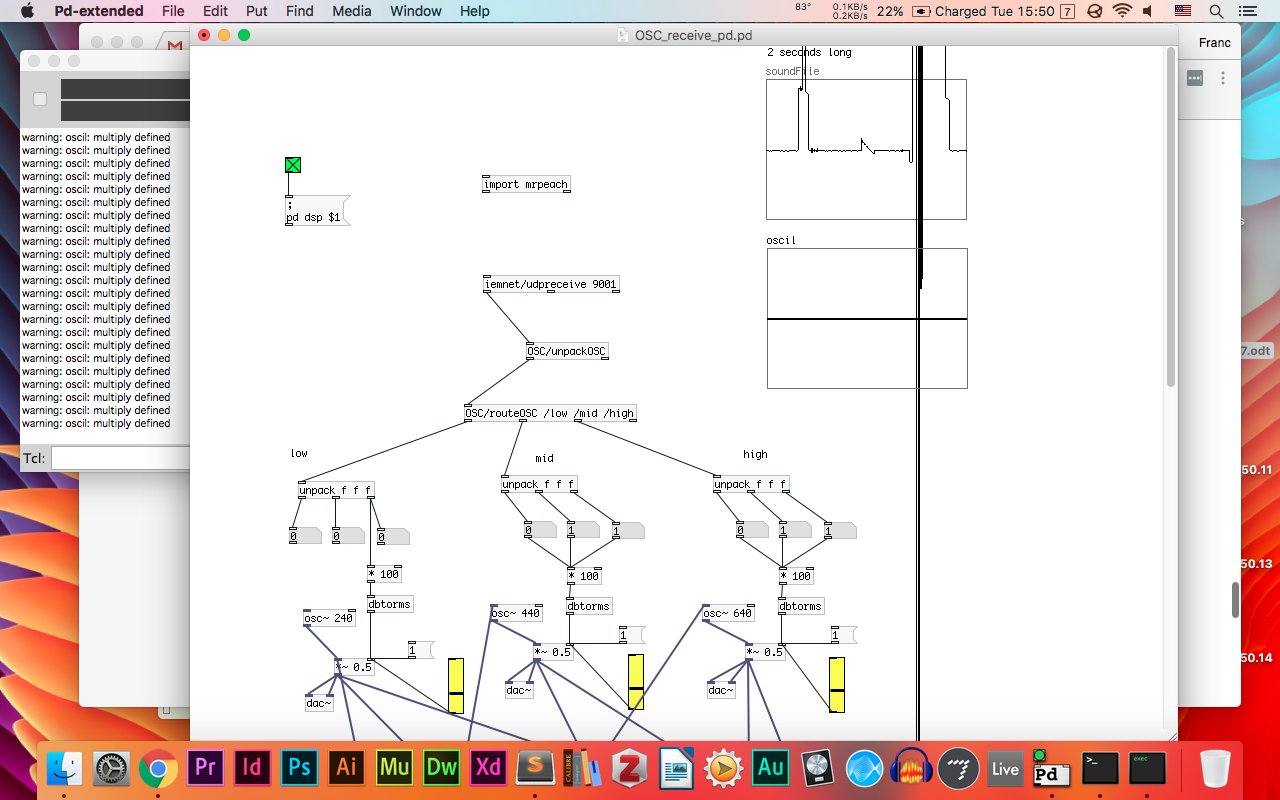

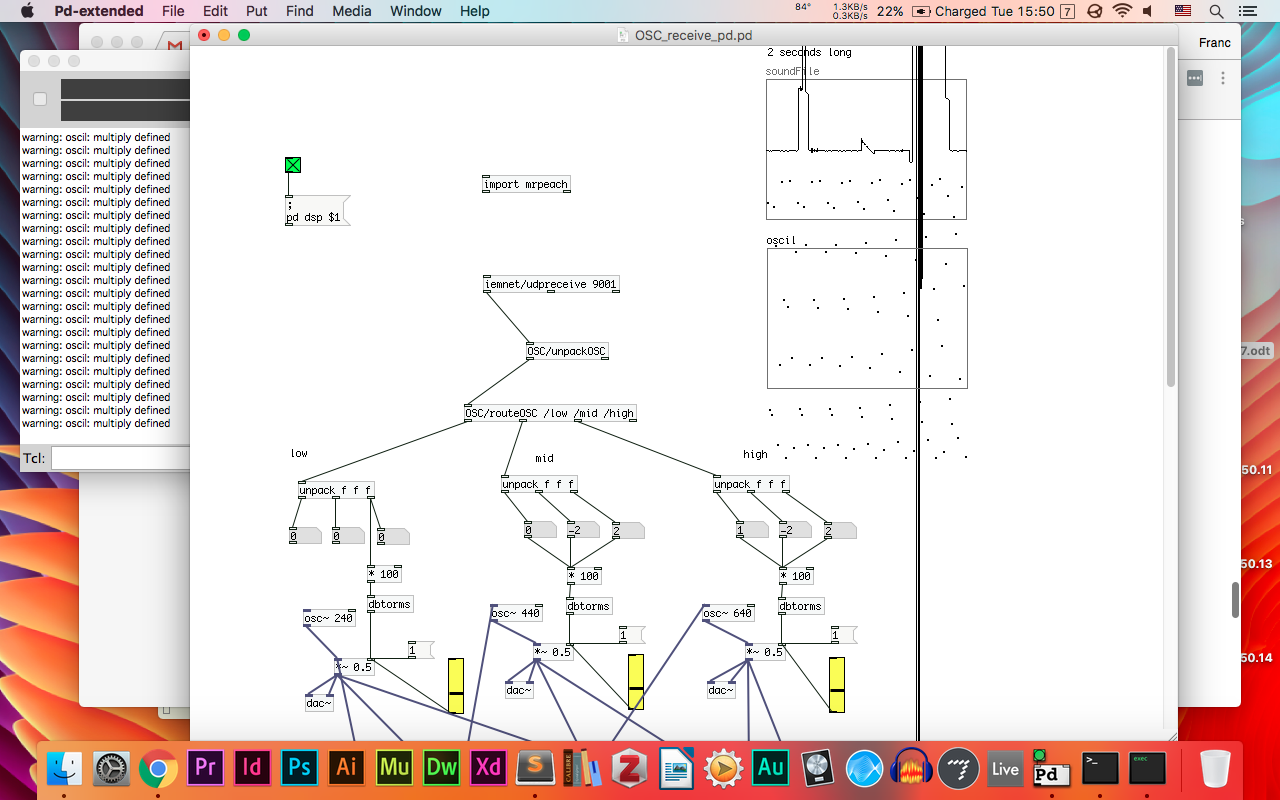

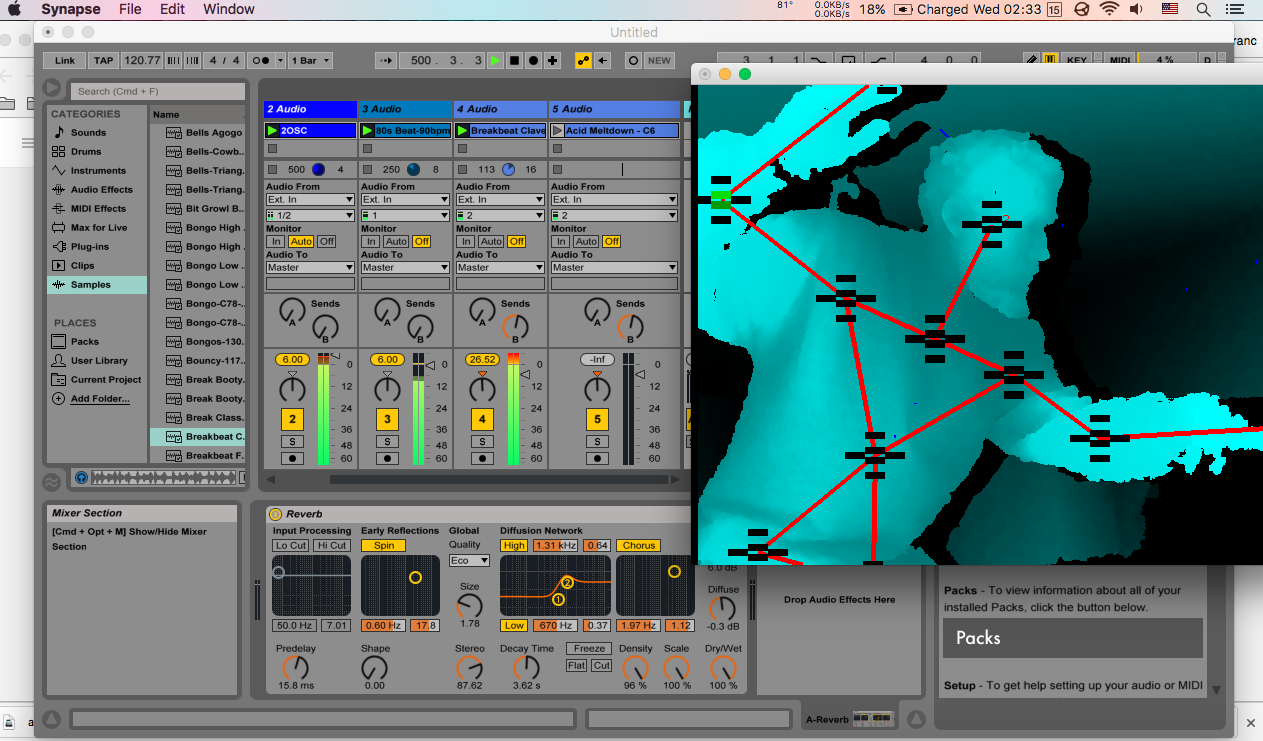

Detecting video input from my laptop's webcam in Pd

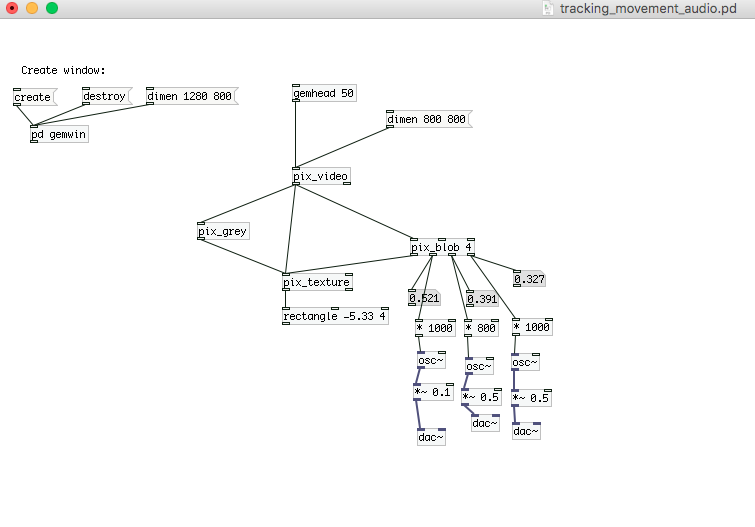

Motion Detection - “blob” object and oscillators
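The idea of driving oscillators from detected motion can be sketched outside Pd as well. The example below uses plain frame differencing (a swapped-in technique, not necessarily what the blob object does internally) to find where the image changed, then maps the horizontal position to a frequency. The function names, the threshold and the 110-880 Hz range are all assumptions chosen for illustration.

```python
def motion_centroid(prev, curr, threshold=30):
    """Return the (x, y) centroid of pixels whose grey value changed by
    more than `threshold` between two frames, or None if nothing moved."""
    xs, ys = [], []
    for y, (row_a, row_b) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def position_to_frequency(x, width, low_hz=110.0, high_hz=880.0):
    """Map a horizontal position (0 .. width-1) linearly to a frequency."""
    return low_hz + (high_hz - low_hz) * x / (width - 1)


# Two tiny 4x4 greyscale frames: one pixel lights up at x=3, y=1.
previous = [[0] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[1][3] = 255

centroid = motion_centroid(previous, current)
if centroid is not None:
    print(position_to_frequency(centroid[0], width=4))
```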

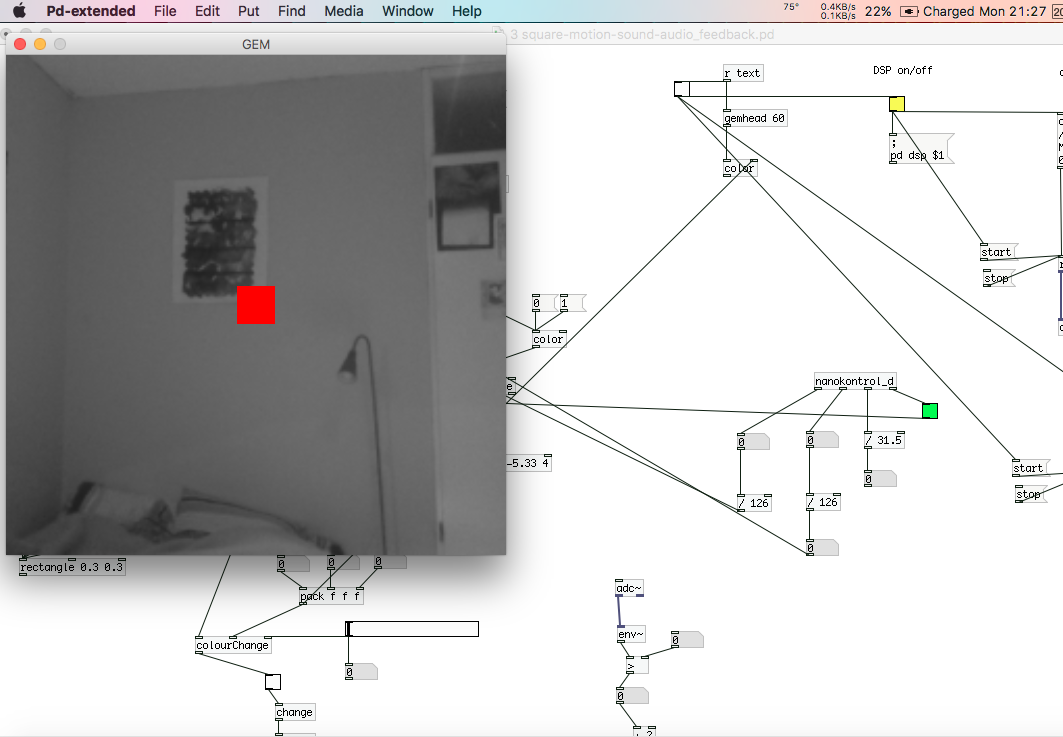

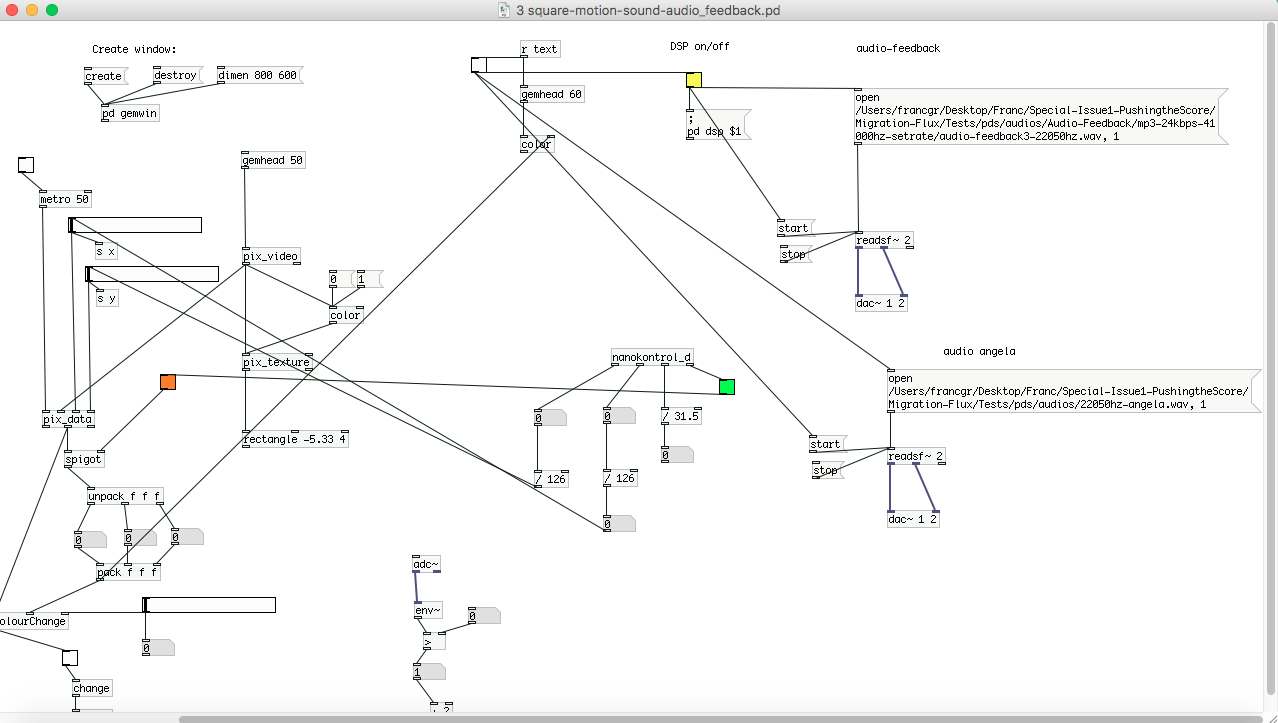

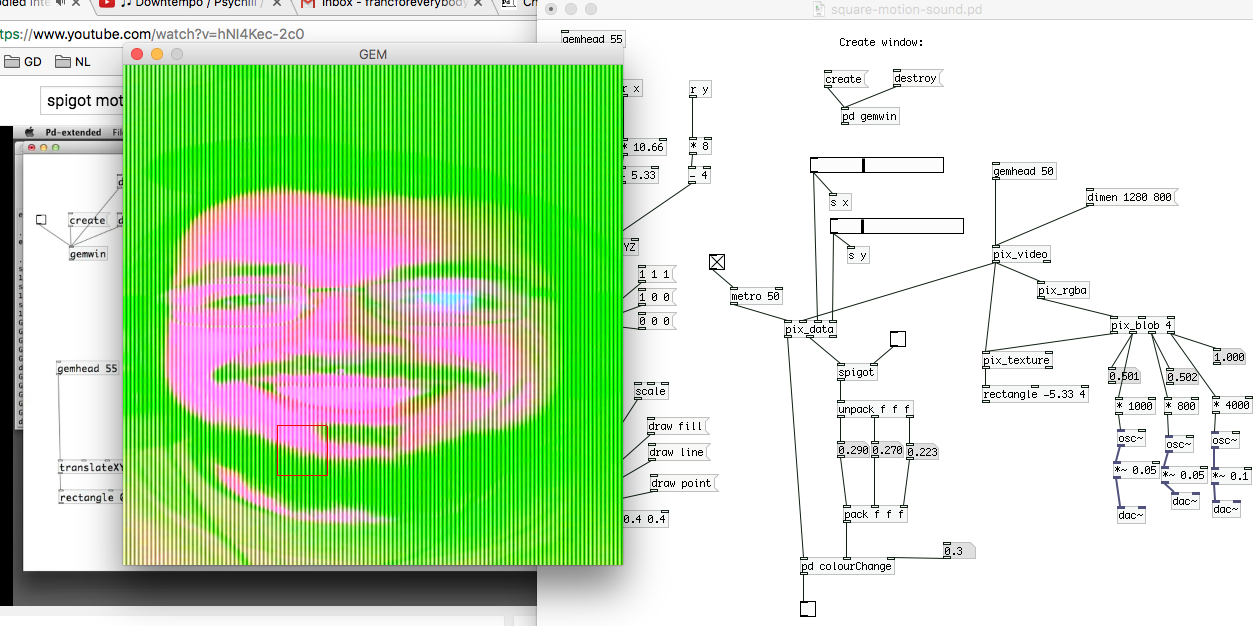

Color tracking inside a square with the “spigot” + “blob” objects. This can be achieved in rgba or grey,

and also by combining "grey" and "rgba" simultaneously, or with any other screen noise effect.
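In Pd, [spigot] passes messages through only while its right inlet is non-zero. A rough Python equivalent of "track only inside the square" is to let a position through only when it falls inside a given region. The function names and coordinates below are hypothetical and only illustrate the gating logic, not the actual patch.

```python
def inside_square(x, y, left, top, size):
    """True when (x, y) lies inside the square with the given top-left corner."""
    return left <= x < left + size and top <= y < top + size


def gated_positions(positions, left, top, size):
    """Keep only tracked positions inside the square, like an open spigot."""
    return [(x, y) for x, y in positions if inside_square(x, y, left, top, size)]


tracked = [(1, 1), (5, 5), (9, 2)]
print(gated_positions(tracked, left=0, top=0, size=6))
```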

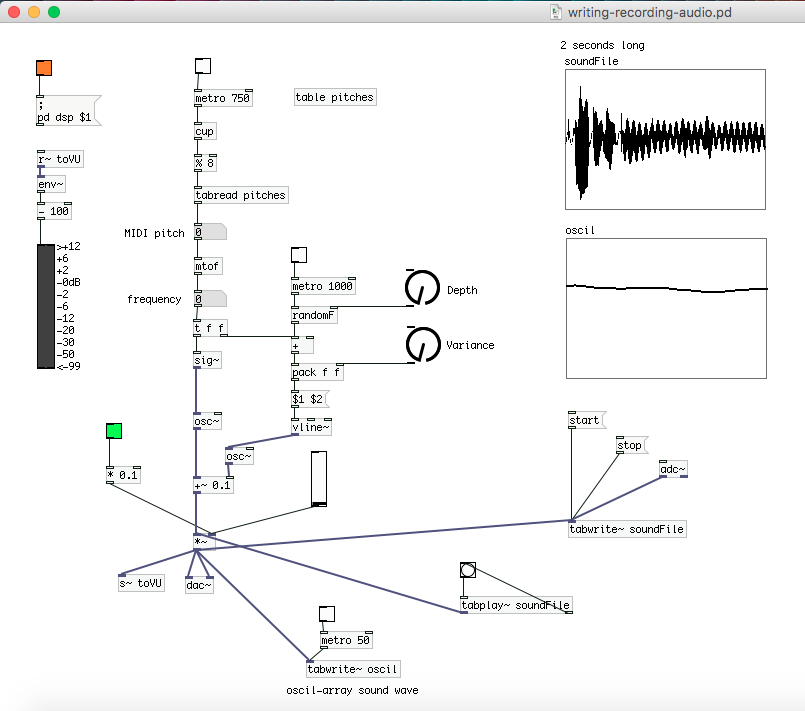

The same process can be performed with self-generated, imported audio files. Their sample rate must match the one set in Pd's media/audio settings in order to avoid errors.
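One way to check a file before loading it into Pd is to read its sample rate with Python's standard `wave` module. This is a small sketch for WAV files only; the function name is made up, and the default of 44100 Hz is an assumption that should be replaced by whatever rate Pd's audio settings actually show.

```python
import wave


def sample_rate_matches(path, expected_hz=44100):
    """Return True when the WAV file at `path` uses the expected sample rate."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate() == expected_hz
```

For example, `sample_rate_matches("voice.wav", 48000)` (with a hypothetical file name) would return `False` for a 44.1 kHz recording, signalling that it should be resampled first.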

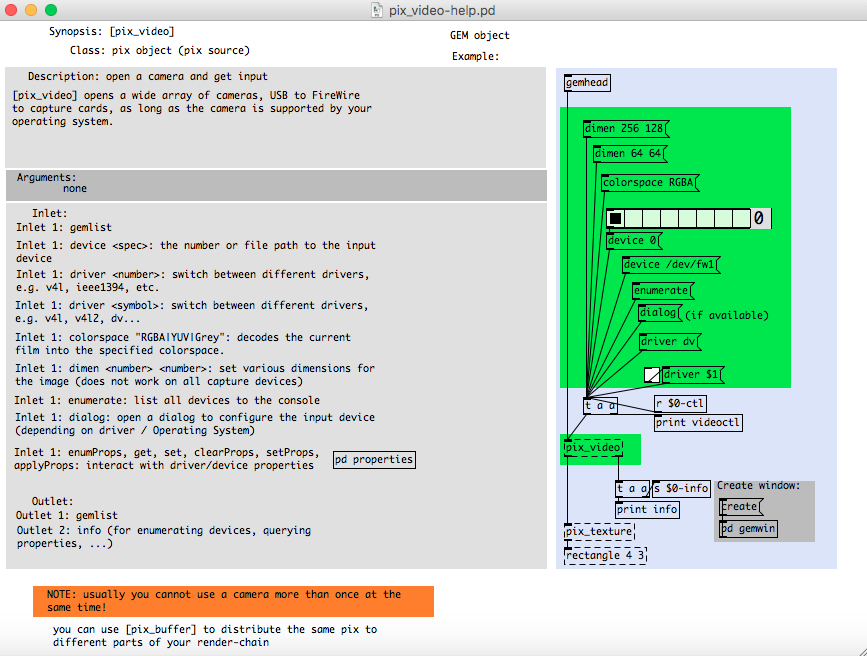

In order to better understand how audio feedback works, I generated a series of feedback loops by screen recording my prototypes with QuickTime, using the microphone input(s) along with specific system audio settings. I later edited them in Audacity.

File:Audio1.ogg

File:Audio2.ogg

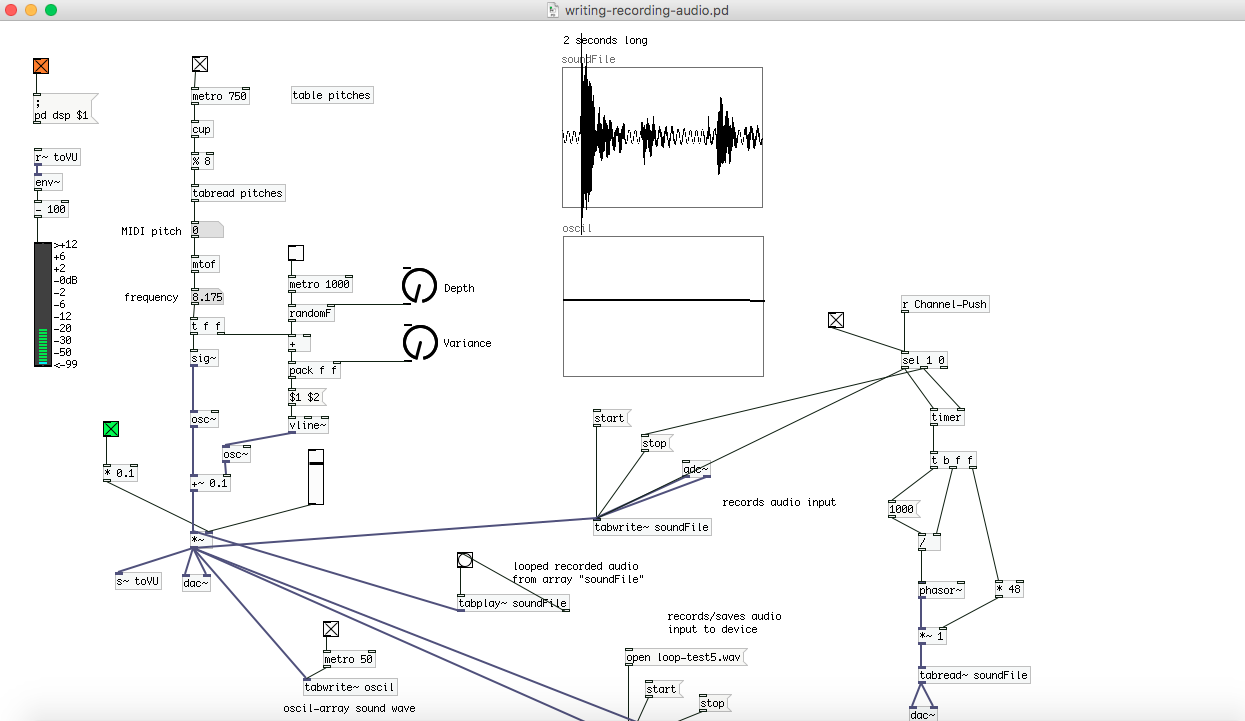

Audio recording + audio playback using the [tabwrite~] and [tabplay~] objects. This makes it possible to create a loop by recording multiple audio clips (which can also overlap, depending on their length).
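The record-then-loop idea can be sketched in Python with a fixed-size table. Note one deliberate simplification: Pd's [tabwrite~] overwrites the array, whereas this sketch sums new material onto what is already there so that overlapping recordings are visible in a single buffer. The class name `LoopTable` is hypothetical.

```python
class LoopTable:
    """A fixed-length sample table that can be recorded into and looped."""

    def __init__(self, length):
        self.samples = [0.0] * length

    def record(self, signal, start=0):
        """Add `signal` into the table from `start`, wrapping at the end."""
        for i, value in enumerate(signal):
            self.samples[(start + i) % len(self.samples)] += value

    def play(self, n):
        """Return `n` samples, looping over the table as often as needed."""
        return [self.samples[i % len(self.samples)] for i in range(n)]


table = LoopTable(4)
table.record([1.0, 1.0])                # first recording
table.record([0.5, 0.5, 0.5], start=1)  # second one overlaps the first
print(table.play(6))
```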

Audio recording [tabwrite~] + [tabplay~] looped + [r Channel-Push]

Python Opt_flow_py + video input

Python Opt_flow_py + OSC + Pd + tabwrite + tabplay
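The page does not show how the Python script talks to Pd, so the following is only a sketch of one possible route: encoding an OSC message by hand with the standard library and sending it over UDP. The address `/flow`, the port 9001 and the function names are assumptions. On the Pd side, something like [netreceive -u -b] followed by [oscparse] could receive it, though the actual patch may well differ.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii")
    return data + b"\x00" * (4 - len(data) % 4)


def encode_osc(address, *values):
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", float(v)) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(address, values, host="127.0.0.1", port=9001):
    """Send one OSC message over UDP (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc(address, *values), (host, port))


# Example: an (x, y) motion vector, e.g. averaged from optical flow.
message = encode_osc("/flow", 1.0, 2.0)
print(len(message))
```

Calling `send_osc("/flow", [dx, dy])` once per video frame would then stream the motion values to Pd, where they could trigger recording and playback.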