User:Jules/eyearchiveprocess: Difference between revisions

No edit summary |

|||

| (2 intermediate revisions by the same user not shown) | |||

| Line 11: | Line 11: | ||

<small>For the project with Thomas for the EYE research lab, I had to get the names of all the people listed within the EYE's archive database and know what activity lead them to enter this database. Therefore any actor, cameraman, what so ever should have been listed for the project. If it seemed like a quite simple idea, getting there hasn't been easy. The EYE archive employees have kindly tried to help me by manually making a few requests through the interface and exporting some excel spreadsheets for me. However, these were not complete and not so practical to deal with for what I wanted to do. If I wanted to get the identity of “all the people” within the database I had to proceed differently. Finding out how to get there was a bit long and complicated for me, as I am not acquainted with big volumes of data, nor with these abstract structures. The whole process of getting there was interesting and I had my few moments of epiphany, like when I discovered how to loop a regex over a batch of 74 files, instead of having to do it by hand.</small> | <small>For the project with Thomas for the EYE research lab, I had to get the names of all the people listed within the EYE's archive database and know what activity lead them to enter this database. Therefore any actor, cameraman, what so ever should have been listed for the project. If it seemed like a quite simple idea, getting there hasn't been easy. The EYE archive employees have kindly tried to help me by manually making a few requests through the interface and exporting some excel spreadsheets for me. However, these were not complete and not so practical to deal with for what I wanted to do. If I wanted to get the identity of “all the people” within the database I had to proceed differently. Finding out how to get there was a bit long and complicated for me, as I am not acquainted with big volumes of data, nor with these abstract structures. 
The whole process of getting there was interesting and I had my few moments of epiphany, like when I discovered how to loop a regex over a batch of 74 files, instead of having to do it by hand.</small> | ||

| Line 16: | Line 17: | ||

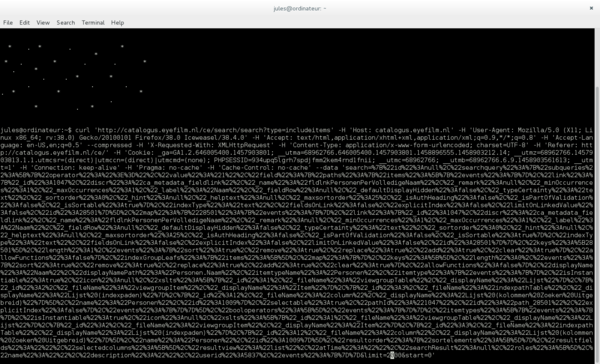

<small>A first issue encountered while trying to get the data was that the interface would not enable me to do big requests. Maximal export is limited to 2000 entries and a query for all the persons names returns more than 145664 items. Also, exports are always preformatted in the shape of an html table, with a lot of formatting that wasn't necessary in this case, including the overall data I didn't need. Therefore I had to do a direct cURL request through the Terminal. This enabled to specify a starting number and a limit. To be kind to the server, I extracted 74 text files of 2000 entries each. | <small>A first issue encountered while trying to get the data was that the interface would not enable me to do big requests. Maximal export is limited to 2000 entries and a query for all the persons names returns more than 145664 items. Also, exports are always preformatted in the shape of an html table, with a lot of formatting that wasn't necessary in this case, including the overall data I didn't need. Therefore I had to do a direct cURL request through the Terminal. This enabled to specify a starting number and a limit. To be kind to the server, I extracted 74 text files of 2000 entries each. | ||

[[File:Curleyedb.png|600px]] | |||

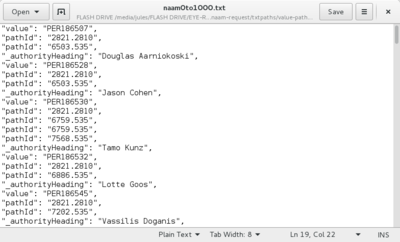

To understand the organisation of the files I got, I had to look a a few items and compare the raw file and the information presented to me over the Interface. The names appeared as “_authorityHeadings”. They also all had a unique identifier called “value”. As I couldn't see the jobs names appearing, I searched for a pattern that could link two entries under the similar job and that was recurring twice if the job was mentioned twice on the interface. After a while, I realised that pathIds were those digit combination by which a suffix referred to a common class. The suffix .535 seemed to refer to the job. So I trimmed all the files to only preserve “value”, “pathIds” and “_authorityHeading”. | To understand the organisation of the files I got, I had to look a a few items and compare the raw file and the information presented to me over the Interface. The names appeared as “_authorityHeadings”. They also all had a unique identifier called “value”. As I couldn't see the jobs names appearing, I searched for a pattern that could link two entries under the similar job and that was recurring twice if the job was mentioned twice on the interface. After a while, I realised that pathIds were those digit combination by which a suffix referred to a common class. The suffix .535 seemed to refer to the job. So I trimmed all the files to only preserve “value”, “pathIds” and “_authorityHeading”. | ||

[[File:Value-path-id.png|400px]] | |||

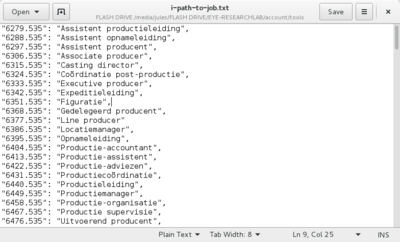

Then, having pathIds was not enough, so I made a new file compiling all the pathIds from the 74 files (hundreds of thousands of entries), and sort them out to preserve unique identifiers. It seemed like there was 165 of them. Not too sure how to automate the association with the job titles they referred to, I manually went through a few files to cross information, and worked with the interface. If one person only had one path id, over the interface one Job over one film would show up. I usually crossed through 3 entries to make sure I had enough confirmation. This enabled me to get some dictionary. Alongside, I made a file from the raw data listing all unique names. So I could compare whether I had kept all names and all jobs once the huge file with everything would be done. | Then, having pathIds was not enough, so I made a new file compiling all the pathIds from the 74 files (hundreds of thousands of entries), and sort them out to preserve unique identifiers. It seemed like there was 165 of them. Not too sure how to automate the association with the job titles they referred to, I manually went through a few files to cross information, and worked with the interface. If one person only had one path id, over the interface one Job over one film would show up. I usually crossed through 3 entries to make sure I had enough confirmation. This enabled me to get some dictionary. Alongside, I made a file from the raw data listing all unique names. So I could compare whether I had kept all names and all jobs once the huge file with everything would be done. | ||

[[File:Pathtojob.png|400px]] | |||

I was then able to replace the pathIds with job titles over the 74 files, with a python script in which I used my conversion file as a dictionary. Then, I transformed the files to make them look like json files using a lot of regular expressions, looping through them. | I was then able to replace the pathIds with job titles over the 74 files, with a python script in which I used my conversion file as a dictionary. Then, I transformed the files to make them look like json files using a lot of regular expressions, looping through them. | ||

| Line 28: | Line 37: | ||

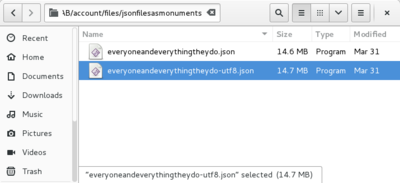

Then, I finally had my json, which happened to be massive. | Then, I finally had my json, which happened to be massive. | ||

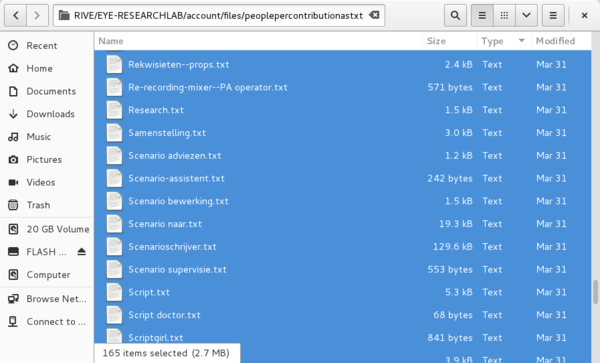

To get all the people's names sorted per “contribution” (or job), I wrote a quick script enabling me to loop within all the files. If a job was mentioned in the list of jobs of any individual, it would extract the name and put it in a file named after the job title (if a word was present in the “contribution” array, contribution is the name I gave to this array of substituted pathIds). I ended up with a folder of 165 text files, named after the job titles, compiling every individuals name.</small> | |||

[[File:Bigjson.png|400px]] | |||

To get all the people's names sorted per “contribution” (or job), I wrote a quick script enabling me to loop within all the files. If a job was mentioned in the list of jobs of any individual, it would extract the name and put it in a file named after the job title (if a word was present in the “contribution” array, contribution is the name I gave to this array of substituted pathIds). I ended up with a folder of 165 text files, named after the job titles, compiling every individuals name. | |||

[[File:Peoplepercontribution.png|600px]] | |||

</small> | |||

====<span style="background-color:yellow">Personal thoughts</span>==== | ====<span style="background-color:yellow">Personal thoughts</span>==== | ||

Latest revision as of 17:22, 1 April 2016

- Some files I have generated: http://pzwart1.wdka.hro.nl/~jules/EYE-RESEARCHLAB/files/

- Some tools I have used: http://pzwart1.wdka.hro.nl/~jules/EYE-RESEARCHLAB/tools/

- The whole record: http://pzwart1.wdka.hro.nl/~jules/EYE-RESEARCHLAB/files/0-printablemonument.pdf

How to find all the people in the EYE's archive

For the project with Thomas for the EYE research lab, I had to get the names of all the people listed in the EYE's archive database and find out what activity led them to be entered in it. Any actor, cameraman or whoever else should therefore have been listed for the project. Although it seemed like quite a simple idea, getting there wasn't easy. The EYE archive employees kindly tried to help me by manually making a few requests through the interface and exporting some Excel spreadsheets for me. However, these were not complete and not very practical for what I wanted to do. If I wanted to get the identity of “all the people” in the database, I had to proceed differently. Finding out how to get there was a bit long and complicated for me, as I am not acquainted with big volumes of data, nor with these abstract structures. The whole process was interesting and I had my few moments of epiphany, like when I discovered how to loop a regex over a batch of 74 files instead of having to do it by hand.

Diving into the database

A first issue I encountered while trying to get the data was that the interface would not let me make big requests. The maximal export is limited to 2000 entries, and a query for all the people's names returns more than 145664 items. Also, exports are always preformatted as an HTML table, with a lot of formatting that wasn't necessary in this case and a lot of data I didn't need. Therefore I had to make a direct cURL request through the Terminal. This let me specify a starting number and a limit. To be kind to the server, I extracted 74 text files of 2000 entries each.
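A minimal sketch of how the 74 requests could be prepared, assuming the server accepts a start offset and a limit as query parameters. The URL, the parameter names `start` and `limit`, and the output file names are placeholders for illustration, not the EYE database's real interface.

```python
LIMIT = 2000     # maximal number of entries per request
N_FILES = 74     # number of text files extracted

# Placeholder endpoint: the real URL and parameter names are not given here.
BASE_URL = "https://example.org/api/persons"


def batches(n_files, limit):
    """Return one (start, limit) pair per file, in order."""
    return [(index * limit, limit) for index in range(n_files)]


def curl_command(index, start, limit):
    """Build the cURL command line that would save one batch to a text file."""
    url = f"{BASE_URL}?start={start}&limit={limit}"
    return f'curl "{url}" -o batch-{index:02d}.txt'


pages = batches(N_FILES, LIMIT)
commands = [curl_command(i, start, limit) for i, (start, limit) in enumerate(pages)]
```

The commands are only built as strings here, so they can be checked by eye and then run one at a time with a pause in between, which keeps the load on the server low.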

To understand the organisation of the files I got, I had to look at a few items and compare the raw file with the information presented to me in the interface. The names appeared as “_authorityHeadings”. They also all had a unique identifier called “value”. As I couldn't see the job names appearing, I searched for a pattern that could link two entries sharing the same job and that recurred twice if the job was mentioned twice in the interface. After a while, I realised that pathIds were digit combinations whose suffix referred to a common class. The suffix .535 seemed to refer to the job. So I trimmed all the files to preserve only “value”, “pathIds” and “_authorityHeading”.

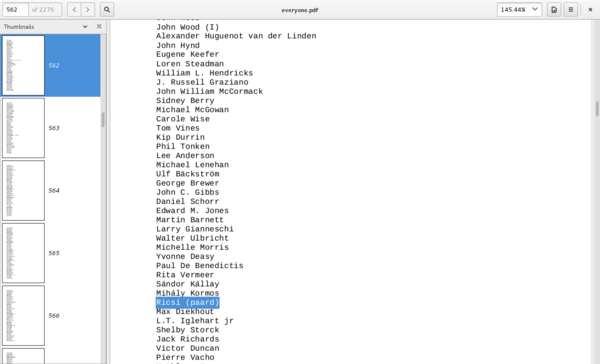

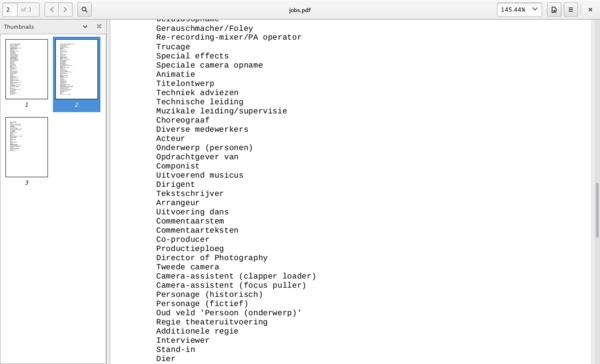

Having the pathIds was not enough, so I made a new file compiling all the pathIds from the 74 files (hundreds of thousands of entries) and sorted them to keep only the unique identifiers. It seemed like there were 165 of them. Not too sure how to automate the association with the job titles they referred to, I manually went through a few files to cross-check information against the interface. If a person had only one pathId, the interface would show one job on one film. I usually cross-checked 3 entries to make sure I had enough confirmation. This gave me a kind of dictionary. Alongside, I made a file from the raw data listing all unique names, so that I could check whether I had kept all names and all jobs once the huge file with everything was done.
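The collection of unique pathIds could look roughly like the sketch below. It assumes a pathId is written as groups of digits joined by dots (such as something ending in `.535`); the sample lines and their values are invented for illustration and do not come from the real files.

```python
import re

# Assumed shape of a pathId: digit groups separated by dots, e.g. "12.535".
PATH_ID = re.compile(r"\d+(?:\.\d+)+")


def unique_path_ids(texts):
    """Collect every distinct pathId found in an iterable of text chunks."""
    found = set()
    for text in texts:
        found.update(PATH_ID.findall(text))
    return sorted(found)


# Invented sample lines standing in for the trimmed files.
sample = [
    '"value": 1001, "pathIds": ["12.535", "48.535"], "_authorityHeading": "Person A"',
    '"value": 1002, "pathIds": ["12.535"], "_authorityHeading": "Person B"',
]

ids = unique_path_ids(sample)
```

With the real data, `texts` would be the contents of the 74 files read one after another, and the length of the result would give the number of distinct jobs to look up.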

I was then able to replace the pathIds with job titles across the 74 files, with a Python script in which I used my conversion file as a dictionary. Then I transformed the files to make them look like JSON files, using a lot of regular expressions and looping through them.
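As an illustration of the substitution step, here is a small sketch that swaps each pathId for its job title using a dictionary. It is not the original script: the pathId pattern and the identifiers in the example mapping are assumptions, and only the job titles “Acteur” and “Dier” are taken from the database.

```python
import re

# Assumed shape of a pathId: digit groups separated by dots.
PATH_ID = re.compile(r"\d+(?:\.\d+)+")


def replace_path_ids(text, id_to_job):
    """Replace every known pathId in `text` with its job title.

    Unknown pathIds are left untouched so that they can be spotted later.
    """
    def lookup(match):
        return id_to_job.get(match.group(0), match.group(0))

    return PATH_ID.sub(lookup, text)


# Hypothetical conversion table: the identifiers are made up.
id_to_job = {"12.535": "Acteur", "48.535": "Dier"}

line = '"pathIds": ["12.535", "48.535", "99.535"]'
converted = replace_path_ids(line, id_to_job)
```

Leaving unknown identifiers in place, rather than deleting them, makes gaps in the conversion file easy to find with a simple search afterwards.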

Once I had clean JSON files, another issue was that I had a lot of double entries. Not knowing how to proceed better (perhaps a bit too lazy to find a proper solution), I used Sublime Text and merged them manually. I removed all the commas to get there quicker and replaced each of the 165 jobs repeated over two lines with a single occurrence of the same name. So the number of duplicates was halved with each pass until there was no match anymore. I also realised that some people had no job for some reason, and that this wasn't my mistake but came from the database (they may have been involved differently). So I gave these people a “non identified” mention in the “contribution” array.
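The manual clean-up could also be scripted. The sketch below is one possible way, assuming each person is a dictionary with a “contribution” list; the `name` key and the sample records are hypothetical.

```python
def clean_contributions(person):
    """Return a copy of `person` with duplicate jobs removed.

    The order of first appearance is kept, and a person without any job
    gets the "non identified" mention.
    """
    jobs = list(dict.fromkeys(person.get("contribution", [])))
    if not jobs:
        jobs = ["non identified"]
    return {**person, "contribution": jobs}


# Invented records for illustration.
people = [
    {"name": "Person A", "contribution": ["Acteur", "Acteur", "Dier", "Acteur"]},
    {"name": "Person B", "contribution": []},
]

cleaned = [clean_contributions(person) for person in people]
```

`dict.fromkeys` keeps the first occurrence of each job in order, so one pass is enough however many times a job is repeated.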

Then, I finally had my json, which happened to be massive.

To get all the people's names sorted per “contribution” (or job), I wrote a quick script that loops through all the files. If a job was mentioned in an individual's list of jobs, that is, if the word was present in the “contribution” array (the name I gave to the array of substituted pathIds), the script would extract the name and put it in a file named after the job title. I ended up with a folder of 165 text files, named after the job titles, each listing every matching individual's name.
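A sketch of this last step, under the assumption that each record is a dictionary with a name and a “contribution” list. The `name` key, the function names and the sample records are hypothetical, and this is not the original script.

```python
from collections import defaultdict
from pathlib import Path


def names_per_contribution(people):
    """Group the names by job: {job title: [names, ...]}."""
    groups = defaultdict(list)
    for person in people:
        for job in person["contribution"]:
            groups[job].append(person["name"])
    return dict(groups)


def write_groups(groups, folder):
    """Write one text file per job title, with one name per line."""
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    for job, names in groups.items():
        # Assumes job titles contain no characters that are invalid in file names.
        (folder / f"{job}.txt").write_text("\n".join(names) + "\n", encoding="utf-8")


# Invented records for illustration.
people = [
    {"name": "Person A", "contribution": ["Acteur", "Dier"]},
    {"name": "Person B", "contribution": ["Acteur"]},
    {"name": "Person C", "contribution": ["non identified"]},
]

groups = names_per_contribution(people)
```

Calling `write_groups(groups, "per-contribution")` would then create the folder of text files, one per job title.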

Personal thoughts

The first thing I must say about the process is that it was physically draining. Because I had to find each solution step by step, I probably spent extra days on it just finding solutions. It occurred to me that navigating such big amounts of data and trying to make sense of them was, for me, a real physical confrontation: my back hurts and I have scintillating scotomas.

I couldn't always treat the whole batch of data in one go because I needed to comprehend it. I needed to be fully involved in every step to understand how my process would unfold (manual labour also enables better familiarisation). That enabled me to spot a lot of traces of other humans' mistakes within the records. Sometimes names are doubled because one person put an accent on a surname while another didn't. I think that botching the job is unavoidable at scales of which an individual cannot get a comprehensible overview. I also realised as I was finishing that about 40 new names had been added since I started, so I cannot even be accurate myself.

Another thing I really liked in doing this was the flatness of the hierarchy within the two lists I got, names and roles. Julie Andres, King Edward VIIIth, Josephine the Chimpansee, Mickey Mouse and Sarah Michelle Gellar were all equally persons.

Also, I realised that Acteur was a job of the same value as being a fictional or historical character, also equivalent to being a director or . It also seems that “Dier”, meaning being an animal, is a person's job according to the database structure. I thought that this was a nice story of hierarchy removal: the rights of social rank fade away where the rules of database efficiency prevail.

What we do next

Firstly, a hard copy of these documents should be given back to Anke Bel during the ceremony, as a stack of sheets. A stack of sheets with all the names is perhaps a good way to translate quantity from something abstract into something more directly understandable. We have all experienced the weight of a ream, the thickness of a single sheet, the cost of ink cartridges, the amount of time printing takes, the impossibility of reading them all or spreading them on a table to get a proper overview... The accumulation of printed matter seems like a good translator of the physical intensity (or heaviness) of navigating such a vast quantity of information and trying to make sense of it in its very basic form. Another thing I like about it is that it shows analogies with memorials to people whose lives (or, most probably, deaths) converged in one single event at one place (like a war). These monuments usually confront an individual viewer with the scale over which people's names are spread. If a lot of people died, what you get is a sense of being tiny, and you can physically compare yourself to how many people these names would convert to. This hard record can therefore function as a sculpture and a memorial.

Then, I also made a quick Python script to generate a video from a text file: