User:Manetta/self-initiated-shift


Revision as of 09:46, 3 June 2015

shifts

shift to a new set of tools

Having been educated to use the Adobe software package in the Mac OS X environment, I produced and transmitted my former work through InDesign, Photoshop, After Effects, Dreamweaver and, from time to time, a little Illustrator. Alongside these five giants, my toolset included basic HTML+CSS, and Fontlab, a piece of software for making typefaces and, for example, 'dynamic' ligatures.

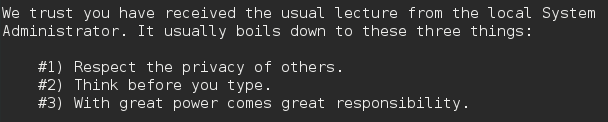

In the last 6 months, this set of tools has mainly been replaced by a new set of open-source tools (Scribus, Gimp, ImageMagick, Kdenlive) and supplemented with a programming language called Python. Meanwhile I have been working in a new environment: Debian (a distribution of the open-source operating system Linux), which involves using the terminal. Working within a very visual environment shifted towards working within text-based interfaces. The conveniences and experiences that I built up in the Mac environment haven't disappeared, obviously. They are still present in the routes I take to search for options and tools I relied on before. Still, the new environment offers me a very different way of approaching design, based on thinking in structures rather than in visual compositions and their connotations.

There are several reasons for this shift of operating system. The main one was rather practical and related to a change in work method. Linux was developed in the early 1990s by Linus Torvalds and comes out of a community of coders. The system is therefore built to be friendly to code-related work methods, but it also asks for a bit more involvement when installing new programs or components on your operating system. Features such as the 'split screen' also make it very comfortable to work back and forth between a text editor and a browser.

Secondly, the open-source community holds a set of cultural principles that I feel attracted to. One is the importance of distribution instead of centralization. Another is the aim of making information (also called knowledge) available to everyone, in the sense that it should be not only available but also legible. A third is the openness of software packages, which makes it possible to dig into the files a piece of software uses to function.

Using Linux is a way of not being fully dependent on the mega-corporations. My private email address is still stored on servers owned by Google, my Facebook account is still there, and I'm following a set of people on Twitter. My professional toolset, however, no longer depends on the decisions that Apple and Adobe will make in the future. That is a relief.

shift to design-perspective

from the book 'A not B', by Uta Eisenreich

[intro]

In the last 6 months, I've been looking at various tools that contain linguistic systems. From speech-to-text software to text-mining tools, they all systemize language in their own ways in order to understand natural language, as human language is called in computer science. These tools fall under the term 'Natural Language Processing' (NLP), which is a field of computer science closely related to Artificial Intelligence (AI).

As ...

(personal interest in human to computer interaction.....?)

...

Examples of such linguistic tools are the following:

- alternative counting systems to represent color (hexadecimal - a numeral system with a base of 16: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F)

- transcribing the human voice into written language (CMU Sphinx - speech-to-text software)

- mimicking the human voice (text-to-speech software)

- reading written text from digital images of documents (Tesseract - optical character recognition software)

- using semantic structures as a linking system (the idea of the Semantic Web - a metadata method of linking information)

- using subtitle files as an editing resource (Videogrep - a video editor based on different types of search queries)

- working with text parsing (recognizing word types), machine learning (training algorithms with annotated example data) and text mining (recognizing certain patterns in text) (Pattern - a text-mining tool)

- representing lexical structures and relationships in a dataset (WordNet - a lexical database)
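Hexadecimal color codes, as used in HTML+CSS, write the red, green and blue channels as three two-digit base-16 numbers. Each channel ranges from 00 (0) to FF (255). The following is a minimal Python sketch of the conversion in both directions. The function names are hypothetical and chosen only for illustration.

```python
HEX_DIGITS = "0123456789ABCDEF"  # the sixteen symbols of base 16

def hex_to_rgb(code: str) -> tuple[int, int, int]:
    """Convert a color such as '#FF8000' into decimal (red, green, blue) values."""
    digits = code.lstrip("#").upper()
    if len(digits) != 6 or any(c not in HEX_DIGITS for c in digits):
        raise ValueError(f"not a six-digit hex color: {code!r}")
    # Each pair of digits is one channel: first digit * 16 + second digit.
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return r, g, b

def rgb_to_hex(r: int, g: int, b: int) -> str:
    """Write each 0-255 channel back as two uppercase hexadecimal digits."""
    if not all(0 <= v <= 255 for v in (r, g, b)):
        raise ValueError("channels must be between 0 and 255")
    return "#{:02X}{:02X}{:02X}".format(r, g, b)

print(hex_to_rgb("#FF8000"))    # (255, 128, 0)
print(rgb_to_hex(255, 128, 0))  # #FF8000
```

Reading FF as 15 × 16 + 15 = 255 is the whole trick. Each position counts sixteen times more than the one to its right, just as each decimal position counts ten times more.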

[approaches, ways of working with them]

(method of opening them up)

As the tools I looked at were mainly open source, I could dig into the software files and observe the formats that are used to systemize a language. When looking into the speech-to-text tool Sphinx, for example, I explored which dictionary format is used and how these dictionaries are constructed.

This has been a rather formal way of approaching them, in the sense that it was an act of observing the different language systemizations.

(method of adapting them in projects)

During the last 6 months I've also been looking at the subjects of AI and interfaces. Both are close to the issues that come up when looking at systemized languages: the phenomena of invisibility and naturalization seem to be two small umbrellas that cover all three subjects.

It was somewhat by coincidence that I stumbled upon a device called 'Amazon Echo', an artificial intelligence machine meant to be placed in one's living room. It uses speech-to-text recognition to receive voice commands, linked data to connect the requested information sources, and text-to-speech to reply to the user's requests. Echo seemed to be a device in which different language systemizations came together.

We could consider Echo as an interface in two ways. First, Echo has a physical interface: a black, cylinder-shaped object meant to be placed in a corner of one's living room. Second, there is a virtual interface, materialized through the voice and presented to the user in the form of a human simulation. The physical interface attempts to become invisible, as the black 'pipe' is not designed to be very present in one's house. Echo is small, and its black color, clear geometric shape and roughness are characteristics that 'common' audio devices share. (example image?) Echo is close to its users, so close that it is easy to forget about it. That doesn't mean the machine is less present; rather the opposite. The virtual interface of Echo is designed so that the user becomes naturalized to the system, letting Echo become part of one's natural surroundings. The device performs through speech, 'real-time' information delivery, and an increasing amount of knowledge about one's contacts and behavior.

To put these AI characteristics in a bigger context, my next step was to see how AI-related topics are depicted in popular cinematic culture. How have these kinds of AI interfaces been depicted over the years? I looked at five iconic science-fiction films in which AI machines play the main character. My selection of 'popular' AI interfaces consisted of:

- HAL (from 2001: A Space Odyssey, 1968)
- Gerty (from Moon, 2009)
- Samantha (from Her, 2013)
- Ash (from 'Be Right Back', Black Mirror, 2013)
- David (from Steven Spielberg's A.I. Artificial Intelligence, 2001)

Stating the years of the films seems quite important. They show how early AI machines already appeared in film and in which context these films were made. HAL was made in 1968, one year before the first man landed on the moon. However, this wasn't the departure point from which I was interested in approaching the subject of AI.

An AI interface mimics a human being, but how does that influence the way you communicate with it? And how is the user actually adapting his own system to the machine's system? How is the relation between computer and user shaped by the fact that the interface is a human simulation?

(short conclusion?)

In a certain sense, human-to-hardware interface gestures like clicking and swiping could also be considered language systemizations. Together with three classmates (Cristina Cochior, Julie Boschat Thorez & Ruben van de Ven) I've been considering the ideologies related to these two gestures and how they could be visualized. This resulted in a browser-based game called 'fingerkrieg', in which the player is challenged to click or swipe as many times as possible in 15 seconds. Clicking could be considered a binary gesture. Swiping, by contrast, is a rather new gesture designed to naturalize the interaction between a user and a device. In that sense, these two interfaces contain language systemizations: they offer or limit certain possibilities that are fixed within the designed system.

[motivation to work on systemized languages]

The presence of machines nowadays, and the difficulties that arise when positioning them in a human-centered world.

The naturalization of machines, and the invisibility of their interfaces.

Linguistic systems are built to let the computer understand human language, but that understanding already consists of looking for correlations in data. Hence these systems will always be uncertain and inaccurate.

The fact that semantic meaning is constructed through these language structures and systems. Examples include n-grams, valued dictionary files, JSGF grammar files, machine-learning algorithms and, most recently, deep learning. Signification isn't derived from the objects themselves, but rather from the system they are put in.
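An n-gram model makes this point concrete. A bigram model never looks at what a word refers to. It only counts which words follow which in a corpus, so any 'meaning' it assigns to a word comes entirely from that counting system. The following is a minimal Python sketch using plain maximum-likelihood estimates with no smoothing. The toy corpus and the function names are made up for illustration.

```python
from collections import Counter

def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return every run of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def next_word_probabilities(tokens: list[str], word: str) -> dict[str, float]:
    """Estimate P(next | word) from bigram counts; empty if the word is never followed."""
    followers = Counter(b for a, b in ngrams(tokens, 2) if a == word)
    total = sum(followers.values())
    return {w: count / total for w, count in followers.items()} if total else {}

corpus = "the cat sat on the mat and the cat slept".split()
print(ngrams(corpus, 2)[:3])  # [('the', 'cat'), ('cat', 'sat'), ('sat', 'on')]
print(next_word_probabilities(corpus, "the"))  # 'cat' follows 'the' twice, 'mat' once
```

In this corpus, 'cat' follows 'the' twice and 'mat' once, so the model gives 'cat' a probability of 2/3 after 'the'. That value says nothing about cats and everything about the corpus the system was given.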

[subjects related to systemized languages]

- universal language

- classification & taxonomies/ontologies

- the problem of a one-sided, fixed truth that represents 'common sense'

[relation to design background]

Working from the perspective of my graphic design background and combining it with my interest in systemized languages could bring me closer to developing a more specific research topic.

Graphic design is a multi-layered methodology for communication that considers 'order', 'legibility', 'composition' and 'connotation', among many other elements that could be part of this list.

(some notes on design from 2009: http://manettaberends.nl/ThoughtsAboutDesign.html)