User:Manetta/self-initiated-shift

shifts

shift to a new set of tools

Having been trained to use the Adobe software suite on Mac OS X, I produced my earlier work through InDesign, Photoshop, After Effects, Dreamweaver and, from time to time, a little bit of Illustrator. Next to these five giants, my toolset was supplemented with a basic set of HTML and CSS, and with FontLab, a piece of software for making typefaces and (for example) 'dynamic' ligatures.

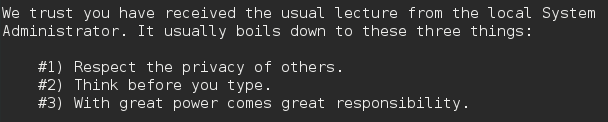

In the last 6 months, this set of tools has mainly been replaced with a new set of open-source tools (Scribus, GIMP, ImageMagick, Kdenlive) and supplemented with a programming language called Python, while working in the new environment of Debian (a distribution of the open-source operating system Linux), which involves using the terminal. Working within a very visual environment has shifted towards working within text-based interfaces. The conveniences and experience that I built up in the Mac environment haven't disappeared (obviously), as they are still present in the routes I take to search for the options and tools I relied on before. Still, the new environment offers me a very different way of approaching design, based on thinking in structures rather than in visual compositions and their connotations.

There are different arguments for this shift of operating system. The main reason was a rather practical one, related to a shift in working method. Linux was first developed in the early 1990s by Linus Torvalds, and comes from a community of coders. The system is therefore built to be friendly to code-related working methods, but it also asks for a bit more involvement when installing new programs or components on your operating system. Linux features such as the 'split screen' also make it very comfortable to work back and forth between a text editor and a browser.

Secondly, there is a certain set of cultural principles present in the open-source community that I feel attracted to: the importance of distribution instead of centralization, the aim of making information (also called knowledge) available to everyone (in the sense that it should be not only available but also legible), and the openness of the software packages, which makes it possible to dig into the files a piece of software uses to function.

Using Linux is a way of not being fully dependent on the mega-corporations. Although my private email address is still stored on servers owned by Google, my Facebook account is still there, and I'm following a set of people on Twitter, my professional toolset no longer depends on the decisions that Apple and Adobe will make in the future. That is a relief.

shift to design-perspective

from the book 'A not B', by Uta Eisenreich

For in those realms machines are made to behave in wondrous ways, often sufficient to dazzle even the most experienced observer. But once a particular program is unmasked, once its inner workings are explained in language sufficiently plain to induce understanding, its magic crumbles away; it stands revealed as a mere collection of procedures, each quite comprehensible. The observer says to himself "I could have written that". With that thought he moves the program in question from the shelf marked "intelligent" to that reserved for curios, fit to be discussed only with people less enlightened than he. (Joseph Weizenbaum, 1966)

[intro]

In the last 6 months, I've been looking at different tools that contain linguistic systems. From speech-to-text software to text-mining tools, they all systemize language in various ways in order to understand natural language, as human language is called in computer science. These tools fall under the term 'Natural Language Processing' (NLP), a field of computer science that is closely related to Artificial Intelligence (AI).

Examples of such linguistic tools are the following:

- interpreting the human voice into written language (CMU Sphinx - speech to text software)

- reading words and sentences, and mimicking human speech (text to speech software)

- interpreting visual characters into a written text (Tesseract - character recognition software)

- using a semantic metadata standard to link information (the idea of the Semantic Web - linked data by using RDF)

- using subtitle files as editing resource (Videogrep - a video editor based on different types of search-queries)

- alternative counting systems, to represent color in webpages (Hexadecimal - a numeral system with a base of 16: 0,1,2,3,4,5,6,7,8,9,A,B,C,D,E,F)

- working with text parsing (recognizing word-types), machine learning (training algorithms with annotated example data), and text mining (recognizing certain patterns in text) (Pattern - a text mining tool)

- representing lexical structures and relationships in a dataset (WordNet - a lexical database)
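
To make the hexadecimal item in the list above a bit more concrete, here is a minimal Python sketch of how a web color code can be read as three base-16 numbers. The function name `hex_to_rgb` and the example color are only illustrative assumptions, not part of any of the tools mentioned.

```python
def hex_to_rgb(color):
    """Convert a web color such as '#FF8000' into a (red, green, blue) tuple."""
    digits = color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hexadecimal digits, e.g. '#FF8000'")
    # Each pair of base-16 digits encodes one color channel from 0 to 255.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


# Hypothetical example color: FF = 255, 80 = 128, 00 = 0.
print(hex_to_rgb("#FF8000"))  # (255, 128, 0)
```

The two digits per channel give 16 x 16 = 256 possible values, which is why each channel runs from 0 to 255.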

[approaches, ways of working with them]

(method of opening them up)

As the tools I looked at were mainly open source, I was able to dig into the software files and observe the formats that are used to systemize a language. When looking into the speech-to-text tool 'Sphinx', for example, I explored which dictionary format is used, and how these dictionaries are constructed. Next to the main .dict file, where 'all' possible words in the English dictionary are collected, the software also uses files that try to describe the patterns that occur in the language. By using 'ngrams' to list possible word combinations, and 'JSGF' files to describe a restricted set of possible texts, the software tries to be as accurate in understanding human language as another human being would be.
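
As a rough illustration of the 'ngrams' idea, the following Python sketch counts which pairs of words (bigrams) occur next to each other in a text. It is not the code or the file format that Sphinx itself uses; the helper name `ngrams` and the example sentence are assumptions made up for this sketch.

```python
from collections import Counter


def ngrams(words, n):
    """Return every run of n consecutive words as a tuple."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


# Hypothetical example sentence; real models are built from large text collections.
text = "the cat sat on the mat and the cat slept"
words = text.split()

# Count how often each pair of neighbouring words occurs.
bigram_counts = Counter(ngrams(words, 2))

# The most frequent pairs hint at which word is likely to follow another.
for pair, count in bigram_counts.most_common(3):
    print(" ".join(pair), count)
```

In this small sentence the pair 'the cat' occurs twice and every other pair once, so a system relying on these counts would expect 'cat' after 'the' more readily than 'mat'.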

This formal way of approaching such tools, as an act of observing their architecture, reveals how the field of technology tries to transform a rather analog, gradual and inherently ambiguous system into a digital and mathematical construction.

(method of adapting them in projects)

The paradoxical problems that arise when trying to combine these systems are the main issue that AI has been struggling with since the development of computers in the 60s. A classical optimistic computational question would be: could we make a system that is capable of intelligent behavior, as intelligent as the human brain is? But one could also ask: do we already simplify ourselves in order to level down to the understanding abilities of computers? How do we adapt our systems to the computational ones? And how do interfaces intervene in the way we communicate with them?

There is a similar paradox occurring here as in the situation of transforming a human language into a computational model. The problems that arise here, though, are present on the surface of technology rather than within its architecture. How do users adapt their individual habits and daily labour to a computational system that is built as a universal set of options and restrictions that should suit anyone? How does a user communicate with a system that is purely digital, and so based on numeric decisions and patterns?

Issues of the invisibility of technology and attempts at naturalizing it seem to affect our relation to such technologies strongly. The technology appears to function wondrously, as if it were only a veil that transmits information from one place to another.

It was by a kind of coincidence that I stumbled upon a device called 'Amazon Echo', an artificial intelligence machine that one can put in one's living room. It uses speech-to-text recognition to receive voice commands, linked data to connect the requested information sources, and text-to-speech to reply to the user's requests. Echo seemed to be a device in which different language systemizations came together.

One could look at Echo as an interface in two ways. Echo has a physical interface: it is a black object in the form of a cylinder that should be placed in a corner of one's living room. But there is a virtual interface as well, materialized through the voice and presented to the user in the form of a human simulation. The first one contains an attempt to become invisible, as the black 'pipe' is not designed to be very present in one's house. Echo is small, and its black color, clear geometric shape, and roughness are characteristics that 'common' audio devices share. (example image?) Echo is close to its users, so close that it is easy to even forget about it. This doesn't mean that the machine is less present, though. It is rather the opposite. The virtual interface of Echo is designed so that the user naturalizes with the system, letting Echo become part of one's natural surroundings. The device performs through speech, 'realtime' information deliveries, and an increasing amount of knowledge about one's contacts and behavior.

In order to put these AI characteristics in a bigger context, I decided to look closer at how these AI-related topics were depicted in popular cinematic culture. Five iconic science-fiction films in which AI machines play the main character became my basic set of sources. And so I started to work with Hal (from 2001: A Space Odyssey, 1968), Gerty (from Moon, 2009), Samantha (from Her, 2013), Ash (from Be Right Back, Black Mirror, 2013) and David (from Steven Spielberg's A.I., 2001). As these characters all play the role of an AI machine, they embody the role of an interface in the form of a human simulation. Similar to what happens with the Echo interface, the human simulation functions as the channel through which the user communicates. The AI interfaces disguise their origin as machines. This camouflaging technique brings them close to their users, so close that they become almost invisible and it becomes easy to forget about them. How much does one trust machines? How far can user-friendliness be stretched? Until the machine is almost not present anymore? The AIs are trying to be part of natural human surroundings, through speech, 'realtime' information deliveries, and an increasing amount of knowledge about one's contacts and behavior. How invisible and naturalized do we want technology to be?

In a certain sense, human-to-hardware interface gestures like clicking and swiping could also be considered language systemizations. Together with 3 classmates (Cristina Cochior, Julie Boschat Thorez & Ruben van de Ven), I've been considering the ideologies that relate to these two gestures, and how they could be visualized. It resulted in a browser-based game called 'fingerkrieg', where the player is challenged to click or swipe as many times as possible in 15 seconds. In an introduction to the game we state that clicking could be considered a binary gesture, while swiping is a rather new gesture that is designed to naturalize the interaction a user has with a device. In that sense these two interfaces contain certain language systemizations, as they limit or offer certain possibilities that are fixed in the way the interface can be used.

[motivation to work on systemized languages]

The presence of machines nowadays, and the difficulties that arise when positioning them in a human-centred world.

Metaphors are tools that enable us to speak about abstract elements of the internet. How are they constructed, and what do they refer to?

The contradictory motive of systemizing a language, as the act of making a model is obviously reductive. Which aims counterbalance this reduction? In other words: what purposes are pursued that justify making a simplified version of reality?

The naturalization of machines, and the invisibility of their interfaces.

The fact that the architecture of a tool is disguised by default, which is a motivation to open tools up and reveal their constructions.

Linguistic systems are built to let the computer understand human language. In computer science this is called 'Natural Language Processing' (NLP). Motives to make such a language systemization are paradoxical, as the act of making a model is obviously reductive. Which aims counterbalance this reduction? In other words: what purposes are pursued that justify making a simplified version of reality?

Significance is mainly constructed through the systems and structures that words or objects appear in. Signification isn't derived from the objects themselves, but rather from the system they are put in. (Examples: ngrams, valued dictionary files, JSGF files, machine-learning algorithms and, most recently, deep learning)

[subjects related to systemized languages]

- a universal language

- classification & taxonomies/ontologies

- naturalization & invisibility of technology as ideology

- turning text into numbers, linguistic models, (abstraction)

- text-mining field + (algorithmic) culture

- 'it just works', 'the data speaks', revealing the technology behind an algorithm, constructed truth, common sense as point of departure

- construction of (visual) training-sets, lexicons/large-scale semantic knowledge bases