User:Max Dovey/PT/TRIMESTER 3: Difference between revisions

(→Step4) |

|||

| (15 intermediate revisions by the same user not shown) | |||

| Line 4: | Line 4: | ||

===Around the world in 200 Googles=== | ===Around the world in 200 Googles=== | ||

{{youtube | CoKMPlqG58s}} | |||

<source lang = "python" > | <source lang = "python" > | ||

google.com | google.com | ||

google.ac | google.ac | ||

| Line 225: | Line 226: | ||

action = False | action = False | ||

</source> | </source> | ||

===Bank Of Broken Dreams=== | |||

===Process Documentation=== | |||

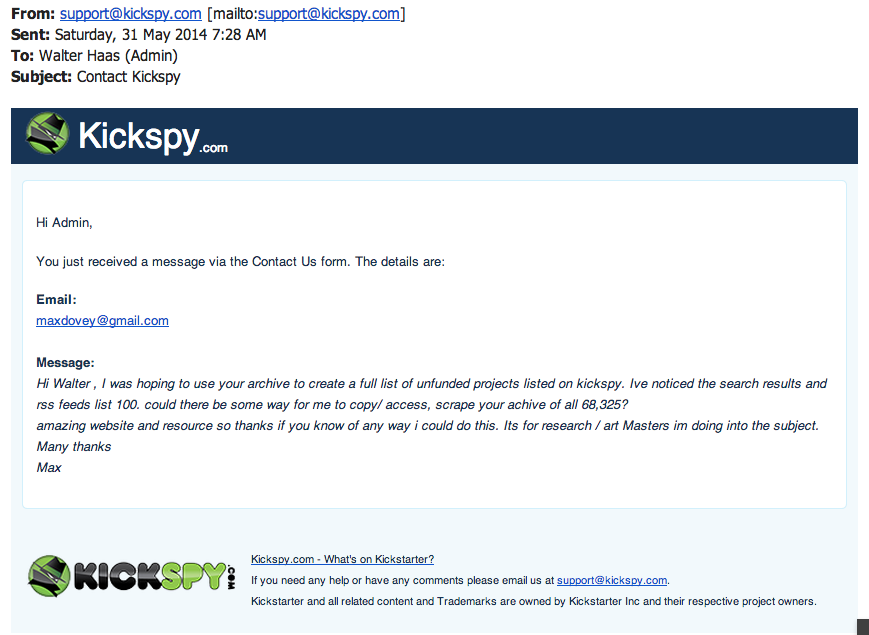

[[File:Walter1.png| 600 x 450]] | |||

[[File:Walter2.png| 600 x 450]] | |||

===STEP 1 === | |||

Make a list of all URLS of failed kickstarter projects. The script below grabs all links from every category (over 150) that are failed using the prefix ''http://www.kickspy.com/browse/all-failed/'' | |||

The output is a txt file 'kickurlscate.txt' that has 6000 failed projects from all the different categories, taking the maximum (100) failed projects from each category. | |||

<source lang = "python"> | |||

import urllib2 | |||

from bs4 import BeautifulSoup | |||

import requests | |||

import time , re | |||

from itertools import izip | |||

import csv | |||

from urlparse import urljoin, urldefrag | |||

s = open('allfailedurls.txt', 'rb') #list of urls of all categories | |||

startlist= [] | |||

urllist = [] | |||

cleanurls = [] | |||

prefix = "http://www.kickspy.com" | |||

f = open('kickurlscate.txt', "a") #write links to a text file for scraping later! | |||

for line in s: | |||

startlist.append(line) | |||

limit = len(startlist) | |||

print limit | |||

counter = 0 | |||

for i in startlist: | |||

page = urllib2.urlopen(i).read() | |||

soup = BeautifulSoup(page) | |||

counter +=1 | |||

print counter | |||

if counter > limit: | |||

break | |||

else: | |||

#Grab all links from start beginnning with projects and append them to urllist | |||

for a in soup.find_all('a', href=re.compile ("/projects")): | |||

l = (a.get('href')) | |||

c = urljoin(prefix, l) | |||

urllist.append(c) | |||

print c | |||

f.write(c) | |||

f.write("\n") | |||

</source > | |||

example output >>> | |||

<source lang = "python"> | |||

http://www.kickspy.com/projects/artastictime/artastic-time | |||

http://www.kickspy.com/projects/561192189/epermanent | |||

http://www.kickspy.com/projects/salgallery/marseille-art | |||

http://www.kickspy.com/projects/htavos/kooky-plush-monsters-and-more | |||

http://www.kickspy.com/projects/18521543/dingbats-handmade-treasures-farmers-market-booth | |||

</source> | |||

===Step 2=== | |||

The script below is a basic scaper that goes through the list of failed kickstarter pages and extracts the title, description and category information from the page and saves in csv format. | |||

<source lang = "python"> | |||

import urllib2 | |||

from bs4 import BeautifulSoup | |||

import requests | |||

import time , re | |||

from itertools import izip | |||

import csv | |||

from urlparse import urljoin, urldefrag | |||

startlist = [] | |||

title = [] | |||

desc = [] | |||

genre = [] | |||

counter = 0 | |||

s = open("fullcleanurls.txt", "r").readlines() | |||

for line in s: | |||

startlist.append(line) | |||

limit = len(startlist) | |||

print limit | |||

#iterate through urllist and scrape title, info etc | |||

for i in startlist[0:500]: #limits for procarity | |||

counter +=1 | |||

page = urllib2.urlopen(i).read() | |||

soup = BeautifulSoup(page) | |||

soup.prettify() | |||

print counter | |||

if counter > limit: | |||

break | |||

else: | |||

a = soup.find("h1").text.encode('utf-8') | |||

b = soup.find("div", {"class" : "description"}).text.encode('utf-8') | |||

c = soup.find("div", {"class" : "category"}).text.encode('utf-8') | |||

title.append(a) | |||

desc.append(b) | |||

genre.append(c) | |||

with open('fullkicklist2.csv', 'wb') as f: | |||

writer =csv.writer(f) | |||

writer.writerows(izip(title, desc, genre)) | |||

time.sleep(1) | |||

</source> | |||

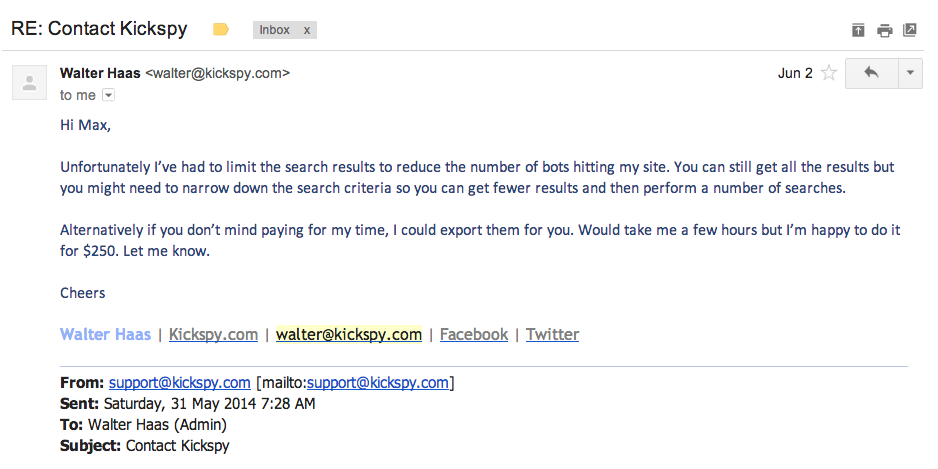

output example >>> | |||

[[File:Anicelyorganizedtable.png|300 x 150px]] | |||

===Step 3=== | |||

Now have 6000 failed kickstarters in a nicely formated csv its time to make some $$$$$$ | |||

The script below reads the csv and turns the data into dictionary so that i can append each row to different parts of an SVG using a brutal find and replace method. <br> | |||

[[File:Template4.svg|300x167px]] <br> | |||

<source lang= 'python'> | |||

import sys, os , time | |||

import csv | |||

from collections import defaultdict | |||

f = open('run/template4.svg', 'r') | |||

count = 0 | |||

field_names = ['title', 'desc', 'cat'] | |||

with open ('fullkickfaillist5.csv', 'rU') as csvfile: | |||

reader = csv.DictReader(csvfile, delimiter=",", quotechar='"', fieldnames=field_names) | |||

for row in reader: | |||

title = (row['title']) | |||

desc = (row['desc']) | |||

cat = (row['cat']) | |||

print title + desc | |||

tmp = open("tmp.svg", "w") | |||

tmp.write(open("run/template4.svg").read().replace("unique",title).replace("world",desc).replace("bath", cat)) | |||

tmp.close() | |||

count += 1 | |||

cmd = '/Applications/Inkscape.app/Contents/Resources/bin/inkscape --export-png=bill{0:06d}.png tmp.svg'.format(count) | |||

print cmd | |||

os.system(cmd) | |||

</source> | |||

[[File:Slide2.gif]] | |||

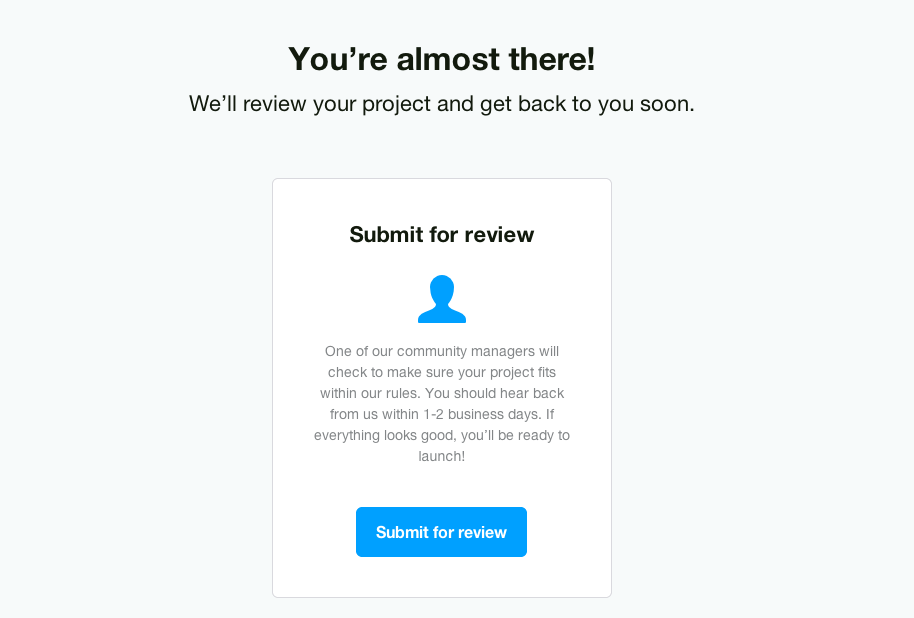

===Step4=== | |||

print 6000 notes and distribute them see more info [[user:max Dovey/bankofbrokendreams|here]] | |||

[[File:Revewfail.png]] | |||

Latest revision as of 12:01, 30 June 2014

- http://pzwart3.wdka.hro.nl/wiki/Scripts_%26_Screenplays

- http://pzwart2.wdka.hro.nl/~avanderhoek/finalessay_annemieke.pdf

Around the world in 200 Googles

google.com

google.ac

google.ad

google.ae

google.com.af

google.com.ag

google.com.ai

google.al

google.am

google.co.ao

google.com.ar

google.as

google.at

google.com.au

google.az

google.ba

google.com.bd

google.be

google.bf

google.bg

google.com.bh

google.bi

google.bj

google.com.bn

google.com.bo

google.com.br

google.bs

google.bt

google.co.bw

google.by

google.com.bz

google.ca

google.com.kh

google.cc

google.cd

google.cf

google.cat

google.cg

google.ch

google.ci

google.co.ck

google.cl

google.cm

google.cn

g.cn

google.com.co

google.co.cr

google.com.cu

google.cv

google.com.cy

google.cz

google.de

google.dj

google.dk

google.dm

google.com.do

google.dz

google.com.ec

google.ee

google.com.eg

google.es

google.com.et

google.fi

google.com.fj

google.fm

google.fr

google.ga

google.ge

google.gf

google.gg

google.com.gh

google.com.gi

google.gl

google.gm

google.gp

google.gr

google.com.gt

google.gy

google.com.hk

google.hn

google.hr

google.ht

google.hu

google.co.id

google.ir

google.iq

google.ie

google.co.il

google.im

google.co.in

google.io

google.is

google.it

google.je

google.com.jm

google.jo

google.co.jp

google.co.ke

google.ki

google.kg

google.co.kr

google.com.kw

google.kz

google.la

google.com.lb

google.com.lc

google.li

google.lk

google.co.ls

google.lt

google.lu

google.lv

google.com.ly

google.co.ma

google.md

google.me

google.mg

google.mk

google.ml

google.com.mm

google.mn

google.ms

google.com.mt

google.mu

google.mv

google.mw

google.com.mx

google.com.my

google.co.mz

google.com.na

google.ne

google.com.nf

google.com.ng

google.com.ni

google.nl

google.no

google.com.np

google.nr

google.nu

google.co.nz

google.com.om

google.com.pa

google.com.pe

google.com.ph

google.com.pk

google.pl

google.com.pg

google.pn

google.com.pr

google.ps

google.pt

google.com.py

google.com.qa

google.ro

google.rs

google.ru

google.rw

google.com.sa

google.com.sb

google.sc

google.se

google.com.sg

google.sh

google.si

google.sk

google.com.sl

google.sn

google.sm

google.so

google.st

google.com.sv

google.td

google.tg

google.co.th

google.com.tj

google.tk

google.tl

google.tm

google.to

google.tn

google.com.tn

google.com.tr

google.tt

google.com.tw

google.co.tz

google.com.ua

google.co.ug

google.co.uk

google.us

google.com.uy

google.co.uz

google.com.vc

google.co.ve

google.vg

google.co.vi

google.com.vn

google.vu

google.ws

google.co.za

google.co.zm

google.co.zw

import webbrowser, time

# googleindex.txt holds the list of Google domains above, one per line
f = open("googleindex.txt")
for line in f:
    line = line.strip()
    link = 'http://' + line
    # wait four seconds, then open the domain in a new browser tab
    time.sleep(4)
    webbrowser.open_new_tab(link)
f.close()
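The list-to-URL step can be tried on its own without opening any browser tabs. The following is a minimal sketch, assuming Python 3; the helper name `domains_to_urls` and the sample lines are made up for illustration and are not part of the original script. It skips blank lines and repeated domains, which the loop above does not do.

```python
def domains_to_urls(lines, scheme="http"):
    """Turn raw lines from a domain list into unique URLs, keeping their order."""
    seen = set()
    urls = []
    for raw in lines:
        domain = raw.strip().lower()
        if not domain or domain in seen:
            continue  # skip blank lines and repeated domains
        seen.add(domain)
        urls.append("{0}://{1}".format(scheme, domain))
    return urls


# Hypothetical sample input, shaped like a few lines of googleindex.txt
sample = ["google.com\n", "\n", "google.ac\n", "google.com\n"]
for url in domains_to_urls(sample):
    print(url)
```

Each returned URL could then be passed to `webbrowser.open_new_tab` with a pause in between, as in the script above.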

Bank Of Broken Dreams

Process Documentation

STEP 1

Make a list of the URLs of all failed Kickstarter projects. The script below grabs the links to failed projects from every category (over 150), using the prefix http://www.kickspy.com/browse/all-failed/. The output is a text file, 'kickurlscate.txt', that has 6000 failed projects from all the different categories, taking the maximum (100) failed projects from each category.

import re
import urllib2
from urlparse import urljoin

from bs4 import BeautifulSoup

prefix = "http://www.kickspy.com"

# list of urls of all categories, one per line (newlines stripped)
with open('allfailedurls.txt', 'r') as s:
    startlist = [line.strip() for line in s if line.strip()]

print len(startlist)

urllist = []

# write links to a text file for scraping later!
with open('kickurlscate.txt', 'a') as f:
    for counter, i in enumerate(startlist, 1):
        print counter
        page = urllib2.urlopen(i).read()
        soup = BeautifulSoup(page)
        # grab all links whose href contains "/projects" and append them to urllist
        for a in soup.find_all('a', href=re.compile("/projects")):
            c = urljoin(prefix, a.get('href'))
            urllist.append(c)
            print c
            f.write(c + "\n")

example output >>>

http://www.kickspy.com/projects/artastictime/artastic-time

http://www.kickspy.com/projects/561192189/epermanent

http://www.kickspy.com/projects/salgallery/marseille-art

http://www.kickspy.com/projects/htavos/kooky-plush-monsters-and-more

http://www.kickspy.com/projects/18521543/dingbats-handmade-treasures-farmers-market-booth
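The link-grabbing step can be illustrated without a network connection. The sketch below is an assumption-laden variant, not the script used for the project: it is written for Python 3, swaps BeautifulSoup for the standard library's `html.parser`, and keeps only hrefs that start with `/projects/` (the original regular expression matches `/projects` anywhere in the href). The class name, function name and sample markup are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ProjectLinkParser(HTMLParser):
    """Collect absolute URLs for <a> tags whose href starts with /projects/."""

    def __init__(self, base):
        super().__init__()
        self.base = base
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and href.startswith("/projects/"):
            # urljoin turns the relative href into a full URL
            self.links.append(urljoin(self.base, href))


def extract_project_links(html, base="http://www.kickspy.com"):
    parser = ProjectLinkParser(base)
    parser.feed(html)
    return parser.links


# Hypothetical markup standing in for a category page
sample_html = """
<a href="/projects/artastictime/artastic-time">Artastic Time</a>
<a href="/browse/all-failed/art">Art</a>
<a href="/projects/561192189/epermanent">ePermanent</a>
"""
for link in extract_project_links(sample_html):
    print(link)
```

Only the two project links are kept; the category link is ignored because its href does not start with `/projects/`.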

Step 2

The script below is a basic scraper that goes through the list of failed Kickstarter pages, extracts the title, description and category from each page, and saves them in CSV format.

import csv
import time
import urllib2

from bs4 import BeautifulSoup

# one project URL per line (newlines stripped)
with open("fullcleanurls.txt", "r") as s:
    startlist = [line.strip() for line in s if line.strip()]

print len(startlist)

# iterate through the url list and scrape title, description and category
with open('fullkicklist2.csv', 'wb') as f:
    writer = csv.writer(f)
    for counter, i in enumerate(startlist[0:500], 1):  # only the first 500 urls per run
        print counter
        page = urllib2.urlopen(i).read()
        soup = BeautifulSoup(page)
        a = soup.find("h1").text.encode('utf-8')
        b = soup.find("div", {"class": "description"}).text.encode('utf-8')
        c = soup.find("div", {"class": "category"}).text.encode('utf-8')
        writer.writerow((a, b, c))
        time.sleep(1)  # pause between requests

output example >>>
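Project descriptions often contain commas and line breaks, so it is worth checking that a row survives the trip through CSV. This is a small sketch assuming Python 3 (the script above is Python 2) and an in-memory buffer instead of a file; the function name `rows_to_csv` and the sample row are invented for illustration.

```python
import csv
import io


def rows_to_csv(rows):
    """Write (title, description, category) rows to CSV text."""
    buf = io.StringIO(newline="")  # newline="" leaves line endings to the csv module
    writer = csv.writer(buf)
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical row with a comma in the title and a line break in the description
rows = [("A title, with a comma", "Line one\nline two", "Art")]
text = rows_to_csv(rows)

# Read the text back to confirm the three fields come out unchanged
for row in csv.reader(io.StringIO(text, newline="")):
    print(row)
```

The csv module quotes any field that contains a comma or a newline, which is why the row reads back as exactly three fields.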

Step 3

Now that I have 6000 failed Kickstarters in a nicely formatted CSV, it's time to make some $$$$$$

The script below reads the CSV and turns each row into a dictionary so that I can insert its fields into different parts of an SVG template using a brutal find-and-replace method.

import os
import csv

count = 0
field_names = ['title', 'desc', 'cat']

# read the SVG template once; "unique", "world" and "bath" are its placeholder words
template = open('run/template4.svg', 'r').read()

with open('fullkickfaillist5.csv', 'rU') as csvfile:
    reader = csv.DictReader(csvfile, delimiter=",", quotechar='"', fieldnames=field_names)
    for row in reader:
        title = row['title']
        desc = row['desc']
        cat = row['cat']
        print title + desc
        # brutal find and replace into a temporary SVG
        tmp = open("tmp.svg", "w")
        tmp.write(template.replace("unique", title).replace("world", desc).replace("bath", cat))
        tmp.close()
        count += 1
        # export the temporary SVG to a numbered PNG with Inkscape
        cmd = '/Applications/Inkscape.app/Contents/Resources/bin/inkscape --export-png=bill{0:06d}.png tmp.svg'.format(count)
        print cmd
        os.system(cmd)
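Chained `replace` calls have two side effects worth knowing about: a title that happens to contain the word "world" gets that word replaced by the description in the next call, and characters such as `&` or `<` in the scraped text make the SVG invalid XML. The sketch below shows one possible alternative, assuming Python 3: a single-pass substitution that also escapes the inserted text. The function name `fill_template`, the tiny template string and the sample values are hypothetical; only the placeholder words come from the script above.

```python
import re
from xml.sax.saxutils import escape


def fill_template(template, values):
    """Replace each placeholder word once, in a single pass, with XML-escaped text."""
    # Longest keys first, so a short placeholder cannot pre-empt a longer one
    keys = sorted(values, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(k) for k in keys))
    # Inserted text is never rescanned, so it cannot trigger further replacements
    return pattern.sub(lambda m: escape(values[m.group(0)]), template)


# Hypothetical stand-in for the SVG template and one CSV row
template = "<text>unique</text><text>world</text><text>bath</text>"
values = {"unique": "Hello world", "world": "Tom & Jerry", "bath": "Art"}
print(fill_template(template, values))
```

With the chained approach the first text element would end up as "Hello Tom & Jerry"; here the title stays intact and the ampersand is written as `&amp;`.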

Step 4

Print 6000 notes and distribute them. See user:max Dovey/bankofbrokendreams for more info.