User:Wang ziheng/Project proposal: Difference between revisions

Wang ziheng (talk | contribs) |

Wang ziheng (talk | contribs) m (→Fortune) |

||

| (20 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

<div style="position:absolute; top:-5px; left: 550px;" z-index:1"> | |||

<img src="https://i.pinimg.com/736x/d3/c1/ae/d3c1aefaa952d6d6a11a3ddd51456ef6.jpg" width="400px"> | |||

</div> | |||

[[WangThesisDraft]] | |||

==What do you want to make?== | ==What do you want to make?== | ||

| Line 40: | Line 46: | ||

</div> | </div> | ||

During my experiment with Tone.js, I found its sound effects were quite limited. This led me to explore other JavaScript libraries with | During my experiment with Tone.js, I found its sound effects were quite limited. This led me to explore other JavaScript libraries with a wider repertoire of sound capabilities, like Pizzicato.js. Pizzicato.js offers effects such as ping pong delay, fuzz, flanger, tremolo, and ring modulation, which allow for much more creative sound experimentation. Since everything is online, there’s no need to download software, and it also lets users experience sound with visual and interactive web elements.<br><br> | ||

Inspired by deconstructionism, I created a project that presents all these effects on one webpage, experimenting with ways to layer and combine them like a "sound quilt."<br><br> | Inspired by deconstructionism, I created a project that presents all these effects on one webpage, experimenting with ways to layer and combine them like a "sound quilt."<br><br> | ||

To introduce the project and gather feedback, I hosted a workshop and a performance. I started a JavaScript Club to introduce sound-related JavaScript libraries and show people how to experiment with sound on the web. At the end of the workshop, with a form of “Examination”, I gave out a zine hidden inside a pen called Script Partner. Each zine included unique code snippets for creating instruments or sound effects.<br><br> | To introduce the project and gather feedback, I hosted a workshop and a performance. I started a JavaScript Club to introduce sound-related JavaScript libraries and show people how to experiment with sound on the web. At the end of the workshop, with a form of “Examination”, I gave out a zine hidden inside a pen called Script Partner. Each zine included unique code snippets for creating instruments or sound effects.<br><br> | ||

| Line 88: | Line 94: | ||

==What is your timetable?== | ==What is your timetable?== | ||

From September to December, | *From September to December, | ||

I will be constantly making workshops, exercises, and performances related to the main subject in order to develop more concrete content. | I will be constantly making workshops, exercises, and performances related to the main subject in order to develop more concrete content. | ||

Through these practices, I explored different forms for publications, hiding them inside a pen, using an examination format to connect with the audience, or limiting performances to 2 minutes, making the 'publications' both harsh and "tasty". These exercises helped me to shape the structure and display of my graduation project. | |||

*From December to January, I will focus on circuit building and sensor testing, experimenting to find the most suitable sensor and creating a demo version of the device. | |||

*From January to March, I will enter the design phase, working on the device's exterior, corresponding HTML, and fixing bugs. | |||

*From March to May, I will refine the device, test it through performances, and design its user manual. | |||

===Performance at Klankschool(21.09)=== | ===Performance at Klankschool(21.09)=== | ||

<div style="display: flex; justify-content: center;"> | <div style="display: flex; justify-content: center;"> | ||

[[File:128419214648.jpg|350px]] | [[File:128419214648.jpg|350px]] | ||

<img src="https://pzwiki.wdka.nl/mw-mediadesign/images/f/f1/Bricks.gif" width=" | <img src="https://pzwiki.wdka.nl/mw-mediadesign/images/f/f1/Bricks.gif" width="466px"> | ||

</div> | </div> | ||

At Klankschool, I performed using an HTML setup with sliders, each displaying a different image. Each brick represented a frequency, allowing multiple bricks to overlap pitches. I combined this with the Solar Beep MIDI controller I maded. | |||

The HTML setup has great potential, especially with MIDI controllers. For example, I mapped a MIDI controller to the bricks, using its hold function to toggle bricks or act as a sequencer. This combination saves effort by separating functions—letting a physical device handle sequencing instead of building it into the HTML. | |||

===Javascript Club #4(14.10)=== | ===Javascript Club #4(14.10)=== | ||

| Line 108: | Line 123: | ||

===Public Moment(04.11)=== | ===Public Moment(04.11)=== | ||

<div style="display: flex; justify-content: center;"> | <div style="display: flex; justify-content: center;"> | ||

[[File:173230022578.jpg|380px]] | [[File:173230022578.jpg|380px]] | ||

[[File:1732295367080.jpg|405px]] | [[File:1732295367080.jpg|405px]] | ||

</div> | </div> | ||

During the public event, the kitchen transformed into a Noise Kitchen. Using kitchen tools and a shaker, I made a non-alcoholic cocktail while a microphone overhead captured the sounds. These sounds were processed through my DAW to generate noise. | |||

The event had four rounds, each round has 2 minutes, accounting time with the microwave, accommodating four participants. The outcome of each round was a non-alcoholic cocktail, made with ginger, lemon, apple juice, and milky oolong tea. | |||

==Why do you want to make it?== | ==Why do you want to make it?== | ||

| Line 130: | Line 145: | ||

This project connects to broader themes of environmental awareness and the fusion of technology with nature. By exploring the interplay between natural phenomena and digital soundscapes, it will also utilize unstable elements from nature to engage with digital aspects. This could be an important way to enhance creativity in sound design. | This project connects to broader themes of environmental awareness and the fusion of technology with nature. By exploring the interplay between natural phenomena and digital soundscapes, it will also utilize unstable elements from nature to engage with digital aspects. This could be an important way to enhance creativity in sound design. | ||

==References | ==References== | ||

https://www.ableton.com/en/packs/inspired-nature/ | In my research period for the graduation project, my references are items, random pictures, plugins, and digital devices.<br> | ||

I divided these into different categories, like trees, planets, and gravity, which are the natural energy I’m exploring.<br> | |||

https://www.ableton.com/en/packs/inspired-nature/<br> | |||

https://www.lovehulten.com/ | https://www.lovehulten.com/ | ||

===Fortune=== | ===Fortune=== | ||

Believe it Yourself is a series of real-fictional belief-based computing kits to make and tinker with vernacular logics and superstitions. Created by Shanghai based design studio automato.farm, 'BIY™ - Believe it Yourself' is a series of real-fictional belief-based computing kits to make and tinker with vernacular logics and superstitions. The team worked with experts in fortune telling from Italy, geomancy from China and numerology from India to translate their knowledge and beliefs into three separate kits – BIY.SEE, BIY.MOVE and BIY.HEAR. They invite users to tinker with cameras that can see luck*, microphones that interpret your destiny*, and compasses that can point you to harmony and balance*. | Believe it Yourself is a series of real-fictional belief-based computing kits to make and tinker with vernacular logics and superstitions. Created by Shanghai based design studio automato.farm, 'BIY™ - Believe it Yourself' is a series of real-fictional belief-based computing kits to make and tinker with vernacular logics and superstitions. The team worked with experts in fortune telling from Italy, geomancy from China and numerology from India to translate their knowledge and beliefs into three separate kits – BIY.SEE, BIY.MOVE and BIY.HEAR. They invite users to tinker with cameras that can see luck*, microphones that interpret your destiny*, and compasses that can point you to harmony and balance*. | ||

http://automato.farm/portfolio/believe_it_yourself/ | http://automato.farm/portfolio/believe_it_yourself/<br> | ||

<img src="https://speculativeedu.eu/wp-content/uploads/2019/07/03_BIY-Hear-Training-768x512.jpg" width=' | <img src="https://speculativeedu.eu/wp-content/uploads/2019/07/03_BIY-Hear-Training-768x512.jpg" width='400px'> | ||

<img src="https://s3files.core77.com/blog/images/942225_33852_89014_UPgf7T8qH.jpg" width=' | <img src="https://s3files.core77.com/blog/images/942225_33852_89014_UPgf7T8qH.jpg" width='400px'> | ||

<img src="https://s3files.core77.com/blog/images/942223_33852_89014_iz4mAJm3s.jpg" width=' | <img src="https://s3files.core77.com/blog/images/942223_33852_89014_iz4mAJm3s.jpg" width='400px'> | ||

other inspirations: | other inspirations: | ||

| Line 148: | Line 165: | ||

===Plants=== | ===Plants=== | ||

====PlantWave==== | |||

<img src="https://plantwave.com/cdn/shop/files/slide-img-2.jpg?v=1676814383&width=1070" width='300px'> | |||

<img src="https://plantwave.com/cdn/shop/files/slide-img-2.jpg?v=1676814383&width=1070" width=' | |||

<br> | <br> | ||

https://www.datagarden.org/technology | https://www.datagarden.org/technology | ||

<br> | |||

PlantWave measures biological changes within plants, graphs them as a wave and translates the wave into pitch. It connects to a free app for iOS and Android. PlantWave monitoring electrical signals of plants as sound. Through patented sonification technology. | |||

<br> | |||

====Love Hultén==== | |||

<img src="https://i.pinimg.com/736x/1d/3c/0d/1d3c0dbefebca88bf8adbbf76e601ee3.jpg" width='356px'> | |||

<img src="https://i.pinimg.com/736x/f1/42/b2/f142b25ef904a472a32d13c32ef45e3c.jpg" width='300px'> | |||

<img src="https://i.pinimg.com/736x/1c/6d/96/1c6d969cb3e1d6237ea9a630cffcb4bf.jpg" width='200px'> | |||

https://design-milk.com/love-hultens-desert-songs-sounds-like-a-blast-from-the-chloroplast/?utm_source=Design+Milk+Staff&utm_medium=email&utm_campaign=1.31+Tuesday+Daily+Digest+%2801GR1X73VDA1TR617J0BFQ4J05%29&_kx=n4Il-D-uZPpoBNk0mS2m2lANwpKa53_4l8QWmP5bv0M%3D.MfHiGP&epik=dj0yJnU9RGxZSXNRekJqOEdBWTFEekQxNF9PSlREN0RJV0JuVGsmcD0wJm49NDNLa2FzWFZNRXMydVREWXBKd01SZyZ0PUFBQUFBR2NzRFJJ#038;utm_medium=rss&utm_campaign=love-hultens-desert-songs-sounds-like-a-blast-from-the-chloroplast | |||

Love Hultén is a Swedish designer and craftsman renowned for blending retro aesthetics with modern technology. His work uniquely combines traditional woodworking with cutting-edge electronics to create stunning, functional art pieces. Hultén's designs often evoke nostalgia, featuring retro gaming consoles, synthesizers, and computers in exquisitely handcrafted wooden casings. Known for their meticulous craftsmanship and innovative designs, his creations, such as the R-Kaid-R and the Pixel Vision, bridge the gap between art and technology, offering a tactile, visually captivating experience. | |||

===Gravities=== | |||

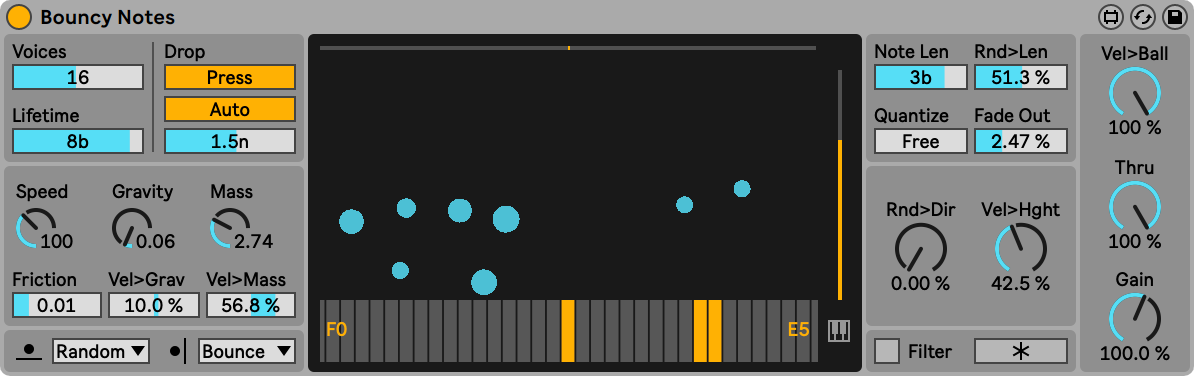

====Bouncy Notes==== | |||

<img src="https:// | <img src="https://ableton-production.imgix.net/devices/screenshots/bouncy-notes%402x.png?fm=png"; width="500px";> | ||

Bouncy Notes is a Max for Live MIDI effect that generates MIDI notes. It can function as an | |||

unconventional arpeggiator, sequencer, note delay, note generator, or other MIDI note | |||

applications. In the center of the device is a display of balls (represented by circles) that bounce | |||

up and down on a piano roll via a gravity simulation. When a ball hits the piano roll, a MIDI note | |||

is output that corresponds to the note on the piano roll. Balls are created by sending MIDI notes | |||

to the device. When a MIDI note is received, a ball is created above the pitch on the piano roll | |||

that corresponds to the MIDI note received. If the pitch of the MIDI note is not on the piano roll, | |||

the ball appears over the closest matching pitch on the piano roll. | |||

https://dillonbastan.com/inspiredbynature_manuals/Bouncy%20Notes%20User%20Manual.pdf<br> | |||

https://dillonbastan.com/inspiredbynature_manuals/Bouncy%20Notes%20User%20Manual.pdf | |||

https://www.youtube.com/watch?v=C2hQ-WbKBhU | https://www.youtube.com/watch?v=C2hQ-WbKBhU | ||

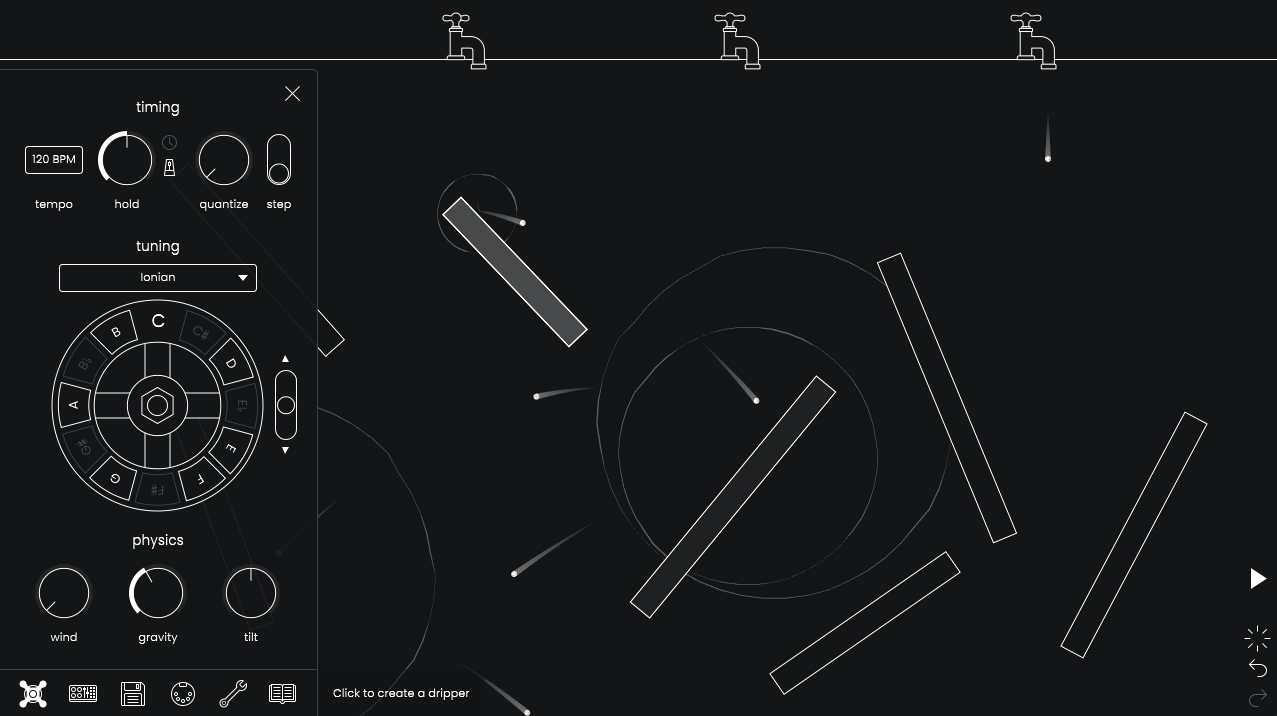

====Droplets==== | ====Droplets==== | ||

<img src="https://static.kvraudio.com/i/b/droplets_stageandsettings.png"; width="500px";> | |||

Droplets is a physics-based sequencer, offering a new way to create organic musical patterns. | |||

Drippers produce droplets at regular musical intervals. You can adjust the interval at which droplets are produced by turning the dripper's handle. As droplets fall down the screen, they hit and bounce off of bars, causing a note to play. Bars can be tuned by adjusting their length. By changing the arrangement of bars and drippers, entirely new musical patterns will arise. | |||

https://finneganeganegan.xyz/works/droplets | https://finneganeganegan.xyz/works/droplets | ||

===Tree=== | |||

<img src="https://arnhemsebomenvertellen.nl/static/img/trees/douglas_fir-600x.jpg"; width="300px"> | |||

<img src="https://arnhemsebomenvertellen.nl/static/img/sensors/sensoterra-600x.jpg"; width="300px"> | |||

<img src="https://arnhemsebomenvertellen.nl/static/img/sensors/milesight-600x.jpg"; width="300px"> | |||

Moving Trees highlights the often-overlooked lives of trees by combining art and technology. Sensors installed in six significant trees in Arnhem reveal hidden data, such as trunk movements, CO2 and water flow, and photosynthesis activity. Wooden benches with QR codes provide access to soundscapes, visuals, and stories tied to the trees. This innovative installation transforms a simple walk into an opportunity to observe trees as dynamic, living beings, fostering a deeper connection with nature and its unseen processes. | |||

https://arnhemsebomenvertellen.nl/T/uKFBL/data/7 <br> | |||

https://arnhemsebomenvertellen.nl/<br> | |||

When I saw this project, the idea of using trees to generate sound based on data like temperature, light, and humidity seemed interesting, but I realized that the sounds created by this data didn’t really connect to the trees themselves. For example, using potatoes or plants to control sound may be an interesting way to change sounds, but it doesn’t actually reflect anything about the potato or plant. This made me question if using data to create sound can truly represent nature or if it just makes us aware of the difference between the natural world and the way we measure it. | |||

As I thought more about the project, I wondered if the problem was with the way sound was being designed. It seems to lose the real connection to nature. For example, if there were field recordings of trees—like the sound of wind in the leaves—people might better connect the sounds to the trees. However, that would be a different approach since the main idea of the project is to show us things we can’t normally see, like changes in temperature or oxygen levels. I started to wonder if the sounds were intentionally not related to nature to make people think about how data can be different from real life, which is an interesting way of making people think. | |||

This made me realize how hard sound design can be in projects like this. Turning data into sound that still feels like it’s connected to nature is tricky. Data is often invisible or hard to understand, so it’s not easy to create sounds that make people feel like they’re hearing something from nature. The sounds generated from temperature or oxygen levels don’t automatically make you think of trees or the outdoors. This made me think about how difficult it is to find the right balance—how to create sounds that still feel like they belong to nature, without just using obvious natural sounds like wind or birds. | |||

Looking at this, I saw that my own project is a bit different. While both projects explore the relationship between nature and human interaction, my focus is not on representing nature directly through sound. Instead, I want to expand how people interact with things—moving from simple touches or clicks to more dynamic and unpredictable forms of interaction. The goal of my project isn’t to replicate or interpret nature through sound, but to explore new ways of interacting with the world. This allows for a more open and imaginative way of experiencing and exploring the environment. | |||

Latest revision as of 15:42, 16 December 2024

What do you want to make?

I want to build on the projects I developed last year and create a device that captures natural dynamics, such as wind, tides, and wind direction, and converts them into MIDI signals. These signals can then control musical instruments or interact with web pages and software, offering new experimental methods for sound and visual design.

How do we adapt to nature and transform the language of nature?

- Humans can accurately predict the rhythm of the tides and use the kinetic energy of waves.

- Humans use geomagnetism to determine direction and make compasses.

- Humans use wind energy to generate electricity and test wind direction and speed through different instruments.

We are constantly obtaining energy and dynamics from nature.

While we adapt to and use natural energy, we continue to produce and invent machines that transform it. This is a physical transformation of energy.

I want to apply the same method of energy conversion in this project, creating one or several devices that use these natural variables to control digital devices, sounds, or web page interactions.

In my past projects, I established a foundation for this project by tackling technical challenges, gathering ideas, and conducting focused research.

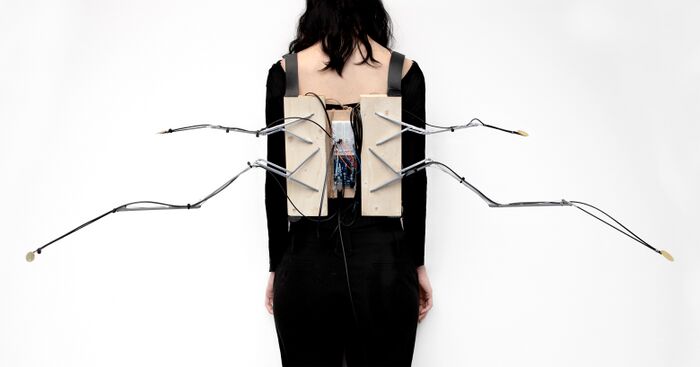

- Rain Receiver was the first step, a wearable device that let sound interact with rain, exploring how machines could respond to natural elements.

- With Sound Quilt, I moved into web-based interaction, using JavaScript and HTML to test out practical methods for digital performance through workshops and live demonstrations.

- Finally, Solar Beep marked a significant step forward in hardware development, where I gained hands-on experience with machine design and production.

Here are descriptions of the three previous projects:

SI22: Rain Receiver - a device that interacts with weather/nature

The Rain Receiver project started with a simple question during a picnic: what if an umbrella could do more than just protect us from the rain? Imagined as a device to capture and convert rain into digital signals, the Rain Receiver is designed to archive nature's voice, capturing the interactions between humans and the natural world.

Inspiration for the project came from the "About Energy" skills classes, where I learned about ways to use natural energy—like wind power for flying kites. This got me thinking about how we could take signals from nature and use them to interact with our devices.

The Rain Receiver came together through experimenting with Max/MSP and Arduino. Using a piezo sensor to detect raindrops, I set it up to translate each drop into MIDI sounds. By sending these signals through Arduino to Max/MSP, I could automatically trigger the instruments and effects I had preset. Shifting away from an umbrella, I designed the Rain Receiver as a wearable backpack so people could experience it hands-free outdoors.
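The raindrop-to-MIDI step described above can be sketched roughly as follows. This is only an illustration in Python, not the Arduino or Max/MSP patch used in the project: the threshold, the note number, and the 10-bit reading range are all assumed values, and `raindrops_to_midi` is a hypothetical helper name.

```python
# Assumed values for illustration only; the real device may use different ones.
ADC_MAX = 1023   # 10-bit analog reading, as on many Arduino boards
THRESHOLD = 40   # readings below this are treated as silence
NOTE = 60        # middle C, chosen arbitrarily


def raindrops_to_midi(readings, threshold=THRESHOLD, note=NOTE, channel=0):
    """Turn a sequence of piezo readings into MIDI note-on messages.

    A drop is registered only on the rising edge, when a reading crosses
    the threshold from below, so one long vibration yields a single note.
    """
    messages = []
    above = False
    for value in readings:
        if value >= threshold and not above:
            # Scale the hit strength into the MIDI velocity range 1..127.
            velocity = max(1, min(127, round(value / ADC_MAX * 127)))
            # 0x90 is the MIDI note-on status byte for channel 0.
            messages.append((0x90 | channel, note, velocity))
        above = value >= threshold
    return messages


# Two separate drops: a medium hit and a hard hit.
print(raindrops_to_midi([0, 5, 512, 300, 10, 0, 1023, 20]))
```

Detecting the rising edge rather than every loud sample is a design choice: a piezo keeps ringing briefly after a hit, and counting each ringing sample would trigger a burst of notes for one raindrop.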

With help from Zuzu, we connected the receiver to a printer, creating symbols like "/" marks alongside apocalyptic-themed words to archive each raindrop as a kind of message. After fixing a few bugs, the Rain Receiver was showcased at WORM’s Apocalypse event, hinting at how this device could be a meaningful object in the future.

https://pzwiki.wdka.nl/mediadesign/Wang_SI22#Rain_Receiver

https://pzwiki.wdka.nl/mediadesign/Express_lane#Rain_receiver

SI23: sound experimentation tools based on HTML

During my experiment with Tone.js, I found its sound effects were quite limited. This led me to explore other JavaScript libraries with a wider repertoire of sound capabilities, like Pizzicato.js. Pizzicato.js offers effects such as ping pong delay, fuzz, flanger, tremolo, and ring modulation, which allow for much more creative sound experimentation. Since everything is online, there’s no need to download software, and it also lets users experience sound with visual and interactive web elements.

Inspired by deconstructionism, I created a project that presents all these effects on one webpage, experimenting with ways to layer and combine them like a "sound quilt."

To introduce the project and gather feedback, I hosted a workshop and a performance. I started a JavaScript Club to introduce sound-related JavaScript libraries and show people how to experiment with sound on the web. At the end of the workshop, in the form of an “examination”, I gave out a zine hidden inside a pen called Script Partner. Each zine included unique code snippets for creating instruments or sound effects.

For the performance, I used an HTML interface I designed, displaying it on a large TV screen. The screen showed a wall of bricks, each brick linked to a frequency. By interacting with different bricks and sound effect sliders, the HTML could create a blend of sound and visuals, a strange, wobbly, and mysterious soundscape.

https://pzwiki.wdka.nl/mediadesign/Express_lane#Javasript

https://pzwiki.wdka.nl/mediadesign/JavaScriptClub/04
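The idea of a wall of bricks, each linked to a frequency, can be illustrated with a small sketch. The original page was written in HTML and JavaScript; the Python below only shows the mapping, and it assumes, purely for illustration, that neighbouring bricks are one equal-tempered semitone apart and that the first brick sounds at 220 Hz. The function names are hypothetical.

```python
BASE_FREQ = 220.0  # assumed pitch of the first brick (A3)


def brick_frequency(index, base=BASE_FREQ):
    """Frequency of a brick, assuming one equal-tempered semitone per brick."""
    return base * 2 ** (index / 12)


def active_frequencies(active_bricks, base=BASE_FREQ):
    """Frequencies sounding together when several bricks are switched on."""
    return [round(brick_frequency(i, base), 2) for i in sorted(active_bricks)]


# Switching on bricks 0, 7 and 12 layers a root, a fifth and an octave.
print(active_frequencies({0, 7, 12}))
```

Any other tuning would work the same way; only `brick_frequency` would change.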

SI24: MIDI device

Rain Receiver is essentially a MIDI controller, one that takes its input from a sensor.

The logic of most MIDI controllers is to let users map their own values and parameters in the DAW, using potentiometers, sliders, buttons for sequencer steps (momentary), or buttons for notes (latching). The controls are usually laid out as a tight square grid of knobs, a common design for efficient control over parameters such as volume, pan, filters, and effects.

Over a period of performing with pedals and MIDI controllers, I organized most of my mappings into groups, with each group containing 3 or 4 parameters.

For example, the Echo group includes Dry/Wet, Time, and Pitch, while the ARP group includes Frequency, Pitch, Fine, Send C, and Send D.

In such cases, a controller with a 3x8 grid of knobs is not well suited to effects with 5 parameters, like the ARP. A tree-like or radial layout would suit the ARP's more complex configuration better.
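The grouping problem can be made concrete with a small sketch. The group and parameter names come from the examples above; everything else is an assumption for illustration: the CC numbers (starting at 20, a range the MIDI specification leaves undefined), the reading of the 3x8 grid as 8 columns of 3 knobs, and the helper names.

```python
# Groups as described in the text; a nested dictionary is one way to
# express a tree-like layout where each group owns its parameters.
GROUPS = {
    "Echo": ["Dry/Wet", "Time", "Pitch"],
    "ARP": ["Frequency", "Pitch", "Fine", "Send C", "Send D"],
}


def assign_controls(groups, first_cc=20):
    """Give every parameter its own MIDI CC number, keeping groups together."""
    mapping = {}
    cc = first_cc
    for group, params in groups.items():
        mapping[group] = {}
        for param in params:
            mapping[group][param] = cc
            cc += 1
    return mapping


def overflowing_groups(groups, column_height=3):
    """Groups that do not fit into one column of a fixed knob grid."""
    return [name for name, params in groups.items() if len(params) > column_height]


print(assign_controls(GROUPS))
print(overflowing_groups(GROUPS))  # the ARP group needs more than 3 knobs
```

In a tree structure a group can have as many branches as it needs, which is the property a fixed grid lacks.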

Structures such as planets and satellites show how a large star can be mapped radially onto an entire galaxy. A tiny satellite orbiting a planet may follow an elliptical path rather than a circular one, and comets move in irregular patterns. The logic behind these phenomena is similar to the logic I use when producing music.

"Solar Beep" is the result of this search, built to elevate creative flow with a uniquely flexible design. It offers three modes—Mono, Sequencer, and Hold—alongside 8 knobs, a joystick, and a dual-pitch control for its 10-note button setup. With 22 LEDs providing real-time visual feedback, "Solar Beep" simplifies complex mappings and gives users an intuitive experience, balancing precision and adaptability for a more responsive and engaging production tool.

https://pzwiki.wdka.nl/mediadesign/Wang_SI24#Midi_Controller

How do you plan to make it?

I will follow the same steps I used in SI24:

Research;

Draft;

Shopping list based on the draft;

Process;

Debug;

I want to create a device that captures natural dynamics—like wind, tides, and direction—and converts them into MIDI signals. These signals can then control musical instruments or interact with web pages and software, offering new experimental methods for sound and visual design.

To support this, I’ll design dedicated web pages and visual elements to enhance the interactive experience with the device.

In the initial testing phase, I’ll focus on three natural variables:

- waves

- direction

- wind

Each variable brings unique characteristics:

- wind, for instance, is unpredictable and unstable;

- tides are relatively regular and consistent;

- direction requires intentional human input, like a compass.

Through sensor-based testing, I’ll gather and analyze data from each of these factors to determine which is most suitable for this project. By integrating these natural variables, I hope to introduce new ways of interaction in both sound and visual media.
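As a rough sketch of what the sensor testing might involve, the Python below scales a reading into the MIDI control range 0..127 and smooths an unstable signal such as wind. The sensor ranges (0 to 360 degrees for a compass heading, 0 to 30 m/s for wind speed) and the smoothing factor are assumptions, not measured values, and both function names are hypothetical.

```python
def scale_to_cc(value, low, high):
    """Map a sensor reading from [low, high] onto the MIDI range 0..127."""
    clamped = max(low, min(high, value))
    return round((clamped - low) / (high - low) * 127)


def smooth(readings, alpha=0.5):
    """Exponential moving average, to calm a jittery signal such as wind.

    alpha close to 1 follows the sensor closely; alpha close to 0 reacts slowly.
    """
    smoothed = []
    current = None
    for value in readings:
        current = value if current is None else alpha * value + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# A compass heading of 90 degrees and a gusty wind series in m/s.
print(scale_to_cc(90, 0, 360))
print([scale_to_cc(v, 0, 30) for v in smooth([2, 14, 3, 12])])
```

How much smoothing to apply is itself a design question here: too much would remove the instability that makes wind interesting as a control source.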

What is your timetable?

- From September to December, I will keep running workshops, exercises, and performances related to the main subject in order to develop more concrete content.

Through these practices, I have explored different forms of publication: hiding publications inside a pen, using an examination format to connect with the audience, and limiting performances to 2 minutes, making the 'publications' both harsh and "tasty". These exercises have helped me shape the structure and display of my graduation project.

- From December to January, I will focus on circuit building and sensor testing, experimenting to find the most suitable sensor and creating a demo version of the device.

- From January to March, I will enter the design phase, working on the device's exterior, corresponding HTML, and fixing bugs.

- From March to May, I will refine the device, test it through performances, and design its user manual.

Performance at Klankschool (21.09)

At Klankschool, I performed using an HTML setup with sliders, each displaying a different image. Each brick represented a frequency, allowing multiple bricks to overlap pitches. I combined this with the Solar Beep MIDI controller I made.

The HTML setup has great potential, especially with MIDI controllers. For example, I mapped a MIDI controller to the bricks, using its hold function to toggle bricks or act as a sequencer. This combination saves effort by separating functions—letting a physical device handle sequencing instead of building it into the HTML.
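What the hold (latching) behaviour amounts to can be sketched in a few lines. This is a simplified model in Python, not the code of the controller or of the HTML page: it assumes each brick is addressed by one MIDI note number, with the lowest brick on note 36, and `apply_hold` is a hypothetical name.

```python
def apply_hold(active_bricks, note_on_events, first_note=36):
    """Toggle bricks from a list of MIDI note numbers (note-on events only).

    With latching behaviour every press flips the state of its brick:
    a silent brick starts sounding, a sounding brick is switched off.
    """
    state = set(active_bricks)
    for note in note_on_events:
        brick = note - first_note
        if brick < 0:
            continue  # notes below the first brick are ignored
        state ^= {brick}  # symmetric difference flips membership
    return state


# Pressing note 36 twice switches brick 0 on and off again; brick 2 stays on.
print(apply_hold(set(), [36, 38, 36]))
```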

JavaScript Club #4 (14.10)

During the JavaScript Club Session 4, I introduced JavaScript for sound, focusing on Tone.js and Pizzicato.js. I demonstrated examples of how I use these libraries to create sound experiments.

At the end, I provided a cheat pen with publications, each containing a script corresponding to a sound effect or tool.

https://pzwiki.wdka.nl/mediadesign/JavaScriptClub/04

Public Moment (04.11)

During the public event, the kitchen transformed into a Noise Kitchen. Using kitchen tools and a shaker, I made a non-alcoholic cocktail while a microphone overhead captured the sounds. These sounds were processed through my DAW to generate noise.

The event ran for four rounds of 2 minutes each, timed with the microwave, and accommodated four participants. The outcome of each round was a non-alcoholic cocktail made with ginger, lemon, apple juice, and milky oolong tea.

Why do you want to make it?

I think nature is a broad subject. It can be used as a language to communicate through various machines, or as a way to predict fortune, though not in the sense of typical divination. Factors such as direction, temperature, magnetism, and gravity could all be connected to the device, so that the device is controlled in an unstable way, by natural factors.

Who can help you and how?

Xpub tutors will help me a lot to clarify my logical structure and assist me with the technological issues I may encounter during the process. I will also try to contact relevant engineers, product designers, sound designers, or relevant communities for more inspiration. During the testing phase, I will reach out to different communities to organize workshops and performances for testing the devices and gathering feedback from users.

Relation to previous practice

Building on my experiences with SI22, SI23, and SI24, I will combine my technical skills and creative exploration from those projects. The process of researching, drafting, and debugging will support my development approach, allowing me to incorporate previous learnings into this new project and create a seamless integration of sound and nature.

Relation to a larger context

This project connects to broader themes of environmental awareness and the fusion of technology with nature. By exploring the interplay between natural phenomena and digital soundscapes, it will also utilize unstable elements from nature to engage with digital aspects. This could be an important way to enhance creativity in sound design.

References

During the research period for my graduation project, my references have been objects, random pictures, plugins, and digital devices.

I divided them into categories such as trees, planets, and gravity, which are the natural energies I’m exploring.

https://www.ableton.com/en/packs/inspired-nature/

https://www.lovehulten.com/

Fortune

'BIY™ - Believe it Yourself', created by the Shanghai-based design studio automato.farm, is a series of real-fictional belief-based computing kits to make and tinker with vernacular logics and superstitions. The team worked with experts in fortune telling from Italy, geomancy from China and numerology from India to translate their knowledge and beliefs into three separate kits – BIY.SEE, BIY.MOVE and BIY.HEAR. They invite users to tinker with cameras that can see luck*, microphones that interpret your destiny*, and compasses that can point you to harmony and balance*.

http://automato.farm/portfolio/believe_it_yourself/

Other inspirations:

Plants

PlantWave

https://www.datagarden.org/technology

PlantWave measures biological changes within plants, graphs them as a wave, and translates the wave into pitch. It connects to a free app for iOS and Android. Using patented sonification technology, PlantWave lets you monitor the electrical signals of plants as sound.

Love Hultén

Love Hultén is a Swedish designer and craftsman renowned for blending retro aesthetics with modern technology. His work uniquely combines traditional woodworking with cutting-edge electronics to create stunning, functional art pieces. Hultén's designs often evoke nostalgia, featuring retro gaming consoles, synthesizers, and computers in exquisitely handcrafted wooden casings. Known for their meticulous craftsmanship and innovative designs, his creations, such as the R-Kaid-R and the Pixel Vision, bridge the gap between art and technology, offering a tactile, visually captivating experience.

Gravities

Bouncy Notes

Bouncy Notes is a Max for Live MIDI effect that generates MIDI notes. It can function as an unconventional arpeggiator, sequencer, note delay, note generator, or other MIDI note applications. In the center of the device is a display of balls (represented by circles) that bounce up and down on a piano roll via a gravity simulation. When a ball hits the piano roll, a MIDI note is output that corresponds to the note on the piano roll. Balls are created by sending MIDI notes to the device. When a MIDI note is received, a ball is created above the pitch on the piano roll that corresponds to the MIDI note received. If the pitch of the MIDI note is not on the piano roll, the ball appears over the closest matching pitch on the piano roll.

https://dillonbastan.com/inspiredbynature_manuals/Bouncy%20Notes%20User%20Manual.pdf

https://www.youtube.com/watch?v=C2hQ-WbKBhU
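The principle behind Bouncy Notes, a ball falling under gravity and triggering its note on every impact, can be sketched as below. This is not the device's actual algorithm, which is not described in detail here; it is a minimal physics model in Python in which the drop height, the gravity constant, and the energy lost per bounce (`restitution`) are all assumed parameters.

```python
def bounce_times(height, gravity=9.81, restitution=0.8, bounces=4):
    """Times (in seconds) at which a dropped ball hits the floor.

    The first impact follows free fall from `height`; after each impact
    the ball leaves with `restitution` times its impact speed, so the
    gaps between impacts keep shrinking.
    """
    t = (2 * height / gravity) ** 0.5  # duration of the initial fall
    speed = gravity * t                # speed at the first impact
    times = [t]
    for _ in range(bounces - 1):
        speed *= restitution
        t += 2 * speed / gravity       # time up and back down again
        times.append(t)
    return times


def note_events(note, height, **kwargs):
    """Pair every impact time with the MIDI note the ball hovers over."""
    return [(round(t, 3), note) for t in bounce_times(height, **kwargs)]


# A ball dropped over middle C produces an accelerating run of the same note.
print(note_events(60, 5, gravity=10, restitution=0.5))
```

The shrinking gaps between impacts are what give this kind of sequencer its characteristic accelerating rhythm, something a fixed-grid step sequencer does not produce on its own.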

Droplets

Droplets is a physics-based sequencer, offering a new way to create organic musical patterns.

Drippers produce droplets at regular musical intervals. You can adjust the interval at which droplets are produced by turning the dripper's handle. As droplets fall down the screen, they hit and bounce off of bars, causing a note to play. Bars can be tuned by adjusting their length. By changing the arrangement of bars and drippers, entirely new musical patterns will arise.

https://finneganeganegan.xyz/works/droplets

Tree

Moving Trees highlights the often-overlooked lives of trees by combining art and technology. Sensors installed in six significant trees in Arnhem reveal hidden data, such as trunk movements, CO2 and water flow, and photosynthesis activity. Wooden benches with QR codes provide access to soundscapes, visuals, and stories tied to the trees. This innovative installation transforms a simple walk into an opportunity to observe trees as dynamic, living beings, fostering a deeper connection with nature and its unseen processes.

https://arnhemsebomenvertellen.nl/T/uKFBL/data/7

https://arnhemsebomenvertellen.nl/

When I saw this project, the idea of using trees to generate sound based on data like temperature, light, and humidity seemed interesting, but I realized that the sounds created by this data didn’t really connect to the trees themselves. For example, using potatoes or plants to control sound may be an interesting way to change sounds, but it doesn’t actually reflect anything about the potato or plant. This made me question if using data to create sound can truly represent nature or if it just makes us aware of the difference between the natural world and the way we measure it.

As I thought more about the project, I wondered if the problem was with the way sound was being designed. It seems to lose the real connection to nature. For example, if there were field recordings of trees—like the sound of wind in the leaves—people might better connect the sounds to the trees. However, that would be a different approach since the main idea of the project is to show us things we can’t normally see, like changes in temperature or oxygen levels. I started to wonder if the sounds were intentionally not related to nature to make people think about how data can be different from real life, which is an interesting way of making people think.

This made me realize how hard sound design can be in projects like this. Turning data into sound that still feels like it’s connected to nature is tricky. Data is often invisible or hard to understand, so it’s not easy to create sounds that make people feel like they’re hearing something from nature. The sounds generated from temperature or oxygen levels don’t automatically make you think of trees or the outdoors. This made me think about how difficult it is to find the right balance—how to create sounds that still feel like they belong to nature, without just using obvious natural sounds like wind or birds.

Looking at this, I saw that my own project is a bit different. While both projects explore the relationship between nature and human interaction, my focus is not on representing nature directly through sound. Instead, I want to expand how people interact with things—moving from simple touches or clicks to more dynamic and unpredictable forms of interaction. The goal of my project isn’t to replicate or interpret nature through sound, but to explore new ways of interacting with the world. This allows for a more open and imaginative way of experiencing and exploring the environment.