I-could-have-written-that

No edit summary |

No edit summary |

||

| (12 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

|Creator=Manetta Berends | |Creator=Manetta Berends | ||

|Date=2016 | |Date=2016 | ||

|Bio=Manetta Berends ( | |Bio=Manetta Berends (NL) has been educated as a graphic designer at ArtEZ, Arnhem before starting her master-education at the Piet Zwart Institute (PZI), Rotterdam. From an interest in linguistics, code and a research based design practice, she currently works on the systemization of language in the field of natural language processing and text mining. | ||

|Thumbnail=I-could-have-written-that these-are-the-words mb.png | |Thumbnail=I-could-have-written-that these-are-the-words mb 300dpi.png | ||

|Website= | |Website=www.manettaberends.nl | ||

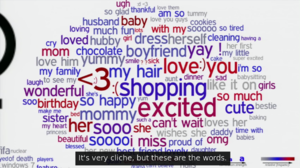

|Description=''i-could-have-written-that'' is a two hour workshop, that critically examines the reading power of text mining software. The workshop dismantels how large sets of written documents are transformed into useful/meaningful/truthful information. A process that is presented by text mining companies as ''"the power to know"'', ''"the absolute truth"'', ''"with an accuracy that rivals and surpasses humans"''. This workshop challenges the image of this modern algorithmic oracle, by making our own. more info: [http://i-could-have-written-that.info www.i-could-have-written-that.info] | |||

}} | }} | ||

<gallery> | |||

File:I-could-have-written-that these-are-the-words mb 300dpi.png | |||

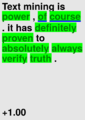

File:Text-mining-is +1.00 certain.png | |||

File:I-could-have-written-that categories-modality.gif | |||

</gallery> | |||

Latest revision as of 15:05, 13 February 2017

| I-could-have-written-that | |
|---|---|
| Creator | Manetta Berends |
| Year | 2016 |
| Bio | Manetta Berends (NL) was educated as a graphic designer at ArtEZ, Arnhem, before starting her master's education at the Piet Zwart Institute (PZI), Rotterdam. Driven by an interest in linguistics, code and a research-based design practice, she currently works on the systemization of language in the field of natural language processing and text mining. |
| Website | www.manettaberends.nl |

i-could-have-written-that is a two-hour workshop that critically examines the reading power of text mining software. The workshop dismantles how large sets of written documents are transformed into useful/meaningful/truthful information, a process that text mining companies present as "the power to know", "the absolute truth", "with an accuracy that rivals and surpasses humans". This workshop challenges the image of this modern algorithmic oracle by making our own. More info: www.i-could-have-written-that.info

i-could-have-written-that is a two-hour workshop in which we will write between the lines, cook raw data, and train personal oracles. The workshop consists of three parts. We start with a writing exercise in which we challenge the strictness of numbers with words. Then we train our personal oracles by following the 1989 model Knowledge Discovery in Databases, doing manual pattern recognition with notecards and pens. After this hard work, we ask three algorithms to do a bit of work as well. They will build your personal oracle, which you can consult at any time in the future!

Many text mining services offer pre-trained classifiers through APIs without specifying how the system was created. They promise to read meaning out of a text correctly. This unconditional trust is the basis of automated essay scoring (AES) systems, psychology studies, job vacancy platforms and terrorism prevention. Automated systems are trained to detect sentiment, education level, mental illness, or suspicious behaviour. The techniques are used online, for example on social media platforms, in the academic field and by the government. However, framing text mining techniques as readerly systems makes them immune to questions and discussion. When text mining results are instead considered a product of writing, the presence of an author is emphasized. This makes it possible to discuss text mining results in relation to predefined goals, and it breaks the idea that text mining results represent an absolute truth.
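
The idea of a classifier as something written rather than something that reads can be made concrete with a small sketch. The code below is not the workshop's own material or any service's method. It is a deliberately minimal word-counting classifier, and the sentences, labels and names (`train`, `classify`, `author_a`, `author_b`) are all made up for illustration. Two hypothetical authors label the same two sentences differently, and the resulting "oracles" then disagree about the same new text.

```python
from collections import Counter


def train(labelled_texts):
    """Count how often each word appears under each label."""
    counts = {}
    for text, label in labelled_texts:
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts


def classify(counts, text):
    """Return the label whose training words overlap most with the text."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in counts.items()}
    return max(scores, key=scores.get)


# Hypothetical training data: the same sentences, labelled by two authors.
sentences = ["the protest was loud", "the meeting was quiet"]
author_a = train([(sentences[0], "suspicious"), (sentences[1], "normal")])
author_b = train([(sentences[0], "engaged"), (sentences[1], "passive")])

# Both oracles are consulted about the same new text.
new_text = "a loud protest"
label_a = classify(author_a, new_text)
label_b = classify(author_b, new_text)
print(label_a, label_b)
```

The input text is identical in both cases. Only the labels chosen by the author differ, which is why the output is better discussed in relation to that author's goals than as a reading of what the text "really" means.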