User:Riviera/Special issue 22

Week 1

(Re)Prerecording a tape

In preparation for broadcasting on Tuesday 19 September, Maria suggested producing pre-recorded, taped material to share on air. In response to this, Riviera and Victor experimented with a Sanyo Stereo Radio Double Cassette Recorder and a collection of cassette tapes.

Ideas

- Record the sounds in between different radio stations (Maria)

- Layer the sounds by covering the erase head (Joseph)

Experimenting with the Capstan and Pinch Roller Openings

In a conversation with Victor, Joseph highlighted that it is possible to 'layer' recordings onto a cassette tape. This is achieved by covering the Pinch and Capstan Roller Openings on a given cassette. Whilst playing around with the Sanyo machine, Victor and Riviera figured out how to do this using masking tape.

We found out that the right-hand opening corresponds to the erase head, whilst the left-hand opening is where reading takes place. By covering the right-hand opening, we were able to layer the recording. The effect of layering sounds raised questions about composition and the relationship between noise and background.

Composition

We first tried recording an amalgamation of sounds: classical music, radio signals, interference and noise. The tape became a document of what we did throughout the recording session and as such it bore a particular relationship to time. Parts of the tape feature voices quietly talking underneath classical music. Elsewhere there are blends of classical music. Other parts feature classical music alongside radio signals. Overall the quality of the composition is a mixture of experimentation, learning how to use the technology and various effects. This also led us to question authorship, with Victor pointing out that the sounds one receives between radio stations are multiply authored.

Hardware Effects

The Sanyo device has a built-in radio capable of picking up long-wave, medium-wave, short-wave and FM signals. It also has a button which switches between 'dub speed' and 'beat cancel'. Pressing this button changes the speed at which the tape plays. Thirdly, it has a dial which filters out high and low frequency sounds. The machine does not display the amount of time which has elapsed since the tape began playing. Due to this, it is necessary to estimate amounts of time when recording.

Generating an echo/offset effect

- record recorded material onto a second tape

- record that recording onto the first tape, offset by some time.
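The two steps above describe an analogue echo. As a rough digital counterpart, the hypothetical helper below builds an ffmpeg filtergraph that splits a track in two, delays one copy with the adelay filter and mixes both copies back together with amix. It is only a sketch, assuming a stereo input (adelay takes one delay per channel, separated by |); the helper name and the 750 ms offset in the usage line are arbitrary choices.

```bash
# Hypothetical helper: print an ffmpeg filtergraph that layers a track
# over a delayed copy of itself, like re-recording a tape with an offset.
# $1 = offset in milliseconds, applied to both channels of a stereo input.
echo_filter() {
  local delay_ms=$1
  printf '%s' "[0:a]asplit=2[dry][wet];"                   # two copies of the input
  printf '%s' "[wet]adelay=${delay_ms}|${delay_ms}[late];" # delay one copy
  printf '%s' "[dry][late]amix=inputs=2:duration=longest"  # mix them back together
}

# Usage (file names are placeholders):
#   ffmpeg -i tape.wav -filter_complex "$(echo_filter 750)" tape-echo.wav
```

Keeping the filtergraph in a function makes it easy to try several offsets without retyping the whole graph.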

Week 2

Caretaker Meeting For Week Three's Broadcast

On Monday 25th September, Senka, Lorenzo and I volunteered to be caretakers for week three's radio broadcast. We met on Wednesday 27th to discuss ideas and structure the broadcast. There were three of us and 120 minutes of airtime to fill, so we divided our broadcast into three 40-minute segments. We detailed what we would do in each segment on an Etherpad (https://pad.xpub.nl/p/plan-broadcast3). The pad became a record of our conversation and a trace of where our ideas came from.

Discussing Texts

Discussing The Death of the Author

We began by discussing Barthes' text The Death of the Author. I commented that I had first encountered this text several years ago. At that time, I considered that the death of the Author entailed the death of the intention inherent in the author. In the Laurence Rassel Show the relationship between authorship and copyright is briefly discussed. The discussion in the radio play, which critiques anti-copyright licenses for not abolishing the concept of authorship, resonates with Constant's Collective Conditions for Reuse (CC4r), particularly the statement that '[t]he CC4r considers authorship to be part of a collective cultural effort and rejects authorship as ownership derived from individual genius' (Constant 2023). Barthes concludes that the birth of the reader is the flip side of the death of the author. Like Jacques Rancière's emancipated spectator, the reader becomes an active participant, shaping the meaning of the text. In the context of anti-copyright licensing this has several implications. Taking software as an example, the reader can become a programmer, study the code comprising the software and contribute to its development. In a cultural context the reader may be engaged in alternative ways. Consider, for example, a publication appropriately licensed and hosted on a git repository. Here arises the possibility for the reader to edit or add to the publication. The death of the author shifts the focus away from authorship towards readership.

Discussing The Ontology of Performance

The crux of Phelan's argument is her opening statement that 'Performance cannot be saved, recorded, documented, or otherwise participate in the circulation of representations of representations: once it does so, it becomes something other than performance.' (Phelan 1993, p.146). Phelan's argument proceeds through an analysis of several artworks, including Angelika Festa's 1987 performance Untitled Dance (with fish and others). Phelan holds that '[t]o the degree that performance attempts to enter the economy of reproduction it betrays and lessens the promise of its own ontology' (Phelan 1993, p.146). During the discussion I aimed to articulate Phelan's position and to provide arguments in support of it. Senka disagreed with Phelan, arguing that performance is something which can be reproduced. Senka stated that they were reading Phelan's text alongside Boris Groys' Religion in the Age of Digital Reproduction (2009). Groys argues that 'a digital image that can be seen cannot be merely exhibited or copied (as an analogue image can) but always only staged or performed'. In this sense, Senka argued, performance is reproducible: the digital image can be replayed and thus performed again. In response, I turned to the meontological writings of Nishida Kitarō, who writes that in the physical world time is reversible and in the biological world time is irreversible (1987). I suggested that reproducibility and reversibility are analogous. Performance in the biological world is therefore non-reproducible, whilst performance in the physical world is reproducible. Lorenzo suggested that the text was biased towards the medium of performance. I agree that Phelan may be biased, though not towards the medium of performance. I suggest that Phelan's biases are towards performance in the biological world.

Synthesising Ideas

This was a key section of our Etherpad in which we developed a structure for the broadcast.

| Time (minutes) | Content |
|---|---|
| 0-10 | Introduction |
| 10-40 | Sound boards / Ursula Franklin |
| 40-80 | Imaginary Future protocols and live coding archive remix |
| 80-120 | Phelan reading |

We developed protocols to help structure the broadcast. Following a suggestion made in the group's reflective discussion, we decided to ask our peers to contribute material for the radio show.

Imaginary Future Protocols Protocol

- Write down a protocol on this wiki page https://pzwiki.wdka.nl/mediadesign/Personal_Protocols. (It could be a routine, personal protocol, or one that relates to our group).

- Record yourself expanding on the meaning of the protocol (Why this protocol? What does it mean to you?)

- The protocols are only accepted when done voluntarily (you don't have to)

- Post the recording on the wiki page.

Shell Commands to Activate the Archive

find

The command find is an effective way to retrieve a list of .mp3 files in the Worm Radio Archive.
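A minimal sketch of such a query, assuming the archive is mounted at /media/worm/radio/ (the path used in the skelegen examples further down this page). The function name list_mp3s is hypothetical; -iname makes the match case-insensitive so files ending in .MP3 are included, and sort keeps the list stable between runs.

```bash
# List every .mp3 file under a directory (default: the archive path used on this page),
# sorted so that the output is the same from one run to the next.
list_mp3s() {
  find "${1:-/media/worm/radio/}" -type f -iname '*.mp3' | sort
}

# Usage:
#   list_mp3s                      # print the list
#   list_mp3s | wc -l              # count the files
#   list_mp3s > archive-mp3s.txt   # save it for later use
```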

tree

Tree displays the structure of files and subdirectories for a given directory.

Two of tree's options are particularly useful here: -i and -f. The manual page for tree details what these options do:

-f

Prints the full path for each file.

-i

Makes tree not print the indentation lines, useful when used in conjunction with the -f option.
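Combined, -i and -f make tree print one full path per line with no indentation, which makes its output easy to filter. The sketch below, assuming tree is installed and the archive lives at /media/worm/radio (the path used elsewhere on this page), keeps only the lines ending in .mp3. The filter name mp3_paths is hypothetical; it reads standard input, so it can be tried on any list of paths.

```bash
# Keep only the .mp3 paths from `tree -if` output (one full path per line).
# Directory lines, other files and tree's closing summary line are dropped.
mp3_paths() {
  grep -Ei '\.mp3$'
}

# Usage (requires tree):
#   tree -if /media/worm/radio | mp3_paths
```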

ffmpeg

This command makes it possible to convert files from one audio format to another.

This command also takes many options. If you need a different sampling rate, say 48000 Hz, set it with the -ar option.
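A minimal sketch of batch conversion, assuming ffmpeg is installed: it converts every .mp3 in one directory to a WAV file at a chosen sampling rate (48000 Hz by default) using -ar. The function name convert_dir, the directory arguments and the DRY_RUN switch (which prints the commands instead of running them) are illustrative assumptions rather than part of the original workflow.

```bash
# Convert each .mp3 in SRC_DIR to a .wav in OUT_DIR at the given sampling rate.
# Usage: convert_dir SRC_DIR OUT_DIR [RATE]; set DRY_RUN=1 to only print the commands.
convert_dir() {
  local src_dir=$1 out_dir=$2 rate=${3:-48000}
  local f base
  [ "${DRY_RUN:-0}" = 1 ] || mkdir -p "$out_dir"
  for f in "$src_dir"/*.mp3; do
    [ -e "$f" ] || continue    # no .mp3 files: the glob is left unexpanded
    base=$(basename "$f" .mp3)
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf 'ffmpeg -hide_banner -n -i "%s" -ar %s "%s"\n' "$f" "$rate" "$out_dir/$base.wav"
    else
      # -n: never overwrite an existing output file
      ffmpeg -hide_banner -n -i "$f" -ar "$rate" "$out_dir/$base.wav"
    fi
  done
}
```

Running it once with DRY_RUN=1 is a cheap way to check the file list before committing to a long conversion.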

Preparation for the Broadcast

In preparation for the broadcast, Senka and Lorenzo were kind enough to provide audio snippets from files retrieved from the recycle bin. Lorenzo and Senka took different approaches: Lorenzo sent 64 files ranging in length from two seconds to five minutes, whilst Senka sent eight files, the longest of which was 11 seconds.

Week 3

Go Fish

In the shell I find a marvelous mess of constellations, nebulae, interstellar gaps, awesome gullies, that provokes in me an indescribable sense of vertigo, as if I am hanging from earth upside down on the brink of infinite space, with terrestrial gravity still holding me by the heels but about to release me any moment.

Nancy Mauro-Flude, 2008

The shell is a computer programme which launches other programmes. This efficient, textual method of interacting with a computer raises practical and ethical questions. On a practical level, the question arises as to which shell to choose. bash is the default login shell on Linux distributions such as Debian and Raspbian. However, it is possible to choose a different shell. This choice leads to a discussion of the ethical issue of accessibility. A feature of this discussion involves unpacking the relationship between norms and defaults. In this wiki page I outline some implications of switching to a shell other than bash by way of several examples. I describe why I have chosen to use one shell rather than another and argue in favour of fish as a default shell. Overall, I argue that shell scripting (with fish or bash) could be an effective way to activate Radio Worm's sonic archive.

fish in practice

fish (https://fishshell.com/) has many features that other shells do not have. These include enhanced autocompletion, a different way of scripting, improved syntax highlighting and idiosyncratic configuration. Three of these four features make it easier to get started with using the command line to interface with the computer. The exception is scripting: because fish implements a scripting language different from bash's, fish scripts pose a hurdle relating to interoperability. One must have access to a working installation of fish to run a fish script, and fish is often not installed by default.

fish departs from its normative counterpart bash in both helpful and obscure ways. Firstly, there is no file such as .fishrc. Instead, fish reads ~/.config/fish/config.fish alongside various other user-specific and site-wide configuration files. Extending the functional capabilities of the shell involves adding scripts under ~/.config/fish/functions/. Command substitution is also written differently: fish has traditionally used (command) where bash uses $(command). For more examples, see fish for bash users.

Going Fishing: A Syntax Highlighter

Below are two fish scripts; the second works in tandem with the first. The first function, highlight, utilises the sed command to edit HTML snippets generated by the HTML export backend of Emacs' Org Mode. The second script calls the highlight function several times on the same file. Really this should be a single script, but I cannot figure out how to execute sed this many times in a row...

highlight.fish

```fish
function highlight -d "Highlight HTML code" -a file
    argparse --name='highlight' 'h/help' -- $argv
    or return
    # Replace <pre> blocks with <div>s
    sed -i 's/<pre/<div/' $argv
    sed -i 's/<\/pre>/<\/div>/' $argv
    # Turn class attributes into inline style attributes
    sed -i 's/class=/style=/' $argv
    sed -i -E 's/"src src-[[:alpha:]]*"/"font-family: Monospace; background-color: #dbe6f0;"/' $argv
    # Map Org Mode's syntax classes to colours
    sed -i 's/"org-comment-delimiter"/"color:teal"/' $argv
    sed -i 's/"org-comment"/"color:teal"/' $argv
    sed -i 's/"org-keyword"/"color:green"/' $argv
    sed -i 's/"org-function-name"/"color:yellow"/' $argv
    sed -i 's/"org-variable-name"/"color:orange"/' $argv
    sed -i 's/"org-string"/"color:olive"/' $argv
    sed -i 's/"org-constant"/"color:gray"/' $argv
    sed -i 's/"org-builtin"/"color:purple"/' $argv
end
```

In the sed language, the s command is shorthand for substitute. s commands take the form

's/find/replace/'

With the s command, sed searches a text file for a particular string or regular expression and replaces what it finds with user-defined text. In the highlight function, classes of HTML elements are restyled with colour to highlight different parts of the script. Represented as a table, the changes are:

| find | replace |
|---|---|
| `<pre` | `<div` |
| `</pre>` | `</div>` |
| `class=` | `style=` |
| `"src src-[[:alpha:]]*"` | `"font-family: Monospace; background-color: #dbe6f0;"` |
| `"org-comment-delimiter"` | `"color:teal"` |
| `"org-comment"` | `"color:teal"` |
| `"org-keyword"` | `"color:green"` |
| `"org-function-name"` | `"color:yellow"` |
| `"org-variable-name"` | `"color:orange"` |
| `"org-string"` | `"color:olive"` |
| `"org-constant"` | `"color:gray"` |
| `"org-builtin"` | `"color:purple"` |
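To see two rows of the table in action, the snippet below runs them over a made-up line of Org-style HTML (the sample line is an illustration, not real exporter output). It also shows a detail of the s command that matters for this script: without a flag, s replaces only the first match on each line, whereas the g flag replaces every match.

```bash
# A made-up line in the style of Org Mode's HTML export
line='<span class="org-keyword">for</span> <span class="org-keyword">in</span>'

# Plain s: only the first match on the line is replaced
first_only=$(printf '%s\n' "$line" | sed -e 's/class=/style=/' -e 's/"org-keyword"/"color:green"/')
# s with the g flag: every match on the line is replaced
every=$(printf '%s\n' "$line" | sed -e 's/class=/style=/g' -e 's/"org-keyword"/"color:green"/g')

printf '%s\n' "$first_only" "$every"
```

The first line printed still contains class="org-keyword" in its second span; the second line has both spans restyled.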

highlight_all.fish

```fish
function highlight_all -d "Call the highlight command several times" -a file
    set -l options (fish_opt -s h -l help)
    argparse --name='highlight_all' $options -- $argv
    or return
    # Run the highlight pass five times over the same file(s)
    for n in (seq 1 5)
        highlight $argv
    end
end
```

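This function calls highlight five times in a row. Each s command in highlight is written without the g flag, so it replaces only the first match on each line; repeated passes catch the matches that remain. I could improve this such that only one script is required.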
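A sketch of that single-script version, written in bash rather than fish (the sed expressions would be the same inside a fish function). It assumes GNU sed, whose -i flag edits files in place without a backup suffix, and chains every substitution from the table into one sed call with the g flag on each expression, so a single pass should leave no class attributes behind. The name highlight_once is hypothetical.

```bash
# Hypothetical single-pass replacement for highlight + highlight_all.
# Assumes GNU sed (-i with no backup suffix, -E for extended regular expressions).
highlight_once() {
  local file
  for file in "$@"; do
    sed -i -E \
      -e 's/<pre/<div/g' \
      -e 's/<\/pre>/<\/div>/g' \
      -e 's/class=/style=/g' \
      -e 's/"src src-[[:alpha:]]*"/"font-family: Monospace; background-color: #dbe6f0;"/g' \
      -e 's/"org-comment-delimiter"/"color:teal"/g' \
      -e 's/"org-comment"/"color:teal"/g' \
      -e 's/"org-keyword"/"color:green"/g' \
      -e 's/"org-function-name"/"color:yellow"/g' \
      -e 's/"org-variable-name"/"color:orange"/g' \
      -e 's/"org-string"/"color:olive"/g' \
      -e 's/"org-constant"/"color:gray"/g' \
      -e 's/"org-builtin"/"color:purple"/g' \
      "$file"
  done
}
```

For example, highlight_once page.html (a placeholder file name) would do the work of highlight_all page.html in one pass.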

Week 4

Podcasts Continued

The other week I started writing a shell script to generate podcasts in RSS format. I first outlined the connection between podcasts and RSS feeds following a discussion we had in class. Then I wrote about how I utilised grep, cat and sed, and combined these commands in a shell script. The aim was to write a file containing the tag structure of an XML document with its content removed. This outline structure became the basis of the podcast generator script, skeleton. Along the way I analysed and improved the first version of the script. I am summarising what I did the other week here because the commentary on the code is somewhat inaccurate. I utilised a less than consistent document production workflow to write the wiki page. However, I have since settled on a more consistent way of writing wiki pages (using https://pandoc.org), so pages will be better maintained from now on.
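A minimal sketch of that tag-only outline, not the original script: grep -o prints each match on its own line, and the pattern <[^>]*> matches a single tag, so everything between tags (titles, descriptions, URLs) is discarded. It assumes no attribute value contains a literal > and ignores comments and CDATA sections. The function name xml_skeleton is hypothetical.

```bash
# Print the tag structure of an XML document read from stdin, one tag per line,
# dropping the text content between tags.
xml_skeleton() {
  grep -o '<[^>]*>'
}

# Usage:
#   xml_skeleton < podcast.xml
```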

What I worked on was quite relevant to our discussion of regular expressions on Monday 9th. It could furthermore bear relevance to CSS and paged media if an HTML page was made for the channel. I envision a publication made with weasyprint, perhaps using the technique of imposition. Each page might contain a link to a radio broadcast from the past, along with some text.

Discussion of the script

The script now features several additional flags. The "verbose" flag sends to stdout descriptive information about what the script is doing whilst it does it. This information is useful for debugging. It is also a more convenient means of documenting the software than writing about it. The "add" flag allows the user to add an mp3 file to a podcast. If the podcast does not exist, skelegen creates the channel. If the channel exists, skelegen adds the item to the channel. This is a flexible way of creating podcasts. Lastly, the "auto" flag automatically inserts a title and description for the item(s) / channel.

```
skelegen --generate /media/worm/radio/ --channel ~/public_html/podcast.xml
```
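skelegen's source is not reproduced on this page, so the following is only a hypothetical sketch of the add-or-create behaviour described above, not the script itself; the function name add_item and its arguments are assumptions. If the channel file does not exist, it writes a skeleton RSS 2.0 feed; either way, it then inserts an item element with an enclosure (URL, length in bytes and MIME type, the three attributes RSS 2.0 requires) just before the closing channel tag. For simplicity it uses a file:// URL, whereas a public podcast needs an http(s) URL, and it omits the link and description elements that RSS 2.0 also expects in a channel.

```bash
# Hypothetical sketch of an "add or create" step for an RSS 2.0 feed.
# Usage: add_item CHANNEL_FILE MP3_FILE [TITLE]
# Text is not XML-escaped, so titles and paths must not contain & < > or ".
add_item() {
  local channel=$1 mp3=$2 title=${3:-$(basename "$2" .mp3)}
  local length tmp
  length=$(wc -c < "$mp3" | tr -d ' ')   # enclosure length in bytes
  if [ ! -f "$channel" ]; then
    # No channel yet: create a skeleton feed
    printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>' '<rss version="2.0">' \
      '<channel>' "<title>$(basename "$channel" .xml)</title>" '</channel>' '</rss>' > "$channel"
  fi
  tmp=$(mktemp)
  # Copy the feed, writing the new item just before the closing </channel> tag
  awk -v title="$title" -v url="file://$mp3" -v len="$length" '
    /<\/channel>/ {
      print "<item>"
      print "  <title>" title "</title>"
      print "  <enclosure url=\"" url "\" length=\"" len "\" type=\"audio/mpeg\"/>"
      print "</item>"
    }
    { print }
  ' "$channel" > "$tmp"
  cat "$tmp" > "$channel"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}
```

Writing back with cat rather than mv keeps the feed's existing permissions, which matters for a file served from public_html.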

I turned the script into a zine using free and open source software. In particular I used Emacs, pandoc, ConTeXt and pdfcpu.

Creating a podcast with random content using cron

```
0 14 * * 7 skelegen --auto --add (random choice (tree -if /media/worm/radio/ | grep -Eo "(/[^/]+)+.mp3")) --channel /home/$USER/public_html/random-podcast.xml
```

This line shows how the shell script can be used to generate a podcast. Here I have combined the script with cron, an automatic task scheduler. The line goes in a crontab file and calls skelegen at 14:00 every Sunday. The cronjob adds a random .mp3 file from Worm's radio archive to a podcast channel called 'random-podcast'. The "auto" flag fills in the title and description, so the script runs to completion unattended. The podcast was inspired by Worm's 'random-radio', which plays when nobody is broadcasting live.
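Cron runs each entry with /bin/sh unless the crontab sets SHELL, so the fish-only (random choice ...) substitution in the crontab line above will not work there by default. One alternative, sketched below under the assumption that GNU coreutils' shuf is available, is to pick the random file in a sh-compatible function and call skelegen from a small wrapper script that cron runs. The function name random_mp3 is hypothetical.

```bash
# Print the path of one randomly chosen .mp3 file under a directory
# (default: the archive path used in the crontab line above).
random_mp3() {
  find "${1:-/media/worm/radio}" -type f -name '*.mp3' | shuf -n 1
}

# A wrapper script run from cron could then call skelegen like this
# (same flags as in the crontab line above):
#   skelegen --auto --add "$(random_mp3 /media/worm/radio)" \
#            --channel "$HOME/public_html/random-podcast.xml"
```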

Week 5

Week 6

Mixing A Tape

This wiki post was made through a combination of writing and voice recording techniques. What I am going to do is read what I have written and expand, where appropriate, upon what I have written before returning to the main flow of the text. The text itself is fairly short. However, I feel that by expanding on what I have written by speaking, it will become longer and more detailed. I then intend to transcribe what I have said, edit the material and post the edited transcription on the wiki. So, without further ado…

On the afternoon of Thursday 26 October and during most of Friday 27th, I made tape recordings. I am not referring to cassette tapes, but to long reels of tape. The tape I was recording onto was approximately 500 meters in length, and on the Friday I made a recording which ran the complete length of the tape. In what follows I discuss my experiences of recording onto tape.
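How long a reel lasts depends on the tape speed, which is not stated here. As a worked example, assuming two common reel-to-reel speeds of 7½ and 3¾ inches per second (with 1 in = 0.0254 m), the running time t of a tape of length L played at speed v is:

$$
\begin{aligned}
t &= \frac{L}{v} \\
t_{7.5\,\text{in/s}} &= \frac{500\ \text{m}}{7.5 \times 0.0254\ \text{m/s}} = \frac{500}{0.1905}\ \text{s} \approx 2625\ \text{s} \approx 43.7\ \text{min} \\
t_{3.75\,\text{in/s}} &= \frac{500\ \text{m}}{3.75 \times 0.0254\ \text{m/s}} = \frac{500}{0.09525}\ \text{s} \approx 5249\ \text{s} \approx 87.5\ \text{min}
\end{aligned}
$$

So roughly 500 m of tape runs for about 45 minutes at the faster speed and for about an hour and a half at the slower one.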

The most effective setup

I sent audio from my laptop to channels one and two on a 16-channel mixing desk. As we can see in the photo taken on the Thursday, I had several machines connected to each other. For the purposes of recording audio, connecting my laptop to the mixing desk was one aspect of this setup. On the Friday, I also plugged a radio into channels three and four. Working with the 16-channel mixing desk was far more effective than working with the eight-channel mixing desk. I did not take a photograph of my setup on the Friday, but there are two reasons why the setup with the 16-channel mixing desk was more effective. Firstly, the effects panel is not working on the eight-channel mixing desk, but it is working on the 16-channel mixing desk. Secondly, the eight-channel mixing desk picks up a lot of noise, whereas the 16-channel desk does not. I connected the 16-channel mixing desk to an Akai tape recording and playback machine. This machine belongs to one of Joseph's friends, and I made sure to be careful with it. According to Joseph, it was the best of the three tape machines available. Indeed, it was. I had difficulty getting the results I wanted with the hardware I was using on the Thursday. In short, I was recording sounds from two devices onto tape and using the mixing desk as a way to get these machines to communicate.

Drawing on the prototyping classes we had on the Monday and Tuesday was key to the activities I engaged in later in the week. In these prototyping classes, we looked at how to record sounds in analog and digital ways. We also created HTML audio mixers with functions for looping the audio file and adjusting the playback rate with greater granularity. I built upon this HTML soundboard by adding eight separate audio tracks and a volume slider. Each audio track was a file from a series of recordings in Worm's radio archive. There were many variables which allowed me to alter the sound, and these came from all of the hardware I was using. I had plugged the laptop running the mixer into the mixing desk, so that was one set of variables I could control (the playback volume and the playback speed). As I could also choose particular files and the time from which to play them, it was possible to layer these audio recordings. However, all the tracks were one hour in length, so it was easier just to reduce the playback rate so that the tracks continued to play for a very long time. In part, I was inspired by my previous collaboration with Victor, in which we made a tape recording and overlaid sounds onto the tape. I placed this idea in a digital context and played multiple tracks simultaneously for an overlaid sound effect. These signals went directly into the mixer, the analog mixer, the physical piece of hardware made by Behringer. Specifically, the signals went into channels one and two, which were panned left and right respectively. The signal was then sent to the first and second output bus channels. The hardware has four output bus channels, which meant that I was able to set four output levels individually.

Moreover, there were two pieces of hardware that I connected to the mixer. One was my laptop; the other was a radio. I added the radio into the mix on the Friday in order to get radio signals, partly because it had been mentioned that radio was effective back in week one. I wanted to retrieve similar sounds and combine them with other sounds on the tape. Sometimes it worked and sometimes it didn't. It was effective when I took out the connection between the radio and the mixing desk in order to tune the radio to a signal which I thought sounded right, before reintroducing the sound. I was able to do this because of the four output bus channels. The radio was plugged into input channels three and four, which were initially also panned left and right. Channels three and four were sent to output bus channels three and four. I could set the levels of each of these output bus channels individually, so I could fade the radio all the way down, take out the connection, find a signal that I liked, remake the connection and then reintroduce the sound of the radio.

Likewise, there were physical buttons on the mixer which controlled which output bus channels each input signal went to. It was possible to press eight of these buttons simultaneously with eight fingers and switch all the channels going to buses one and two to buses three and four, and vice versa. This had a really pleasant effect. If output bus channels one, two, three and four were set to different levels, then redirecting signals between the master faders produced a really nice effect. It was also good that the effects panel was working, because I was able to apply effects such as reverb, flange and delay to the signal. For example, I applied a flange effect to voices speaking live on the radio. Because the effects panel was broken on the eight-channel mixing desk, which I was using on the Thursday, it wasn't possible to apply these effects during that recording session.

The following table lists the variables which I was in charge of during the recording session.

| Laptop | Radio | Mixing Desk | Tape Machine |
|---|---|---|---|
| Playback Rate | Volume | Pan | Line levels |
| Volume | (Fine) Tuning | Gain | |
| Loop function | Tone | EQ | |
| | | Send buttons | |
| | | Effects | |
| | | Levels | |

Whilst experimenting with these machines it was important, above all, to monitor the levels of the lines into the tape machine. I wanted to be as careful as possible to ensure that the sound was not clipping or peaking and putting too much pressure on the tape machine. Conveniently, the tape machine has physical, analog indicators which show the level going through the left and right channels at any given time. These indicators move independently of one another. There's a lot of manual engagement with the technology, which lends itself to subtle differences in volume, pan and gain. This entails different experiences in the left and right channels. Furthermore, the sounds I was recording were stereo signals. For these reasons it's very important to monitor the levels going into channels one and two, because they can sometimes vary considerably.

Perhaps I will record more onto the tape I was using, to make it different. Currently, it's quite lengthy: it lasts for approximately an hour and a half, I imagine. It would be very straightforward to plug more devices into this setup. I think there's an opportunity to add to the content of the tape, to change it up and to introduce other tapes halfway through at different times. This would add more discontinuity. But to return to the table: on the mixing desk there was, as I mentioned, a pan control as well as an equalizer. The equalizer was nice. If the sound got too bassy, I could turn the bass down, or if I wanted to emphasise the treble, I could increase the treble level. In general, one could record a variety of sounds onto the tape using the mixing desk as an intermediary.

The influence of live coding

I have titled this section 'The influence of live coding' because of the performative quality of experimenting as I did. In short, I regard performance as the connective tissue which binds live coding to recording onto tape. In my previous broadcast with Senka and Lorenzo I attempted to live code with sounds taken from Worm's radio archive. That was an exciting yet challenging experience for several reasons. Firstly, my typing was quite audible, which disrupted the experience of listening to the algorithms because there was a thumping noise in the background. Secondly, live coding was tricky because the samples varied a great deal in length. Normally I live code with percussive samples and semi-percussive tones, so working with samples of various lengths was challenging. However, I believe the tape recorder experiment could have benefited from working with samples of various lengths. I selected audio recordings from Worm's archive using broadcasts from the same folder. Presumably they were all part of the same series of broadcasts. All were exactly an hour in length, and I hoped their similarities would produce an aesthetically consistent effect.

However, the tracks were somewhat dissimilar in content. There were times when you heard people shouting, times when there was heavy club music and other times when there were bird noises, for example. So there was a lot of variety in these shows, but primarily I used instrumental sounds. Shorter sounds might have been effective because of the loop functionality of the HTML mixer I had made: the ability to loop could have been used to rhythmic effect. That's not something I attempted. Still, I imagined using the browser-based mixer as a live interface with which to create sounds on the recording. It's not live coding, just mixing sounds in the browser. Nevertheless, I've been reflecting on my live coding practice, having spent some time in a wood workshop and in a ceramics workshop. It was interesting to work with these materials. I had worked with them in a limited capacity as a pre-teenager but not since, and it's interesting to come back to them after so many years. They had never featured in my practice in any way whatsoever. I spent one afternoon in the wood workshop turning wood, just to see what it was like, and creating various curved shapes out of a square block of wood was extremely satisfying. It got me thinking about crafting materials. With the ceramics, the plasticity of the material, the ability to transform it into so many different shapes, made it difficult to work with. On the whole, cutting wood with machines is more mathematical than preparing and shaping clay.

I started to think about these experiences in terms of my own practice. For me it's about shaping something. I was thinking about the materiality of code and about shaping code in the same way that one shapes a piece of wood or a block of clay. With live coding, one can start with a very simple algorithm and expand it into a different shape, apply other variables, pass different keywords to the algorithm or construct it differently. It's possible to shape visual and sonic output in a way analogous to shaping wood and clay. I hadn't thought about live coding in that way before. I thought about it, in part, as a demonstration of how I was using a computer, because I felt it was quite different to the way many other people use computers. Also, live coding is performative and I was trying to make performance art, so live coding seemed like a practical solution. I didn't think about it in relation to workshop practices involving materials such as clay and wood.

In a sense, the experiment required me to turn from software to hardware. I was using buttons and dials to shape the sound and experiment with different effects. What happens if I switch the button to a different position? How do I ensure that the signal coming through is loud and clear? What happens if the bass is too high and needs to be reduced? It was deliberate experimentation with particular constraints, except that the constraints are there in front of you. They're not concealed within the source code, even open source code, because there is a physical interface to work with.

Week 7

Broadcast Seven

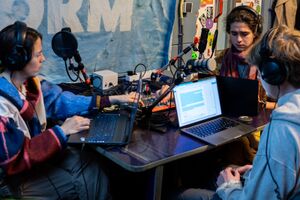

On the morning of Halloween 2023, Senka, Victor and I met at Worm to broadcast a radio show. The title of the broadcast was 'Personal Accounts of Irreplaceable Lace: A 200 year long history of lost and found'. This wiki post discusses that broadcast. I focus on the discussions we had in the run-up to the show, the broadcast itself and the feedback given in the Aquarium during the afternoon.

Making the Broadcast

In preparation for the broadcast I recorded a 45 minute long 1/4" tape. That process is discussed here. Initially, there was a plan to transport the tape machine to the Radio Booth at Worm. In the end, I decided against this idea as the machine belonged to one of Joseph's friends and the bag for transporting the equipment did not seem very sturdy. It would have been necessary to carry the machine to Worm (it's heavy) and bring it back again. To reduce the chances of potentially damaging the machine, I instead plugged the Akai GX-4400D into a Sony IC Recorder ICD-PX470. Conveniently, the handheld recorder has a line input which made making a digital copy quite simple. The digital file was then broadcast on Tuesday.

Senka, Victor and I had three meetings in advance of the broadcast. During these meetings we shared ideas, produced material and rehearsed what we would perform. Our first meeting was mostly conversational and sought to demarcate the direction in which we would take the broadcast. There was discussion of aliens and zombies, resources were shared and the decision was made to craft a narrative. Our next meeting was both longer and more productive. We figured out that the narrative would be split into three sections: past, present and future. The narrative would span 200 years, from 1928 to 2128. We came up with names for the archivists who would be the protagonists of the narrative: Erhka and Akhre. These names are anagrams of Arkhe, the Greek word from which the English term "archive" is derived. Erhka, it was decided, would be an archivist from the past, whilst Akhre would be the archivist from the future. Victor took the future, Senka took the past and that left me with the present. The middle section of the broadcast was structured a bit differently to the opening and closing sections. Rather than writing character-driven, fictional voice memos, I spoke as a narrator and discussed the 'history' of the archive between 1998 and 2057. This was with the agreement of Senka and Victor.

During our second meeting, we worked with three pieces of paper, one for each time period. We decided an 'exquisite corpse' would be an effective way to generate material and introduce a diversity of ideas into the narrative. Each of us had a say in each section of the storyline, providing ideas, keywords and possible plot points. We then transcribed the paper-based notes onto an Etherpad so that each of us could be reminded of the content of the discussion and what had been written down. Because it was Halloween, we were interested in making a story with elements of Gothic horror, but also speculative fiction. We explored dichotomies and themes which would characterise each time period. The following table illustrates these themes.

| Past | Present | Future |
|---|---|---|
| Birth of Modernism | Liminal | Solar Punk |
| Industrial Revolution | Translation | Alive (Undead) |
| Consumer Society | Passion (Passive + Active) | Hopeful |
| European politics | Change (Climate (/) Politics) | Repurposed |
| Disposable things | | |

To forge a coherent narrative, I built on what had been discussed in our meetings and the copy which had been written in the Etherpad. For example, Senka wrote about the qualities of Lace, a fictional audio recording material which we invented and around which the narrative unfolded. There was a practical reason for inventing the material: at first we had planned simply to use tape. However, whilst researching the history of tape, Senka discovered something surprising. Tape was invented in Germany in the 1920s, but it was kept a secret and was not widely used until the end of WWII. After finding this out, Senka wrote about it in the Etherpad and made two suggestions about how we might proceed:

- Adjust the dates of the fictional narrative to fit with the history of tape

- Write an alternative history in which WWII never happened

We agreed that the first option was inconvenient; beginning in the 1950s would have disrupted what Victor and I had written. The second option was inspired by a podcast Senka had heard of called 'Within the Wires'. This podcast was a fictional narrative which imagined a 20th century without the concept of the nuclear family. To paraphrase Senka, the podcast elaborated an alternative history in which WWII never happened, partly because nation states looked very different in a world where nuclear families were considered barbaric. I suggested that whilst that approach might work for that podcast, it might not work for our broadcast. An alternative, I suggested, was to imagine a fictional audio recording medium. Doing so would allow us to keep the dates the same without straying into the murky territory of alternative histories about WWII. Senka responded with an enthusiastic 'YES' in the Etherpad, and so we went ahead with the history of Lace. I decided to call the material Lace because I thought that flat shoelaces looked a bit like magnetic tape, but were sufficiently different.

Senka went on to describe the qualities of this fictional material. I expanded on Senka's discussion of the medium, referring to its 'gummy' quality and the optimal temperature at which it should be stored. This provided consistency in the narrative. As the author of most of the material set in the present, I also endeavoured to leave the plot open so that Victor could pick up on narrative strands in the future. Likewise, I was inspired by what he had written and sought to write copy which would bridge the lives of Erhka and Akhre.

At our third meeting, I played Victor and Senka the tape I had made. Whilst doing so, we read through the material we had produced to check how long it would take to read aloud. Following the read-through, we gave each other feedback, highlighting plot points and discussing opportunities to make the broadcast more coherent. We then developed the written material further through another exquisite corpse exercise. With regard to the structure of the broadcast, we decided to intersperse the sounds of the tape with the spoken narrative. This was both a practical decision, since it was difficult to speak over the noisy sounds, and an aesthetic one, since it suggested the passing of time. At this meeting, I also recorded the sound of the tape being rewound from the end to the beginning. Conversely, I recorded the tape being played on fast forward from the start to the end. This gave us an additional ten minutes of audio material.

Broadcasting on Tuesday

We met at around 9:45 at Worm to set up for the broadcast. I had borrowed a mini-jack-to-phono cable and two phono-to-jack adaptors from the XML lab to connect my laptop to the mixing desk. Generating pre-recorded material and playing it on air was pragmatic for several reasons. Firstly, we knew how much material we would have. Secondly, and more importantly, it allowed us to mute the microphones in the radio booth and confer with each other about our next steps during the broadcast. This was in contrast to the first broadcast I made with Lorenzo and Senka in week three. Then, the mics were live for much of the broadcast, which gave us little opportunity to discuss things with each other and cue various moments.

Unbeknown to us caretakers, the streaming software which normally runs in the background on the computer in the radio booth was not running. As a result, it was only after forty minutes that we realised we were not broadcasting what we were performing, when Senka's partner messaged Senka to ask when we were going to start speaking. It turned out that ska music and string instruments had been broadcast for a third of the time that we were supposed to be on air. Fortunately, we were recording everything, and there was an opportunity to replay the 'lost' audio to the group on Tuesday afternoon. The group agreed that doing so made the narrative easier to follow.

We encountered a second technical hitch during the broadcast. Fortunately, this arose just after we had fixed the streaming issue, so we were not confronted with two problems simultaneously. In short, Audacity stopped working. Why? Because the file had not been saved to the correct location and the computer's hard drive rapidly became full. Thankfully Florian was there, and he helped us fix both issues as they arose. A couple of minutes of the tape playback are missing from our recording of the broadcast, but this was a relatively inconspicuous loss. It was a stroke of luck that the recording did not stop whilst we were talking and telling the narrative.

Feedback following the broadcast

Because of the technical difficulties we encountered in the morning, part of the afternoon was spent listening to the section which had been missed. This was important because none of what Senka had written and read aloud had been broadcast, which made the narrative difficult to understand. It was good, therefore, to have time to listen to this section of the broadcast in the afternoon.

As Thijs pointed out, we caretakers had messaged the group encouraging everyone to stay at home, rather than meet in the Aquarium for a more comfortable listening experience. We also did not share information about what we would be broadcasting, preferring to keep it a secret and a surprise. During the feedback session in the afternoon, I contended that, moving forwards, it would be better if we were more open with each other about the content of our broadcasts. There were two reasons for this. Firstly, it was a practical matter. If something were to go wrong, as it did, keeping each other in the loop about what to expect would mean that technical issues could be detected more quickly and resolved sooner. Secondly, it was a matter of audience. We are aiming to reach audiences beyond our own group, so there are opportunities for us to contribute to, give feedback on and shape each other's practices before moments of publication. We are valuable resources for one another, and we can afford to take advantage of that. I emphasised that the broadcasts, the moments at which things are made public, can still be novel and exciting for wider audiences.

Another key takeaway from the feedback session was that it would have been interesting to talk about the methods we used to make the broadcast within the broadcast itself. This had occurred to me, in a slightly different guise, during the week. We decided not to do this because we wanted to immerse the audience in the narrative. However, half of the broadcast consisted of prerecorded audio, which could have been cut down somewhat to allow for a discussion of the methods we used to make the narrative.

See Also

Week 8

Editorial Team Meeting Recap

I took the bakfiets from WORM to Karel Doormanhof this afternoon. When I arrived back at the Wijnhaven building, I found that two groups had formed: the editorial team and the installation team. I joined the editorial team and spoke with Senka, Mania and Michael about what had been going on, asking for the Reader's Digest version of the conversation so far. It had been decided to hold a series of interviews for the special issue. Connecting with people at WORM, such as Ash, Lieuwe and Lukas, would be the starting point.

Possible Questions for Structured Interviews with Radio Makers

We spoke about interviewing radio makers in a structured way: that is, putting the same questions to each interviewee, so that the answers could be tabulated and compared across interviews.

Here are the questions which were written in the Etherpad. Perhaps we could vote on these to decide what to ask radio makers?

- Who are your listeners?

- Who are you listening to?

- Do you have an archive?

- Is the element of liveness important to you (does “archiving” matter)?

- How do your listeners use it?

- How did you start with Radio Worm?

- Why/What is important to make public / in making a public?

- What does (Radio) Worm mean to you (two questions in one)?

- What do you choose not to broadcast? (public / private) What are the errors? (Ash’s example of turning on the microphones to hear the way decisions get made)

- What languages do you use in your radio? (access and who can understand these languages)

- Would you like to be included in the archive and if so how? (Are there “other” materials/recordings that could represent your broadcast / audience)

- How do you share the (radio) air?

- What do you find important to transmit and broadcast and why?

Beyond Audacity

Mania and I had interviewed several people at Zine Camp for the presentation about the Special Issue which took place on the Sunday. We recorded these conversations on my Sony handheld recorder. On the Saturday I edited the audio for the presentation in Audacity. I spoke with Hackers & Designers (H&D) about their tools and collective practices. At one point I put forward the following question:

To which the response was

Thus, I was glad we were working in small groups. When asked about the nature of collaboration in H&D this member of the group emphasised that

I raised this point with the group in relation to working with sound files in Audacity.

Weeks 9 and 10

Week 9

Broadcast Tuesday

I offered to be one of four caretakers for the broadcast on Tuesday, and I uploaded the file to chopchop on 21st November. The broadcast was split into three parts. In the first part, Senka and Maria spoke about the field report they had written the previous Wednesday. I sat at the table but said nothing for this part of the broadcast. If I recall correctly, I also did not speak, nor did I vote, during the middle section, when we chose a metaphor. I did not vote early on because I wanted to hear each of the metaphors before casting a vote. By the time I was ready, my vote would have resolved a tie between four possible metaphors. I could have thrown my vote away on purpose, giving it to a metaphor not involved in the tie. Alternatively, I could have resolved the tie. I did neither. In the last part of the broadcast, I spoke during a reading of the fictional piece which Thijs, Michel, Victor and I had written the previous Wednesday. We, the caretakers, read it collectively.

Prototyping for the apocalypse

Rosa and I met in the studio during the week and made some wiki documentation about our ideas. We met up in the studio on Friday to develop ideas further. I wrote two bash scripts. One of these was called fm2vtt. Files with a .fm extension look like this:

```
Hello, I am Caption One
https://hub.xpub.nl/chopchop/~river/podcasts/2a8d64aa-7867-415e-9837-7c1bd63a6d9a/e/fb41c2a6152b6732b6f6c7f95d975a6102ea91baf6c612f15d80a8fddb8b617b/recording.mp3#t=04:27.207
I am caption two
```

The following script can be executed on chopchop to see fm2vtt in action.

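```bash
#!/usr/bin/env bash
# Usage: curl 'https://pad.xpub.nl/p/script-for-rosa-nov-16/export/txt' | bash
set -euo pipefail

dir="$HOME/fm2vtt"
mkdir -p "$dir"

# Fetch a sample .fm file and the fm2vtt script from their Etherpad exports
# (-f makes curl fail on HTTP errors instead of saving an error page).
curl -fsS "https://pad.xpub.nl/p/sample.fm/export/txt" > "$dir/sample.fm"
curl -fsS "https://pad.xpub.nl/p/fm2vtt/export/txt" > "$dir/fm2vtt"
chmod +x "$dir/fm2vtt"

# Convert the sample to WebVTT and print the result.
"$dir/fm2vtt" -i "$dir/sample.fm" -o "$dir/out.vtt"
cat "$dir/out.vtt"
```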

The above script is unlikely to survive in an apocalyptic context. However, Rosa introduced me to DuskOS/CollapseOS, and I got the former working on my machine. Writing a version of the fm2vtt script for that operating system is not feasible, though, at least not in time for the special issue release. Nevertheless, fm2vtt is a useful script for creating WebVTT subtitle files. It arose primarily from the following question: how can we collaborate on audio files without using Audacity?
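The fm2vtt script itself lives on the Etherpad and is not reproduced here. As a rough illustration of the idea, here is a minimal sketch of an .fm to WebVTT conversion, assuming the format works as guessed from the sample: a line carrying a `#t=MM:SS.mmm` media fragment marks a time, and every other non-empty line is a caption. The function name `fm_to_vtt` and the fixed five-second duration given to the final caption are hypothetical, not taken from fm2vtt.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of an .fm -> WebVTT converter. This is NOT the
# fm2vtt script from the Etherpad; the parsing rules are assumptions:
#   - a line containing "#t=MM:SS.mmm" is a media URL marking a time;
#   - every other non-empty line is caption text;
#   - each caption runs from the previous time (or 0) to the next one;
#   - the final caption is given an arbitrary 5-second duration.

fm_to_vtt() {
  awk '
    # "MM:SS.mmm" -> seconds
    function secs(t,   p) { split(t, p, ":"); return p[1] * 60 + p[2] }
    # seconds -> "HH:MM:SS.mmm", the WebVTT timestamp format
    function stamp(s,   h, m) {
      h = int(s / 3600); m = int((s - h * 3600) / 60)
      return sprintf("%02d:%02d:%06.3f", h, m, s - h * 3600 - m * 60)
    }
    # print any pending caption as a cue ending at time e
    function flush(e) {
      if (text != "") { print stamp(start) " --> " stamp(e); print text; print "" }
      text = ""
    }
    BEGIN { print "WEBVTT"; print ""; start = 0; text = "" }
    index($0, "#t=") {
      t = secs(substr($0, index($0, "#t=") + 3))
      flush(t); start = t; next
    }
    NF { text = (text == "" ? $0 : text "\n" $0) }
    END { flush(start + 5) }
  ' "$1"
}
```

For example, `fm_to_vtt sample.fm > out.vtt`. Using awk keeps the sketch within standard Unix tools; if the times in .fm files actually mean something else (for instance, the start of the preceding caption), the `index($0, "#t=")` rule is the part to change.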

Saturday 18th: Extratonal Infrastructure 9

On Saturday 18th I had a gig at Varia, and I spent some time preparing for the performance in the run-up to it. At the event I performed for 20 minutes with Fluxus and TidalCycles, both of which are free/open-source software. Here’s some of the feedback I received from the audience:

‘Congratulations’

‘You’re so fast at coding’

‘It’s very interesting what you are doing, I’ve never seen this before’

‘You created a universe and I cannot do that’

‘You should create more compositions’

The audience were quiet throughout the performance. Perhaps it would have been better if more of them had been seated. However, I chose not to make the audience sit down, though some voluntarily sat on the floor. In any case, I was moderately satisfied with the performance. It hadn’t changed much since I last performed it in March; if anything, I was a bit rusty. After the concert I had a good conversation with the violinist about music theory. In particular, I found out about the tonic, subdominant and dominant pattern within scales.

Now that I have done my performance at Varia, I am thinking about performance practices involving free software which do not feature live coding. I amassed a considerable amount of material in preparation for the performance, including at least 41 practice sessions from November 2023. I could run shell commands on these files to gather statistics about them. Perhaps there’s a way I can incorporate this into the .fm (fragmented media) file type.
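As an illustration of the kind of shell commands meant here, a minimal sketch that counts practice-session files and totals their lines, words and bytes. The function name `session_stats`, the folder layout and the default `.tidal` extension are assumptions; the actual session files may be stored and named differently.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: summary statistics for a folder of practice-session
# files. The folder layout and the default "tidal" extension are assumptions
# made for illustration; adjust both to match the actual files.

session_stats() {
  local dir=$1 ext=${2:-tidal}
  local files counts

  # Number of matching files, including those in subfolders
  # (tr strips the padding some wc implementations add).
  files=$(find "$dir" -type f -name "*.${ext}" | wc -l | tr -d ' ')

  # Combined line, word and byte counts; wc always prints them in that order.
  counts=$(find "$dir" -type f -name "*.${ext}" -exec cat {} + | wc -l -w -c)

  # Split the three counts into $1 $2 $3 (word splitting is intended here).
  # shellcheck disable=SC2086
  set -- $counts
  printf 'files %d\nlines %d\nwords %d\nbytes %d\n' "$files" "$1" "$2" "$3"
}
```

For example, `session_stats ~/practice`, or `session_stats ~/practice hs` for a different extension. If no files match, every count is reported as 0.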

Sunday 19th

On Sunday 19th November I attended a concert of free improvised music at WORM. The concert took place in three parts and involved five musicians. The first part consisted of three acts: first, two double bassists played together; then came a solo act by a violinist and composer; lastly, a percussionist and a saxophonist played together. We took a break before the second part of the concert, in which the five musicians were split into two improvisational acts. Finally, all the musicians played together. All acts were improvised.

At one point I listened to a saxophone which was evidently full of spit. At other moments, the bassists placed sticks or metal objects between the strings of their instruments and continued to play as if that were normal. They demonstrated a variety of techniques for playing double bass: bows were run across the strings at right angles, strings were retuned mid-performance, and percussive elements featured too. I had never previously witnessed two bass players improvising simultaneously, and it was a pleasure to listen to. At times I thought to myself, ‘this is like jazz’, but it was closer to free improvisation than to jazz. It was interesting to think about the concert in the context of my own performance practice.

Week 10

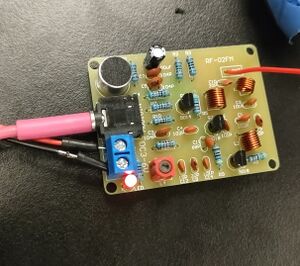

Nov 21st: Soldering

On November 21st I soldered an FM radio transmitter together. This was the first time I had ever soldered anything and it worked! I learned from Victor. It was enjoyable to practice my soldering technique with others and talk about things. I didn’t get a photograph of the soldering process or the underneath of the Printed Circuit Boards.

Nov 24th and 25th

Open Sound Control (OSC)

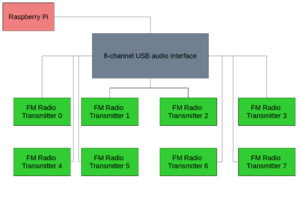

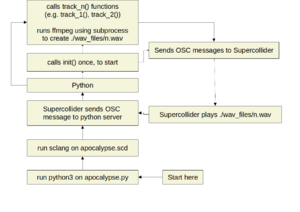

OSC is a communication protocol used by many pieces of audio software and hardware. During weeks nine and ten, Rosa and Riviera began developing a prototype for the FM radio transmitters (Figure 1). There are several of these transmitters and an equal number of receivers. On these transmitters we intend to broadcast excerpts from Worm’s radio archive, including the contributions we have made as part of SI#22. To achieve this, Riviera and Rosa realised it would be necessary to develop some software. Figure 2 illustrates the concept we have in mind for the hardware. We believe this setup is more pragmatic than connecting a Raspberry Pi to each radio transmitter, although that would also be possible. Figure 3 illustrates how the software works.

A SuperCollider server running on the Raspberry Pi expects the Pi to be connected to a USB audio interface with eight outputs. The SuperCollider server listens for OSC messages on port 58110. Also running on the Pi is another OSC application based on the python-osc library. The Python application consists of a server, a dispatcher and a client. The dispatcher is essential because it routes each message the server receives to a handler, which can then call the client. The Python client sends OSC messages to the SuperCollider server, and SuperCollider sends messages back to the Python server. The OSC messages contain a ‘channel’ and a path to a WAV file. To run the system, first start the Python server, then execute the SuperCollider document with sclang. SuperCollider will introduce itself to the Python server, and the process of playing audio files then enters a loop.
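To make the message format more concrete, here is a sketch of how a message carrying a channel (an OSC int32) and a WAV path (an OSC string) is laid out as bytes under the OSC 1.0 encoding: a NUL-padded address, a type tag string, then arguments aligned to 4 bytes. It uses only the shell's printf. The address `/play`, the argument order and the function names are hypothetical; the messages actually exchanged between the python-osc application and SuperCollider may use different addresses.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: encode an OSC message with a channel (int32) and a
# WAV path (string). The address "/play" and the argument order are
# assumptions, not the addresses used by the project's software.
# String lengths are counted in characters, so paths are assumed ASCII.

# OSC string: the characters, then 1-4 NUL bytes so the total length is a
# multiple of 4 (a string already 4-aligned still needs a terminating NUL,
# so it gets four).
osc_string() {
  local s=$1
  local pad=$(( 4 - ${#s} % 4 ))
  printf '%s' "$s"
  while [ "$pad" -gt 0 ]; do
    printf '\0'
    pad=$((pad - 1))
  done
}

# OSC int32: four raw bytes, most significant first (big-endian),
# each emitted via a three-digit octal escape.
osc_int32() {
  local v=$1 bits
  for bits in 24 16 8 0; do
    printf "\\$(printf '%03o' $(( (v >> bits) & 255 )))"
  done
}

# Full message: address pattern, type tag string ",is", then the arguments.
osc_play_message() {
  local channel=$1 wav=$2
  osc_string "/play"
  osc_string ",is"
  osc_int32 "$channel"
  osc_string "$wav"
}
```

An OSC message must arrive as a single UDP datagram, and each printf above may be written out separately, so it is safer to write the message to a file first and send it in one go, e.g. `osc_play_message 3 /path/to/file.wav > msg.bin && cat msg.bin > /dev/udp/127.0.0.1/58110` (`/dev/udp/...` is a bash redirection feature, not a real file).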

Launch Material

Listen Closely

Technical and Spiritual Manual for Post-apocalyptic Radio Making

The Technical and Spiritual Manual for Postapocalyptic Radio Making was produced as part of SI22: Signal Lost, Archive Unzipped. The text was composed of two parts, which concerned the how and the why of postapocalyptic radio making. Part one consisted of several diagrams illustrating how to make various pieces of FM radio equipment, such as transmitters and receivers. The second part included interviews with radio makers at Radio Worm. The Manual was licensed under the Collective Conditions for Re-use (CC4r). This license was developed by Constant and informs the following discussion of the text.

In short, the interviews had many transcription errors. Concerns were raised about publishing a text which did not accurately represent the people directly concerned with the material. There was a sense that interviewees were disappointed by the way the transcripts had mangled their spoken words, and apprehension about interviewees having words attributed to them which they never said. These apprehensions were raised by an interviewee after the event, in the context of a reflective discussion. Mindful of these concerns, I have been selective about the material presented in the slideshow and have not included excerpts from the interviews.

My questions are as follows: does the CC4r provide a framework that assists with navigating the ethical concerns outlined above? Or do the ethical concerns override it? The Manual was contentious. My contribution to the text was primarily in typesetting it with ConTeXt. As Maria has pointed out, however, I also rendered the transcripts, which were, with minor changes here and there, placed in the Manual.

Perhaps I could have been more careful about which pages from the text to upload to the Wiki. I was clear with myself that I would not upload pages from the interviews. However, I did not fully take into account other concerns raised by interviewees, which I have touched on tangentially above. Instead, what I uploaded sought to demonstrate capabilities afforded by the ConTeXt typesetting software. I also wanted to share some tricks I had picked up relating to vertical spacing. Given how likely someone is to encounter documentation of the Manual, and where, I have removed images from the slideshow which may be considered contentious.

I believe that scope for making such mistakes is built into the CC4r. On the one hand, the license ‘favours re-use and generous access conditions’ (Constant, 2023). On the other hand, it notes ‘that there may be reasons to refrain from release and re-use’ (Ibid.). Suppose I were to frame an act of release or re-use in terms of an antonymous or pseudonymous term. Is this a way to sidestep or shift the terms of what is at stake in the CC4r, namely re-use? For example, if I spoke of redistributing these parts of the Manual rather than re-using them, would that be coherent with the CC4r? What does re-use become in such contexts? Is such a version of events a satisfying outcome of taking the ‘implications of (re-)use into account’ (Ibid.)?

https://hub.xpub.nl/bootleglibrary/book/866

Bibliography

Barthes, R. (1977) ‘The Death of the Author’, in Image, Music, Text, London, Fontana, pp. 142–148.

Constant (2023) CC4r * COLLECTIVE CONDITIONS FOR RE-USE [Online]. Available at https://constantvzw.org/wefts/cc4r.en.html (Accessed 28 September 2023).

Groys, B. (2009) ‘Religion in the Age of Digital Reproduction’, e-flux journal, no. 4 [Online]. Available at https://www.e-flux.com/journal/04/68569/religion-in-the-age-of-digital-reproduction/ (Accessed 28 September 2023).

Mauro-Flude, N. (2008) ‘Linux for Theatre Makers: Embodiment & Nix Modus Operandi’, in Mansoux, A. and Valk, M. de (eds), FLOSS+Art, Poitiers, France, GOTO 10, in association with OpenMute, pp. 206–223.

Nishida, K. (1987) Last writings: nothingness and the religious worldview, Honolulu, University of Hawaii Press.

Phelan, P. (1993) ‘The ontology of performance: representation without reproduction’, in Unmarked: the politics of performance, London; New York, Routledge, pp. 146–166.