User:Riviera/Notes from week 10

Recap of last week

Broadcast Tuesday

I volunteered to be one of four caretakers for the broadcast on Tuesday, and I uploaded the broadcast file to chopchop on 21st November. The broadcast was split into three parts. In the first part, Senka and Maria spoke about the field report they had written the previous Wednesday. I sat at the table but said nothing during this part. If I recall correctly, I also neither spoke nor voted during the middle section, when we chose a metaphor. I held back my vote at first because I wanted to hear each of the metaphors before casting it. By the time I was ready, four metaphors were tied, so my vote would have decided between them. I could have deliberately thrown my vote away on a metaphor outside the tie, or I could have resolved the tie. I did neither. In the last part of the broadcast, we, the caretakers, collectively read the fictional piece that Thijs, Michel, Victor and I had written the previous Wednesday, and I spoke during that reading.

Prototyping for the apocalypse

Rosa and I met in the studio during the week and wrote some wiki documentation about our ideas, then met up in the studio again on Friday to develop them further. I wrote two bash scripts. One of them, fm2vtt, converts .fm files into WebVTT subtitle files. Files with a .fm extension look like this:

```
Hello, I am Caption One

https://hub.xpub.nl/chopchop/~river/podcasts/2a8d64aa-7867-415e-9837-7c1bd63a6d9a/e/fb41c2a6152b6732b6f6c7f95d975a6102ea91baf6c612f15d80a8fddb8b617b/recording.mp3#t=04:27.207

I am caption two
```
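The `#t=04:27.207` at the end of the URL is a Media Fragments time offset, which is written differently from a WebVTT timestamp. As an illustration of that one conversion step, here is a minimal sketch in bash. `fragment_to_vtt` is a hypothetical helper, not code taken from fm2vtt, and it assumes the fragment holds a single time in seconds, mm:ss or hh:mm:ss form (an `npt:` prefix or a `,end` part is simply dropped):

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of fm2vtt): turn the temporal media fragment
# at the end of a URL, e.g. ...recording.mp3#t=04:27.207, into a WebVTT
# timestamp of the form hh:mm:ss.ttt.
fragment_to_vtt() {
  local url=$1 t
  # Give up if the URL carries no "#t=" fragment.
  [[ $url == *"#t="* ]] || return 1
  t=${url##*"#t="}   # keep what follows "#t="
  t=${t%%,*}         # drop an end time ("start,end")
  t=${t#npt:}        # drop an optional "npt:" prefix
  awk -v t="$t" 'BEGIN {
    # Accept ss, mm:ss or hh:mm:ss, with optional fractional seconds.
    n = split(t, part, ":")
    secs = 0
    for (i = 1; i <= n; i++) secs = secs * 60 + part[i]
    ms = int(secs * 1000 + 0.5)   # round to whole milliseconds
    printf "%02d:%02d:%02d.%03d\n", int(ms / 3600000), int(ms / 60000) % 60, int(ms / 1000) % 60, ms % 1000
  }'
}
```

For example, `fragment_to_vtt 'recording.mp3#t=04:27.207'` prints `00:04:27.207`, which could then stand on either side of the `-->` in a WebVTT cue timing line.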

The following script can be executed on chopchop to see fm2vtt in action.

```bash
#!/usr/bin/env bash
# curl 'https://pad.xpub.nl/p/script-for-rosa-nov-16/export/txt' | bash
set -euo pipefail

dir="$HOME/fm2vtt"
mkdir -p "$dir"

# Fetch a sample .fm file and the fm2vtt script from the pads.
curl -fsSL "https://pad.xpub.nl/p/sample.fm/export/txt" -o "$dir/sample.fm"
curl -fsSL "https://pad.xpub.nl/p/fm2vtt/export/txt" -o "$dir/fm2vtt"
chmod +x "$dir/fm2vtt"

# Convert the sample to WebVTT and print the result.
"$dir/fm2vtt" -i "$dir/sample.fm" -o "$dir/out.vtt"
cat "$dir/out.vtt"
```

The above script is unlikely to survive in an apocalyptic context. However, Rosa introduced me to DuskOS/CollapseOS, and I got the former working on my machine. I won't be able to write a version of fm2vtt for that operating system, though, at least not in time for the special issue release. Nevertheless, fm2vtt is a useful script for creating WebVTT subtitle files. It grew primarily out of the following question: how can we collaborate on audio files without using Audacity?
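For reference, a WebVTT file begins with the line `WEBVTT`, followed by cues separated by blank lines; each cue has a timing line (`start --> end`) followed by the caption text. The sketch below writes such a file from a hypothetical `start|end|text` input, one cue per line. It only illustrates the output format and is not how fm2vtt itself reads .fm files:

```bash
#!/usr/bin/env bash
# Sketch: print a minimal WebVTT document from "start|end|text" lines on stdin.
# The pipe-separated input format is hypothetical, chosen for illustration;
# it is not the .fm format that fm2vtt reads.
write_vtt() {
  printf 'WEBVTT\n'
  local start end text
  # The "|| [[ -n $start ]]" keeps a final line that lacks a trailing newline.
  while IFS='|' read -r start end text || [[ -n $start ]]; do
    # Each cue: a blank line, the timing line, then the caption text.
    printf '\n%s --> %s\n%s\n' "$start" "$end" "$text"
  done
}
```

Piping `00:00:00.000|00:04:27.207|Hello, I am Caption One` into `write_vtt` would produce a one-cue file whose caption is shown for the first 4 minutes 27.207 seconds.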

Saturday 18th: Extratonal Infrastructure 9

On Saturday 18th I had a gig at Varia, and I spent some time preparing for it in the run-up. At the event I performed for 20 minutes with Fluxus and Tidal Cycles, both of which are free/open-source software. Here’s some of the feedback I received from the audience:

‘Congratulations’

‘You’re so fast at coding’

‘It’s very interesting what you are doing, I’ve never seen this before’

‘You created a universe and I cannot do that’

‘You should create more compositions’

The audience were quiet throughout the performance. Perhaps it would have been better if more of them had been seated. However, I chose not to make the audience sit down, although some voluntarily sat on the floor. Overall I was moderately satisfied with the performance. It hadn’t changed much since I last performed it in March; if anything, I was a bit rusty. After the concert I had a good conversation with the violinist about music theory. In particular, I learned about the pattern formed by the tonic, subdominant and dominant (the first, fourth and fifth degrees) within scales.

Now that I have done my performance at Varia, I am left thinking about performance practices with free software that do not involve live coding. I amassed a considerable amount of material while preparing for the performance, including at least 41 practice sessions from November 2023. I could run shell commands on these files to gather statistics about them. Perhaps there is a way to incorporate this into the .fm (fragmented media) file type.
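Gathering such statistics could start with something as simple as the sketch below. It assumes the practice sessions are plain-text files (for example Tidal Cycles code) collected in one directory; the function names and the directory are hypothetical, and the measures chosen (file count, line count, most frequent words) are only examples:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for summarising a folder of practice sessions.
# Assumes plain-text files whose names contain no newlines.

# Number of files, total number of lines, and mean lines per file
# (integer division, so the mean is rounded down).
session_stats() {
  local dir=${1:?usage: session_stats DIR} files lines
  files=$(find "$dir" -type f | wc -l)
  lines=$(find "$dir" -type f -exec cat {} + | wc -l)
  # Arithmetic expansion strips any padding that wc puts around numbers.
  files=$((files)) lines=$((lines))
  printf 'files: %d\n' "$files"
  printf 'lines: %d\n' "$lines"
  if (( files > 0 )); then
    printf 'mean lines per file: %d\n' "$(( lines / files ))"
  fi
}

# The N most frequent words across all files (default 10), most frequent first.
top_words() {
  local dir=${1:?usage: top_words DIR [N]} n=${2:-10}
  find "$dir" -type f -exec cat {} + |
    tr -cs '[:alnum:]' '\n' |   # one word per line
    grep -v '^$' |              # drop empty lines
    sort | uniq -c | sort -rn | head -n "$n"
}
```

Running `session_stats ~/practice-sessions` or `top_words ~/practice-sessions 20` (with a hypothetical folder name) would give a first overview; the word counts could, for instance, show which patterns or samples recur most across sessions.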

Sunday 19th

On Sunday 19th November I attended a concert of free and improvised music at WORM. The concert took place in three parts and involved five musicians. The first part consisted of three acts: first, two double bassists played together; then came a solo act by a violinist and composer; lastly, a percussionist and a saxophonist played as a duo. After a break, the second part split the five musicians into two improvising groups. Finally, all five musicians played together. All of the acts were improvised.

At one point I listened to a saxophone that was evidently full of spit. At other moments, the bassists placed sticks or metal objects between the strings of their instruments and carried on playing as if that were normal. The bass players demonstrated a variety of double bass techniques: bows were drawn across the strings at right angles, strings were retuned mid-performance, and there were percussive elements too. I had never before witnessed two bass players improvising simultaneously, and it was a pleasure to listen to. At times I thought to myself, ‘this is like jazz’, but it was closer to free improvisation than to jazz. It was interesting to think about the concert in the context of my own performance practice.

This week

Nov 20th: machine listening workshop with Ahnjili Zhuparris

This was an intense workshop day in which we made deepfake voice clones and analysed voices.

Nov 21st: Soldering

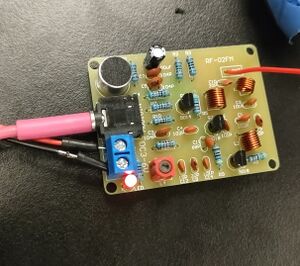

On November 21st I soldered together an FM radio transmitter. It was the first time I had ever soldered anything, and it worked! Victor taught me. It was enjoyable to practise my soldering technique alongside others and talk about things. I didn’t photograph the soldering process or the underside of the printed circuit boards.

Nov 24th and 25th

Open Sound Control (OSC)

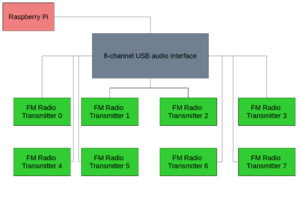

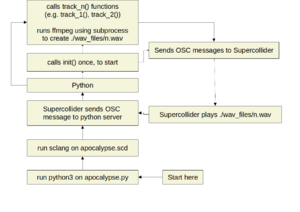

OSC is a communication protocol used by many pieces of audio software and hardware. During weeks nine and ten, Rosa and Riviera began developing a prototype for the FM radio transmitters (Figure 1). There are several of these transmitters and an equal number of receivers. On these transmitters we intend to broadcast excerpts from WORM’s radio archive, including the contributions we have made as part of SI#22. To achieve this, Riviera and Rosa realised it would be necessary to develop some software. Figure 2 illustrates the concept we have in mind for the hardware: a single Raspberry Pi feeding the transmitters through a multi-output audio interface. We believe this setup is more pragmatic than connecting a Raspberry Pi to each radio transmitter, although that would also be possible. Figure 3 illustrates how the software works.

A SuperCollider server running on the Raspberry Pi expects the Pi to be connected to a USB audio interface with eight outputs. The SuperCollider server listens for OSC messages on port 58110. Alongside it, the Pi runs a second OSC application built on the python-osc library. This Python application consists of a server, a dispatcher and a client. The dispatcher is essential: it maps incoming OSC addresses to handler functions, which is how the client gets called when the server receives a message. The Python client sends OSC messages to the SuperCollider server, and SuperCollider sends messages back to the Python server. The OSC messages contain a ‘channel’ and a path to a WAV file. To start the system, first launch the Python server, then execute the SuperCollider document with sclang. SuperCollider introduces itself to the Python server, after which the process of playing audio files runs in a loop.
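To make the message format concrete: in OSC 1.0 a message is an address pattern, a type tag string and then the arguments, where every string is NUL-terminated and padded to a multiple of four bytes and every int32 is big-endian. The sketch below encodes a ‘channel’ (int32) and a WAV path (string) in plain bash, which can be handy for poking the setup from a shell. The address `/play` and the argument order are hypothetical, since the actual addresses used between the Python and SuperCollider programs are not given here, and strings are assumed to be ASCII:

```bash
#!/usr/bin/env bash
# Sketch of OSC 1.0 message encoding in plain bash.
# Hypothetical message: /play ,is <channel> <path-to-wav>

# OSC string: the bytes, then 1-4 NUL bytes so the length is a multiple of 4.
# ${#s} counts characters, so this assumes ASCII strings.
osc_string() {
  local s=$1 pad i
  pad=$(( 4 - ${#s} % 4 ))
  printf '%s' "$s"
  for (( i = 0; i < pad; i++ )); do printf '\0'; done
}

# OSC int32: four bytes, most significant first (big-endian).
osc_int32() {
  local n=$1 shift_by
  for shift_by in 24 16 8 0; do
    printf "\\x$(printf '%02x' $(( (n >> shift_by) & 0xFF )))"
  done
}

# A complete message: address, type tags (",is" = int32 then string), arguments.
osc_message() {
  local address=$1 channel=$2 path=$3
  osc_string "$address"
  osc_string ",is"
  osc_int32 "$channel"
  osc_string "$path"
}

# Send one message over UDP. The bytes are staged in a file first, because
# each printf above is a separate write; cat then hands the whole message to
# the socket in one write, i.e. one datagram in practice. /dev/udp is a bash
# feature, available when bash is built with networking support.
osc_send() {
  local host=$1 port=$2 tmp
  shift 2
  tmp=$(mktemp)
  osc_message "$@" > "$tmp"
  cat "$tmp" > "/dev/udp/$host/$port"
  rm -f "$tmp"
}
```

For example, `osc_send 127.0.0.1 58110 /play 3 /home/pi/archive/clip.wav` would send one such message to port 58110 on the Pi itself (the path is illustrative). On the Python side there is no need for this: python-osc builds the same encoding internally, for instance in `SimpleUDPClient.send_message`.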