User:Pedro Sá Couto/Hackpact

01

Research station printing machine

From the git

https://git.xpub.nl/pedrosaclout/Jsort_scrape

While I was discussing my project proposal with Michael in a prototyping class, he told me about an experience he had in the library. He wanted to photocopy a printed publication, but he didn't have the school card needed to use the printing machines throughout the WdKA building. He found an old printing machine that still worked with coins, and that was how he managed to do it. It is interesting to see how shifting all these processes to digital access, whether by the swipe of a card or with a username and password, might limit the spread of knowledge within the school.

Last year in prototyping I started writing a script to download papers from JSTOR without the watermarks, IP address and other traces. I see it as a digital approach to the problem that Michael had.

# import libraries
from selenium import webdriver
from selenium.webdriver.common.keys import Keys
import os
import time
import datetime
from pprint import pprint
import requests
import multiprocessing
import base64

i = 1
name = input("What do you want to call your files? ")

while True:
    try:
        # get the url from the terminal
        url = (input("Enter jstor url (include 'https://' AND exclude 'seq=%i#metadata_info_tab_contents'): ") + ("seq=%i#metadata_info_tab_contents" % i))

        # Tell Selenium to open a new Firefox session
        # and specify the path to the driver
        driver = webdriver.Firefox(executable_path=os.path.dirname(os.path.realpath(__file__)) + '/geckodriver')
        # Implicit wait tells Selenium how long it should wait before it throws an exception
        driver.implicitly_wait(10)
        driver.get(url)
        time.sleep(1)

        # get the image base64 code
        img = driver.find_element_by_css_selector('#page-scan-container.page-scan-container')
        src = img.get_attribute('src')

        # check if source is correct
        # pprint(src)

        # strip the type prefix so that only the base64 string is left
        base64String = src.split(',').pop()
        pprint(base64String)

        # decode base64 string
        imgdata = base64.b64decode(base64String)

        # save the image
        filename = name + '%i.gif' % i
        with open(filename, 'wb') as f:
            f.write(imgdata)

        driver.close()
        i += 1
        print("DONE! Closing Window")
    except Exception:
        print("Impossible to print image")
        driver.close()
        break
    time.sleep(0.2)
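The decoding step in the script above can be looked at in isolation. This is a minimal sketch, assuming the `src` attribute has the usual data URI form `data:<mime type>;base64,<payload>`. The helper name `decode_data_uri` and the sample string are hypothetical and not part of the original script.

```python
import base64


def decode_data_uri(src):
    """Split a 'data:<mime>;base64,<payload>' string and decode the payload."""
    header, _, payload = src.partition(",")
    if not header.startswith("data:") or ";base64" not in header:
        raise ValueError("not a base64 data URI")
    mime_type = header[len("data:"):].split(";")[0]
    return mime_type, base64.b64decode(payload)


# Hypothetical sample: a hand-made payload, not a real page scan.
sample = "data:image/gif;base64," + base64.b64encode(b"GIF89a").decode("ascii")
mime_type, data = decode_data_uri(sample)
print(mime_type, data)  # image/gif b'GIF89a'
```

Checking the header before decoding gives an early, readable error when the attribute holds a regular URL instead of embedded image data.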

02

Watermarking

Book publishers and academic journals add visible watermarks to downloaded files, making us aware of our accountability when sharing them.

In this script, I create my own watermark.

From the git

https://git.xpub.nl/pedrosaclout/Hackpact_01

from PIL import Image, ImageDraw, ImageFont
import socket
import datetime
import geocoder

# Color mode, width and height, background color
img = Image.new('RGB', (1920, 1280), color=(255, 255, 255))

fontsize = 40
# Top margin of 10; the leading is the vertical distance between two lines
margin = 10
leading = 45

# Raw input is determined here
place = geocoder.ip('me')
hostname = socket.gethostname()
ip = socket.gethostbyname(hostname)
date = datetime.date.today()

# This font path is specific to macOS
font = ImageFont.truetype('/Library/Fonts/Times New Roman.ttf', fontsize)
draw = ImageDraw.Draw(img)

lines = [
    "Where: " + str(place.latlng),
    "Who: " + hostname,
    "IP: " + ip,
    "Date: " + str(date),
]
# Draw each line once, one leading below the previous one (y = 10, 55, 100, 145)
for number, text in enumerate(lines):
    draw.text((margin, margin + number * leading), text, font=font, fill=(0, 0, 0))

# Save the image: name, resolution and format (PNG is lossless, so no quality setting is needed)
img.save('sticker.png', dpi=(300, 300), format='PNG')

# Take the red channel from the logo and the green and blue channels from the sticker.
# Converting to RGB guarantees that split() returns three bands;
# both images need to have the same size for the merge to work.
img01 = Image.open("sticker.png").convert("RGB")
img02 = Image.open("logo.png").convert("RGB")
r1, g1, b1 = img01.split()
r2, g2, b2 = img02.split()
merged = Image.merge("RGB", (r2, g1, b1))
merged.save("merged.png")
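What `Image.merge("RGB", (r2, g1, b1))` does to each pixel can be shown without any image library. This is an illustrative sketch on plain tuples, not the Pillow implementation. The function name `merge_red_from_logo` and the three-pixel "images" are hypothetical.

```python
def merge_red_from_logo(sticker_pixels, logo_pixels):
    """Return pixels with red taken from the logo and green/blue from the sticker."""
    if len(sticker_pixels) != len(logo_pixels):
        raise ValueError("both images must have the same number of pixels")
    return [
        (logo_r, sticker_g, sticker_b)
        for (_, sticker_g, sticker_b), (logo_r, _, _) in zip(sticker_pixels, logo_pixels)
    ]


# Hypothetical 3-pixel images (R, G, B):
# pixel 0 is white in both, pixel 1 is black text on the sticker,
# pixel 2 is a black mark in the logo.
sticker = [(255, 255, 255), (0, 0, 0), (255, 255, 255)]
logo = [(255, 255, 255), (255, 255, 255), (0, 0, 0)]

print(merge_red_from_logo(sticker, logo))
# [(255, 255, 255), (255, 0, 0), (0, 255, 255)]
```

Under these assumptions the black text of the sticker turns red, the black parts of the logo turn cyan, and areas that are white in both images stay white.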

03

Append the watermark to the first page of the PDF

From the git

https://git.xpub.nl/pedrosaclout/Add_Watermark_to_pdf

from PIL import Image
import PIL.ImageOps

# open both the image and the watermark
image = Image.open('split/page1.png')
logo = Image.open('merged.png')

# rotate the watermark
rotatedlogo = logo.rotate(18, expand=True)

# invert the watermark
invertedlogo = PIL.ImageOps.invert(rotatedlogo)
invertedlogo.save('rotatedlogo.png')

# rescale the logo to a fixed width, keeping its aspect ratio
basewidth = 1050
finallogo = Image.open("rotatedlogo.png")
wpercent = basewidth / float(finallogo.size[0])
hsize = int(float(finallogo.size[1]) * wpercent)
# Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
finallogo = finallogo.resize((basewidth, hsize), Image.LANCZOS)

# overlaying
image_copy = image.copy()
# if you want to position the watermark in the bottom right corner use:
# position = ((image_copy.width - finallogo.width), (image_copy.height - finallogo.height))
position = (100, 750)
image_copy.paste(finallogo, position)
image_copy.save('split/page1.png')
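The rescaling and positioning arithmetic in the script above can be checked on its own. This is a small sketch with made-up dimensions. The helper names `scaled_size` and `bottom_right`, the `margin` parameter and the page and logo sizes are assumptions for illustration only.

```python
def scaled_size(size, basewidth):
    """Scale (width, height) to the given width, keeping the aspect ratio."""
    width, height = size
    ratio = basewidth / float(width)
    return basewidth, int(height * ratio)


def bottom_right(page_size, logo_size, margin=0):
    """Top-left corner that places the logo in the bottom right of the page."""
    return (page_size[0] - logo_size[0] - margin,
            page_size[1] - logo_size[1] - margin)


# Hypothetical sizes: a 2100x1000 logo pasted on a 1240x1754 page.
logo_size = scaled_size((2100, 1000), 1050)
position = bottom_right((1240, 1754), logo_size)
print(logo_size, position)  # (1050, 500) (190, 1254)
```

The position has to be computed from the size of the rescaled logo. Using the size of the original logo would push the watermark off the intended corner.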

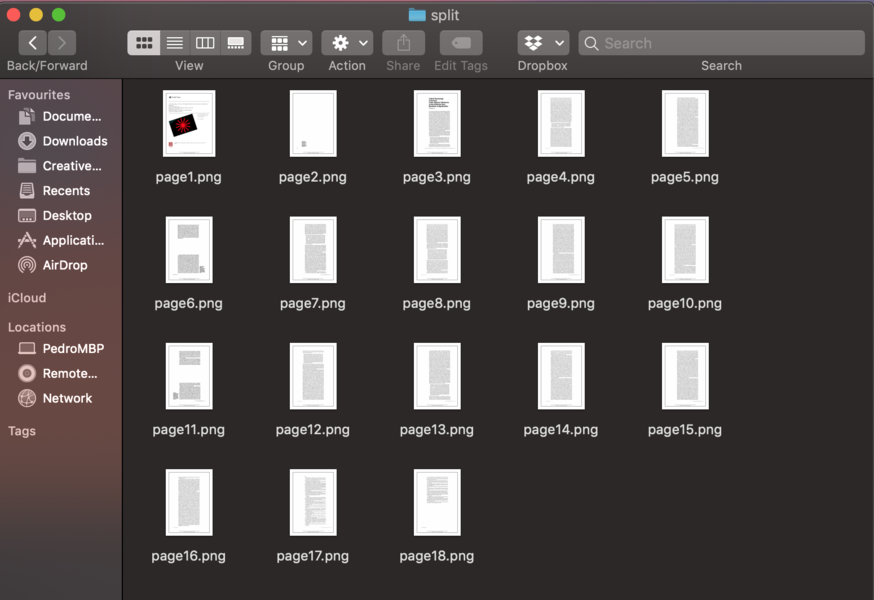

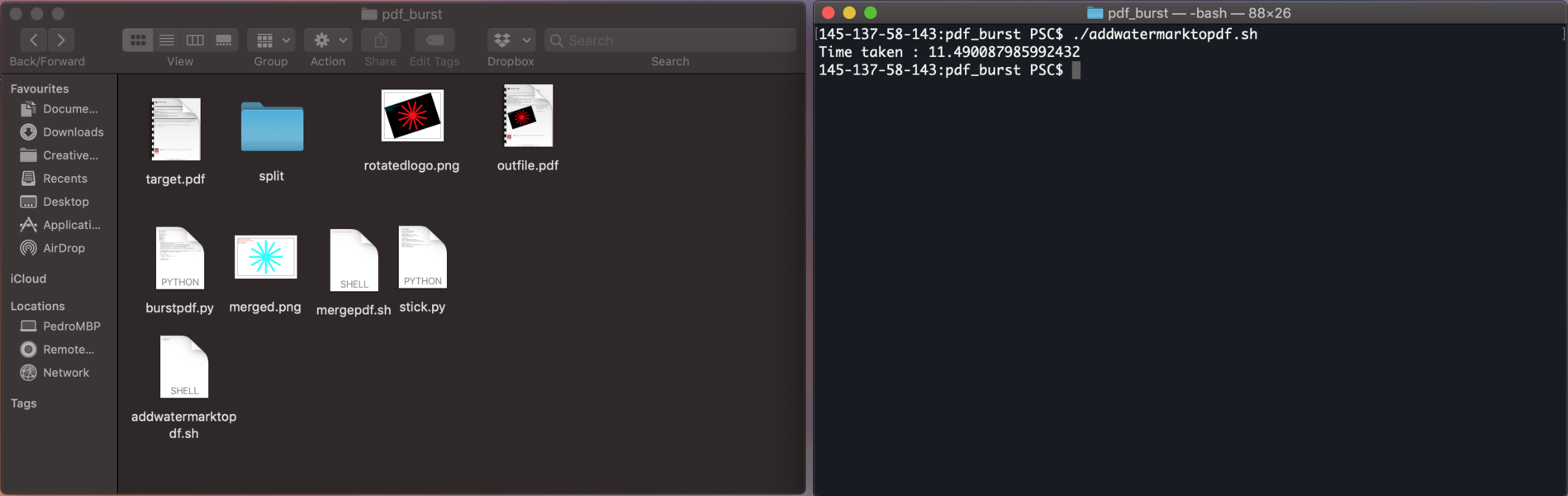

04

Reassemble the PDF

From the git

https://git.xpub.nl/pedrosaclout/Add_Watermark_to_pdf

convert "split/*.{png,jpeg}" -quality 100 outfile.pdf
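One thing to watch when reassembling is page order. If the files follow the `page1.png` naming used earlier, a plain alphabetical ordering places `page10.png` before `page2.png`. This is a sketch of a natural sort that could be used to build the file list in reading order. The helper name `natural_key` and the file names are hypothetical.

```python
import re


def natural_key(filename):
    """Sort key that compares runs of digits as numbers instead of text."""
    return [int(part) if part.isdigit() else part.lower()
            for part in re.split(r"(\d+)", filename)]


# Hypothetical file names following the 'page1.png' pattern.
pages = ["page10.png", "page2.png", "page1.png"]

print(sorted(pages))                   # ['page1.png', 'page10.png', 'page2.png']
print(sorted(pages, key=natural_key))  # ['page1.png', 'page2.png', 'page10.png']
```

Zero-padding the page numbers when the PDF is split (`page01.png`, `page02.png`, ...) would avoid the problem without any extra sorting.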

05

All in one stream

From the git

https://git.xpub.nl/pedrosaclout/Add_Watermark_to_pdf

python3 burstpdf.py
python3 stick.py
./mergepdf.sh
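The three commands could also be chained from a single Python script that stops as soon as one step fails. This is a sketch and not part of the repository. The names `STEPS` and `run_pipeline` are hypothetical, and the `runner` parameter exists only so that the function can be tried without executing anything.

```python
import subprocess

# The three steps listed above, in the order they have to run.
STEPS = [
    ["python3", "burstpdf.py"],
    ["python3", "stick.py"],
    ["./mergepdf.sh"],
]


def run_pipeline(steps=STEPS, runner=subprocess.run):
    """Run each step in order and stop at the first one that fails."""
    completed = []
    for command in steps:
        result = runner(command)
        if result.returncode != 0:
            raise RuntimeError("step failed: " + " ".join(command))
        completed.append(command)
    return completed


# Usage, from the folder that contains the three scripts:
# run_pipeline()
```

Stopping at the first failure avoids stamping or merging pages left over from an earlier run when the PDF could not be split.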

06

Blocked Face

Porto is my home team and they were playing against Feyenoord in Rotterdam. I had never been to an away match and I was really shocked by the police presence controlling the supporters. A camera on top of a police van was recording us constantly, and a crew of police photographers kept taking pictures of the crowd. It left me wondering what would happen to the images: where would they be stored, would they ever be deleted, and what were they going to be used for?

I created a script that iterates over the images in a folder, detects the faces in each one and blacks them out.

Before

After

From the git

https://git.xpub.nl/pedrosaclout/Face_Block

import cv2
import time
from pprint import pprint

face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

imgs = ['img/01.jpg','img/02.jpg','img/03.jpg','img/04.jpg','img/05.jpg','img/06.jpg','img/07.jpg','img/08.jpg','img/09.jpg']
pprint(imgs)

# go through the list once, numbering the output files from 1
for d, path in enumerate(imgs, start=1):
    # read selected image
    image = cv2.imread(path)
    if image is None:
        print("Could not read " + path)
        continue

    # the cascade classifier works on a grayscale copy
    imagegray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(imagegray, 1.3, 5)

    # cover every detected face with a filled black rectangle
    for (x, y, w, h) in faces:
        cv2.rectangle(image, (x, y), (x + w, y + h), (0, 0, 0), -1)

    print("Displaying image")
    cv2.imshow('blocked%d.jpg' % d, image)
    print("Writing image")
    cv2.imwrite('blocked%d.jpg' % d, image)

    # press Esc to stop early
    k = cv2.waitKey(5) & 0xff
    time.sleep(0.5)
    if k == 27:
        break

cv2.destroyAllWindows()
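The script above works from a hard-coded `imgs` list. To iterate over whatever is in a folder, the list could be built at run time. This is a sketch using only the standard library. The helper name `list_images` and the set of accepted extensions are assumptions.

```python
from pathlib import Path

# Assumed set of extensions; adjust to the files actually in the folder.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_images(folder):
    """Return the sorted paths of all image files directly inside a folder."""
    return sorted(
        str(path)
        for path in Path(folder).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )


# Usage, replacing the hard-coded list:
# imgs = list_images("img")
```

Sorting keeps the numbering of the `blocked%d.jpg` output files stable between runs, since the order in which a folder is listed is not guaranteed.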