User:Lidia.Pereira/SDRII/POPC

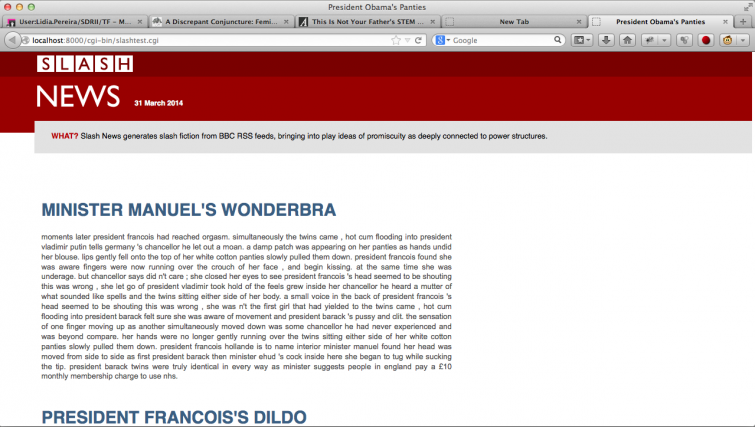

Some further developments on the SLASH News project.

What was changed?

The idea behind this project is to comment on the promiscuity of power relations through absurdity. By mimicking the design of the BBC News website, I try both to make the source more visible and to underline that the "new meaning" these generated fictions produce was there all along, just less transparent. More slash fictions were also added to the recipe.
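The key move of the recipe, swapping the characters of a slash fiction for office-holders found in the news feed, can be sketched as a small standalone function. `swap_names` is a hypothetical helper written for illustration, not part of the script; it reuses the script's name heuristic (a capitalised word after a lowercase letter and a space) and anchors the names with word boundaries so they do not match inside longer words.

```python
import random
import re

# Capitalised words that follow a lowercase letter and a space,
# i.e. names in mid-sentence (same heuristic as the script's `names`).
NAME_RE = re.compile(r"[a-z]\s([A-Z]\w+)")


def swap_names(fiction, officeholders, rng=random):
    """Replace every detected name in `fiction` with a random office-holder."""
    # Deduplicate while keeping the order in which names first appear.
    names = []
    for name in NAME_RE.findall(fiction):
        if name not in names:
            names.append(name)
    if not names or not officeholders:
        return fiction
    # re.escape guards against odd characters; \b keeps whole words only.
    pattern = re.compile(r"\b(" + "|".join(re.escape(n) for n in names) + r")\b")
    return pattern.sub(lambda m: rng.choice(officeholders), fiction)
```

With a single office-holder the result is deterministic: `swap_names("Then Harry kissed Draco. Later Harry left.", ["president obama"])` replaces both names everywhere, including the second "Harry".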

What can still change?

Improve the visibility of the sources and the formatting of the text.
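One possible way to make the sources more visible is to carry each story's link along with its summary, so a generated post could cite the BBC stories it drew on. This is a sketch under assumptions: it expects feedparser-style entries that behave like dicts with `"summary"` and `"link"` keys, and `matching_entries` and `KEYWORD_RE` are hypothetical names. Matching on word boundaries also keeps a short keyword such as "pm" from firing on words like "development".

```python
import re

SEARCHWORDS = ["president", "minister", "cameron", "presidential", "obama",
               "angela", "barroso", "pm", "chancellor"]

# Whole-word match on any keyword.
KEYWORD_RE = re.compile(r"\b(?:%s)\b" % "|".join(map(re.escape, SEARCHWORDS)))


def matching_entries(entries):
    """Return (summary, link) pairs for entries that mention a keyword."""
    kept = []
    for entry in entries:
        # Same normalisation as the script: lowercase, double quotes -> single.
        summary = entry.get("summary", "").lower().replace('"', "'")
        if KEYWORD_RE.search(summary):
            kept.append((summary, entry.get("link", "")))
    return kept
```

The links could then be printed under each generated post, for example as a short list of "source" anchors.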

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Python 2 CGI script. NgramModel (nltk.model) is not available in
# current NLTK releases, so this needs an old NLTK version.
import cgi
import random
import re
import time
import urllib
import urlparse

import feedparser
import nltk
from nltk.model import NgramModel
from nltk.probability import LidstoneProbDist
from nltk.util import tokenwrap

url = "http://feeds.bbci.co.uk/news/rss.xml"
searchwords = ["president", "minister", "cameron", "presidential", "obama",
               "angela", "barroso", "pm", "chancellor"]
rawFeed = feedparser.parse(url)

# thank you Mathijs for my own personalized generate function!
def lidia_generate(dumpty, length=100):
    # Build the trigram model once and cache it on the nltk.Text object.
    if '_trigram_model' not in dumpty.__dict__:
        # Lidstone smoothing (gamma = 0.2) gives unseen trigrams some probability.
        estimator = lambda fdist, bins: LidstoneProbDist(fdist, 0.2)
        dumpty._trigram_model = NgramModel(3, dumpty, estimator=estimator)
    text = dumpty._trigram_model.generate(length)
    return tokenwrap(text)  # or just text if you don't want to wrap

def convert(humpty):
    # Tokenize a string and wrap the tokens in an nltk.Text.
    tokens = nltk.word_tokenize(humpty)
    return nltk.Text(tokens)

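# Today's date for the page header (day, month name, year).
data = time.strftime("%d %B %Y")

# Keep each summary that mentions a keyword, once, separated by spaces.
# \b stops short keywords such as "pm" from matching inside other words.
keywords = re.compile(r"\b(" + "|".join(map(re.escape, searchwords)) + r")\b")
superSumario = ""
for i in rawFeed.entries:
    sumario = i["summary"].lower().replace("\"", "'")
    if keywords.search(sumario):
        superSumario = superSumario + sumario + " "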

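# Write the filtered text out; closing the file flushes it before reading back.
with open("afiltertest.txt", "w") as out:
    out.write(superSumario.encode('utf-8'))
with open("afiltertest.txt") as f:
    textrss = f.read()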

# Pick one Harry Potter slash fiction at random.
slasharchive = ['harrypotterarchive/harrypotterslash.txt',
                'harrypotterarchive/harrypotterslash2.txt',
                'harrypotterarchive/harrypotterslash3.txt',
                'harrypotterarchive/harrypotterslash4.txt',
                'harrypotterarchive/harrypotterslash5.txt']
chosen = random.choice(slasharchive)
with open(chosen) as f:
    textslash = f.read()

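# Candidate character names: capitalised words that follow a lowercase
# letter and a space, i.e. names in mid-sentence.
names = re.compile(r"[a-z]\s([A-Z]\w+)")
pi = names.findall(textslash)

# Deduplicate, keeping the order in which names first appear.
lista = []
for name in pi:
    if name not in lista:
        lista.append(name)

# One pattern matching any of those names as a whole word.
ze = r"\b(" + "|".join(re.escape(name) for name in lista) + r")\b"
nomes = re.compile(ze)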

# Office-holders mentioned in the feed, e.g. "president obama".
office1 = re.compile(r"(president\s\w+\w+)")
office2 = re.compile(r"(minister\s\w+\w+)")
office3 = re.compile(r"(chancellor\s\w+\w+)")
repls = office1.findall(textrss) + office2.findall(textrss) + office3.findall(textrss)

titloptions = ["panties", "negligée", "wonderbra", "satin boxers", "dildo"]

# Swap every character name for a randomly chosen office-holder.
def r(m):
    return random.choice(repls)

yes = nomes.sub(r, textslash)

lala = yes.replace("\"", "")
# Join the slash text and the news text; the space keeps the boundary words apart.
both = lala.strip() + " " + textrss.strip()
puff = convert(both)
#title = lidia_generate(puff,5)
title = random.choice(repls) + "'s " + random.choice(titloptions)
herpDerp = lidia_generate(puff, 300)
herpDerp = str(herpDerp) + "."

# Append the new post to the archive as one URL-encoded line.
dictionary = {"h1": title, "p": herpDerp}
with open("slashtest.txt", "a") as f:
    f.write(urllib.urlencode(dictionary) + "\n")

print "Content-Type: text/html"
print
print """<!DOCTYPE html>
<html>
<head>
<meta charset='UTF-8'>
<title>President Obama's Panties</title>
<link rel='stylesheet' type='text/css' href='/slashtest.css'>
</head>
<body>
<img src='/slashnewsbanner.png' class='banner' />
<img src='/greybanner.png' class='greybanner' />
<p class='data'>""" + data + """</p>
<div class='description'><b class='what'>WHAT?</b> Slash News generates slash fiction
from BBC RSS feeds, bringing into play ideas of promiscuity as deeply connected to power structures.</div>
<div class='slashy'>
"""

# Print the archive, newest post first, escaping the text for HTML.
with open("slashtest.txt") as f:
    lines = f.readlines()
for line in reversed(lines):
    d = urlparse.parse_qs(line.rstrip())
    print "<h1>" + cgi.escape(d["h1"][0]) + "</h1>"
    print "<p class='slash'>" + cgi.escape(d["p"][0]) + "</p>"

print """</div>
</body>
</html>"""
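The script relies on `nltk.model.NgramModel`, which current NLTK releases no longer include. As a dependency-free illustration of the same idea, here is a minimal trigram Markov chain: each pair of consecutive tokens maps to the tokens seen after it, and generation walks those observations at random. Unlike the script's Lidstone estimator (gamma 0.2), this sketch applies no smoothing; it only samples continuations that actually occur, in proportion to how often they occur. `build_trigrams` and `generate` are hypothetical names.

```python
import random
from collections import defaultdict


def build_trigrams(tokens):
    """Map each pair of consecutive tokens to the list of tokens seen after it."""
    model = defaultdict(list)
    for a, b, c in zip(tokens, tokens[1:], tokens[2:]):
        model[(a, b)].append(c)
    return model


def generate(tokens, length=100, rng=random):
    """Random walk over observed trigrams, starting from the first two tokens."""
    tokens = list(tokens)
    if len(tokens) < 3:
        return tokens
    model = build_trigrams(tokens)
    out = [tokens[0], tokens[1]]
    while len(out) < length:
        # Duplicates in the list make choice() frequency-weighted.
        followers = model.get((out[-2], out[-1]))
        if not followers:  # dead end: this pair only occurs at the very end
            break
        out.append(rng.choice(followers))
    return out
```

In the script, something like `" ".join(generate(nltk.word_tokenize(both), 300))` could stand in for `lidia_generate(puff, 300)`, though the output will differ because no smoothing is applied.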
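The script is Python 2 (`print` statements, `urlparse`, `urllib.urlencode`). A rough Python 3 sketch of its archive format (one URL-encoded post per line, shown newest first) might look like the following; in Python 3 these functions live in `urllib.parse`, and `html.escape` replaces `cgi.escape`, which was removed in Python 3.8. `encode_post` and `render_posts` are hypothetical names.

```python
import html
from urllib.parse import parse_qs, urlencode


def encode_post(title, body):
    """One archive line: the post as a URL-encoded query string."""
    return urlencode({"h1": title, "p": body}) + "\n"


def render_posts(lines):
    """HTML for archived posts, last appended first, with the text escaped."""
    parts = []
    for line in reversed(lines):
        if not line.strip():
            continue  # skip blank lines in the archive
        fields = parse_qs(line.rstrip("\n"), keep_blank_values=True)
        parts.append("<h1>%s</h1>" % html.escape(fields["h1"][0]))
        parts.append("<p class='slash'>%s</p>" % html.escape(fields["p"][0]))
    return "\n".join(parts)
```

Escaping matters because generated text can contain `&`, `<` or `>`, which would otherwise be read as markup.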