User:Jasper van Loenen/grad/draft proposal

Tentative Title

< Insert Very Tentative Title Here >

General Introduction

Looking back at last year, much of my work revolved around the internet and social networks such as our beloved Facebook. At this point, however, I'm a bit bored by the topic. There is of course still a lot to be said about it, but it has also gathered a lot of clichés by now.

While looking for inspiration for the graduation project, I focused more on the practical projects I've done: the work related to my love for physical installations and electronics. As a starting point, I looked up different types of teleconferencing devices after fellow student Mano Szollosi showed me a video of a device that is basically an iPad on a Segway. You can control it over the internet, and in the commercial a couple uses it to 'visit' a gallery space while sitting on the couch at home. Some of these devices are more interesting than others and offer features such as arms you can move around, while others try to look as much like a real human as possible.

What I find most interesting in all this is how people come up with devices to represent their bodies when they can't be at a certain location themselves; some are basically trying to create a copy of your own body. But you could also approach these devices from the other side: what does a computer or robot need in order to be perceived as a living being, or perhaps even as a human? The latter seems to be very hard, with lifelike robots often landing right in the middle of the uncanny valley, the zone where a robot looks a lot like a human but not quite, which makes it plain creepy. The former seems to happen far more often: we automatically personify objects or programs that appear to have some sort of intelligence.

Relation to previous practice

Though unrelated to robots or artificial intelligence, my previous work was also about the manifestation of a digital system. Test Screen was an interface for connecting to an abstract piece of code. The installation consists of groups of dials and switches (96 in total) around a flatscreen monitor, set in a large (110 x 60 cm) wooden panel. I wrote a small computer program that displays an image similar to the old television test screens, and each of the dials and switches was connected to a part of this program's script. By manipulating the controls, the audience was able to change my code and thereby change what was displayed. I chose not to use a generic computer with keyboard and mouse but to build a custom interface as a way to get the audience more involved, since for many people such an unfamiliar interface is far more appealing, and judging by the time some visitors spent at the installation, it worked. The form of the object (its size and the layout of its switches and dials) was based on the code it let you change and wouldn't really fit any other program; it reflected the character of the system.

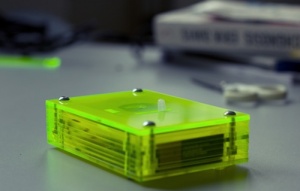

The same can be said for The Poking Machine (TPM), which I made together with Bartholomäus Traubeck. Facebook users can poke each other by clicking a button on a profile page, after which the recipient receives a message saying he or she was poked. TPM is a small (9 x 7 x 5 cm) box made of green transparent Perspex, which you can strap to your upper arm using the attached piece of velcro. It uses your phone's internet connection to keep track of incoming pokes, and when it detects one, a small plastic lever comes out of the box to physically poke you in the arm. This translates the meaningless written message into an actual poking gesture. Again, this was an object, or interface, made to serve a single program.

Relation to a larger context

For centuries, people have imagined creating life, from the Golem to Honda's ASIMO. Creating artificial life similar to ours still seems far away, but there is no denying that we are increasingly surrounded by 'smart' systems, and the way we connect to them has moved from consoles and buttons to more natural forms of interaction that entangle these systems with our lives. < expand, particularly about the art context >

Practical steps

Creating the world's best AI or robot would of course be great, but it is not very realistic. Instead, I'll be focussing on how we perceive artificial entities, from computer scripts to physical devices. Why and when do we see a man-made object as a thinking character? My first small experiments involved a scripted system and a randomly moving mechanical object, both of which seemed to be thinking or trying to reach some set goal, even though neither was. <needs clarification>

References

- Hoffmann, E.T.A. (1816) The Sandman

- Hayles, N. Katherine (2005) My Mother Was a Computer

- Hayles, N. Katherine (1999) How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics

- Barbrook, R. (2005) Imaginary Futures

- Du Sautoy, Marcus (2012) The Hunt for AI

- Wallace, Danny (2008) Where Is My Robot?

- Scorsese, Martin (2011) Hugo (based on the children's book by Brian Selznick)

- Asimov, Isaac