User:Alexander Roidl/CGI Tunnels

Building a CGI search

Through texts

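#!/usr/bin/env python3

# Note: the cgi and cgitb modules were deprecated in Python 3.11 and removed in 3.13,
# so this script needs an older interpreter.
import cgi
import cgitb
cgitb.enable()
import csv
import sys
import re
import time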

def common_data(list1, list2):
    result = False
    # traverse the 1st list
    for x in list1:
        # traverse the 2nd list
        for y in list2:
            # stop at the first common element
            if x == y:
                result = True
                return result
    return result

print('Content-type:text/html;charset=utf-8')
print()

#cgi.print_environ()
form = cgi.FieldStorage()
# split() without an argument drops empty strings caused by repeated or missing spaces
keywords = form.getvalue("search", "").split()
start_time = time.time()

# Read the TF-IDF table: the header row lists the text names,
# every following row is a term followed by its score in each text.
with open('tfidf.csv', "r", newline="") as csv_in:
    rows = list(csv.reader(csv_in, delimiter=","))
texts = rows[0]
in_text = {}

# For every row whose term matches a keyword, add its scores to the per-text totals.
# The header row is skipped because it holds names, not numbers.
for row in rows[1:]:
    for keyword in keywords:
        if row and keyword == row[0]:
            for i, val in enumerate(row[1:]):
                val = float(val)
                if val > 0:
                    in_text[texts[i+1]] = in_text.get(texts[i+1], 0) + val

# Sort the texts by total score, highest first.
in_text = sorted(in_text.items(), key=lambda x: float(x[1]), reverse=True)

count = 0

print("""
<form method="get" action = "/cgi-bin/form.cgi">
<input type="text" name="search" placeholder="search books…">
<input type="submit" name="submit">
</form>""")

import html  # escape the user's input before echoing it back into the page

print("""
<link rel="stylesheet" type="text/css" href="/style.css">
<p class="name">Results for: {0}</p>
""".format(html.escape(" ".join(keywords))))

if not in_text:
    from autocorrect import spell
    print("""
<p class="name">no result</p>
""")
    print("""
<p>Did you mean <a href='form.cgi?search={0}&submit=Submit'>{0}</a>?</p>
""".format(spell(" ".join(keywords))))

else:

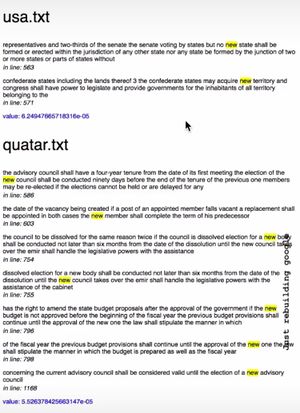

    for result in in_text:
        print("""
<p class='resultname'>{}</p>
""".format(result[0]))

        with open("texts/"+result[0], 'r', encoding="utf-8", errors='ignore') as text_file:
            lines = text_file.readlines()

        for i, line in enumerate(lines):
            # Use a regex to remove punctuation and isolate words
            words = re.findall(r'\b\w[\w-]*\b', line.lower())
            # Only lines that contain at least one keyword produce an excerpt
            if not common_data(keywords, words):
                continue

            sent = " ".join(words)
            for key in keywords:
                sent = sent.replace(key, "<span class='mark'>{}</span>".format(key))

            # Collect the previous line and the next two lines as context.
            # Explicit bounds checks avoid lines[-1] wrapping around to the end of the file.
            context = []
            for j in (i-1, i+1, i+2):
                if 0 <= j < len(lines):
                    context.append(" ".join(re.findall(r'\b\w[\w-]*\b', lines[j].lower())))
                else:
                    context.append("")
            sentbefore, nextone, nextnextone = context

            count += 1
            print("""
<div class='excerpt'>
<p class='excerpt_text'>{4} {1} {2} {3}</p>
<p class='excerpt_line'>in line: {0}</p>
</div>
""".format(i, sent, nextone, nextnextone, sentbefore))

        print("""
<p class='value'>value: {}</p>
""".format(result[1]))

print("""<br><br>

<p class="detail">{0} results in {1} seconds.</p>

""".format(count, (time.time() - start_time)))
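
The ranking step of the script can be tried on its own, without a web server. The following is a minimal sketch, assuming the same table layout as `tfidf.csv` (a header row of text names, then one row per term). The function name `rank_texts` and the sample data are hypothetical and only serve as illustration. Unlike the script, a term that is typed twice in the query is counted once here.

```python
import csv
import io


def rank_texts(rows, keywords):
    """Sum each text's scores over all rows whose term is a keyword.

    Returns a list of (text_name, total_score) pairs, highest score first.
    """
    header, *term_rows = rows
    scores = {}
    for row in term_rows:
        # Skip empty rows and terms that were not searched for
        if not row or row[0] not in keywords:
            continue
        for name, val in zip(header[1:], row[1:]):
            val = float(val)
            if val > 0:
                scores[name] = scores.get(name, 0.0) + val
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical sample table standing in for tfidf.csv
sample = io.StringIO(
    ",a.txt,b.txt\n"
    "tunnel,0.5,0.0\n"
    "network,0.25,1.0\n"
)
rows = list(csv.reader(sample))
ranking = rank_texts(rows, ["tunnel", "network"])
print(ranking)  # [('b.txt', 1.0), ('a.txt', 0.75)]
```

Keeping the ranking in a function like this makes it possible to check the scoring with a small table before wiring it into the CGI output.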