Wang SI23

Performance at Varia

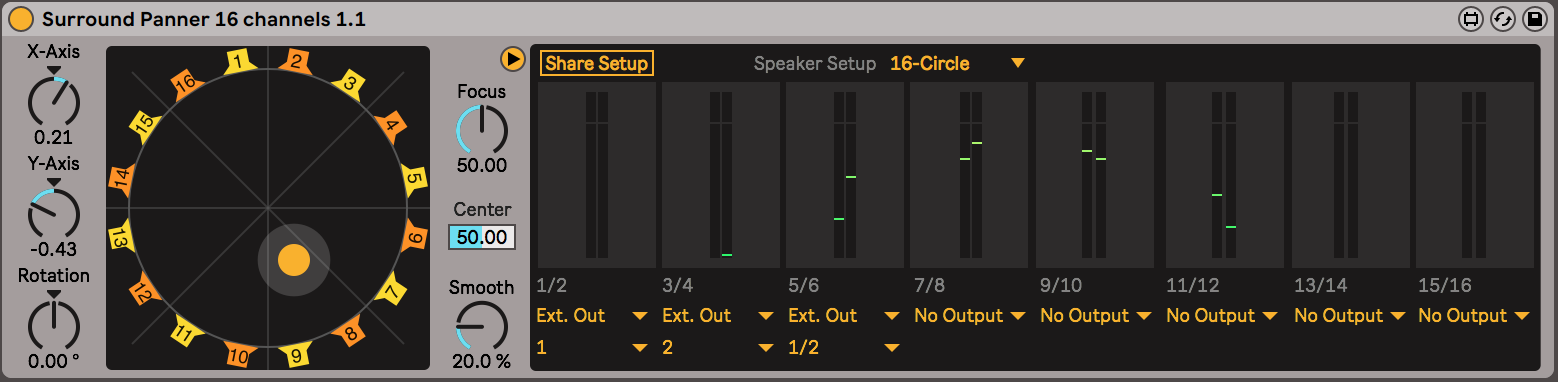

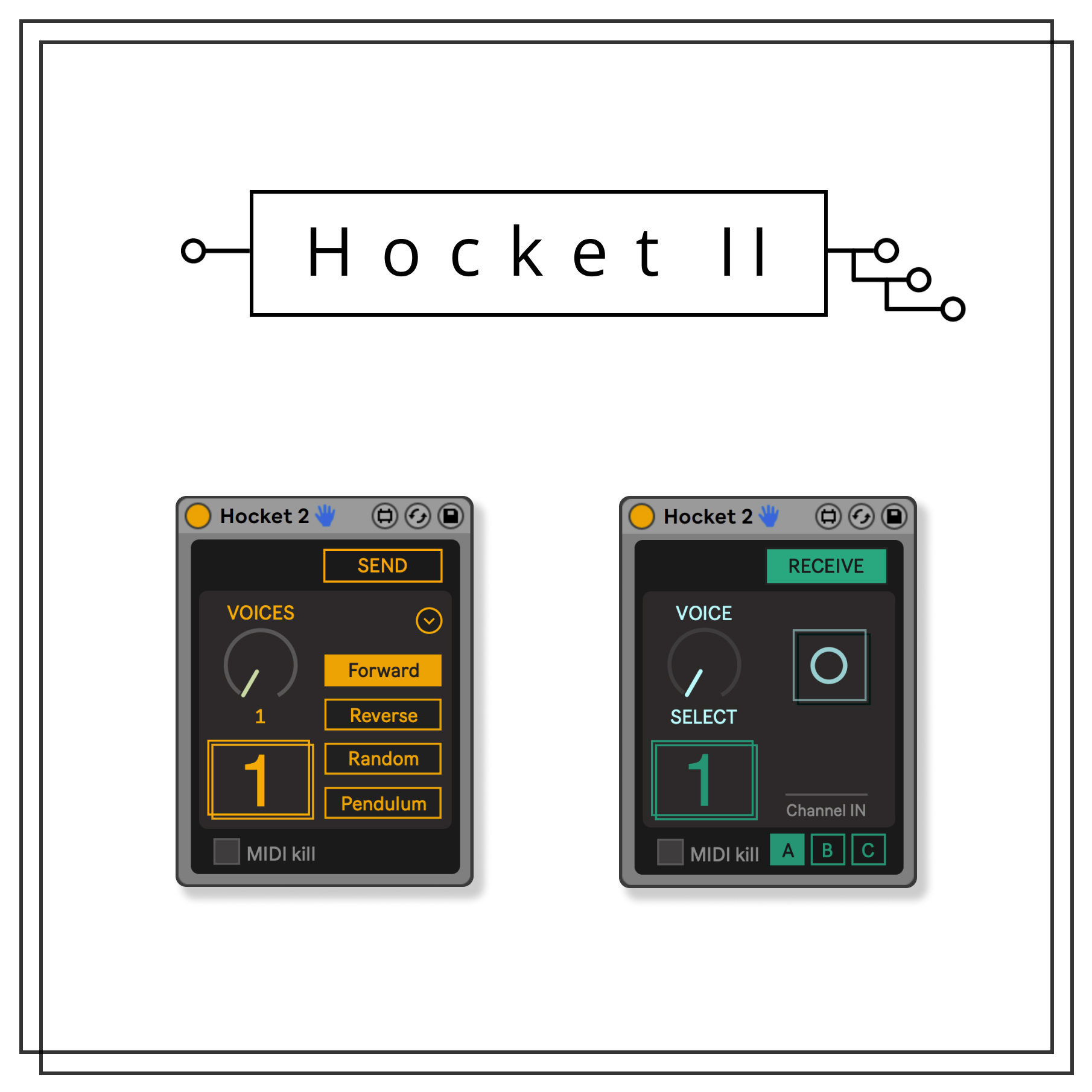

I’ve been using the Surround Panner to create a surround sound experience with four speakers through a 4-output audio interface. The ARP sound travels around the speakers in a circular pattern. The main tools for this are the "Surround Panner" Max for Live plugin and "Hocket." The setup is still experimental, and I am working on making it run reliably.
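To make the circular movement concrete, here is a minimal JavaScript sketch of one common way to move a sound around four speakers: equal-power panning between neighbouring speakers. It is not how the Surround Panner plugin works internally. The function name `quadGains` and the speaker layout (one speaker every 90 degrees) are my own assumptions for illustration.

```javascript
// Hypothetical helper (not part of any plugin): equal-power gains for four
// speakers assumed to sit at 0, 90, 180 and 270 degrees around the listener.
function quadGains(angleDeg) {
  // Wrap any angle, including negative ones, into the range [0, 360).
  const angle = ((angleDeg % 360) + 360) % 360;
  // Index of the speaker the source has most recently passed.
  const sector = Math.floor(angle / 90);
  // Position between that speaker and the next one, from 0 to 1.
  const fraction = (angle - sector * 90) / 90;

  const gains = [0, 0, 0, 0];
  // A cosine/sine pair keeps the summed power constant during the move.
  gains[sector] = Math.cos((fraction * Math.PI) / 2);
  gains[(sector + 1) % 4] = Math.sin((fraction * Math.PI) / 2);
  return gains;
}

// Print one full rotation in steps of 45 degrees.
for (let step = 0; step < 8; step++) {
  const angle = step * 45;
  const gains = quadGains(angle).map((g) => g.toFixed(2));
  console.log(angle, gains.join(" "));
}
```

Only two neighbouring speakers are active at any moment, so the sound seems to travel along the edge of the square rather than jumping from corner to corner.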

The M4L plugins I've been using:

During the visit to "Sonic Acts" in Amsterdam, I attended the listening party of the Zone2source event, which featured an 8-output stereo sound setup. The experience taught me two things: 1) quality noise does not require excessive volume, and 2) sound can be presented in parallel, which I had not considered before. Each speaker played different audio from a distinct position in the space, a departure from my usual approach of sending one audio signal across multiple speakers. This new perspective is inspiring and worth exploring further. It also reminds me of the reference that Michael provided during SI22 (https://issue.xpub.nl/18/04/), which suggests another effective way to experiment with parallel samples through interaction with users.

ImageMagick

During the class, we used ImageMagick to create picture collages and quilts from a PDF. It's an inspiring method for collage creation. I also discovered that ImageMagick's 'convert' command is a powerful tool for converting PDF to JPG/PNG and vice versa. This is particularly useful on Mac, where performing such conversions without dedicated software can be challenging.

cd /path/directory

convert 1.png 2.png 3.png 4.png 5.png output.pdf

convert {1..5}.png output.pdf

convert -density 300 input.pdf -background white -flatten output.png

convert -density 600 -quality 100 input.pdf output.jpg

JavaScript

At the beginning of this trimester, Jossef recommended Tone.js, a powerful Web Audio framework for creating interactive music in the browser. I found it very interesting to explore sound design based on websites and to interact with users. I conducted a few tests using Tone.js.

A sequencer controlled by the strange items in the universe.

A ping-pong table where the ping-pong movements are mapped to the X and Y coordinates, influencing the delay time and pitch. (https://hub.xpub.nl/chopchop/~wang/pic/pingpong.html)

A canvas controlled manually by a circle, with the X and Y coordinates influencing the delay time and pitch. (https://hub.xpub.nl/chopchop/~wang/pic/testxy.html)

A canvas with randomly positioned jumping points, where the X and Y coordinates are mapped to the delay time and pitch. (https://hub.xpub.nl/chopchop/~wang/pic/randommoving.html)

The bats represent different synths, and when a user clicks on a bat icon, the cursor changes to the selected icon. Clicking on all the icons generates a random sound. The number box controls the tempo of the synth. (https://hub.xpub.nl/chopchop/~wang/pic/battest.html)
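The X/Y mapping shared by several of these tests can be sketched in plain JavaScript. This is an illustration under assumptions, not the code from the linked pages: the function names, the delay range (0.05 to 1 second) and the pitch range (110 to 880 Hz) are hypothetical values chosen for the example.

```javascript
// Hypothetical ranges; the linked sketches may use different values.
const DELAY_RANGE = { min: 0.05, max: 1.0 }; // seconds
const PITCH_RANGE = { min: 110, max: 880 };  // Hz

// Keep a normalised value inside 0..1 so positions outside the canvas are safe.
function clamp01(value) {
  return Math.min(1, Math.max(0, value));
}

// Linear mapping: the left edge gives the shortest delay, the right edge the longest.
function xToDelayTime(x, width) {
  const t = clamp01(x / width);
  return DELAY_RANGE.min + t * (DELAY_RANGE.max - DELAY_RANGE.min);
}

// Exponential mapping: equal distances on the canvas give equal musical intervals.
// Canvas Y grows downwards, so it is flipped to put high pitches at the top.
function yToFrequency(y, height) {
  const t = 1 - clamp01(y / height);
  return PITCH_RANGE.min * Math.pow(PITCH_RANGE.max / PITCH_RANGE.min, t);
}

// Example: a point in the centre of a 400 x 400 canvas.
console.log(xToDelayTime(200, 400), "seconds");
console.log(yToFrequency(200, 400), "Hz");
```

In a Tone.js page, values like these could then be written to the `delayTime` parameter of a `Tone.FeedbackDelay` and the `frequency` of a `Tone.Synth` inside a pointer or animation callback. Using an exponential curve for pitch is a design choice: a linear mapping in Hz would crowd most of the audible change into the lower part of the canvas.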

Mapping the X and Y coordinates in the canvas prompted me to consider creating a tangible controller that can map not only X and Y but also Z. This brought to mind "The Hands" by Michel Waisvisz (https://www.youtube.com/watch?v=U1L-mVGqug4). I believe "The Hands" is a remarkable creation because it brings the MIDI controller into the 3D era and makes sound tangible. It transforms sound control from a 2D automation envelope into something that can be shaped by movement, speed, angle, and more. I am enthusiastic about continuing my research and experiments with this kind of touchable 3D MIDI controller.