User:Tash/Special Issue 05

Revision as of 11:36, 25 March 2018

Reflections on Book Scanning: A Feminist Reader

Key questions:

- How can we use feminist methodologies as a tool to unravel the known and unknown history of book scanning?

- What is lost and what is gained when we scan / digitize a book?

- What culture do we reproduce when we scan?

The last question became the focus of my individual research this trimester. Both my reader and my script are critiques of the way human bias can multiply from medium to medium, especially when the power structures that regulate these media remain unchallenged and when knowledge spaces present themselves as universal or immediate.

My research

Key topics:

- How existing online libraries like Google Books select, structure and use literary works

- The human bias in all technological processes, algorithms and media

- Gender and power structures in language, and especially the written word

- Women's underrepresentation in science, technology and literature

- The politics of transparency and seamlessness of digital interfaces

- The male-dominated canon and Western-centric systems of knowledge production and regulation

Being a writer myself, I wanted to explore a feminist critique of the literary canon: of who is included and who is excluded when it comes to the written word. The documentary on Google Books and the World Brain also raised questions about who controls these processes, and to what end. Technology is not neutral, even less so than science, as it is primarily concerned with the creation of artefacts. In book scanning (widely seen as the ultimate means of compiling the entirety of human knowledge), it is still people who write the code, select the books to scan and design the interfaces used to access them. To separate this labour from its results is to overlook much of the social and political dimension of knowledge production.

As such, my reader questions how human biases and cultural blind spots are transferred from the page to the screen, as companies like Google turn books into databases and bags of words into training sets, and use them in ways most of us know little about. My conclusion is that if we want to build more inclusive and unbiased knowledge spaces, we have to be more critical of the politics of selection and, as Johanna Drucker put it, "call attention to the made-ness of knowledge."
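
The phrase "bags of words into training sets" can be made concrete with a minimal Python sketch. It assumes naive lowercase whitespace tokenization, and `bag_of_words` and `to_training_example` are hypothetical helpers written for illustration, not Google's or any other company's actual pipeline. It shows how a sentence loses its word order once it is reduced to counts and paired with a label.

```python
from collections import Counter


def bag_of_words(sentence):
    """Reduce a sentence to unordered, lowercased word counts (naive whitespace split)."""
    return Counter(sentence.lower().split())


def to_training_example(sentence, label):
    """Hypothetical helper: pair a bag of words with a category label, as a training set would."""
    return (bag_of_words(sentence), label)


example = to_training_example("The past interrupts the present", "quote")
print(example)

# Word order is discarded: both sentences reduce to the same bag of words.
print(bag_of_words("the present interrupts the past") == bag_of_words("The past interrupts the present"))
```

The last `print` outputs `True`: the two differently ordered sentences are indistinguishable once counted, before any training has even begun.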

Final list of works included

On the books we upload

- The Book: Its Past, Its Future: An Interview with Roger Chartier

- Webs of Feminist Knowledge Online by Sanne Koevoets

On the canon which excludes

- Feminist Challenges to the Literary Canon by Lillian Robinson

- I am a Woman Writer, I am a Western Writer: An Interview with Ursula Le Guin

- Merekam Perempuan Penulis Dalam Sejarah Kesusastraan: Wawancara dengan Melani Budianta (Recording Women Writers in Literary History: An Interview with Melani Budianta)

- Linguistic Sexism and Feminist Linguistic Activism by Anne Pauwels

On what the surface hides

- Windows and Mirrors: The Myth of Transparency by Jay Bolter and Diane Gromala

- Performative Materiality and Theoretical Approaches to Interface by Johanna Drucker

- On Being Included by Sara Ahmed

To see my Zotero library: click here

Design & Production

The design of my reader was inspired by the following feminist methodologies:

- Situated knowledges

The format we chose for the whole reader (each of us making our own unique chapter) reflects the idea that knowledge is inextricable from its context: its author, their worldview, their intentions. This is also why I chose to include a short biography of myself and to weave my own personal views and annotations throughout the content of my reader.

- Performative materiality

The diverse formats, materials and designs of our readers also mean that the scanning process will never be the same twice. It becomes more performative, as decisions have to be made: which reader should be scanned first? In which direction (since some text is laid out at different angles)? What will be left out, and what will be kept? Again, we ask the audience to pay more attention to who is scanning and how things are being scanned.

- Intersectional feminism

My reader also includes an article written in Indonesian. As an Indonesian artist, I am always aware of how Western my education is and has been. I wanted to comment on the fact that a large share of the books scanned to date are of Anglo-American origin.

- Diversity in works

Nine of the 13 authors and interview subjects in my reader are women. I learnt how important and revealing citation lists can be.

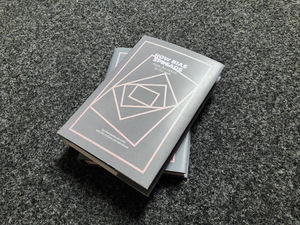

As my subject was the literary canon, I decided to design my reader as a traditional hardcover book. I set up the layout following Jan Tschichold's rules of style. Within these conventional forms, I made unconventional choices, such as using pink paper and setting all of my annotations at a 90-degree angle. The graphic image on the dust cover was designed to evoke the mutation of media, from page to screen and back.

PDF: File:ReaderNB Final Spreads.pdf

Software

- Following on from my research for my reader, my central question became how to visualise, play with or emphasise the way cultural biases and blind spots are multiplied from medium to medium

- Other concepts include the echo chamber of the internet, the inclusion and visibility of minorities, the coded gaze, and how design can challenge or perpetuate dominant narratives

- Important references:

  - "If the content of the books we scan is exclusive and incomplete, how can we ensure that it is at least distributed and treated as such?" - from my reading of Johanna Drucker's Performative Materiality

  - "Feminist and race theorists over generations have taught us that to inhabit a category of privilege is not to come up against the category... When a category allows us to pass into the world, we might not notice that we inhabit that category. When we are stopped or held up by how we inhabit what we inhabit, then the terms of habitation are revealed to us." - Sara Ahmed, On Being Included (2012)

  - "The past interrupts the present." - Grada Kilomba

  - "Calls for tech inclusion often miss the bias that is embedded in written code. Frustrating experiences with using computer vision code on diverse faces remind me that not all eyes or skin tones are easily recognized with existing code libraries." - Joy Buolamwini (https://medium.com/mit-media-lab/incoding-in-the-beginning-4e2a5c51a45d)

Tests & experiments

Session with Manetta & Cristina on training supervised classifiers (positive vs. negative and rational vs. emotional): binaries, data sets and protocols.

Script:

    import nltk
    import random
    import pickle

    # nltk.sent_tokenize and nltk.word_tokenize need the 'punkt' models: nltk.download('punkt')

    input_a = 'input/emotional.txt'
    input_b = 'input/rational.txt'

    documents = []  # (tokens, category) pairs, one per sentence
    all_words = []  # every token from both files, lowercased

    def read_input_text(filename, category):
        with open(filename, 'r', encoding='utf-8') as txtfile:
            string = txtfile.read()
        sentences = nltk.sent_tokenize(string)
        vocabulary = []
        for sentence in sentences:
            words = nltk.word_tokenize(sentence)
            vocabulary.append(words)
            documents.append((words, category))
            for word in words:
                all_words.append(word.lower())
        return vocabulary

    vocabulary_a = read_input_text(input_a, 'emotional')
    vocabulary_b = read_input_text(input_b, 'rational')

    print('Data size (emotional):', len(vocabulary_a))
    print('Data size (rational):', len(vocabulary_b))

    # baseline: share of sentences in each category, i.e. the accuracy of always guessing that category
    total = len(vocabulary_a) + len(vocabulary_b)
    baseline_a = len(vocabulary_a) / total * 100
    baseline_b = len(vocabulary_b) / total * 100
    print('Baseline: ' + str(baseline_a) + '% / ' + str(baseline_b) + '%')

    random.shuffle(documents)

    # the 100 most frequent words across both files (all_words is already lowercased)
    most_freq = nltk.FreqDist(all_words).most_common(100)

    def create_document_features(document):
        # document = list of words from one sentence of the two input files (reshuffled above),
        # or a plain string, which is tokenized first
        if isinstance(document, str):
            document = nltk.word_tokenize(document)
        # lowercase the tokens so they match the lowercased words in most_freq
        lowered = [word.lower() for word in document]
        document_words = set(lowered)  # set = unique words
        features = {}

        # add word-count features
        for word, num in most_freq:
            count = lowered.count(word)
            features['contains({})'.format(word)] = count
            # features['contains({})'.format(word)] = (word in document_words)

        # # add Part-of-Speech features
        # selected_tag_set = ['JJ', 'CC', 'IN', 'DT', 'TO', 'NN', 'AT', 'RB', 'PRP', 'VB', 'NNP', 'VBZ', 'VBN', '.']  # the feature set
        # tags = []
        # document_pos_items = nltk.pos_tag(document)
        # if document_pos_items:
        #     for word, t in document_pos_items:
        #         tags.append(t)
        # for tag in selected_tag_set:
        #     features['pos({})'.format(tag)] = tags.count(tag)

        print('\n')
        print(document)
        print(features)
        return features

    # *** TRAIN & TEST ***

    featuresets = []
    for (document, category) in documents:
        features = create_document_features(document)
        featuresets.append((features, category))

    # training: first 80% of the shuffled data; testing: the remaining 20%
    train_num = int(len(featuresets) * 0.8)
    # slicing at the same index keeps the training and test sets disjoint
    train_set, test_set = featuresets[:train_num], featuresets[train_num:]

    classifier = nltk.NaiveBayesClassifier.train(train_set)

    print(classifier.classify(create_document_features('Language is easy to capture but difficult to read.')))

    # shows how accurate your classifier is, based on the test results
    print(nltk.classify.accuracy(classifier, test_set))  # http://www.nltk.org/book/ch06.html#accuracy
    # result recorded before the split and lowercasing fixes: 0.7666666666666667

    # save classifier as .pickle file
    # with open('my_classifier.pickle', 'wb') as f:
    #     pickle.dump(classifier, f)
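
For readers curious about what a naive Bayes classifier does with feature dictionaries like the ones above, here is a simplified, self-contained sketch over discrete features with add-one (Laplace) smoothing. It is an illustration under stated assumptions, not NLTK's implementation: NLTK's `NaiveBayesClassifier.train` takes its own `estimator` argument and smooths differently. The toy sentences, the function names (`word_count_features`, `train_naive_bayes`, `classify`, `accuracy`) and the vocabulary size are all invented for this example. It also computes a majority-class baseline and splits 80/20 with non-overlapping slices (`[:train_num]` and `[train_num:]`).

```python
import math
import random
from collections import Counter, defaultdict


def word_count_features(sentence, vocabulary):
    """Hypothetical helper: count how often each vocabulary word occurs in the sentence."""
    tokens = sentence.lower().split()
    return {'contains({})'.format(w): tokens.count(w) for w in vocabulary}


def train_naive_bayes(labeled_featuresets):
    """Count labels, and how often each feature takes each value under each label."""
    label_counts = Counter(label for _, label in labeled_featuresets)
    value_counts = defaultdict(lambda: defaultdict(Counter))  # [label][feature][value] -> count
    seen_values = defaultdict(set)  # feature -> values observed in training
    for feats, label in labeled_featuresets:
        for name, value in feats.items():
            value_counts[label][name][value] += 1
            seen_values[name].add(value)
    return label_counts, value_counts, seen_values


def classify(model, feats):
    """Pick the label maximising log P(label) + sum of log P(feature = value | label)."""
    label_counts, value_counts, seen_values = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_label in label_counts.items():
        score = math.log(n_label / total)  # log prior
        for name, value in feats.items():
            # add-one smoothing; the extra slot (+1) reserves probability for unseen values
            n_values = len(seen_values[name]) + 1
            count = value_counts[label][name][value]
            score += math.log((count + 1) / (n_label + n_values))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def accuracy(model, test_set):
    correct = sum(1 for feats, label in test_set if classify(model, feats) == label)
    return correct / len(test_set)


# Invented toy data, for illustration only
toy_data = [
    ("i feel so happy and so hopeful", "emotional"),
    ("this makes me angry and sad", "emotional"),
    ("i love how it feels", "emotional"),
    ("i am so scared and upset", "emotional"),
    ("what a wonderful and joyful day", "emotional"),
    ("the results follow from the data", "rational"),
    ("the method is consistent and efficient", "rational"),
    ("therefore the argument is valid", "rational"),
    ("the evidence supports the hypothesis", "rational"),
    ("we measure the error of the model", "rational"),
]

random.seed(0)
random.shuffle(toy_data)

word_freq = Counter(w for sentence, _ in toy_data for w in sentence.split())
vocabulary = [w for w, _ in word_freq.most_common(10)]
featuresets = [(word_count_features(s, vocabulary), label) for s, label in toy_data]

train_num = int(len(featuresets) * 0.8)
train_set, test_set = featuresets[:train_num], featuresets[train_num:]

label_share = Counter(label for _, label in featuresets)
baseline = max(label_share.values()) / len(featuresets)  # accuracy of always guessing the majority label

model = train_naive_bayes(train_set)
print('Baseline (majority class):', baseline)
print('Accuracy:', accuracy(model, test_set))
```

With only ten invented sentences the accuracy figure means little; the point is the shape of the computation, and that the baseline is the number any classifier has to beat before its "knowledge" says anything at all.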