User:Ruben/Prototyping/Sound and Voice: Difference between revisions



[[File:VoiceDetection1.png|200px|thumbnail|right|ugly graph of the second version]]


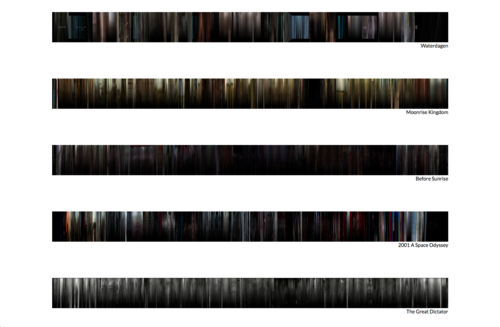


[[File:VoiceDetection2.png|500px|thumbnail|none|A third version]]

<references></references>

Revision as of 01:04, 15 January 2015

A project using speech recognition (Pocketsphinx) in Python. [1]

This script has undergone many iterations.

The first version merely extracted the parts of a film that contain speech.
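A minimal sketch of that first step, assuming the recognizer has already produced a list of `(start, end)` speech segments in seconds (the original script's data format is not documented here). The helper names `extract_commands` and `extract_segments` are hypothetical; only the ffmpeg options (`-y`, `-i`, `-ss`, `-t`, `-vn`) are standard.

```python
import subprocess
from typing import List, Tuple

# (start, end) in seconds; assumed to come from the recognizer's output
Segment = Tuple[float, float]


def extract_commands(film: str, segments: List[Segment],
                     prefix: str = "speech") -> List[List[str]]:
    """Build one ffmpeg command per speech segment (hypothetical helper)."""
    commands = []
    for i, (start, end) in enumerate(segments):
        if end <= start:
            raise ValueError(f"empty segment {start}-{end}")
        commands.append([
            "ffmpeg", "-y",               # overwrite existing output files
            "-i", film,
            "-ss", f"{start:.3f}",        # start of the spoken piece
            "-t", f"{end - start:.3f}",   # its duration
            "-vn",                        # audio only, drop the video stream
            f"{prefix}_{i:03d}.wav",
        ])
    return commands


def extract_segments(film: str, segments: List[Segment],
                     prefix: str = "speech") -> None:
    """Run the commands; requires ffmpeg on the PATH."""
    for cmd in extract_commands(film, segments, prefix):
        subprocess.run(cmd, check=True)
```

Building the command lists separately from running them keeps the logic testable without ffmpeg installed.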

The second version created an ugly graph showing how much speech occurs in each part of a film (according to the speech recognition, which often detects speech that is not actually there).
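The data behind such a graph can be computed by splitting the film into fixed-length windows and measuring what fraction of each window the recognizer marked as speech. This is a sketch under the assumption that speech is given as `(start, end)` pairs in seconds; `speech_per_bin` and `text_graph` are hypothetical names, and the text bar chart merely stands in for the original plot.

```python
import math
from typing import List, Tuple

Segment = Tuple[float, float]  # (start, end) in seconds, assumed format


def speech_per_bin(segments: List[Segment], duration: float,
                   bin_size: float) -> List[float]:
    """Fraction of each bin_size-second window marked as speech."""
    if duration <= 0 or bin_size <= 0:
        raise ValueError("duration and bin_size must be positive")
    n_bins = int(math.ceil(duration / bin_size))
    totals = [0.0] * n_bins
    for start, end in segments:
        start, end = max(0.0, start), min(duration, end)
        b = int(start // bin_size)
        # add the overlap of this segment with every bin it touches
        while b < n_bins and b * bin_size < end:
            lo = max(start, b * bin_size)
            hi = min(end, (b + 1) * bin_size)
            if hi > lo:
                totals[b] += hi - lo
            b += 1
    fractions = []
    for b, total in enumerate(totals):
        length = min(bin_size, duration - b * bin_size)  # last bin may be shorter
        fractions.append(min(1.0, total / length))  # cap in case segments overlap
    return fractions


def text_graph(fractions: List[float], width: int = 40) -> str:
    """One row of '#' characters per bin, proportional to its speech fraction."""
    return "\n".join(f"{i:3d} {'#' * round(f * width)}"
                     for i, f in enumerate(fractions))
```

For example, `print(text_graph(speech_per_bin(segments, duration=5400, bin_size=60)))` would print one row per minute of a 90-minute film.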

A third version could detect speech using Pocketsphinx. It then used ffmpeg and ImageMagick to extract frames from the film and append them into a single image. Wherever there is speech, this image is overlaid with a black gradient so as to 'hide' the image.
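A rough sketch of this pipeline, assuming one frame every `interval` seconds, all frames scaled to the same `width` by `height` pixels, and speech given as `(start, end)` pairs in seconds. The shape of the original gradient is not described, so the overlay here is an assumption: fully black during speech, fading linearly to transparent over `fade` seconds on either side, and drawn as one semi-transparent black rectangle per frame (a stepwise approximation of a gradient). All function names are hypothetical; `convert` is the ImageMagick 6 command (ImageMagick 7 calls it `magick`).

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start, end) in seconds, assumed format


def overlay_opacity(t: float, segments: List[Segment], fade: float = 5.0) -> float:
    """Assumed overlay shape: 1.0 inside speech, linear fade to 0.0 over `fade` s."""
    best = 0.0
    for start, end in segments:
        if start <= t <= end:
            return 1.0  # inside speech: frame fully hidden
        if fade > 0:
            distance = start - t if t < start else t - end
            best = max(best, 1.0 - distance / fade)
    return best


def extract_frames_cmd(film: str, interval: float, width: int,
                       pattern: str = "frame_%04d.png") -> List[str]:
    """ffmpeg: one frame every `interval` seconds, scaled to `width` px wide."""
    return ["ffmpeg", "-i", film, "-vf", f"fps=1/{interval},scale={width}:-1", pattern]


def overlay_cmd(frames: List[str], out: str, segments: List[Segment],
                interval: float, width: int, height: int,
                fade: float = 5.0) -> List[str]:
    """ImageMagick: append frames left to right, then darken each frame's column."""
    cmd = ["convert", *frames, "+append"]
    for i in range(len(frames)):
        t = i * interval  # assumed timestamp of frame i
        alpha = overlay_opacity(t, segments, fade)
        if alpha > 0:
            x0, x1 = i * width, (i + 1) * width - 1
            cmd += ["-fill", f"rgba(0,0,0,{alpha:.2f})",
                    "-draw", f"rectangle {x0},0 {x1},{height - 1}"]
    cmd.append(out)
    return cmd
```

Both command lists can be passed to `subprocess.run(..., check=True)`; the frame list for `overlay_cmd` would typically come from `sorted(glob.glob("frame_*.png"))` after ffmpeg has finished.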