User:Manetta/media-objects/i-will-tell-you-everything

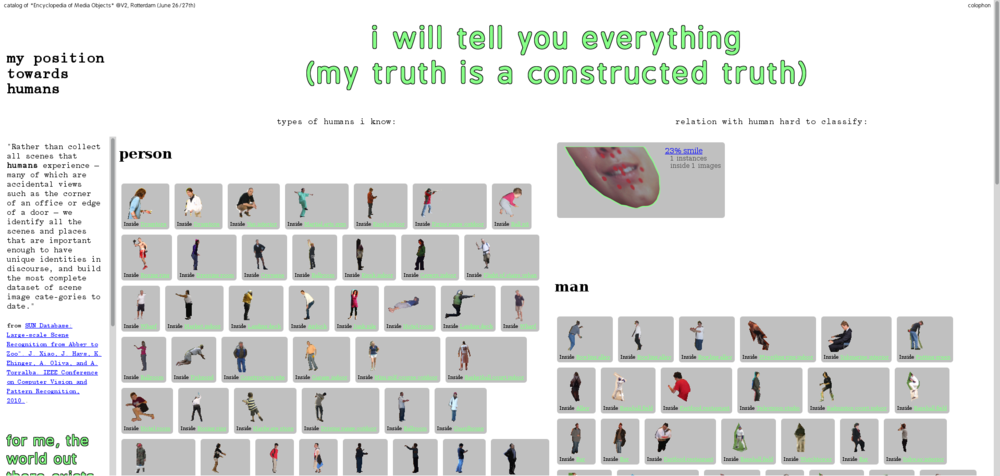

i will tell you everything (my truth is a constructed truth)

i will tell you everything, catalog for *An Encyclopedia of Media Objects*

link to the "i will tell you everything" interface

training set = "contemporary encyclopedia"

Making an encyclopedia means deciding on categories into which various objects are placed. It is a search for a universal system to describe the world.

Training sets are used to train data-mining algorithms for pattern recognition. These training sets are the contemporary version of the traditional encyclopedia. Since the famous *Encyclopédie, ou dictionnaire raisonné des sciences, des arts et des métiers*, initiated by Diderot and D'Alembert in 1751, the encyclopedia has been a body of information for sharing knowledge from human to human. Contemporary encyclopedias, however, are constructed to share structures and information from humans with machines. As the researchers behind the SUN dataset put it in their concluding paper:

we need datasets that encompass the richness and varieties of environmental scenes and knowledge about how scene categories are organized and distinguished from each other.

In order to automate recognition, researchers are once again prompted to reconsider their categorization structures and to question how objects are classified into these categories. Training sets give a glimpse into the process of constructing such a simplified model of reality, and reveal the difficulties that appear along the way.

steps in constructing such an encyclopedia:

1. The material for the SUN group's training set is collected by running queries in search engines. Result: the training set consists of typical low-quality digital images of the kind commonly found on the web.

2. The SUN group decides which categories to merge, drop, or add, by judging their visual and semantic strength.

3. The SUN group asks 'mechanical turks' to annotate the images by vectorizing (outlining) the objects that appear in each scene. Small objects disappear, while commonly occurring objects become the objects that the system will learn to recognize.

Also, the most frequently annotated scene is 'living room', followed by 'bedroom' and 'kitchen'. The probability that 'living room' will be the outcome of the recognition algorithm is therefore much higher than for scenes that are annotated less often. These frequencies thus form a kind of categorization in themselves: a hierarchy that, although not directly decided upon by the research group, originates from the selection of scenes the annotators worked on.

4. Start data mining: a set of unseen images is given to the algorithm. For each image, the algorithm returns the percentage to which it seems to be a certain scene.

5. The results of the algorithm are converted into a level of accuracy. If needed, the training set is adjusted in order to reach better, more accurate results: categories are merged, dropped or invented.
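The frequency effect in step 3, together with the percentage output of step 4 and the accuracy check and category merging of step 5, can be sketched in a few lines of Python. This is a hypothetical illustration, not the SUN group's method: their classifiers are not described here, so a simple Bayesian scoring (how much an image looks like a scene, multiplied by that scene's share of the annotations) is swapped in. The annotation counts, the 'lighthouse' category and the function names `class_priors`, `scene_percentages`, `merge_categories` and `accuracy` are all invented for the example.

```python
from collections import Counter

# HYPOTHETICAL annotation counts, invented for illustration only
# (not the real SUN statistics). The ordering follows the text:
# 'living room' most often, then 'bedroom', then 'kitchen'.
ANNOTATED = (["living room"] * 50 + ["bedroom"] * 30
             + ["kitchen"] * 18 + ["lighthouse"] * 2)


def class_priors(labels):
    """Return each category's share of the annotated training set."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {scene: n / total for scene, n in counts.items()}


def scene_percentages(likelihoods, priors):
    """Step 4 (sketch): weight how much an image 'looks like' each scene
    by that scene's prior, then rescale so the scores add up to 100 (%)."""
    scores = {scene: likelihoods.get(scene, 0.0) * p for scene, p in priors.items()}
    total = sum(scores.values())
    if total == 0:
        raise ValueError("image matches no known scene")
    return {scene: 100 * s / total for scene, s in scores.items()}


def merge_categories(labels, mapping):
    """Step 5 (sketch): relabel categories, e.g. fold one scene into another."""
    return [mapping.get(label, label) for label in labels]


def accuracy(predicted, expected):
    """Step 5 (sketch): share of test images whose top guess is correct, in %."""
    hits = sum(p == e for p, e in zip(predicted, expected))
    return 100 * hits / len(expected)


if __name__ == "__main__":
    priors = class_priors(ANNOTATED)
    # An ambiguous image that looks equally like every scene:
    # the annotation counts alone decide the outcome.
    ambiguous = {scene: 1.0 for scene in priors}
    result = scene_percentages(ambiguous, priors)
    print(max(result, key=result.get))  # living room
```

Under these made-up counts, even an image that looks ten times more like a lighthouse than like any other scene would still be labeled 'living room' (a score of 0.2 against 0.5 before rescaling): the hierarchy of step 3 at work.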

*i will tell you everything* is based on the structures of the SUN dataset.