User:Laurier Rochon/notes/proposalv0.1: Difference between revisions

Revision as of 22:36, 19 October 2011

Proposal v0.1 > 2011

I'm putting this up way in advance (12 days), because we all know how much it sucks to work all weekend.

Will amend every few days.

Past work

In the past year, I have made three projects. The first was a grumpy chat program that interfered with the normal flow of a conversation, the second a subscription-based soap opera newsletter inspired by daily news reports, and the last an encryption/decryption algorithm that protected your message from scrutiny, but revealed your intention to communicate under the radar.

My main concern while making these works was to highlight the mediating power held by technologies. Some works did this more literally than others, but my goal was often to make visible the characteristics of technology that lead us to believe it could be apolitical, immune to influence or simply objective. If there is anything inherent at all to Internet technology, I believe it to be its inability to remain completely objective under social, political, economic and legislative pressures. This is discussed in more depth later on. Beyond the subjectivity I attempted to reveal, the works I've produced cast cyberspace in a particular light in terms of its constitution - I believe it to be a highly narrative, open-ended space.

Code as architecture, code as law

Real space is not cyberspace. Made possible by the advent of the Internet, cyberspace has different laws that govern it, different rules people adhere to and different morals people can choose to adopt. These things are possible simply because the architecture of the Internet is different from that of real space. If a bowling ball is dropped from a skyscraper in real life, there’s not much to do except hope for the best. If the same is done in an MMORPG (massively multiplayer online role-playing game) or a MUD (multi-user dimension), the ball might drop, or it might not, depending on the rules of these spaces. Hence the design, or architecture, of a space defines what is possible and what is not within it. Many of the rules and conventions we must live by are either inherent to our world (we can’t decide whether a meteor crashes into the earth) or simply outside of our reach (we cannot choose to escape the consequences of our actions). This is not the case in cyberspace.

The architecture of the Internet is made up of code. It is code that decides the “rules” I have just mentioned; it is code that governs the world of possibilities in cyberspace. If the code says that gravity does not exist in a space, then bowling balls cannot drop from skyscrapers. If the code says that you can’t have access to certain data due to your geographical location, then there is not much you can do about it - this is implemented in the code, and will remain the same until its design changes. In short, “code is law” (Lessig). Cyberspace doesn’t have anything “inherent” or “natural” about it, although many believe it does. It has formed itself through an accumulation of decisions made by different people at different times. Code cannot simply “grow” or “be found” anywhere; it is always the result of one or many people making certain decisions. In this light, coders are architects: they are the ones who decide what the Internet looks like and how it functions. They are the ones creating the protocols used by the Internet; their work defines its design, and its design informs its users of the agency it provides.
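To make the bowling ball and the geographical restriction concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `World` class, its `gravity` and `blocked_regions` fields, and the region code are illustrative names, not taken from any real game or service. The point is only that both "laws" are ordinary values somebody chose to type in.

```python
from dataclasses import dataclass


@dataclass
class World:
    """A hypothetical virtual space whose 'physics' is a design decision."""

    gravity: float  # metres per second squared; 0.0 means nothing falls
    blocked_regions: frozenset[str] = frozenset()  # regions denied access

    def drop(self, height: float, seconds: float) -> float:
        """Return the ball's height after `seconds`, never below the ground."""
        fallen = 0.5 * self.gravity * seconds ** 2
        return max(0.0, height - fallen)

    def can_read(self, region: str) -> bool:
        """Access is whatever the code says it is."""
        return region not in self.blocked_regions


# Two spaces, two sets of rules, both decided by whoever wrote the code.
earth_like = World(gravity=9.81)
floaty = World(gravity=0.0, blocked_regions=frozenset({"NL"}))

print(earth_like.drop(height=100.0, seconds=2.0))  # the ball has fallen
print(floaty.drop(height=100.0, seconds=2.0))      # the ball has not moved
print(floaty.can_read("NL"))                        # False
```

Changing the law of either space means editing one value, which is exactly why it matters who gets to edit it.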

But as coding has shifted from “research circles” (initially, the Internet was built by hackers and people in academic circles) to industry and commercial entities, we’ve seen a surge in the popularity of Internet-based technology. There are many reasons for this, one of them being the financial opportunities afforded by making “stuff that just works” for everyday users. This shift from “first” generation to “second” generation Internet (mid 1990s onwards - n.b. the dating varies by author), from building out of curiosity and principle to building for commercial purposes, has deep implications for governments, industry and most importantly, citizens using such technology.

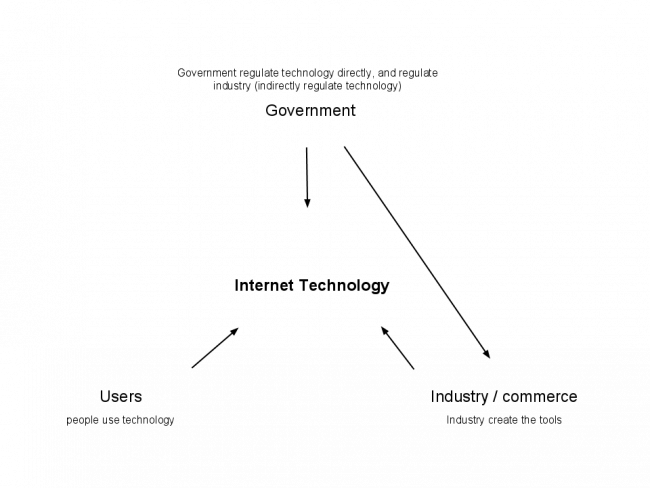

Cyberspace is still considered by many to be a vast jungle of non-regulable space, a Wild West of sorts where anything goes. This is hardly true anymore, as the evidence suggests that we are moving at full speed towards a much more controlled Internet. The way this happens is immensely complex, but a part of it could be summarized by the accompanying graph - the dance that occurs between government, industry and customers. In just a few words, governments can regulate technology directly by making laws, or indirectly by influencing the market (e.g. subsidizing a technology). Industry, the makers of software and hardware, has much interest in obeying those laws. People buy and use their tools profusely, which at once serves the companies (money, the main reason corporations exist) and governments (regulation, which is one of the reasons why governments exist). This is the thick of what I intend to research: the interactions between users, governments and commerce.

So we are faced with a strange situation, where the tools that make cyberspace more effective on the Internet (from a commercial perspective) are also the tools that make the Internet more regulable by government. For example, technologies of identification reduce the chance of fraud for businesses; technologies that reveal your true geographical position (from your IP address) allow for customized ads and more accurate shopping experiences while lessening the chance of fraud (again); and technologies that gather and analyze data more efficiently can reduce the chances of security threats by screening packets for malicious data. Simultaneously, better technologies of identification allow for more effective enforcement of laws by governments, technologies that reveal your geographical position are ideal for tracking people, and technologies that gather and analyze data are perfect for eavesdropping on citizens suspected of illegal activity. There is much to say on this topic, but the obvious problem here is that government doesn’t even have to nudge a single bit, and industry can (and seemingly will) provide all the tools needed to regulate the Internet effectively. As mentioned earlier, there is no inherent nature to the Internet. If, however, there is an invisible hand, it is clearly meant to transform the Internet into a tool for perfect control (Lessig).
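The double use described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any real ad network or agency works: the `REGIONS` table, `locate`, `pick_ad` and `log_visit` are all hypothetical names, and the addresses come from the ranges reserved for documentation. Only the standard-library `ipaddress` module is real.

```python
import ipaddress

# Hypothetical prefix-to-region table, using reserved documentation ranges.
REGIONS = {
    ipaddress.ip_network("192.0.2.0/24"): "NL",
    ipaddress.ip_network("198.51.100.0/24"): "CA",
}


def locate(ip: str) -> str:
    """Map an address to a region; 'unknown' when no prefix matches."""
    address = ipaddress.ip_address(ip)
    for network, region in REGIONS.items():
        if address in network:
            return region
    return "unknown"


def pick_ad(ip: str) -> str:
    """Commercial use: tailor an advertisement to the visitor's region."""
    return f"ad-for-{locate(ip)}"


def log_visit(ip: str, page: str, log: list) -> None:
    """Regulatory use: the same lookup, recorded instead of monetised."""
    log.append((locate(ip), ip, page))


visits = []
print(pick_ad("192.0.2.17"))
log_visit("192.0.2.17", "/forum/thread-9", visits)
print(visits)
```

Both callers lean on the same `locate` function; nothing in the lookup itself distinguishes selling from tracking.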

Hypothetical (no)things

- After having read for the n-th time Neal Stephenson's "In the Beginning Was the Command Line" (which I highly recommend!), I would perhaps be interested in looking more closely at the functioning of operating systems. They are often the building blocks atop which the different layers of the Internet (4-7) connect with everyday user applications. They are probably the single most important piece of software in use today, and the OS war is as strong as ever.

- Albeit a harder endeavour, it could be great if I managed to make a physical artifact to demonstrate these relationships. The relationships I intend on unearthing are quite insidious, and giving them physicality would probably make for a stronger argument.

- Binaries: surveillance, security, identification. Although I'm not overly interested in these topics per se (but rather in what creates these things, what kind of ecosystem breeds the values that reflect them), it could be interesting to lean into one or the other specifically. They are all very double-edged processes: surveillance, for example, can increase security but decrease individual liberty. Added security makes us less vulnerable to harm, but again constrains our liberty.

- Open-source/closed-source code. Open-sourcing code can make governments more accountable for what they do. If "code is law", then "which kind" of code becomes critical, if we can ever hope to change it.

Works to read/reference

- Vincent Mosco ("The Digital Sublime")

- Brian Arthur ("The Nature of Technology")

- Charles Dickens ("A Tale of Two Cities")

- Howard Rheingold ("Smart Mobs")

- Lawrence Lessig ("Code: Version 2.0")

- Tim Wu ("Network Neutrality, Broadband Discrimination")

- Jack Goldsmith ("Who Controls the Internet?")

- Yochai Benkler ("The Wealth of Networks")

- Jonathan Zittrain ("The Generative Internet")

- John Perry

- Neal Stephenson

- David Lyon

- William Gibson

- John Perry Barlow

- David Shenk

- Michael Geist

- Richard Thaler and Cass Sunstein ("Nudge")

- Evgeny Morozov ("The Net Delusion")