User:Artemis gryllaki/PrototypingIII

Revision as of 21:56, 8 July 2019

Publishing an “image gallery”

ImageMagick’s suite of tools includes montage, which is flexible and useful for making a quick overview page of images.

- mogrify

- identify

- convert

Sizing down a bunch of images

Warning: MOGRIFY MODIFIES THE IMAGES IN PLACE, OVERWRITING THE ORIGINALS. Make a copy of the images before you do this!

mogrify -resize 1024x *.JPG
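A safer variant of the resize step, sketched under the assumption that the photos are .JPG files in the current folder: mogrify's -path option writes the results into another folder and leaves the originals alone. The function name resize_copies and the default folder name resized are my own, hypothetical choices.

```shell
#!/bin/sh
# resize_copies (a hypothetical helper): resize every .JPG in the current
# folder to 1024 pixels wide, writing the results into a separate folder
# so the originals are never overwritten.
resize_copies() {
    # Output folder: first argument, or "resized" by default (hypothetical name).
    outdir="${1:-resized}"
    mkdir -p "$outdir"
    # -path makes mogrify write into $outdir instead of modifying in place;
    # 1024x fixes the width at 1024 pixels and keeps the aspect ratio.
    mogrify -path "$outdir" -resize 1024x *.JPG
}
```

Run resize_copies inside the photo folder; the resized copies end up in resized/, and the orientation and montage steps can then be run there.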

Fixing the orientation of images

mogrify -auto-orient *.JPG

Using Montage

montage -label "%f" *.JPG \
    -shadow \
    -geometry 1000x1000+100+100 \
    montage.caption.jpg

Using pdftk to put things together

OCR

Simple tesseract usage (the recognized text is saved to outputbase.txt):

tesseract nameofpicture.png outputbase

https://github.com/tesseract-ocr/tesseract

See pad: https://pad.xpub.nl/p/IFL_2018-05-14

Prototyping

Image classifier for annotations

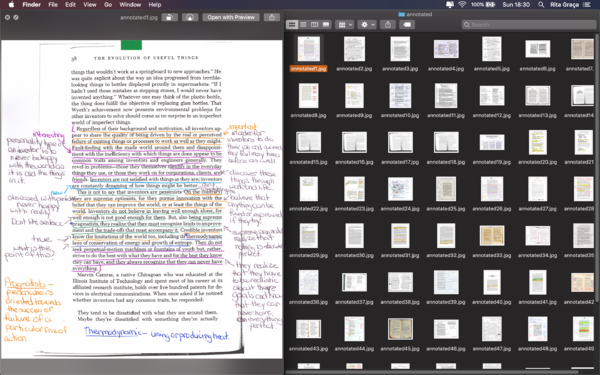

At the time of this special issue, annotation was a shared point of interest. We were reading and annotating texts together and debating ways of sharing these notes. One discussion in particular was about what could or should count as annotation: folding the corners of pages, linking to other content, highlighting, scribbling, drawing. I was curious whether we could train a computer to recognize all of these traces, so I started prototyping some examples.

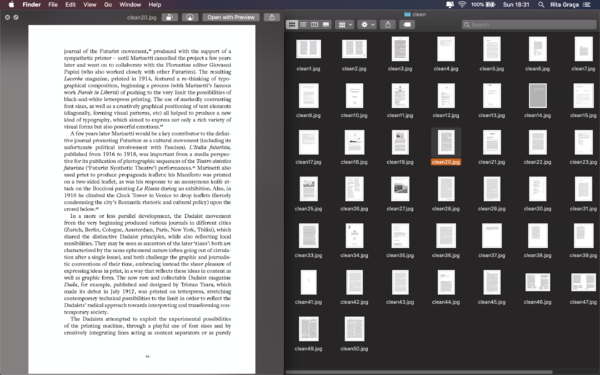

Aim: make the computer distinguish "clean" book pages from "annotated" book pages.

I used the script from .py.rate.chnic session 2 (see the pad notes and Alex's git) with my own data set.

Each set (training and test) had 50 examples of "clean" pages and "annotated" pages; adding more examples in the future would make sense.

The results were not very accurate. Pages with handwritten text gave better results, while highlighting and computer-made notes were often misclassified.

It would be useful to see what the computer is looking for, to understand whether the script breaks the image into parts, and to try other scripts.

Some results:

Computer categorization for text files

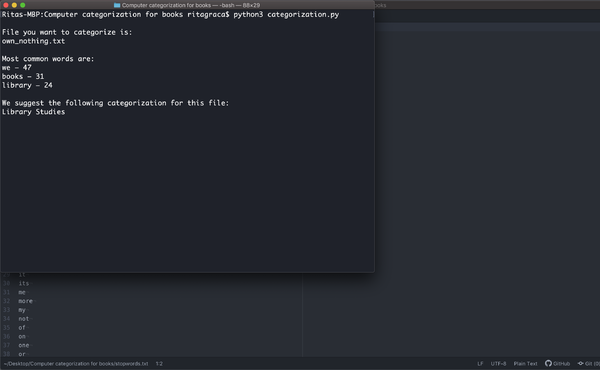

Categorizing and cataloging happen in the most mundane activities, but they are not innocent: they encode values and particular visions of the world.

At the Rietveld Academy Library, we saw how the librarians are challenging the Library of Congress Classification. With Dušan, we browsed the Monoskop Index, an interesting combination of a “book index, library catalog, and tag cloud”.

With this script, I was experimenting with automated classification of text files. The script finds the three most common words in a text and tries to match them to a category. For example, if one of the most common words is “books”, the text is assigned to the category “Library Studies”. The same happens with “archives”, “author”, “bibliographic”, “bibliotheca”, “book”, “bookcase”, etc. The script has only one category so far, but adding more would be easy. In doing so, however, I would be making associations that are very personal and sometimes inaccurate, and I would be building a bias into the catalog.
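A minimal sketch of the idea in shell (not the original script, whose code isn't on this page): split the text into words, keep the three most common ones, and look them up in a keyword list. The stopword list, the function names top_words and categorize, and the fallback label "Uncategorized" are my assumptions; without some stopwords, words like "the" and "and" would almost always be the most common.

```shell
#!/bin/sh
# A sketch of frequency-based categorization, NOT the original script.
# STOPWORDS, LIBRARY_KEYWORDS, top_words, categorize and the
# "Uncategorized" fallback are hypothetical names and choices.

# Very common words to ignore (an illustrative, incomplete list).
STOPWORDS="the a an and or of to in on is are was were it its that this for with as by at from be"

# Words that put a text in the "Library Studies" category.
LIBRARY_KEYWORDS="archives author bibliographic bibliotheca book bookcase books"

# Print the three most common non-stopwords of a text file, one per line.
top_words() {
    # Split into one word per line, lowercase, drop empty lines and stopwords,
    # then count, rank by frequency, and keep the top three words.
    tr -cs '[:alpha:]' '\n' < "$1" \
        | tr '[:upper:]' '[:lower:]' \
        | awk -v stop="$STOPWORDS" '
            BEGIN { n = split(stop, s, " "); for (i = 1; i <= n; i++) skip[s[i]] = 1 }
            $0 != "" && !($0 in skip)' \
        | sort | uniq -c | sort -rn \
        | head -n 3 \
        | awk '{ print $2 }'
}

# Print "Library Studies" if one of the top three words is a keyword,
# otherwise the hypothetical fallback "Uncategorized".
categorize() {
    for word in $(top_words "$1"); do
        for keyword in $LIBRARY_KEYWORDS; do
            if [ "$word" = "$keyword" ]; then
                echo "Library Studies"
                return 0
            fi
        done
    done
    echo "Uncategorized"
}
```

Running categorize mytext.txt prints the category. Adding another category would mean another keyword list and another check in categorize, which is exactly where the personal associations mentioned above would creep in.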