Sniff, Scrape, Crawl (Thematic Project): Difference between revisions

Renee Turner (talk | contribs) (Created page with "'''Sniff, Scrape, Crawl… <br>Trimester 2, Jan.-March 2011 <br>Thematic Project Tutors: Aymeric Mansoux, Michael Murtaugh, Renee Turner''' '' Our society is one not of spectac...") |


'''Sniff, Scrape, Crawl…'''

'''<br>Trimester 2, Jan.-March 2011'''

'''<br>Thematic Project Tutors: Aymeric Mansoux, Michael Murtaugh, Renee Turner'''<br>

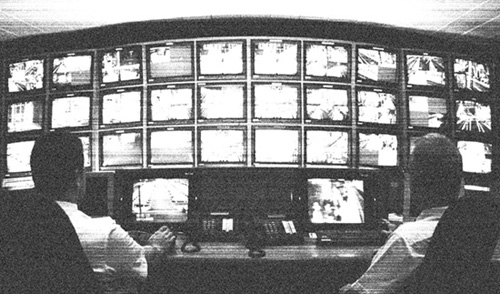

[[file:Watching_you.jpg]]<br>

<br>''Our society is one not of spectacle, but of surveillance…''

<br>Michel Foucault, '''Discipline and Punish: The Birth of the Prison''', trans. Alan Sheridan (New York, 1979), p. 217


Where once surveillance technologies belonged to governmental agencies, the web has added another less optically-driven means of both monitoring and monetizing our lived experiences. As the line between public and private has become more blurred and the desire for convenience ever greater, our personal data has become a prized commodity upon which industries thrive. Perversely, we have become consumers who simultaneously produce the product through our own consumption.

'''Sniff, Scrape, Crawl…''' is a thematic project examining how surveillance and data-mining technologies shape and influence our lives, and what consequences they have for our civil liberties. We will look at the complexities of sharing information in exchange for waiving privacy rights. In addition, we will look at how our fundamental understanding of private life has changed as public display has become more pervasive through social networks. Bringing together practical exercises, theoretical readings and a series of guest lectures, Sniff, Scrape, Crawl… will attempt to map the data trails we leave behind and look critically at the buoyant industries that track and commodify our personal information.

References: Michel Foucault, '''Discipline and Punish: The Birth of the Prison''', trans. Alan Sheridan (New York, 1979)

Wendy Hui Kyong Chun, '''Control and Freedom: power and paranoia in the age of fiber optics''', (London, 2006)

Note: This Thematic Project will be organized and taught by Aymeric Mansoux, Michael Murtaugh and Renee Turner, and will involve a series of related guest lectures and presentations.

== Workshop 1: 20-21 Jan, 2011 ==

=== Day 1 ===

==== Morning: SNIFF, Web 0.0 ====

* The joy of basic client/server [[Netcat Chat]]

* Internet geography http://hulu.com

* Connect to pzwart3 "geoip" script with netcat [[Get some HTTP content without a browser | like this]] '''TODO: put script on server, make a recipe and link it here'''

* Repeat the same with a browser and witness "Software sorting"

* Create a generic user account on a non-discriminated machine, following [[Create a new user account on your GNU/Linux machine| these instructions]]

* Tunnel traffic through this machine [[Tunnel your HTTP traffic with ssh | with this trick]]

* [[Wrap network traffic of almost any application with a SOCKS proxy]]

* Sniff requested URLs on the proxy machine with [[Sniff HTTP traffic on your machine | urlsnarf]]

* A word on traceroute & DNS, Internet topology (http://laramies.blogspot.com/2006/05/2d-and-3d-traceroute-with-scapy.html, http://www.visualcomplexity.com/vc/project.cfm?id=332)

* A word on VPN, proxies and other delicacies

* Back to the "geoblocking" script: explain the code and [[Simple CGI Script in Python|Python CGI]]

* Unix permissions (basics, but also possibly a link to API "permissions" in the Web 2.0 world)

* [http://www.youtube.com/watch?v=VTS6wUF4Y7E sniffy the pony] really does exist

==== Afternoon: SCRAPE, Web 1.0 / Simple Spider ====

Start with ipython.

Getting documentation from ipython

Using urllib, open a connection to a page (connect)

Scan for images in a page.

Loop

# Do everything by hand....

# Make Script, put in bin

(how to)

Open connections, interrogate the response.

Using [http://code.google.com/p/html5lib/ HTML5lib] & [http://codespeak.net/lxml/ lxml] for easy webpage parsing in Python.

* HTTP, URL, CSS, get vs. post, query strings, urlencoding

Cookbook: [[Simple Web Spider in Python]]

=== Day 2 ===

==== Morning: APIness / Web 2.0 ====

We now move from the surface of the "scrape" to the deeper database structure of a web 2.0 service and the data available via an API.

We took a look at the [http://www.readwriteweb.com/archives/this_is_what_a_tweet_looks_like.php anatomy of a tweet], as an introduction to the "cloud" of data around the 140 characters of a tweet publicly available via the Twitter API.

We looked at an example of an "[http://www.readwriteweb.com/archives/us_announces_120000_ipad_users_had_data_stolen_att_hack.php iPad hack]" involving cross-referencing device identifiers and emails via different publicly available sites.

Changes announced on the [http://developers.facebook.com/blog/post/446 Facebook blog] in response to privacy concerns.

Goal: Apply the same spider script -- now using data/connections available via the API.

Some topics discussed: the new-style [[wikipedia:REST | "RESTful"]] APIs, and the use of [[wikipedia:JSON | JSON]]

Cookbook: [[JSON parsing from an API and do something with it]]

''' API links '''

* [http://www.flickr.com/services/api/ flickr]

* [http://dev.twitter.com/ twitter] http://dev.twitter.com/doc/get/search

* [http://developers.facebook.com/ facebook]

* [http://developer.skype.com/ skype]

* [http://wiki.developer.myspace.com/index.php?title=Category:RESTful_API myspace]

* [http://www.last.fm/api/intro LastFM]

==== Afternoon: CRAWL with Django ====

Slowly but steadily, a new structure emerges.

Mapping JSON/API structure to a database.

Code to map source to db.

Using Django admin to visualize data.

Creating views...

>> assignment in teams: for instance:

* get a list of tags from a userid

[[Building a web crawler with Django]]

* quick note about scrapy

[[File:Djangopony.jpg]]

=== Assignment: Tactical Crawler ===

The assignment is to move from a '''Survey to a Strategy''', and to prepare a plan for a "tactical crawler" related to your research.

Your plan should both:

# Express a strategy to explore a particular / personal research question / interest / frustration / concern, and be focused on a particular use / audience / context.

# Be grounded in actual data / connections such as those explored / viewed in the workshop (via scraping or API explorations). '''Show particular data''' gathered from scraping or APIs, "by hand" or by simple scripts (such as those used in the workshop), to support your plan.

Important: Unless it already fits your plan, you do not necessarily need to work with the Django code / crawler example from the last afternoon session. This will (most likely) become more important as your work develops -- for now the important thing is the creation of your plan and your initial scrapes & the specifics of the kind of data available from APIs.

We realize that the two-day workshop was an intense session that might have been a little overwhelming. Keep in mind that this was meant to give you an overview of the different tools and strategies available for scraping and data mining. It is now up to you to decide which elements you wish to focus on and work with for the rest of the trimester.

'''Your assignment/plan will be assessed this coming Tuesday, 25 January'''

Tuesday's meeting will involve each of you presenting your plan to the group and a discussion of the plans.

''Happy Sniffing & Scraping!''

== Resources ==

* [http://scraperwiki.com/ ScraperWiki] [http://onlinejournalismblog.com/2010/07/07/an-introduction-to-data-scraping-with-scraperwiki/ discussed in relation to journalism]

* http://en.citizendium.org/wiki/Welcome_to_Citizendium & Larry Sanger

* http://www.goodiff.org/

* http://fffff.at/google-alarm/

* http://en.wikipedia.org/wiki/Eleanor_Antin

[[Category:Thematic project]]

Latest revision as of 18:35, 15 September 2014

Sniff, Scrape, Crawl…

Trimester 2, Jan.-March 2011

Thematic Project Tutors: Aymeric Mansoux, Michael Murtaugh, Renee Turner

Our society is one not of spectacle, but of surveillance…

Michel Foucault, Discipline and Punish: The Birth of the Prison, trans. Alan Sheridan (New York, 1979), p. 217

We are living in an age of unprecedented surveillance. But unlike the ominous specter of Orwell’s Big Brother, where power is clearly defined and always palpable, today’s methods of information gathering are much more subtle and woven into the fabric of our everyday life. Through the use of seemingly innocuous algorithms, Amazon tells us which books we might like, our trusted browser tracks our searches, and Last.fm connects us with people who have similar tastes in music. Immersed in social media, we commit to legally binding contracts by agreeing to ‘terms of use’. Having made the pact, we tweet our subjective realities in 140 characters or fewer, wish dear friends happy birthday on Facebook and mobile-upload our geotagged videos to YouTube.

Where once surveillance technologies belonged to governmental agencies, the web has added another less optically-driven means of both monitoring and monetizing our lived experiences. As the line between public and private has become more blurred and the desire for convenience ever greater, our personal data has become a prized commodity upon which industries thrive. Perversely, we have become consumers who simultaneously produce the product through our own consumption.

Sniff, Scrape, Crawl… is a thematic project examining how surveillance and data-mining technologies shape and influence our lives, and what consequences they have for our civil liberties. We will look at the complexities of sharing information in exchange for waiving privacy rights. In addition, we will look at how our fundamental understanding of private life has changed as public display has become more pervasive through social networks. Bringing together practical exercises, theoretical readings and a series of guest lectures, Sniff, Scrape, Crawl… will attempt to map the data trails we leave behind and look critically at the buoyant industries that track and commodify our personal information.

References:

Michel Foucault, Discipline and Punish: The Birth of the Prison, trans. Alan Sheridan (New York, 1979)

Wendy Hui Kyong Chun, Control and Freedom: power and paranoia in the age of fiber optics, (London, 2006)

Note: This Thematic Project will be organized and taught by Aymeric Mansoux, Michael Murtaugh and Renee Turner, and will involve a series of related guest lectures and presentations.

Workshop 1: 20-21 Jan, 2011

Day 1

Morning: SNIFF, Web 0.0

- The joy of basic client/server Netcat Chat

- Internet geography http://hulu.com

- Connect to pzwart3 "geoip" script with netcat like this TODO: put script on server, make a recipe and link it here

- Repeat the same with a browser and witness "Software sorting"

- Create a generic user account on a non-discriminated machine, following these instructions

- Tunnel traffic through this machine with this trick

- Wrap network traffic of almost any application with a SOCKS proxy

- Sniff requested URLs on the proxy machine with urlsnarf

- A word on traceroute & DNS, Internet topology (http://laramies.blogspot.com/2006/05/2d-and-3d-traceroute-with-scapy.html, http://www.visualcomplexity.com/vc/project.cfm?id=332)

- A word on VPN, proxies and other delicacies

- Back to the "geoblocking" script: explain the code and Python CGI

- Unix permissions (basics, but also possibly a link to API "permissions" in the Web 2.0 world)

- sniffy the pony really does exist
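The netcat exercises in this list come down to typing an HTTP request by hand over a plain TCP connection. A rough Python sketch of the same idea follows. The helper names `build_request`, `fetch_raw` and `split_response` and the host `example.org` are illustrative assumptions, not the recipe used in the workshop.

```python
import socket


def build_request(host, path="/"):
    """Return the bytes of a minimal HTTP/1.0 GET request, as one would type into netcat."""
    lines = [
        "GET {} HTTP/1.0".format(path),
        "Host: {}".format(host),
        "",  # an empty line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def fetch_raw(host, path="/", port=80, timeout=10):
    """Send the request over a plain TCP socket and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(build_request(host, path))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


def split_response(raw):
    """Split a raw response into (header text, body bytes)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    return head.decode("iso-8859-1"), body
```

Calling `split_response(fetch_raw("example.org"))` and printing the header part shows what a server sends back before any browser interprets it, which is a useful baseline when comparing responses received from different machines.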

Afternoon: SCRAPE, Web 1.0 / Simple Spider

Start with ipython.

Getting documentation from ipython

Using urllib, open a connection to a page (connect)

Scan for images in a page.

Loop

- Do everything by hand....

- Make Script, put in bin

(how to)

Open connections, interrogate the response.

Using HTML5lib & lxml for easy webpage parsing in Python.
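As a minimal sketch of "open a connection, scan for images", the following uses only the Python standard library (`html.parser` and `urllib`) in place of html5lib or lxml, to stay free of dependencies. The names `ImageScanner`, `find_images` and `scan_page` are made up for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class ImageScanner(HTMLParser):
    """Collect the src attribute of every <img> tag encountered."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                # Resolve relative paths against the URL of the page
                self.images.append(urljoin(self.base_url, src))


def find_images(html, base_url):
    """Return the absolute URLs of all images found in an HTML string."""
    scanner = ImageScanner(base_url)
    scanner.feed(html)
    return scanner.images


def scan_page(url):
    """Open a connection, read the page, and return its image URLs."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return find_images(html, url)
```

`find_images` can be tried in ipython on any HTML string, while `scan_page` does the same for a live URL. A more forgiving parser such as html5lib or lxml would likely cope better with badly broken markup.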

- HTTP, URL, CSS, get vs. post, query strings, urlencoding
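To make query strings, urlencoding and the difference between GET and POST concrete, here is a small sketch using `urllib` from the standard library. The endpoint `http://example.org/search` and the parameter names are placeholders, and no request is actually sent.

```python
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request

BASE = "http://example.org/search"  # placeholder endpoint, not a real service
params = {"q": "sniff & scrape", "page": 2}

# urlencode escapes characters that have a special meaning inside a URL:
# the space becomes "+" and the ampersand becomes "%26"
query = urlencode(params)

# GET: the encoded parameters travel in the URL itself, after the "?"
get_url = BASE + "?" + query
get_request = Request(get_url)

# POST: the same encoded string travels in the request body instead
post_request = Request(BASE, data=query.encode("ascii"))

# Decoding the query string recovers the original values (always as lists of strings)
decoded = parse_qs(urlsplit(get_url).query)

print(get_url)
print(decoded)
print(get_request.get_method(), post_request.get_method())
```

Passing either `Request` object to `urllib.request.urlopen` would send it. The only difference a server sees is where the parameters sit: in the address or in the body.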

Cookbook: Simple Web Spider in Python
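The "loop" at the heart of a spider can be sketched as a breadth-first walk over links. This is not the cookbook recipe itself, only a minimal illustration. The `fetch` argument, the names `LinkParser`, `extract_links` and `crawl`, and the `FAKE_WEB` dictionary are all assumptions made so the loop can be tried without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkParser(HTMLParser):
    """Collect the href attribute of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html, base_url):
    parser = LinkParser()
    parser.feed(html)
    # Make links absolute and drop #fragments so each page is counted once
    return [urldefrag(urljoin(base_url, href)).url for href in parser.links]


def crawl(start_url, fetch, max_pages=10):
    """Breadth-first crawl; fetch(url) returns HTML text, or None if unavailable."""
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        for link in extract_links(html, url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# A tiny made-up "web" standing in for real pages
FAKE_WEB = {
    "http://example.org/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "http://example.org/a": '<a href="/">home</a> <a href="/c">C</a>',
    "http://example.org/b": '<a href="/a">A again</a>',
}

pages = crawl("http://example.org/", FAKE_WEB.get)
print(pages)
```

Swapping `FAKE_WEB.get` for a function built on `urllib.request.urlopen` turns this into a live spider. The `seen` set and the `max_pages` limit are what keep it from looping forever or wandering across the whole web.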

Day 2

Morning: APIness / Web 2.0

We now move from the surface of the "scrape" to the deeper database structure of a web 2.0 service and the data available via an API.

We took a look at the anatomy of a tweet, as an introduction to the "cloud" of data around the 140 characters of a tweet publicly available via the Twitter API.

We looked at an example of an "iPad hack" involving cross-referencing device identifiers and emails via different publicly available sites.

Changes announced on the Facebook blog in response to privacy concerns.

Goal: Apply the same spider script -- now using data/connections available via the API.

Some topics discussed: the new-style "RESTful" APIs, and the use of JSON

Cookbook: JSON parsing from an API and do something with it
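As a hedged illustration of "parse JSON and do something with it", the sketch below reads a made-up payload and pulls out users, hashtags and whether a location is attached. The field names (`results`, `from_user`, `text`, `geo`) are assumptions chosen for the example and should not be read as the actual response format of any particular API.

```python
import json

# Made-up payload, shaped loosely like a search result from a social media API
SAMPLE = '''
{
  "results": [
    {"id": 1, "from_user": "alice", "text": "sniffing packets #netcat", "geo": null},
    {"id": 2, "from_user": "bob", "text": "scrape all the things #python #spider",
     "geo": {"coordinates": [51.92, 4.48]}}
  ]
}
'''


def hashtags(text):
    """Return the words starting with '#', without the '#' itself."""
    return [word[1:] for word in text.split() if word.startswith("#") and len(word) > 1]


def summarize(payload):
    """Turn the JSON text into a list of small dictionaries, one per message."""
    data = json.loads(payload)
    rows = []
    for item in data.get("results", []):
        rows.append({
            "user": item["from_user"],
            "tags": hashtags(item["text"]),
            "located": item.get("geo") is not None,
        })
    return rows


summary = summarize(SAMPLE)
for row in summary:
    print(row)
```

In practice the payload would come from reading an API URL rather than from a string constant, but the parsing step stays the same: `json.loads` yields ordinary Python dictionaries and lists that can be looped over like any other data.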

API links

Afternoon: CRAWL with Django

Slowly but steadily, a new structure emerges.

Mapping JSON/API structure to a database. Code to map source to db. Using Django admin to visualize data.

Creating views...
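The idea of mapping a JSON/API structure to a database can be sketched without Django. The example below uses the standard library `sqlite3` module as a stand-in for Django models, so it is not the workshop's crawler code. The table layout, the field names (`owner`, `title`, `tags`) and the sample records are invented for illustration.

```python
import json
import sqlite3

# Made-up records, shaped loosely like items returned by a photo-sharing API
SAMPLE = '''[
  {"id": 10, "owner": "u1", "title": "harbour", "tags": ["rotterdam", "water"]},
  {"id": 11, "owner": "u1", "title": "cables", "tags": ["network", "water"]},
  {"id": 12, "owner": "u2", "title": "pony", "tags": ["django"]}
]'''


def create_schema(db):
    # One table per kind of thing, plus a table for the item-to-tag relation
    db.executescript("""
        CREATE TABLE item (id INTEGER PRIMARY KEY, owner TEXT NOT NULL, title TEXT);
        CREATE TABLE item_tag (item_id INTEGER REFERENCES item(id), tag TEXT NOT NULL,
                               UNIQUE (item_id, tag));
    """)


def store(db, payload):
    """Map each JSON record onto rows; running it twice does not duplicate data."""
    for record in json.loads(payload):
        db.execute("INSERT OR REPLACE INTO item (id, owner, title) VALUES (?, ?, ?)",
                   (record["id"], record["owner"], record["title"]))
        db.executemany("INSERT OR IGNORE INTO item_tag (item_id, tag) VALUES (?, ?)",
                       [(record["id"], tag) for tag in record["tags"]])
    db.commit()


def tags_for_user(db, owner):
    """Return the distinct tags used by one user, in alphabetical order."""
    rows = db.execute(
        "SELECT DISTINCT tag FROM item_tag JOIN item ON item.id = item_tag.item_id "
        "WHERE item.owner = ? ORDER BY tag", (owner,))
    return [tag for (tag,) in rows]


db = sqlite3.connect(":memory:")
create_schema(db)
store(db, SAMPLE)
user_tags = tags_for_user(db, "u1")
print(user_tags)
```

In Django the two tables would be declared as model classes and the admin interface would provide the browsing, but the mapping decision is the same: which parts of the JSON become rows, and which become relations between rows.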

>> assignment in teams: for instance:

- get a list of tags from a userid

Building a web crawler with Django

- quick note about scrapy

Assignment: Tactical Crawler

The assignment is to move from a Survey to a Strategy, and to prepare a plan for a "tactical crawler" related to your research.

Your plan should both:

- Express a strategy to explore a particular / personal research question / interest / frustration / concern, and be focused on a particular use / audience / context.

- Be grounded in actual data / connections such as those explored / viewed in the workshop (via scraping or API explorations). Show particular data gathered from scraping or APIs, "by hand" or by simple scripts (such as those used in the workshop), to support your plan.

Important: Unless it already fits your plan, you do not necessarily need to work with the Django code / crawler example from the last afternoon session. This will (most likely) become more important as your work develops -- for now the important thing is the creation of your plan and your initial scrapes & the specifics of the kind of data available from APIs.

We realize that the two-day workshop was an intense session that might have been a little overwhelming. Keep in mind that this was meant to give you an overview of the different tools and strategies available for scraping and data mining. It is now up to you to decide which elements you wish to focus on and work with for the rest of the trimester.

Your assignment/plan will be assessed this coming Tuesday, 25 January

Tuesday's meeting will involve each of you presenting your plan to the group and a discussion of the plans.

Happy Sniffing & Scraping!