Interfacing the law prototyping Zalan Szakacs

Revision as of 14:12, 6 June 2018 by Zalán Szakács

CGI & TF-IDF search engine – Michael

Comma-separated catalogue – Andre + Michael

Pad of the lesson: CSV-libgen

CSV:

- comma-separated values

- plain-text file

- commas are used as field separators

- A record ends at a line terminator (such as a newline, \n)

- All records should have the same number of fields
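A minimal sketch of these rules using Python's built-in csv module. A field that itself contains a comma is wrapped in double quotes, each record ends at a line terminator, and a quick check confirms that every record has the same number of fields. The sample data is made up for illustration.

```python
import csv
import io

# Made-up sample: the second title contains a comma, so it is quoted
sample = 'id,title,year\n1,First title,2001\n2,"Second, title",2002\n'

rows = list(csv.reader(io.StringIO(sample)))
for row in rows:
    print(row)

# All records should have as many fields as the header row
field_counts = {len(row) for row in rows}
print("same number of fields:", len(field_counts) == 1)

# Writing works the other way round: csv.writer adds quotes where needed
out = io.StringIO()
csv.writer(out).writerow(["3", "Third, title", "2003"])
print(repr(out.getvalue()))
```

Note that csv.writer ends each record with "\r\n" by default. Pass `lineterminator="\n"` if plain newlines are wanted.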

How to handle it? Tools for handling CSV

- head - output the first part of files

- tail - output the last part of files

- csvkit - a "swiss army knife" for CSV files. Install: sudo pip3 install csvkit

Documentation: https://csvkit.readthedocs.io/en/1.0.1/#

- Python csv module (standard library): https://docs.python.org/3.5/library/csv.html

- structured-text-tools https://github.com/dbohdan/structured-text-tools
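The same idea as head and tail can be sketched with the Python csv module. `itertools.islice` reads only the first n records, and a `collections.deque` with `maxlen` keeps only the last n records while it streams through the file. Unlike head and tail, which count lines, this counts records, so a quoted field that spans several lines is not cut in half. The function names are illustrative, not part of any library.

```python
import collections
import csv
import io
import itertools


def csv_head(lines, n=5):
    """Return the first n records, like `head`, without reading the rest."""
    return list(itertools.islice(csv.reader(lines), n))


def csv_tail(lines, n=5):
    """Return the last n records, like `tail`, keeping only n rows in memory."""
    return list(collections.deque(csv.reader(lines), maxlen=n))


# In-memory example; for a real file pass
# open("content.csv", newline="", encoding="utf-8") instead
sample = io.StringIO("a,b\n1,2\n3,4\n5,6\n")
print(csv_head(sample, 2))
sample.seek(0)
print(csv_tail(sample, 2))
```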

What is it? How is it structured? What data does it contain? Is it incomplete or messy?
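A quick way to start answering the last two questions for a large file is to stream through it once and count things. This sketch counts the records, builds a histogram of how many fields each record has, and counts the empty cells per column. Columns are counted by position because content.csv has no header row of its own. The function name is hypothetical.

```python
import csv
import io
from collections import Counter


def profile_csv(lines):
    """Count records, fields per record, and empty cells per column index."""
    field_counts = Counter()
    empty_cells = Counter()
    n_records = 0
    for row in csv.reader(lines):
        n_records += 1
        field_counts[len(row)] += 1
        for index, value in enumerate(row):
            if not value.strip():
                empty_cells[index] += 1
    return n_records, dict(field_counts), dict(empty_cells)


# Made-up sample: record 2 has an empty title, record 3 is missing a field
sample = io.StringIO("1,First,2001\n2,,2002\n3,Third\n")
print(profile_csv(sample))
# For the real file (encoding assumed to be UTF-8):
# with open("content.csv", newline="", encoding="utf-8") as fh:
#     print(profile_csv(fh))
```

More than one key in the field-count histogram means that some records do not have the same number of fields as the others, for example because of an unquoted comma.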

- The headers are in a separate file, libgen_columns.txt. We might need to integrate them into content.csv, because the Python csv library's DictReader takes the first row of a CSV file as the header row by default.

- Convert libgen_columns.txt into a CSV header row:

An alternative (GNU tr, e.g. on Linux): cat libgen_columns.txt | sed "s/\ \ \`/\"/g" | sed "s/\`.*/\",/g" | tr --delete '\n'

macOS: cat libgen_columns.txt | sed "s/\ \ \`/\"/g" | sed "s/\`.*/\",/g" | tr -d '\n'

Converting the txt file to headers.csv (macOS): cat libgen_columns.txt | sed "s/\ \ \`/\"/g" | sed "s/\`.*/\",/g" | tr -d '\n' > headers.csv
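Merging the two files is not strictly necessary. `csv.DictReader` accepts a `fieldnames` argument, and when that argument is given, the first line of the data is read as data rather than as headers. The sketch below does the sed step in Python and then reads header-less data with those names.

It relies on an assumption inferred from the sed expressions: libgen_columns.txt contains SQL-style column definitions, with two spaces, a backticked name, and then the type. Unlike the pipeline, lines that do not match this pattern are skipped instead of being passed through, and the header row gets no trailing comma. The function names and sample lines are illustrative.

```python
import csv
import io
import re

# Assumed line format (inferred from the sed expressions): two spaces,
# a `backticked` column name, then the column definition
COLUMN_LINE = re.compile(r"^  `([^`]+)`")


def column_names(lines):
    """Return the backticked column names, skipping lines that don't match."""
    return [m.group(1) for m in map(COLUMN_LINE.match, lines) if m]


def write_header_row(names, out):
    """Write the names as one quoted CSV row, without a trailing comma."""
    csv.writer(out, quoting=csv.QUOTE_ALL, lineterminator="\n").writerow(names)


def read_records(data_lines, names):
    """Read header-less CSV data as dicts; with fieldnames given,
    DictReader treats the first line as data, not as a header row."""
    return csv.DictReader(data_lines, fieldnames=names)


# Made-up stand-ins for libgen_columns.txt and content.csv
columns_txt = [
    "CREATE TABLE `example` (\n",
    "  `ID` int(15) unsigned NOT NULL AUTO_INCREMENT,\n",
    "  `Title` varchar(2000) DEFAULT '',\n",
    "  PRIMARY KEY (`ID`)\n",
]
content_csv = io.StringIO('1,First title\n2,"Second, title"\n')

names = column_names(columns_txt)
header_out = io.StringIO()
write_header_row(names, header_out)  # for a real file: open("headers.csv", "w", newline="")
print(header_out.getvalue(), end="")
for record in read_records(content_csv, names):
    print(record["ID"], record["Title"])
```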

Workshop 1: Building our own search engine – Dusan Barok (monoskop)

#!//usr/local/bin/python3

import cgi

import cgitb; cgitb.enable()

import nltk

import re

print ("Content-type:text/html;charset=utf-8")

print ()

#cgi.print_environ()

f = cgi.FieldStorage()

submit1 = f.getvalue("submit1", "")

submit2 = f.getvalue("submit2", "")

text = f.getvalue("text", "")

### SORTING

import os

import csv

import string

import pandas as pd

import sys

### SEARCHING

#input keyword you want to search

keyword = text

print ("""<!DOCTYPE html>

<html>

<head>

<title>Search</title>

<meta charset="utf-8">

</head>

<body>

<p style='font-size: 20pt; font-family: Courier'>Search by keyword</p>

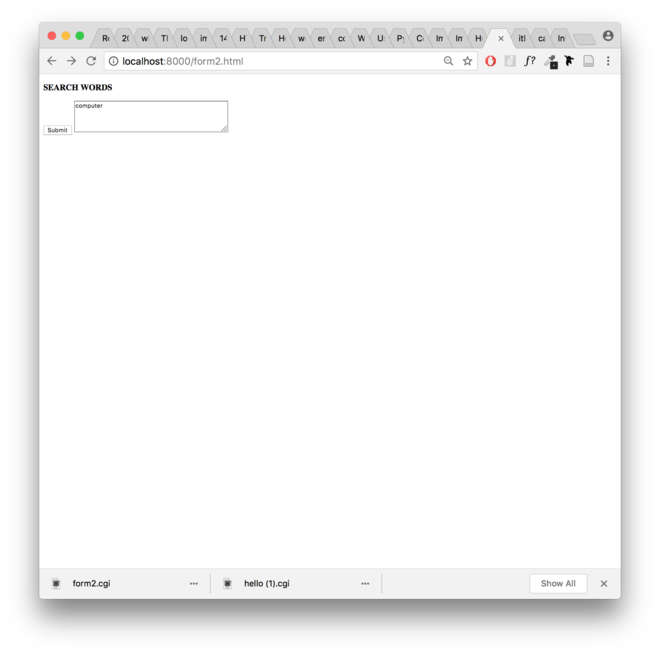

<form method="get">

<textarea name="text" style="background: yellow; font-size: 10pt; width: 370px; height: 28px;" autofocus></textarea>

<input type="submit" name="submit" value="Search" style='font-size: 9pt; height: 32px; vertical-align:top;'>

</form>

<p style='font-size: 9pt; font-family: Courier'>

webring <br>

<a href="http://145.24.204.185:8000/form.html">joca</a>

<a href="http://145.24.198.145:8000/form.html">alice</a>

<a href="http://145.24.246.69:8000/form.html">michael</a>

<a href="http://145.24.165.175:8000/form.html">ange</a>

<a href="http://145.24.254.39:8000/form.html">zalan</a>

</p>

</body>

</html>""")

x = 0

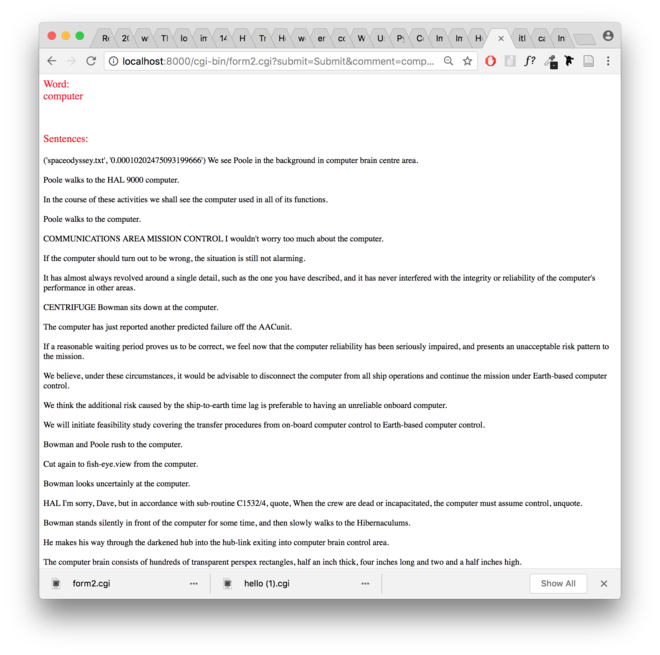

if text :

#read csv, and split on "," the line

csv_file = csv.reader(open('tfidf.csv', "r"), delimiter=",")

col_names = next(csv_file)

#loop through csv list

for row in csv_file:

#if current rows value is equal to input, print that row

if keyword == row[0] :

tfidf_list = list(zip(col_names, row))

del tfidf_list[0]

sorted_by_second = sorted(tfidf_list, key=lambda x:float(x[1]), reverse=True)

print ("<p></p>")

print ("--------------------------------------------------------------------------------------")

print ("<p style='font-size: 20pt; font-family: Courier'>Results</p>")

for item in sorted_by_second:

x = x+1

print ("--------------------------------------------------------------------------------------")

print ("<br></br>")

print(x, item)

n = item[0]

f = open("cgi-bin/texts/{}".format(n), "r")

sents = nltk.sent_tokenize(f.read())

for sentence in sents:

if re.search(r'\b({})\b'.format(text), sentence):

print ("<br></br>")

print(sentence)

f.close()

print ("<br></br>")