User:Manetta/thesis/chapter-intro

i could have written that - intro

0.0 - problematic situation

Attempt 1:

Written language is primarily a communication technology. Text mining is an undesired side effect of the information economy (ref...!). It has become part of business plans in which the tracking of online behavior is crucial for making profitable deals with advertisers. But beyond these business plans, text mining is also becoming a technology that seems able to 'extract' how people feel. A commonly applied algorithm is the sentiment algorithm, used for opinion mining, for example on Twitter, so that tweeted material can be used in news reports or decision-making processes. The World Well Being Project goes a step further, and aims to use Twitter to reveal “how social media can also be used to gain psychological insights” (http://wwbp.org/papers/sam2013-dla.pdf).

Attempt 2:

Thanks to the technologies of the Internet, many different sources of written text are available to researchers and corporations. The availability of this material, combined with its sheer volume, gives them the possibility to use and transform it for their own benefit. Is text mining a technique that was built on a possibility, and has slowly turned into a desirability?

Text mining seems to go beyond its own capabilities here, by convincing people that it is the data that 'speaks'. The actual process is hardly traceable, the output explains intangible phenomena, and the process appears to be automated and therefore precise.

A little list of applications where text mining can be 'spotted' (well... if searched for actively) in the wild:

- Search engine algorithmic results

- Twitter/Facebook algorithmic feeds

- Algorithmic recommendations in web stores

- Advertisements appear that could be painful?

- The probability that someone is a criminal, correlated to writing style?

- A chat user is accused of pedophilia for pretending to be 14 while having the writing style of an older man?

- Who decides on these categories? Who is in power? Software-governance.

- Written text is a material form & government of control?

problem formulation

Text mining is regarded as an analytical 'reading' machine that extracts information from large sets of written text. (→ consequences of 'objectiveness', claims that 'no humans are involved' in such automated processes because 'the data speaks')

hypothesis

The results of text mining software are not 'mined'; they are constructed. What if text mining software were regarded as a writing system instead?
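One way to make this hypothesis tangible is a toy sentiment scorer. The sketch below is only an illustration under stated assumptions: the two lexicons, the example tweet and the function names are all hypothetical and do not come from any real sentiment tool or from the projects mentioned above. It shows the same sentence receiving different scores depending on which word list somebody wrote beforehand.

```python
import re

# Two hypothetical, hand-made lexicons (assumptions for illustration only).
LEXICON_A = {"great": 1, "love": 1, "sick": -1, "cold": -1}
# Lexicon B reads "sick" as slang praise and treats "cold" as neutral.
LEXICON_B = {"great": 1, "love": 1, "sick": 1, "cold": 0}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text, lexicon):
    """Sum the lexicon values of all tokens; unknown words count as 0."""
    return sum(lexicon.get(token, 0) for token in tokenize(text))


tweet = "That concert was sick, I love this band"
score_a = sentiment_score(tweet, LEXICON_A)
score_b = sentiment_score(tweet, LEXICON_B)
print(score_a, score_b)
```

The text stays the same while the 'feeling' that comes out changes with the lexicon, which is one sense in which such a result is written rather than found.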

0.1 - intro

in-between-language / inter-language / middleware

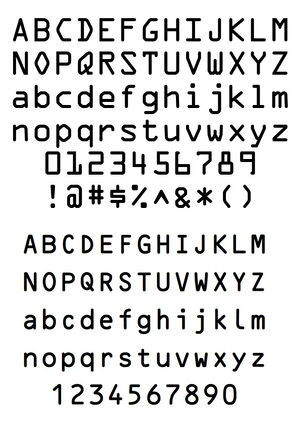

In Volume I, Number 2 (1967), the Journal of Typographic Research presents OCR-B, a typeface designed by the Swiss type designer Adrian Frutiger. In the article 'OCR-B: A Standardized Character for Optical Recognition' the typeface is (optimistically) described as the latest standard for machine reading. The development of OCR-B is even called a success on a humane level, and placed in a direct historical line from Egyptian stone-carving techniques to the development of today's printers. (Journal of Typographic Research, V1N2_1967)

The article expects automatic optical reading to “widen the bounds of the field of data processing”. Interestingly enough, the term 'data' here refers to a character or word on paper, either typed on a typewriter or printed from a computer. Full sentences are the data that needs to be transformed into a plain digital text file, neglecting typography and layout choices along the way.

OCR-B was designed as a reaction to OCR-A, which was developed at the same time and for similar purposes. Adrian Frutiger was asked to combine two challenges: to design a font that can be read automatically by machines, and to make that font aesthetically pleasing to the human eye at the same time.

Both OCR-A and OCR-B are products of automated reading technologies. They respond to the conditions needed both for reading in the traditional sense and for an efficient automated reading process executed by computer software. They become a sort of inter-language that originated from the aim of building efficient data-processing systems.

This is a simple example of how a tool's functioning is judged against the conditions of both the software and the human eye.

→ how is text mining a technology that operates under similar inter-conditions?

(Computer reading by counting documents is needed to process written text, but are outcomes only approved when they can be checked against human expectations? This is part of what I would like to call 'algorithmic agreeability': the circular effect of judging the outcomes by assumptions and expectations. → for chapter 3?)
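A minimal sketch of what 'reading by counting' could look like, assuming that counting here means counting the words in each document (a so-called bag of words). The example documents and the function name are hypothetical and serve only as an illustration.

```python
from collections import Counter
import re


def count_words(document):
    """Reduce a document to word counts, discarding word order and layout."""
    return Counter(re.findall(r"[a-z]+", document.lower()))


# Hypothetical example documents.
documents = [
    "The data speaks.",
    "The data is written, the data is read.",
]

# One Counter per document, plus the totals over the whole collection.
counts = [count_words(doc) for doc in documents]
total = sum(counts, Counter())
print(total.most_common(3))

# Two sentences with opposite meanings become identical once counted.
same = count_words("dog bites man") == count_words("man bites dog")
print(same)
```

In this form of reading, anything that depends on word order, typography or context is gone before any analysis starts, so whether an outcome 'makes sense' has to be judged from outside the counts.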

Natural Language Processing (NLP)

NLP is a field of research concerned with the interaction between human language and machine language. It is mainly present in the fields of computer science, artificial intelligence and computational linguistics. On a daily basis, people deal with services that contain NLP techniques: translation engines, search engines, speech recognition, auto-correction, chat bots, OCR (optical character recognition), license plate detection and text mining. How is NLP software constructed to understand human language, and what side effects do these techniques have? (bit about a specific NLP project, maybe Weizenbaum?)

links