User:Δεριζαματζορπρομπλεμιναυστραλια/PrototypingnetworkedmediaSniff Scrape Crawl

archive.org

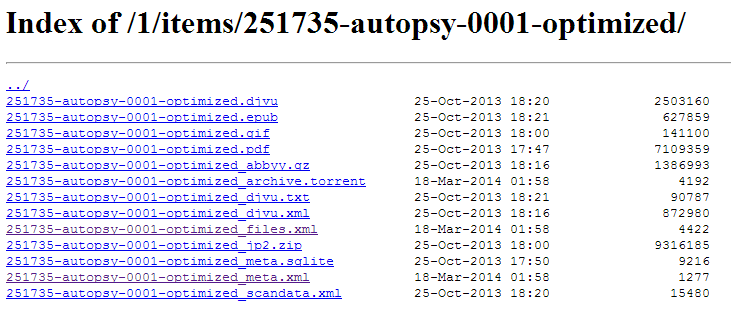

Items include multiple files, along with Dublin Core or fuller MARCXML records, which use the Library of Congress MARC 21 format for bibliographic data.

For information about every file in an item's directory, view the file ending in _files.xml; for all of the metadata for the item, view the file ending in _meta.xml.
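As a rough illustration of reading a _meta.xml file, the sketch below parses a small hand-written sample with the Python 3 standard library. The sample XML, its values and the helper name parse_meta_xml are assumptions made for this example; a real _meta.xml may carry more or different fields.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in the general shape of an item's _meta.xml.
# The identifier and collection names are made up for illustration.
SAMPLE_META_XML = """<metadata>
  <identifier>example-item</identifier>
  <title>Example title</title>
  <collection>example-collection</collection>
  <collection>another-collection</collection>
</metadata>"""


def parse_meta_xml(xml_text):
    """Return a dict mapping each metadata field name to a list of its values."""
    root = ET.fromstring(xml_text)
    fields = {}
    for child in root:
        # A field such as <collection> can repeat, so values are kept in lists.
        fields.setdefault(child.tag, []).append((child.text or "").strip())
    return fields


meta = parse_meta_xml(SAMPLE_META_XML)
print(meta["identifier"][0])
print(meta["collection"])
```

Keeping every value in a list avoids losing repeated fields, at the cost of an extra [0] when a field occurs only once.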

Identifier

The globally unique ID of a given item on archive.org

Collections and items all have a unique identifier

(taken from the Title field of the entry).

item: 251735-autopsy-0001-optimized

collection: opensource_Afrikaans
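Since the identifier is what addresses an item, a small Python 3 sketch can show how it maps to URLs. It assumes the /details/ and /download/ URL patterns on archive.org; the helper names details_url and item_file_url are made up for this example.

```python
from urllib.parse import quote

BASE = "https://archive.org"


def details_url(identifier):
    """URL of the page describing an item or a collection."""
    return "{}/details/{}".format(BASE, quote(identifier))


def item_file_url(identifier, suffix):
    """URL of a per-item file such as <identifier>_meta.xml or <identifier>_files.xml."""
    name = identifier + suffix
    return "{}/download/{}/{}".format(BASE, quote(identifier), quote(name))


print(details_url("opensource_Afrikaans"))
print(item_file_url("251735-autopsy-0001-optimized", "_meta.xml"))
```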

Archive.org and Python

I found this code, which fetches an item's page and prints the first 500 characters on the command line:

# open-webpage.py

import urllib2

url = 'https://archive.org/details/dasleidenunsersh00bras'

response = urllib2.urlopen(url)

webContent = response.read()

print webContent[0:500]

And then this one, which saves the page as an HTML file:

# save-webpage.py

import urllib2

url = 'https://archive.org/details/bplill'

response = urllib2.urlopen(url)

webContent = response.read()

f = open('bplill.html', 'w')

f.write(webContent)

f.close()
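The two scripts above are Python 2 (urllib2 and the print statement). A possible Python 3 version of the save step is sketched below; the function names fetch_bytes and save_page and the fetch parameter are my own choices for this example, not part of the original code.

```python
from urllib.request import urlopen


def fetch_bytes(url):
    """Download a URL and return the raw response body as bytes."""
    with urlopen(url) as response:
        return response.read()


def save_page(url, filename, fetch=fetch_bytes):
    """Fetch a page, write it to filename and return the number of bytes written."""
    content = fetch(url)
    # Binary mode, because urlopen returns bytes in Python 3.
    with open(filename, "wb") as f:
        f.write(content)
    return len(content)


# Example call (needs network access):
# save_page("https://archive.org/details/bplill", "bplill.html")
```

The with statements close the response and the file automatically, so a forgotten close call cannot leave the file unflushed.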

internetarchive 0.6.6 = a Python interface to archive.org

1) ia, for using the archive from the command line

2) internetarchive, for programmatic access to the archive

Accessing an IA collection in Python to see the number of items:

import internetarchive

search = internetarchive.Search('collection:nasa')

print search.num_found
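The count can also be read without the library. The Python 3 sketch below is a different technique from the snippet above: it assumes archive.org's advancedsearch.php endpoint with output=json, whose reply carries the count under response.numFound. The helper names and the sample reply are made up so that the example runs offline.

```python
import json
from urllib.parse import urlencode


def search_url(query, rows=0):
    """Build an advancedsearch.php URL; rows=0 asks for the count only."""
    params = [("q", query), ("fl[]", "identifier"), ("rows", rows), ("output", "json")]
    return "https://archive.org/advancedsearch.php?" + urlencode(params)


def num_found(response_text):
    """Read the number of matching items from a JSON search reply."""
    return json.loads(response_text)["response"]["numFound"]


# Made-up reply in the assumed shape, so no network access is needed here.
SAMPLE_RESPONSE = '{"response": {"numFound": 3, "start": 0, "docs": []}}'

print(search_url("collection:nasa"))
print(num_found(SAMPLE_RESPONSE))
```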

Accessing an item

ia download 251735-autopsy-0001-optimized (downloads the item's files; can be limited to certain types, e.g. mp4)

ia metadata 251735-autopsy-0001-optimized (prints the item's metadata on the command line)

import internetarchive

item = internetarchive.Item('251735-autopsy-0001-optimized')

item.download()

Data mining

Installing gevent failed with a vcvarsall.bat error (on Windows this usually means the Microsoft Visual C++ compiler is missing).

ia mine can be used to retrieve metadata for items via the IA Metadata API.
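Since gevent would not install here, a single item's metadata can still be inspected with plain Python 3. The sketch assumes the Metadata API answers at https://archive.org/metadata/<identifier> with JSON that has "metadata" and "files" keys; the helper names and the sample reply are made up for this example.

```python
import json


def metadata_url(identifier):
    """URL of the Metadata API record for one item."""
    return "https://archive.org/metadata/" + identifier


def summarize(response_text):
    """Pick the identifier, title and file names out of a Metadata API reply."""
    data = json.loads(response_text)
    meta = data.get("metadata", {})
    files = data.get("files", [])
    return {
        "identifier": meta.get("identifier"),
        "title": meta.get("title"),
        "file_names": [f["name"] for f in files],
    }


# Made-up reply in the assumed shape, so the example runs offline.
SAMPLE_REPLY = json.dumps({
    "metadata": {"identifier": "example-item", "title": "Example title"},
    "files": [{"name": "example-item_meta.xml"}, {"name": "example-item.mp4"}],
})

print(metadata_url("251735-autopsy-0001-optimized"))
print(summarize(SAMPLE_REPLY))
```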

Get all links from a collection

I also found this nice code, which uses Beautiful Soup:

import urllib2
from bs4 import BeautifulSoup

# Get the HTML for each page of results from the opensource collection. URLs look
# like this: https://archive.org/search.php?query=collection%3Aopensource&page=1
for resultsPage in range(1, 9768):
    url = "https://archive.org/search.php?query=collection%3Aopensource&page=" + str(resultsPage)
    response = urllib2.urlopen(url).read()
    # Name the parser explicitly so Beautiful Soup does not have to guess one.
    soup = BeautifulSoup(response, "html.parser")
    links = soup.find_all(class_="titleLink")

    # Append the URL of every item linked from this page of results.
    for link in links:
        itemURL = link['href']
        f = open('collections2nikos.txt', 'a')
        f.write('http://archive.org' + itemURL + '\n')
        f.close()

With this we get a text file (collections2nikos.txt) listing the link to every item found on each page of results.
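The link-extraction step can be tried offline with only the Python 3 standard library. The sketch below swaps Beautiful Soup for html.parser and only looks at <a> tags; the sample HTML and the names TitleLinkParser and item_urls are assumptions for this example, and the live search pages may no longer use the titleLink class.

```python
from html.parser import HTMLParser


class TitleLinkParser(HTMLParser):
    """Collect the href of every <a> tag whose class list contains titleLink."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and "titleLink" in classes and attrs.get("href"):
            self.hrefs.append(attrs["href"])


def item_urls(html, base="http://archive.org"):
    """Return absolute item URLs for the titleLink anchors in one results page."""
    parser = TitleLinkParser()
    parser.feed(html)
    return [base + href for href in parser.hrefs]


# Made-up fragment standing in for one page of search results.
SAMPLE_HTML = """
<a class="titleLink" href="/details/example-item-1">First</a>
<a class="other" href="/about">About</a>
<a class="titleLink" href="/details/example-item-2">Second</a>
"""

print(item_urls(SAMPLE_HTML))
```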

SOURCES

http://blog.archive.org/2011/03/31/how-archive-org-items-are-structured/

http://programminghistorian.org/lessons/data-mining-the-internet-archive

http://programminghistorian.org/lessons/working-with-web-pages