User:Riviera/Rapid prototypes

19 September 2024

incus-tramp.el

This file is an edit of Yc.S' lxd-tramp.el. It allows Emacs to TRAMP into Incus containers.

;;; incus-tramp.el --- TRAMP integration for incus containers -*- lexical-binding: t; -*-

;; Copyright (C) 2018 Yc.S <onixie@gmail.com>; 2024 Riviera Taylor <riviera@klankschool.org>

;; Author: Yc.S <onixie@gmail.com>; Riviera Taylor <riviera@klankschool.org>
;; URL: https://github.com/onixie/lxd-tramp.git
;; Keywords: incus, convenience
;; Version: 0.1
;; Package-Requires: ((emacs "24.4") (cl-lib "0.6"))

;; This file is NOT part of GNU Emacs.

;;; License:

;; This program is free software; you can redistribute it and/or modify
;; it under the terms of the GNU General Public License as published by
;; the Free Software Foundation, either version 3 of the License, or
;; (at your option) any later version.

;; This program is distributed in the hope that it will be useful,
;; but WITHOUT ANY WARRANTY; without even the implied warranty of
;; MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
;; GNU General Public License for more details.

;; You should have received a copy of the GNU General Public License
;; along with this program. If not, see <http://www.gnu.org/licenses/>.

;;; Commentary:
;;
;; `incus-tramp' offers a TRAMP method for Incus containers
;;
;; ## Usage
;;
;; Offers the TRAMP method `incus` to access running containers
;;
;;     C-x C-f /incus:user@container:/path/to/file
;;
;; where
;;     user      is the user that you want to use (optional)
;;     container is the id or name of the container
;;

;;; Code:

(require 'cl-lib) ; cl-rest and cl-evenp are functions, so cl-lib is needed at run time too
(require 'tramp)
(require 'subr-x)

(defgroup incus-tramp nil
  "TRAMP integration for incus containers."
  :prefix "incus-tramp-"
  :group 'applications
  :link '(url-link :tag "GitHub" "https://github.com/onixie/lxd-tramp.git")
  :link '(emacs-commentary-link :tag "Commentary" "incus-tramp"))

(defcustom incus-tramp-incus-executable "incus"
  "Path to incus executable."
  :type 'string
  :group 'incus-tramp)

;;;###autoload
(defconst incus-tramp-completion-function-alist
  '((incus-tramp--parse-running-containers ""))
  "Default list of (FUNCTION FILE) pairs to be examined for incus method.")

;;;###autoload
(defconst incus-tramp-method "incus"
  "Method to connect to incus containers.")

(defun incus-tramp--running-containers ()
  "Collect running container names."
  ;; The output is a bordered table: odd lines are borders, even lines
  ;; hold the cells, and the first even line is the NAME header.
  (cl-rest
   (cl-loop for line in (ignore-errors (process-lines incus-tramp-incus-executable
                                                      "list" "--columns=n")) ; Note: --format=csv only exists after version 2.13
            for count from 1
            when (cl-evenp count) collect (string-trim (substring line 1 -1)))))

(defun incus-tramp--parse-running-containers (&optional ignored)
  "Return a list of (user host) tuples.

TRAMP calls this function with a filename which is IGNORED. The
user is an empty string because the incus TRAMP method uses bash
to connect to the default user containers."
  (cl-loop for name in (incus-tramp--running-containers)
           collect (list "" name)))

;;;###autoload
(defun incus-tramp-add-method ()
  "Add incus tramp method."
  (add-to-list 'tramp-methods
               `(,incus-tramp-method
                 (tramp-login-program ,incus-tramp-incus-executable)
                 (tramp-login-args (("exec") ("%h") ("--") ("su - %u")))
                 (tramp-remote-shell "/bin/sh")
                 (tramp-remote-shell-args ("-i" "-c")))))

;;;###autoload
(eval-after-load 'tramp
  '(progn
     (incus-tramp-add-method)
     (tramp-set-completion-function incus-tramp-method
                                    incus-tramp-completion-function-alist)))

(provide 'incus-tramp)

;; Local Variables:
;; indent-tabs-mode: nil
;; End:

;;; incus-tramp.el ends here

Incus List

This is a list of containers running inside the Klankserver. Each has an IPv4 address and a corresponding IPv6 address, both of which I have redacted just in case.

$ incus list
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| NAME        | STATE   | IPV4                 | IPV6                                          | TYPE      | SNAPSHOTS |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| calendar    | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| flok        | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| funkwhale   | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| gitea       | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| ldap        | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| mail        | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| nginx       | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| openproject | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| pages       | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| pgadmin     | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
| postgresql  | RUNNING | REDACTED (eth0)      | REDACTED (eth0)                               | CONTAINER | 0         |
+-------------+---------+----------------------+-----------------------------------------------+-----------+-----------+
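The completion code in incus-tramp.el depends on the shape of this table: in the output of `incus list --columns=n`, every odd line is a border and the first cell line is the NAME header. A minimal Python sketch of the same parsing follows. It works on captured text rather than calling incus, and the function name `container_names` and the sample text are illustrative assumptions, not part of incus-tramp.el.

```python
def container_names(table_text):
    """Extract names from the table printed by `incus list --columns=n`.

    Mirrors the elisp: keep every second line (the rows between borders),
    strip the surrounding pipes and whitespace, then drop the NAME header.
    """
    lines = table_text.strip().splitlines()
    rows = lines[1::2]  # lines 2, 4, 6, ... hold cell text; the rest are borders
    names = [row.strip().strip("|").strip() for row in rows]
    return names[1:]  # the first row is the column header


# Hand-written sample in the same layout as the real output.
SAMPLE = """\
+----------+
|   NAME   |
+----------+
| calendar |
+----------+
| flok     |
+----------+
"""

if __name__ == "__main__":
    print(container_names(SAMPLE))
```

Like the elisp, this sketch does not look at the STATE column, so it would return stopped containers as well as running ones.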

29 September 2024

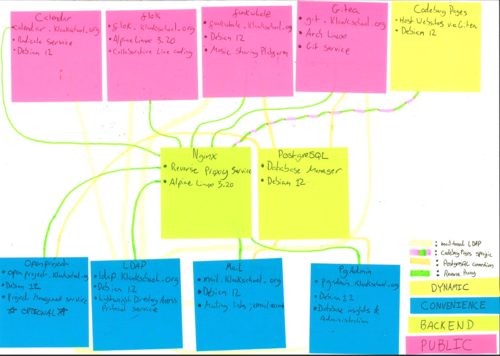

Klankserver Service Types and Connections

I created a collection of README files on the klankserver with information about each container. The files detailed the purpose, endpoint, OS and name of each container as well as links to useful webpages. I then placed filtered information on post-it notes, which were colour-coordinated according to the type of the service. I placed these on a piece of A3 paper and drew lines with highlighters to represent the interactions between these containers. Doing this gave me insight into which services were most important (that is, public facing or backend) and which were merely for convenience. As such, I was able to prioritise which services would need setting up first and foremost.

2 October 2024

Some reflections on transcribing speech

The following text features half-baked reflections on transcribing interviews using AI software. More could be said about the relationship between Natural Language Processing and AI transcription models. Further researching Special Issue 13, as well as knitting the discussion of di-versionings together with research into server maintenance practices, are constructive next steps.

I am planning to conduct several interviews with people about servers and server maintenance. In preparation for this undertaking, I am practising transcribing interviews. I am motivated to do so following an exercise proposed in InterViews: Learning the craft of qualitative research interviewing, by Brinkmann and Kvale (2015, p.70). Recently, I found a talk on Archive.org by Cristina Cochior and Julie Boschat-Thorez about vernacular language processing. I am guessing from the context that it was given at the Hackers and Designers Summer Camp in 2022. I decided to process the talk, albeit not an interview, with OpenAI’s Whisper.

The irony of transcribing a talk about vernacular language processing with OpenAI software was not lost on me. Although the software is accurate, it could not by itself reconstruct the formatting of vernaculars in the publication Vernaculars Come to Matter. This was most notable when the speakers performed a reading of excerpts from this spiral-bound text during the talk. The phantasmagoric through-line offered reminds me of TV adverts for compilation albums. How might this sensation be reflected in a transcription? For now, given that this is a rapid prototype, I have settled for re-using material from the VLTK wiki, formatting and all. Doing so leads me to question to what extent I have reinforced ‘a binary opposition, between the natural and the artificial’ (Boschat-Thorez in Radio Echo Collective, 2022). There is a stark contrast within the mélange between the text yanked from hypertexts elsewhere and the speech processed by AI. Likewise, the language of the publication bled into the edited, computationally processed copy when omitted hyphenations were reintroduced to terms such as ‘re-formations’.

On the topic of errors, I decided to review the transcribed material several times. I often did so while listening to the talk at a slower pace. Although Brinkmann and Kvale’s text predates this software, I expect there were some pieces of software available a decade ago that had the ability to transcribe audio. Thus, these authors would likely have been cognisant of the influence of ever-advancing technology on the practice of transcribing. The form of accuracy which contemporary AI models strive for in transcribing speech arguably reflects (techno-)solutionism. As Radford et al. (n.d.) point out: ‘The goal of a speech recognition system should be to work reliably “out of the box” in a broad range of environments’. Given Whisper’s permissive license, it can reasonably be asked what (or who) benefits from this technology and for what purpose it is most useful. By extension, this invites imagining and practising (in) alternative modes of transcribing.

How, then, might transcribing be practised otherwise? In the context of interview-based, qualitative research, what might de-centering speech-to-text workflows, for example through co-authored or cartographic techniques, contribute to sharing knowledge around server maintenance? What free software infrastructure could be self-hosted to support documenting such knowledge?

I found some of the ideas in the talk captivating. At one point in the talk, ‘actions that can be carried’, including ‘re-formations’ and ‘di-versionings’, were offered as basically ‘non-extractive’ (Radio Echo Collective, 2022). I found that this language fleshed out statements in the Wishlist for Trans*Feminist servers. Specifically: ‘Trans*feminist servers … will towards non-extractive relationships, but in the meantime, are accountable for the ones they are complicit with’. Given that I transcribed the talk with OpenAI’s Whisper, I decided to intervene in the output at an appropriate juncture (highlighted in bold) to reflect what I understand by ‘di-versioning’:

[N]atural language processing dictates many of the ways in which languages are being ordered digitally and this has direct consequences in shaping a particular understanding of the wor(l)d. … [T]his has, for instance, some very concrete consequences in terms of exclusion, criminalization, or personality profiling.

— Boschat-Thorez in Radio Echo Collective, 2022

This editorial decision was made on the basis of what I could hear in the speaker's voice, common expressions, and the AI's decision to choose the word 'word' at this point.

The talk can be read on this wiki page

7 October 2024

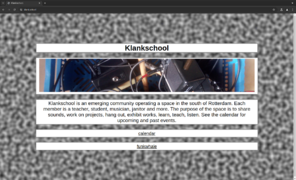

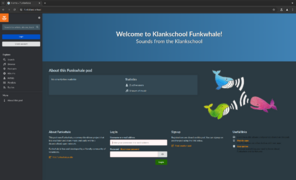

Klankschool Web Services

Over the weekend I set up various web services which are running publicly on a domain. Notably, the klank.school website is a static site served from a git repository. For this I used Gitea and codeberg-pages in tandem with HAProxy. The Codeberg Pages server implements an ACME client. As such, SSL termination needs to take place inside the container running the pages server for HTTPS connections to be made successfully. It was a bit tricky to figure out where to run the pages server. It kept competing with services running on subdomains, causing them to stop working. I think the workaround will hold, at least for now! At the moment, I'm feeling optimistic about the stability of the setup. In the near future I plan to implement a collaborative live coding environment at live.klank.school.

9 October 2024

Setting up a containerised mail server

I am setting up a mail server at klank.school. The server is running inside a Linux container (Incus / Debian 12). It uses Postfix for SMTP and Dovecot for IMAP. I'm using postfixadmin for virtual mailboxes, which makes use of a PostgreSQL database running in another container. Currently, I can log in to the server via Thunderbird on my laptop using STARTTLS. The outgoing server uses SSL/TLS on port 465. Thunderbird is able to detect these settings automatically. The SSL certificate is signed by Let's Encrypt. The trick was to use dns-01 challenges rather than HTTP challenges. This is because traffic reaches the containerised mail server via an HAProxy backend. The backend is TCP-based rather than HTTP-based, and the proxy is running in yet another container. In other words, I have been able to sidestep the issues certbot was reporting when sending HTTP challenges to the mail server. Besides this, I have set up SPF. DKIM and DMARC are also working. The next step is to install Roundcube and SpamAssassin.
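For context, a dns-01 challenge never requires the ACME server to reach the mail container over HTTP: control of the domain is proven by publishing a TXT record instead. Under RFC 8555, that record sits at `_acme-challenge.<domain>` and holds the unpadded base64url SHA-256 digest of the key authorization. The sketch below shows only that calculation. The helper name `dns01_record`, the domain, the token and the thumbprint are all made-up placeholders, and in practice certbot or another ACME client does this work.

```python
import base64
import hashlib


def dns01_record(domain, token, account_thumbprint):
    """Return the (name, value) of the TXT record for an ACME dns-01 challenge."""
    # The key authorization joins the challenge token and the account key thumbprint.
    key_authorization = f"{token}.{account_thumbprint}"
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    # base64url without the trailing "=" padding, as ACME requires.
    value = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return f"_acme-challenge.{domain}", value


if __name__ == "__main__":
    # Placeholder inputs for illustration only; real values come from the ACME server.
    name, value = dns01_record("mail.example.org", "example-token", "example-thumbprint")
    print(name, "TXT", value)
```

Because validation happens through DNS, it does not matter that the HAProxy backend in front of the mail container speaks plain TCP.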

15 October 2024

Composing with Tidal Cycles

Rosa and I have agreed to work on something together for the upcoming public moment. We’re keeping our notes in an Etherpad. Yesterday we had the chance to get into the Cantina and move some furniture around in preparation for The Office - Klankschool Edition. The piece presented will draw on our previous collaboration: Printer Jam. I am focussing specifically on the server-based infrastructural dimensions of this collaboration. For that I am using the Klankschool server’s flok instance. The nice thing about Flok, a collaborative, browser-based live coding environment, is that it can initialise GHCi with a custom Tidal boot script. It's convenient to be able to execute custom start-up code when running Tidal Cycles. The following code snippets form a simple Tidal Cycles / Python OSC application.

# office.py

from osc4py3.as_eventloop import *
from osc4py3 import oscbuildparse
import time

osc_startup()
osc_udp_client("127.0.0.1", 6010, "tidal")

try:
    while True:
        # Ramp the "key" control value up in four steps of 0.2 ...
        x = 0
        for n in range(4):
            x = x + 0.2
            msg = oscbuildparse.OSCMessage("/ctrl", ",sf", ["key", x])
            osc_send(msg, "tidal")
            print(x)
            osc_process()
            time.sleep(1)
        # ... then back down again from 1.
        x = 1
        for n in range(4):
            x = x - 0.2
            msg = oscbuildparse.OSCMessage("/ctrl", ",sf", ["key", x])
            print(x)
            osc_send(msg, "tidal")
            osc_process()
            time.sleep(1)
finally:
    # The loop never ends by itself, so this runs on Ctrl-C.
    osc_terminate()

-- office.tidal

printerOne = do
  d1 $ fast 6 $ sound "sn" # gain (cF 0.5 "key")
  -- 0.5 is the default value for the "key" parameter and it's a float

printerTwo = do
  d2 $ fast 4 $ sound "bd" # pan (cF 0.5 "key")

office = do
  printerOne
  printerTwo

office
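To make visible what office.py puts on the wire, here is a sketch that builds the same `/ctrl` message by hand using only the Python standard library. It follows the OSC 1.0 encoding rules: strings are null-terminated and padded to a multiple of four bytes, and floats are big-endian 32-bit values. The helper names `osc_string`, `ctrl_message` and `send_ctrl` are illustrative; osc4py3 does the equivalent work in office.py.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII bytes, a null terminator, padding to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def ctrl_message(key, value):
    """Build a control message: address /ctrl, type tags ',sf', then the key and a float."""
    return (
        osc_string("/ctrl")
        + osc_string(",sf")
        + osc_string(key)
        + struct.pack(">f", value)  # big-endian 32-bit float
    )


def send_ctrl(key, value, host="127.0.0.1", port=6010):
    """Send one control message as a UDP datagram (same host and port as office.py)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(ctrl_message(key, value), (host, port))


if __name__ == "__main__":
    # Print the raw bytes rather than sending, so this runs without Tidal listening.
    print(ctrl_message("key", 0.5))
```

Calling `send_ctrl("key", 0.5)` while Tidal is running should have the same effect as one iteration of the loop in office.py, on the assumption that Tidal is listening for control messages on port 6010 as it is there.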

The fact is, Haskell has no variables in the imperative sense: a name is bound once to a value or a function. Thijs informs me that even the space character can be read as an infix operation (function application) which expects two arguments. Because Haskell is purely functional, it does not “change state”. Thus, one cannot say x = 3 and then later, in the same program, state that x = 5, because x is not a variable to which a value can be (re)assigned. GHCi is deceptive in this regard because one works with an interactive Haskell process. So in this case, one can say x = 3 and then say x = 5, but in doing so one is not reassigning x: the second definition is a new binding that shadows the first.

Either way, Rosa and I are wondering how we might utilise Open Sound Control (again). Tidal Cycles itself depends on a library called hosc, a Haskell implementation of OSC written by Rohan Drape. It is really integral to Tidal Cycles, so I don’t know how much sense it makes to use this library in addition to Tidal. A reason to do so might be to trigger certain conditions in a pre-evaluated Tidal script. In other words, it’s likely that the piece will not feature any live coding. Rather, the algorithm will be sketched out beforehand and evaluated at the beginning of the piece.

Bibliography

Brinkmann, S. and Kvale, S. (2015) InterViews: learning the craft of qualitative research interviewing, Third edition, Los Angeles, Sage Publications.

Radford, A., Kim, J. W., Xu, T., Brockman, G., McLeavey, C. and Sutskever, I. (n.d.) ‘Robust Speech Recognition via Large-Scale Weak Supervision’.

Radio Echo Collective (2022) VLTK, a talk by Cristina Cochior and Julie Boschat-Thorez (varia), Hackers and Designers [Online]. Available at http://archive.org/details/varia-hd (Accessed 2 October 2024).