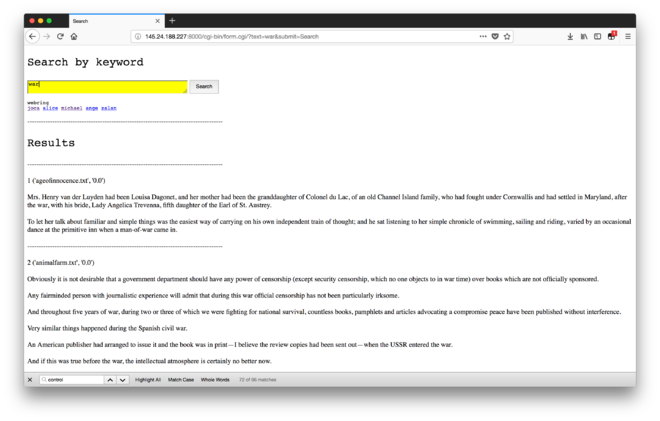

User:Tash/Prototyping 03

CGI & TF-IDF search engine

#!/usr/local/bin/python3

# cgi and cgitb are deprecated since Python 3.11 and removed in 3.13
import cgi
import cgitb; cgitb.enable()
import csv
import html
import re

import nltk

print("Content-type:text/html;charset=utf-8")
print()

#cgi.print_environ()

form = cgi.FieldStorage()
text = form.getvalue("text", "")

### SEARCHING

# keyword to search for, taken from the form's textarea
keyword = text

print("""<!DOCTYPE html>
<html>
<head>
<title>Search</title>
<meta charset="utf-8">
</head>
<body>
<p style='font-size: 20pt; font-family: Courier'>Search by keyword</p>
<form method="get">
<textarea name="text" style="background: yellow; font-size: 10pt; width: 370px; height: 28px;" autofocus></textarea>
<input type="submit" name="submit" value="Search" style='font-size: 9pt; height: 32px; vertical-align:top;'>
</form>
<p style='font-size: 9pt; font-family: Courier'>
webring <br>
<a href="http://145.24.204.185:8000/form.html">joca</a>
<a href="http://145.24.198.145:8000/form.html">alice</a>
<a href="http://145.24.246.69:8000/form.html">michael</a>
<a href="http://145.24.165.175:8000/form.html">ange</a>
<a href="http://145.24.254.39:8000/form.html">zalan</a>
</p>""")

rank = 0

if text:
    # read the TF-IDF table: the header row holds the text file names,
    # the first column of every other row holds a term
    with open('tfidf.csv', "r") as csv_handle:
        csv_file = csv.reader(csv_handle, delimiter=",")
        col_names = next(csv_file)

        # loop through the rows of the csv
        for row in csv_file:
            # if the term in the current row equals the input, rank that row
            if keyword == row[0]:
                tfidf_list = list(zip(col_names, row))
                del tfidf_list[0]
                # highest TF-IDF score first
                sorted_by_second = sorted(tfidf_list, key=lambda pair: float(pair[1]), reverse=True)

                print("<p></p>")
                print("--------------------------------------------------------------------------------------")
                print("<p style='font-size: 20pt; font-family: Courier'>Results</p>")

                for item in sorted_by_second:
                    rank = rank + 1
                    print("--------------------------------------------------------------------------------------")
                    print("<br><br>")
                    print(rank, html.escape(str(item)))

                    # open the matching text and print every sentence containing the keyword
                    n = item[0]
                    with open("cgi-bin/texts/{}".format(n), "r") as text_file:
                        sents = nltk.sent_tokenize(text_file.read())

                    for sentence in sents:
                        # re.escape keeps regex metacharacters in the keyword from breaking the search
                        if re.search(r'\b({})\b'.format(re.escape(text)), sentence):
                            print("<br><br>")
                            # escape the sentence so stray < or & cannot break the page
                            print(html.escape(sentence))

                    print("<br><br>")

# close the page only after the results have been printed
print("""</body>
</html>""")
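The script above reads a ready-made tfidf.csv whose header row lists the text file names and whose first column lists the terms. How that file was generated is not shown on this page, so the following is only a minimal sketch of one way such a table could be built. The function names (`tokenize`, `build_tfidf_rows`, `write_tfidf_csv`), the "term" header cell, the regex tokenizer and the plain tf × log(N/df) weighting are all assumptions made for illustration.

```python
import csv
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a text and split it into word tokens (a simplification)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_tfidf_rows(documents):
    """Return (header, rows) for a term-by-document TF-IDF table.

    documents maps a file name to its raw text (hypothetical input shape).
    header is ["term", name1, name2, ...]; each row is [term, score1, ...].
    """
    names = sorted(documents)
    counts = {name: Counter(tokenize(documents[name])) for name in names}
    vocabulary = sorted(set().union(*counts.values()))
    header = ["term"] + names
    rows = []
    for term in vocabulary:
        # document frequency: number of texts that contain the term
        df = sum(1 for name in names if counts[name][term] > 0)
        idf = math.log(len(names) / df)
        row = [term]
        for name in names:
            total = sum(counts[name].values())
            # term frequency: share of the text's tokens that are this term
            tf = counts[name][term] / total if total else 0.0
            row.append(round(tf * idf, 6))
        rows.append(row)
    return header, rows


def write_tfidf_csv(documents, path="tfidf.csv"):
    """Write the table in the layout the search script reads."""
    header, rows = build_tfidf_rows(documents)
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(header)
        writer.writerows(rows)
```

With this weighting a term that occurs in every text scores 0 everywhere, so very common words sink to the bottom of the ranking.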

Self directed research

Brainstorm 23.04.2018

Interface: How do you visualize that which is UNSTABLE? Serendipity? Missing data? Uncertainty? Dissent? Multiple views?

On data provenance and feminist visualization: https://civic.mit.edu/feminist-data-visualization

HOW can you GET data that's MISSING?! E.g. from LibGen: where is the UPLOAD DATA? What could we do with it?

Simple test to highlight absent information: in LibGen's catalogue CSV there are rows without titles. How to search for blanks?

something like:

csvgrep -c Title -m "" content.csv

^ this solution matches spaces but doesn't find empty cells.

csvgrep -c Author -r "^$" content.csv

^ this solution finds rows with empty cells in the 'Author' column
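The same blank-cell search can be sketched with Python's csv module, which makes the difference between a truly empty cell and a cell holding only spaces explicit. This is a rough sketch, not a replacement for csvgrep: the function name `rows_with_blank` and its `strip` switch are made up for illustration.

```python
import csv
import io


def rows_with_blank(handle, column, strip=False):
    """Yield (line_number, row) for rows whose `column` cell is empty.

    With strip=True, cells that contain only whitespace count as empty too.
    """
    reader = csv.DictReader(handle)
    if column not in (reader.fieldnames or []):
        raise KeyError(column)
    for row in reader:
        # a row that is too short gives None for the missing cell
        value = row.get(column) or ""
        if strip:
            value = value.strip()
        if value == "":
            yield reader.line_num, row


# small made-up sample: row 3 has an empty Author, row 4 a single space
sample = "ID,Title,Author\n1,Book A,Ann\n2,,Bob\n3,Book C,\n4,Book D, \n"
for line_number, row in rows_with_blank(io.StringIO(sample), "Author"):
    print(line_number, row["ID"])
```

For the real catalogue the handle would come from something like `open("content.csv", newline="")` instead of the in-memory sample.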

Andre's exciting explorations of the archive.org API search: Internet Archive - Advanced search: https://archive.org/advancedsearch.php

ghost in the mp3