User:Manetta/thesis/chapter-3

chapter 3 – training truth (from 'mining' to Knowledge Discovery in Databases (KDD))

Results that are produced by statistical computation are often described with the metaphor of text or data 'mining', which is then used to refer to the actual creation of those results. What side effects occur when this metaphor is used?

The metaphor of 'to mine' or 'mining' is often used these days, both by corporations [REF?] and in the academic field [REF?], to point to a process of gathering information from a large set of data. The term became more popular among businesses and corporations (Piatetsky-Shapiro & Parker, 2011)[1]. But there had already been an attempt to use a different term at a workshop in Detroit in 1989[2]. During this event the term Knowledge Discovery in Databases (KDD) was coined by Gregory Piatetsky-Shapiro. This term describes the full process, from selecting the input data to interpreting the results, in a number of steps.

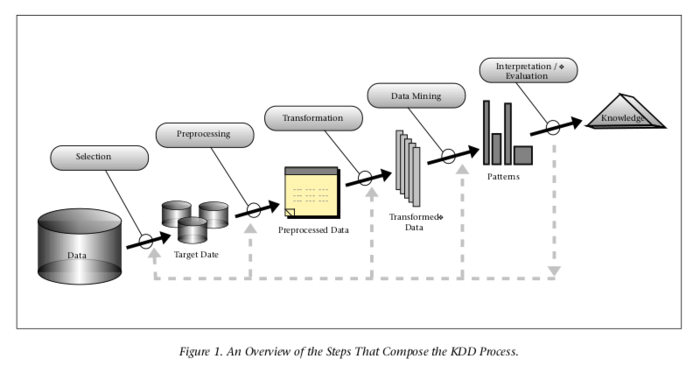

The initial KDD model was formulated by Fayyad & Piatetsky-Shapiro & Smyth (1996). It does not only describe the data-related steps in the process:

step 1 → identifying the goal
step 2 → creating a target data set
step 3 → cleaning & preprocessing
step 4 → data reduction & projection
step 5 → matching the goals (step 1) to a particular data-mining method
step 6 → exploratory analysis of model selection
step 7 → data mining
step 8 → interpreting mined patterns
step 9 → acting on the discovered knowledge

note: “The KDD process can involve significant iteration and can contain loops between any two steps”. (see image below)

This model of the KDD steps starts with identifying the goal that is aimed for, which immediately puts the focus on the presence of a data analyst. The model was published in 1996 and written with the aim of producing a more nuanced framework for techniques that were, already in the 90s, overwhelmed by a wave of excitement. It is telling that the model by Calders & Custers, published in 2013, puts its focus much less on the analyst and immediately on the data. In a time in which 'data science' has been an official department title in universities since 2011, data is placed at the centre. The act of collecting data is presented as the very first step of the process.

Although 'data' is the crucial material for the whole process, 'data mining' is only one of multiple steps in every version of the KDD steps. The 'data mining' step is the moment in the process where the analyst searches for patterns in the data, using one of the many different algorithms that are available[3]. Although this step is crucial, it is not possible to execute pattern recognition without first collecting data and preparing it for use. When the term 'data mining' is used to refer to the whole process of creating information out of data, many human decisions and subjective choices are placed in the shadow of the algorithmic calculations on the data. The term 'data mining' then functions as an objective curtain in front of a more tumultuous process.

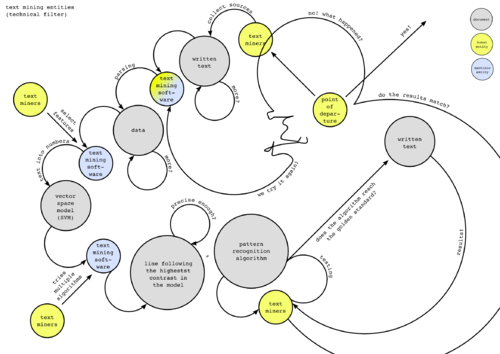

The moment of 'data mining' and finding patterns in the data is the most complex KDD step, and therefore difficult to fully understand. How these algorithms actually detect patterns in the data is rather vague and unclear, even to many analysts. Different algorithms are applied to the dataset and their outcomes are compared (Hans Lammerant, how to ref a workshop moment?). Because the actual behaviour of the algorithms is complex, a trial-and-error method is needed to arrive at results, something the makers of the 1996 model already described. In this way, the KDD steps become a system in which an analyst can always take a few steps back to increase the quality of his or her results.
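The trial-and-error character of this step can be sketched in a few lines. The data, the two toy 'algorithms' and the accuracy score below are all assumptions made up for illustration, not the methods referred to above; the sketch only shows an analyst running several candidates on the same data and keeping whichever scores best.

```python
# Hypothetical labelled data: (feature value, label)
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.4, 1), (0.5, 0),
        (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]

def accuracy(model, rows):
    # Share of rows for which the model predicts the given label
    return sum(model(x) == label for x, label in rows) / len(rows)

def majority_rule(rows):
    # Toy algorithm 1: always predict the most frequent label
    labels = [label for _, label in rows]
    winner = max(set(labels), key=labels.count)
    return lambda x: winner

def threshold_rule(rows):
    # Toy algorithm 2: try every feature value as a cut-off, keep the best one
    cuts = [x for x, _ in rows]
    best_cut = max(cuts, key=lambda cut: accuracy(lambda x: int(x >= cut), rows))
    return lambda x: int(x >= best_cut)

# Run every candidate on the same data and compare the outcomes.
# (Scoring on the data the models were fitted to is a simplification.)
candidates = {"majority": majority_rule, "threshold": threshold_rule}
scores = {name: accuracy(fit(data), data) for name, fit in candidates.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(name, round(score, 2))
print("kept:", best)
```

Which candidates are tried, how they are scored and when the analyst stops trying are all decisions that sit outside the algorithms themselves.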

When the term 'data mining' is used to refer to a full information-creating process, it becomes almost impossible for a wider public to discuss outcomes or even to disagree with them. As the exact behaviour of an algorithm is difficult to grasp, the 'data mining' step is a complex subject for a conversation. Both analysts and a broader public that is interested in knowing more would have difficulty describing what calculations are made by the software, and why.

If it is important to speak about the construction of results, the KDD steps should be the framework in which analysts document their findings. The initial model from 1996 includes not only data-treating steps, but also such subjective acts as 'identifying' and 'exploratory analysis'. Using it would take the moments of subjective human intervention out of the shadow of complex algorithmic calculations. It could bring decisions and human subjectivity back into conversations about these systems: systems that create information, useful information, or what is also called 'knowledge'.

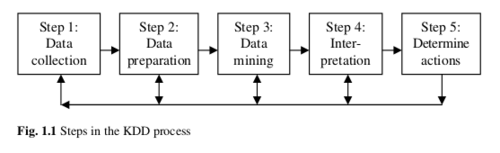

Different variations of these KDD steps are circulating. One of them is described in (Calders & Custers, 2013, p.7):

step 1 → data collection
step 2 → data preparation
step 3 → data mining
step 4 → interpretation
step 5 → determine actions

The model of Calders & Custers is named Knowledge Discovery in Data steps, a small variation on the discovery of knowledge in Databases. The other thing that stands out is that they use rather objective descriptions of the steps. Their KDD steps start with the collection of data. They thereby bypass the moment where the analyst sets the intentions that lead to the goal of the KDD process (Fayyad & Piatetsky-Shapiro & Smyth, 1996). Step 2 is called 'data preparation' and is described as the act of 'rearranging and ordering' the data (Calders & Custers, 2013, p.8), which makes it sound like a step that does not touch the data itself, but merely its order and categories. They thereby leave aside that preprocessing can also be referred to as the moment where the data is 'cleaned up'. Such cleaning operations include “removing noise if appropriate, collecting the necessary information to model or account for noise, deciding on strategies for handling missing data fields, and accounting for time-sequence information and known changes” (Fayyad & Piatetsky-Shapiro & Smyth, 1996 p.42).
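A minimal sketch can show why such cleaning is more than rearranging. The records, the field names and the cut-off for 'noise' below are invented for illustration; each of the three strategies is a choice made by the analyst, and each yields a different average for the same data.

```python
# Hypothetical records: one measurement each, None marks a missing field
records = [
    {"id": 1, "value": 4.0},
    {"id": 2, "value": None},
    {"id": 3, "value": 5.0},
    {"id": 4, "value": 90.0},  # noise, or a genuine extreme?
    {"id": 5, "value": 6.0},
]

def mean(values):
    return sum(values) / len(values)

def drop_missing(rows):
    # Strategy A: discard every record with a missing field
    return [r["value"] for r in rows if r["value"] is not None]

def fill_missing(rows, default=0.0):
    # Strategy B: keep the record, replace the missing field by a default
    return [default if r["value"] is None else r["value"] for r in rows]

def drop_missing_and_noise(rows, limit=50.0):
    # Strategy C: as A, and also treat every value above `limit` as noise
    return [v for v in drop_missing(rows) if v <= limit]

print(mean(drop_missing(records)))            # 26.25
print(mean(fill_missing(records)))            # 21.0
print(mean(drop_missing_and_noise(records)))  # 5.0
```

None of the three outcomes is more 'raw' than the others; the difference lies entirely in decisions taken before any pattern is searched for.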

Only step 4, where the 'interpretation' of the found patterns takes place, points to a moment of doubt, uncertainty or at least human subjectivity, where the analyst needs to interpret the output of the algorithm. This is the moment where results are rated as either useful or wrong. But useful or wrong according to what?

As opposed to 'data mining', the term Knowledge Discovery in Databases makes it possible to strike a better balance between computer processes and moments of human decision.

threshold tweaking

    def uncertain(sentence, threshold=0.5):
        return modality(sentence) <= threshold

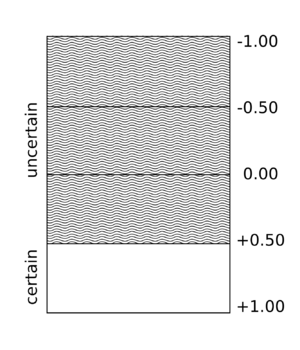

The modality function is followed by a very short function that decides where exactly the border between certainty and uncertainty lies. The function uncertain() can be used to quickly return a 'yes' or 'no'. The border between certainty and uncertainty is set here at exactly '0.5', a number that can also be translated into the words 'a half'. Is this a coincidence? On top of that, the number '0.5' is relatively high within the full range of -1.00 to +1.00: every score from -1.00 up to and including 0.5 counts as uncertain, which is three quarters of the range. If scores were spread evenly over that range, the uncertain() function would label most sentences as uncertain, and only a small share of sentences could be called certain.
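The effect of the threshold can be made concrete with a small sketch. It does not call the library's own modality() function; instead it feeds made-up scores, evenly spaced over the range -1.00 to +1.00, into a variant of uncertain() that takes a score rather than a sentence. The even spacing and the names `uncertain_score` and `share_uncertain` are assumptions for illustration only.

```python
def uncertain_score(score, threshold=0.5):
    # Same comparison as uncertain(), applied to a score instead of a sentence
    return score <= threshold

def share_uncertain(scores, threshold=0.5):
    # Fraction of the given scores that end up labelled 'uncertain'
    flagged = [s for s in scores if uncertain_score(s, threshold)]
    return len(flagged) / len(scores)

# Hypothetical scores: -1.00, -0.99, ..., +1.00 (201 values in total)
scores = [i / 100 for i in range(-100, 101)]

for threshold in (0.0, 0.5, 0.75):
    print(threshold, round(share_uncertain(scores, threshold), 2))
```

With these evenly spread scores, about half are labelled uncertain at a threshold of 0.0 and about three quarters at 0.5. How real sentences are distributed over the range is a separate question that this sketch does not answer.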

+ positive/negative threshold

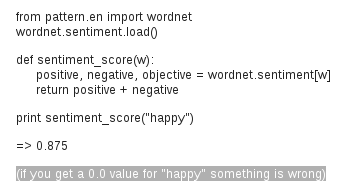

+ if 'happy' is 0.0, something is wrong

tweaking & quality expectations?

source?

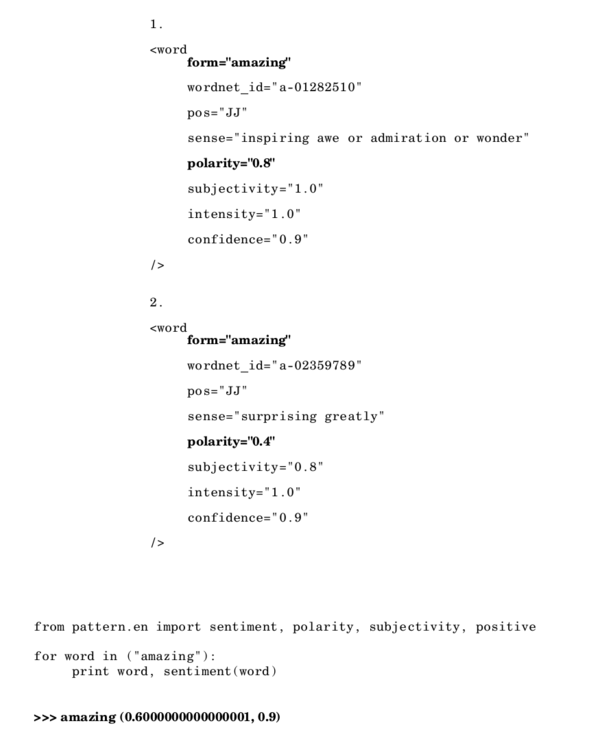

semantic averaging

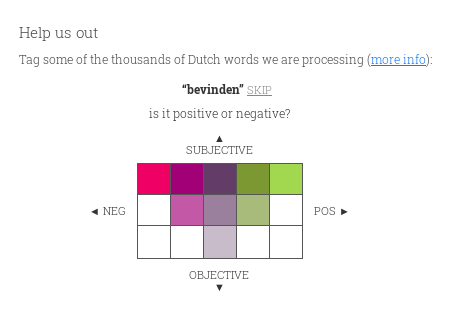

annotation workflow

categories

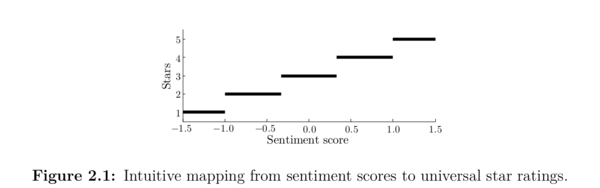

intuitive mapping, from: Alexander Hoogenboom's PhD thesis