User:Manetta/thesis/chapter-2: Difference between revisions


<div style="width:750px;">

__TOC__

=chapter 2 - various approaches of raw language, three case studies=

'''manager (economy PhD candidate)'''

* using raw data to make decisions

'''magician (psychologist)'''

* using the rawness of data as a smoke screen, making use of common sense, clichés and assumptions

'''archaeologist (comp. linguist)'''

* using the rawness of the words as material to work with, to carefully derive information from, by following different standards and procedures

==the manager – case 1, the department of Economy==

[[File:EUR-PhD-defence-sentiment-mining.JPG|thumb|left]]

It is a wet Friday afternoon, mid November 2015. I'm sitting in the back of a lecture room at the Erasmus University in Rotterdam, attending a PhD defence on the topic of sentiment analysis in text. Although I'm happy to hear that this topic has been researched at the university of my hometown, I'm also surprised to learn that these techniques are studied at an Economy department<sup>1</sup>.

A PhD candidate stands behind a lectern. He waits patiently for the moment he can start defending his PhD thesis. A woman in traditional academic dress enters the lecture room. The people in the audience stand up from their seats. The woman carries a stick with bells. They tinkle softly. It seems to be her way of telling everyone to be silent and stay focused on what is coming. A group of two women and seven men dressed in robes follows her. They walk to their seats behind the jury table.

The doctoral candidate starts his defence. He introduces his area of research by describing the increasing amount of information that is published on the internet these days in the form of text. Text that could be used to extract information about the reputation of a company. It is important for decision makers to know how the public feels about their products and services. Online written material, such as reviews, is a very useful source for companies to extract that information from. How could this be done? The candidate illustrates his methodology with an image of a branch with multiple leaves. When looking at the leaves, one could order them by colour, or by shape. Such ordering techniques can be applied to written language as well: by analysing and sorting words. The candidate's topic of research has been 'sentiment analysis in written texts'. This is nothing new. Sentiment analysis is a common tool in the text mining field. The candidate's aim is to improve this technique. He proposes to detect emoticons as carriers of sentiment, and to give more weight to the more important segments of a sentence.
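To make the candidate's two proposals a little more tangible, here is a minimal sketch of what such a scoring procedure could look like. It is not the candidate's implementation: the word list, the emoticon list and the segment weights are all invented for illustration, and the only thing taken from the defence is the use of the values -1 and +1.

```python
# Hypothetical lexicon and emoticon list: every entry is an assumption,
# scored with the two values (-1 and +1) mentioned during the defence.
LEXICON = {"good": 1, "great": 1, "love": 1, "bad": -1, "slow": -1, "broken": -1}
EMOTICONS = {":)": 1, ":-)": 1, "<3": 1, ":(": -1, ":-(": -1}


def score_segment(segment):
    """Sum the -1/+1 values of the known words and emoticons in one segment."""
    total = 0
    for token in segment.lower().split():
        token = token.strip(".,!?")  # drop punctuation attached to a word
        total += EMOTICONS.get(token, LEXICON.get(token, 0))
    return total


def score_text(segments):
    """Weighted sum over (segment, weight) pairs; the weights are assumed inputs."""
    return sum(weight * score_segment(segment) for segment, weight in segments)


review = [
    ("The delivery was slow,", 0.5),      # assumed to be the less important segment
    ("but I love the product <3", 1.0),   # assumed to be the more important segment
]
print(score_text(review))
```

Even in this toy version, someone has to decide which words go into the list, which value each of them gets, and which segment counts as 'more important'.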

One of the professors<sup>2</sup> opens the discussion in a critical tone. He asks the candidate for his definition of the word 'sentiment'. The candidate replies by saying that sentiment is what people intend to convey. There is the sentiment that the reader perceives, and there is the sentiment that the writer conveys. In the case of reviews, sentiment is a judgement. The professor states that the candidate only used the values '-1' and '+1' to describe sentiment in his thesis, which is not a definition. The professor continues by asking if the candidate could offer a theory on which the thesis is based. But there is again no answer that fulfils the professor's request. The professor claims that the candidate's thesis only presents numbers, no definitions.

Another professor continues and asks for the 'neutral backbone' that is used in the research to validate the sentiment of certain words. Did the candidate collaborate with psychologists, for example? The candidate replies that he collaborated with a company that manually rated the sentiment values of words and sentences. He cannot describe how that annotation process was carried out. The professor highlights the importance of an external backbone, which is needed in order to be able to give results. This brings him to his next question. The professor counted 6000 calculations that were done to confirm the candidate's hypothesis. This 'hypothesis testing' phenomenon is a recurring element in the arguments of the thesis. The candidate is asked if he wasn't over-enthusiastic about his results.


But the jury must also admit that it is quite an achievement to write a doctoral thesis on the topic of text mining at a university that has neither a department of linguistics nor one of computer science. The candidate was located at the Economics department, under the 'Erasmus Research Institute of Management'. Yet when the candidate was asked about his plans to fix the gaps in his thesis, he replied that he already had a job in business, and that rewriting his thesis would be neither a priority nor his primary interest.

==the magician – case 2, the department of Psychology==

2:11 Now let's start with something that's relatively clear,

2:14 and let's see if it makes sense.

2:15 See the words that are most typical, most discriminative, most predictive,

2:21 of being female.

2:23 (Laughter)

2:30 Yeah, it's a little bit embarrassing, I'm sorry, but I didn't make this up!

2:34 It's very cliché, but these are the words.

[[File:TED-talk-screenshot_Lyle-Unger-World-Well-Being-Project_predicting-heartdiseases-using-Twitter.png|thumb|left]]

The video<sup>1</sup> reaches 2:11 when Lyle Ungar<sup>2</sup> starts his introduction to the first text mining results that he will present to his TED audience tonight. Luckily enough, he can start with something that is ''relatively clear: the words that are most typical, most discriminative, most predictive, of being female''. Nothing too complicated to start with. He proposes to team up with his audience, to see together if the outcomes ''make sense''. Only 10 seconds later Lyle hits the button on the TED slide remote control. A bright glow appears in the reflection of his glasses.

[[File:Lyle-Unger most-female-words TEDxPENN.png|400px|center]]

<div style="text-align:center"><small>screenshot from [https://youtu.be/FjibavNwOUI?t=2m31s Lyle Ungar's TEDx presentation: Using Twitter to predict Heart Diseases]</small></div>

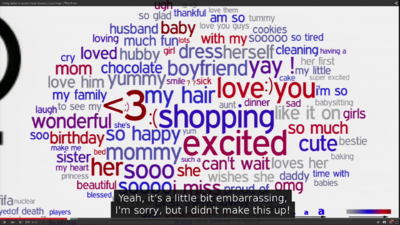

The audience slowly starts to titter. Lyle looks up to face his audience. He frowns, turns his head, walks a few steps to the right and sighs theatrically and somewhat too loudly. While Lyle's posture speaks the language of pained embarrassment, a white slide with colourful words appears on the screen. '<3' is typeset in the largest font size, followed by 'excited', 'shopping', 'love you' and 'my hair', surrounded by about 50 other words that together form the shape of a cloud. '(Laughter)' appears in the subtitles. The audience seems to recognize the words, and responds to them with stifled laughter. Is it funny that the term 'shopping' appears so big? Because it confirms a stereotype? Or is it surprising to see what extreme expressions appear to be typical of being female? Lyle had seen it coming, and quickly excuses himself for the results by saying: ''I didn't make this up! It's very cliché, but these are the words''. By excusing himself and stating that these results are not his, he removes all human responsibility for choices that influenced the results, and lets the technique bear it all. The ideals of raw data echo through Ungar's statement.

[[File:Wwbp-field.png|400px|center]]

<div style="text-align:center"><small>the [http://www.wwbp.org World Well Being website's] background image</small></div>

These results are part of a text mining research project of the University of Pennsylvania called the 'World Well Being Project' (WWBP). The project is located at the 'Positive Psychology Center', and aims to measure psychological well-being and physical health by analysing written language on social media. For the results that Lyle Ungar presented at the TED presentations in Pennsylvania in 2015, a group of 66,000 Facebook users were asked to share their messages and posts with the research group, together with their age and gender. They were also asked to fill in the 'big five personality test', a questionnaire widely used by psychologists to describe human personalities, which returns a value for 'openness', 'conscientiousness', 'extraversion', 'agreeableness' and 'neuroticism'. Text mining is used here as a technique to derive information about Facebook users by connecting their word usage to their age, gender and personality profile.
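How a list of 'most discriminative' words might come about can be sketched in a few lines. This is not the WWBP method, which is not described in the presentation; it is a toy example under stated assumptions: the posts and the group labels 'a' and 'b' are invented, the text is split on whitespace, and words are ranked by a smoothed log-odds score, which is one common choice among many.

```python
import math
from collections import Counter

# Invented toy posts with invented group labels; not data from the project.
posts = [
    ("a", "so excited for the weekend"),
    ("a", "excited about the trip"),
    ("b", "watching the game tonight"),
    ("b", "the game was great"),
]


def discriminative_words(posts, group, top=3):
    """Rank words by smoothed log-odds of appearing in `group` versus the rest."""
    inside, outside = Counter(), Counter()
    for label, text in posts:
        (inside if label == group else outside).update(text.lower().split())
    vocabulary = set(inside) | set(outside)
    # Add-one smoothing, so that words unseen in one group do not divide by zero.
    n_in = sum(inside.values()) + len(vocabulary)
    n_out = sum(outside.values()) + len(vocabulary)
    scores = {
        word: math.log((inside[word] + 1) / n_in) - math.log((outside[word] + 1) / n_out)
        for word in vocabulary
    }
    # Highest score first; ties are broken alphabetically.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top]


print(discriminative_words(posts, "a", top=1))
print(discriminative_words(posts, "b", top=1))
```

The word 'the' occurs equally often in both groups and therefore sinks to the bottom of the ranking. Every step in the sketch (how the text is split into words, how the groups are labelled, which score is used, how many words are shown) is a decision taken by a person.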

==the archaeologist – case 3, the department of Computational Linguistics==

[[File:Cqrrelations_Guy-de-Pauw-CLiPS-Pattern-introduction_small.jpg|thumb|left]]

Guy de Pauw<sup>1</sup> is in the middle of his presentation when he calls text mining a technology of shallow understanding. It is a cold week in mid January 2015. The room on the first floor of the Dutch/Flemish cultural organization De Buren in Brussels is filled with 40 artists, researchers, designers, activists and students, among others, most of whom are interested in, or working with, free software. The group came to Brussels to study data mining processes together during the event 'Cqrrelations'<sup>2</sup>. A lot of the people sit with laptops on their laps, trying to keep up with the speed and amount of information. Not many people in the audience are familiar with text mining techniques, and Guy's presentation is full of text mining jargon. Making as many notes as possible seems to be the best strategy for the moment. Meanwhile, Guy formulates the fundamental problems that text mining is facing: how to transform text from form to meaning? How to deal with semantics and meaning? And how can a computer 'understand' natural language without any world knowledge? It is telling how much effort Guy takes to show the problematic points in text understanding practices. In one of his next slides, Guy shows an image in which one sentence is interpreted in five different ways. Each version of the sentence ''pretty little girl's school'' is illustrated to reveal the different meanings that this short sentence contains. Guy briefly spells them out: “Version one: the pretty school for little girls. Version two: the seemingly little girl and her school. Version three: the beautiful little girl and her school. And so forth.”

[[File:From_CLiPS-presentations-during-Cqrrelations_jan-2015_Brussels-Pretty-little-girls-school.png|center]]

A few minutes earlier, Guy showed an image of two word clouds that represent the words, phrases and topics that most strongly distinguish females from males. '<3', 'shopping' and 'excited' are labelled as being most typically female. 'Fuck', 'wishes' and 'he' are presented as most typically 'male'. A little rush of indignation moved through the room. 'But, how?!'. You could see question marks rising above many heads. How is this graph constructed? Where does it come from? Guy explained that he is interested in gender detection in a different sense. In the graph, words were connected to themes and topics, whereupon it is only a small step to speak about 'meaning' and what females 'are'. Guy's next slide showed that he is more interested in looking at gender in a grammatical way, by analysing the structures of sentences written by females and comparing these to male-written sentences. Then, all there is to say is: women use more relational language and men more informative language.

<div style="color:gray;">What did Guy mean by shallow understanding? He shows the website 'biograph.be' to illustrate his statement. It is a text mining project in which connections are drawn between the hypotheses of academic papers. The project can be used for prevention, diagnosis or treatment purposes. 'Automated knowledge discovery' is promised to prevent anyone from 'drowning in information'. Guy adds some critical remarks: using this technology in medical contexts “will lead to a fragmentation of the field” as well as to “poor communication between subfields”.</div>

Guy is invited to speak and to introduce the group to a text mining software package. The software is called 'Pattern' and was developed at the University of Antwerp, where Guy is part of the CLiPS research group: 'Computational Linguistics & Psycholinguistics'. Coming from a linguistic background, the CLiPS research group approaches its projects from a structural rather than a statistical angle. This nuance is difficult to grasp when only results are presented. Guy hits a button on his keyboard and his presentation jumps to the next slide. It is a short bullet-pointed overview of linguistic approaches to text understanding for computers. It starts with a knowledge representation approach in the 70s, where sentence structures were described in models that were fed into the computer. It moves via a knowledge-based approach in the 80s, where corpora were created to recognize sentence structures on a word level. Word types such as 'noun', 'verb' or 'adjective' functioned, for example, as labels. And it arrives at the period that started in the mid 90s: a statistical and shallow understanding approach. Text understanding became scalable, efficient and robust. Making linguistic models became easier and cheaper. Guy immediately adds a critical remark: is this a phenomenon of scaling up by dumbing down? [!!! extend a bit more]

Revision as of 14:38, 30 April 2016
