User:Max Dovey/ PT/TRIMESTER 1 ntw6: Difference between revisions

Revision as of 12:21, 4 November 2013

Week 1

Alan Turing's Universal Turing Machine (UTM) http://en.wikipedia.org/wiki/Universal_machine

was a concept for an infinite tape separated into frames. Each frame holds a symbol, and the machine moves between states as it reads and rewrites the frames. This created an infinite programming potential for reading.
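The tape, frames and states can be sketched in a few lines of Python 3. This is only an illustration: the function name `run_machine` and the rule table `INVERT` (which flips every bit it reads) are made up for this example and are not Turing's own machine.

```python
# A minimal sketch of a Turing-style machine: a tape of frames, a read/write head
# and a table of rules. All names here are hypothetical, chosen for illustration.

def run_machine(tape, rules, state="scan", blank=" "):
    """Run until the machine reaches the 'halt' state; return the tape as a string."""
    cells = dict(enumerate(tape))   # sparse tape, so it can grow in either direction
    head = 0
    while state != "halt":
        symbol = cells.get(head, blank)             # read the frame under the head
        write, move, state = rules[(state, symbol)]
        cells[head] = write                         # rewrite the frame
        head += 1 if move == "R" else -1            # step right or left
    return "".join(cells[i] for i in sorted(cells)).strip(blank)

# (current state, symbol read) -> (symbol to write, head move, next state)
INVERT = {
    ("scan", "0"): ("1", "R", "scan"),
    ("scan", "1"): ("0", "R", "scan"),
    ("scan", " "): (" ", "R", "halt"),   # a blank frame marks the end of the input
}

result = run_machine("0110", INVERT)
```

Swapping in a different rule table changes what the machine computes without touching `run_machine`, which is the sense in which one machine can read any program.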

deconstructing the seamlessness of the factory line. The pipeline.

in the afternoon we played with Turtle.

http://opentechschool.github.io/python-data-intro/core/recap.html

https://github.com/OpenTechSchool/python/wiki/Facebook-Client

http://bitsofpy.blogspot.nl/2010/04/in-my-cosc-lab-today-few-students-were.html

facebook page query

>>> import json
>>> import urllib2
>>> def load_facebook_page(facebook_id):
...     # build the Graph API address from the id that was passed in
...     addy = 'https://graph.facebook.com/%s' % facebook_id
...     return json.load(urllib2.urlopen(addy))
...
>>> load_facebook_page(548951431)
{u'username': u'max.dovey', u'first_name': u'Max', u'last_name': u'Dovey', u'name': u'Max Dovey', u'locale': u'en_US', u'gender': u'male', u'link': u'http://www.facebook.com/max.dovey', u'id': u'548951431'}

Stuff to do & resources

Start fetching data from the Twitter API:
https://code.google.com/p/python-twitter/
http://pzwart3.wdka.hro.nl/wiki/PythonTwitter
http://www.lynda.com/Python-tutorials/Up-Running-Python/122467-2.html

Mining the Social Web by O'Reilly (GitHub repo, updated for Twitter OAuth):
https://github.com/ptwobrussell/Mining-the-Social-Web
http://www.pythonforbeginners.com/python-on-the-web/how-to-access-various-web-services-in-python/
http://www.greenteapress.com/thinkpython/thinkpython.pdf
http://hetland.org/writing/instant-python.html

Replacing "Music" with "CRAP" in the categories of the 20 most popular videos from a YouTube search

import json
import requests

# fetch the top-rated feed as JSON
r = requests.get("http://gdata.youtube.com/feeds/api/standardfeeds/top_rated?v=2&alt=jsonc")
data = json.loads(r.text)
#print data

# print the category of each video, swapping "Music" for "CRAP"
for item in data['data']["items"]:
    print " %s" % (item['category'].replace("Music", "CRAP"))

CRAP

Entertainment

CRAP

CRAP

CRAP

CRAP

CRAP

CRAP

CRAP

Comedy

CRAP

CRAP

Comedy

CRAP

CRAP

CRAP

CRAP

Entertainment

CRAP

CRAP

CRAP

Week 2

In the morning we looked at SVG files, and how you can edit their XML in a live editor within Inkscape. You can also run Python commands by pasting in Python drawing code, and the vectors will be generated within Inkscape.

In the afternoon we looked at making API grabs and loading them with JSON libraries.

ajax.googleapis.com/ajax

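Add a JSON viewer extension for Firefox.

JSON is a text format based on JavaScript object notation. APIs often offer it as an alternative to XML, and it loads straight into lists and dictionaries.

JSON has lists [] - e.g. lists = [] then lists.append("milk")

and dictionaries {} - e.g. foods = {} then foods["chocolate"] = "love to eat it"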
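As a small sketch of how lists and dictionaries map onto JSON, the Python 3 snippet below builds one of each, turns them into JSON text and loads them back. The variable names `shopping` and `foods` are only illustrative.

```python
import json

# a list keeps items in order
shopping = []
shopping.append("milk")

# a dictionary maps keys to values
foods = {}
foods["chocolate"] = "love to eat it"

# dumps() turns Python lists and dictionaries into JSON text ...
text = json.dumps({"shopping": shopping, "foods": foods})

# ... and loads() turns JSON text (for example an API response) back into them
data = json.loads(text)
first_item = data["shopping"][0]
opinion = data["foods"]["chocolate"]
```

This is the same round trip that happens when a script calls `json.loads` on the text returned by an API.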

runcron - can execute Python scripts from a server on a timer.

I got my Twitter search function to write to a text file. I'm going to look at automatically sending that text file to a network printer for this cloud project.

Useful links:
http://lifehacker.com/5652311/print-files-on-your-printer-from-any-phone-or-remote-computer-via-dropbox
http://docs.python.org/2/tutorial/inputoutput.html
facebook - https://github.com/OpenTechSchool/python/wiki/Facebook-Client
youtube api - http://gdata.youtube.com/
http://nealcaren.web.unc.edu/an-introduction-to-text-analysis-with-python-part-1/

Week 3

This script grabs JSON search results from the Twitter API, writes them to a text file and sends that file to the printer, looping every 55 seconds.

import os
import time

import simplejson
import twitter

# OAUTH_TOKEN, OAUTH_TOKEN_SECRET, CONSUMER_KEY and CONSUMER_SECRET
# must be set to your own Twitter API credentials before this runs
auth = twitter.oauth.OAuth(OAUTH_TOKEN, OAUTH_TOKEN_SECRET,
                           CONSUMER_KEY, CONSUMER_SECRET)

twitter_api = twitter.Twitter(domain='api.twitter.com',
                              api_version='1.1',
                              auth=auth)

filepath = "Desktop/autoprinting/todo/"  # define file path
filename = "cloud101"  # define file name

# if the file path does not exist, make it
if not os.path.exists(filepath):
    os.makedirs(filepath)

# add filename to path
completepath = os.path.join(filepath, filename + ".txt")

q = "the_cloud"
count = 30

while True:
    # fetch the latest search results and overwrite the text file
    f = open(completepath, "w")
    search_results = twitter_api.search.tweets(q=q, count=count)
    # search_results['meta_data']
    for status in search_results['statuses']:
        text = status['text']
        date = status['created_at']
        simplejson.dump(text + date, f)
        f.write("\n")
    f.close()

    time.sleep(55)

    # if the file exists, send it to the printer with lpr
    # (-p formats the output, -r deletes the file after printing)
    if os.path.exists(completepath):
        os.system("lpr -p -r " + completepath)

Week 4

Basic arithmetic (moving the digit to another column when you reach a *power of 10*) is about keeping the count. A decimal system uses 10 figures (0,1,2,3,4,5,6,7,8,9), and when a column gets to 10 it hands over to another cog to start counting, giving you an infinite counting system. This is the principle of the difference engine.
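The carrying rule can be sketched as a row of cogs, one per column. This is a hypothetical Python 3 illustration (the function name `increment` is invented here); the `base` parameter shows that the same rule gives binary counting when each cog has only two positions.

```python
def increment(digits, base=10):
    """Add one to a list of digits (most significant first), carrying as needed."""
    digits = list(digits)            # work on a copy
    position = len(digits) - 1       # start at the rightmost column
    while position >= 0:
        digits[position] += 1
        if digits[position] < base:
            return digits            # no carry needed, counting is done
        digits[position] = 0         # this cog rolls over to 0 ...
        position -= 1                # ... and nudges the cog to its left
    return [1] + digits              # every cog rolled over: add a new column

after_ninety_nine = increment([9, 9])        # decimal: 99 + 1
after_three = increment([1, 1], base=2)      # binary: 11 + 1
```

Because a new column is added whenever every cog rolls over, the count never runs out of room.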

George Boole began expressing logic in numerical, binary terms. He is the inventor of what is known as Boolean logic: true and false statements and conditionals.

Lewis Carroll wrote logic conditionals into Through the Looking-Glass: "It is a very inconvenient habit of kittens (Alice had once made the remark) that, whatever you say to them, they always purr. 'If they would only purr for "yes" and mew for "no," or any rule of that sort,' she had said, 'so that one could keep up a conversation! But how can you talk with a person if they always say the same thing?'" Lewis Carroll (1832 - 1898)

Solving Alice's predicament is what allows us to talk with computers: a conversation needs answers that can be told apart. Alice's whole adventure is based on encountering scenarios and applying logic conditionals.

8-bit = 8 ons and offs, giving you 256 values (0 to 255) to allocate. Characters and control commands are assigned numbers via the ASCII table, which itself uses the first 128 values. http://en.wikipedia.org/wiki/ASCII

Unicode (UTF-8, UTF-16). Think about "The Task of the Translator" by Walter Benjamin: everything is interpreted. Every webpage is encoded; all bytes are counters, and their 8-bit reference gives them a glyph. See last year's page for more on human computation: http://pzwart3.wdka.hro.nl/wiki/Human_Computation_(Slides)

PYTHON AND AUDIO

WAV files have a header, like how the UTF encoding is declared at the top of an HTML page (16-bit little endian, rate 44100 Hz, stereo). Number of samples / sample rate = time of piece. Using this example: http://zacharydenton.com/generate-audio-with-python/
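To make the header and the duration rule concrete, here is a minimal Python 3 sketch using the standard `wave` module. It writes half a second of stereo silence into memory (an assumption made so the example needs no file on disk), then reads the header back and divides the number of samples by the sample rate.

```python
import io
import struct
import wave

# write a small WAV into memory: 16-bit little endian, 44100 Hz, stereo
buffer = io.BytesIO()
w = wave.open(buffer, "wb")
w.setnchannels(2)        # stereo
w.setsampwidth(2)        # 2 bytes per sample = 16 bit
w.setframerate(44100)    # samples per second
silent_frame = struct.pack("<hh", 0, 0)   # one frame = left sample + right sample
w.writeframes(silent_frame * 22050)
w.close()

# read the header back and work out the length of the piece
buffer.seek(0)
r = wave.open(buffer, "rb")
channels = r.getnchannels()
sample_width = r.getsampwidth()
duration = r.getnframes() / float(r.getframerate())   # samples / sample rate
r.close()
```

With 22050 frames at 44100 frames per second, `duration` comes out as half a second.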

This code generates a sound whose frequency (in Hz) is the same number as the temperature of Rotterdam that day.

#!/usr/bin/env python
#-*- coding:utf-8 -*-

import json
import struct
import wave

import requests

# fetch the current weather for Rotterdam and pull out the temperature
url = 'http://api.openweathermap.org/data/2.5/weather?q=rotterdam,nl'
r = requests.get(url)
data = json.loads(r.text)
t = data["main"]["temp"]

filename = "rotterdam.wav"
nframes = 0      # 0 lets the wave module fill in the frame count when the file is closed
nchannels = 2
sampwidth = 2    # in bytes so 2=16bit, 1=8bit; 2 matches struct.pack('h') below
framerate = 44100

w = wave.open(filename, 'w')
w.setparams((nchannels, sampwidth, framerate, nframes, 'NONE', 'not compressed'))

max_amplitude = float(int((2 ** (sampwidth * 8)) / 2) - 1)

freq = int(t)

# this means that FREQ times a second, we need to complete a cycle
# there are FRAMERATE samples per second
# so FRAMERATE / FREQ = CYCLE LENGTH
cycle = framerate / freq

# write FREQ cycles, which adds up to about one second of sound
for x in range(freq):
    data = ''
    # first half of the cycle: left channel high, right channel silent
    for n in range(cycle/2):
        data += struct.pack('h', int(0.5 * max_amplitude))
        data += struct.pack('h', 0)
    # second half of the cycle: left channel low, right channel silent
    for n in range(cycle/2):
        data += struct.pack('h', int(-0.5 * max_amplitude))
        data += struct.pack('h', 0)
    w.writeframesraw(data)

w.close()
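The cycle-length arithmetic in the script above (FRAMERATE / FREQ = CYCLE LENGTH) can be isolated in a small function. This is a Python 3 sketch under a few assumptions: the helper name `square_wave_frames` is hypothetical, the output is mono rather than stereo, and the frequency is passed in directly instead of being fetched from a weather API.

```python
import io
import struct
import wave

def square_wave_frames(freq, framerate=44100, seconds=1.0, amplitude=0.5):
    """Return 16-bit mono frames for a square wave of roughly `freq` Hz.

    Assumes 0 < freq <= framerate / 2, otherwise a cycle is shorter than two samples.
    """
    cycle = framerate // int(freq)     # samples in one full cycle
    half = cycle // 2                  # samples spent high before flipping low
    peak = int(amplitude * 32767)      # 32767 is the largest 16-bit sample value
    one_cycle = (struct.pack("<h", peak) * half +
                 struct.pack("<h", -peak) * (cycle - half))
    cycles = int(seconds * framerate) // cycle
    return one_cycle * cycles

# 441 Hz divides 44100 exactly, so each cycle is 100 samples long
frames = square_wave_frames(441)

# write the frames to an in-memory WAV (swap the buffer for a filename to save it)
buffer = io.BytesIO()
w = wave.open(buffer, "wb")
w.setnchannels(1)
w.setsampwidth(2)
w.setframerate(44100)
w.writeframes(frames)
w.close()
```

Because the cycle length is rounded down to a whole number of samples, the pitch is only approximate for frequencies that do not divide the frame rate evenly.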

Image Generation

The sound of weather - Rotterdam, Netherlands and Lagos, Nigeria

#!/usr/bin/env python
#-*- coding:utf-8 -*-

import json
import struct
import wave

import requests

# temperature in Lagos ("ng" is the country code for Nigeria)
url = 'http://api.openweathermap.org/data/2.5/weather?q=lagos,ng'
r = requests.get(url)
data = json.loads(r.text)
t1 = data["main"]["temp"]

# temperature in Rotterdam
url = 'http://api.openweathermap.org/data/2.5/weather?q=rotterdam,nl'
r = requests.get(url)
data = json.loads(r.text)
t2 = data["main"]["temp"]

filename = "weatherreport.wav"
nframes = 0      # 0 lets the wave module fill in the frame count when the file is closed
nchannels = 2
sampwidth = 2    # in bytes so 2=16bit, 1=8bit
framerate = 22150

w = wave.open(filename, 'w')
w.setparams((nchannels, sampwidth, framerate, nframes, 'NONE', 'not compressed'))

max_amplitude = float(int((2 ** (sampwidth * 8)) / 2) - 1)

freq = int(t2 * 10)     # Rotterdam
freq2 = int(t1 * 10)    # Lagos (computed, but not used in the loop below yet)

# this means that FREQ times a second, we need to complete a cycle
# there are FRAMERATE samples per second
# so FRAMERATE / FREQ = CYCLE LENGTH
cycle = framerate / freq

for x in range(5):
    data = ''
    data2 = ''
    # long pulse: each half lasts cycle*1000 frames
    for n in range(cycle*1000):
        data += struct.pack('h', int(0.5 * max_amplitude))
        data += struct.pack('h', 1)
    for n in range(cycle*1000):
        data += struct.pack('h', int(-0.5 * max_amplitude))
        data += struct.pack('h', 1)
    # short pulse: each half lasts cycle frames
    #if freq2 >= 600:
    for n in range(cycle):
        data2 += struct.pack('h', int(0.5 * max_amplitude))
        data2 += struct.pack('h', 0)
    for n in range(cycle):
        data2 += struct.pack('h', int(-0.5 * max_amplitude))
        data2 += struct.pack('h', 0)
    # newstring = ''.join([data] + [data2])
    w.writeframesraw(data)
    w.writeframesraw(data2)

w.close()

Audio output

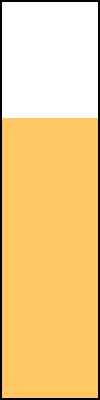

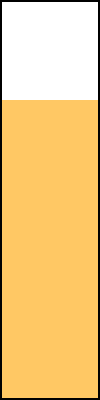

WEATHER visualization graphic

import json
import struct

import requests

# fetch the temperature that will set the height of the coloured bar
url = 'http://api.openweathermap.org/data/2.5/weather?q=antartica'
r = requests.get(url)
data = json.loads(r.text)
temp = data["main"]["temp"]

width = 100
height = 400
filename = "antartica.tga"
datafile = open(filename, "wb")

# TGA format: http://gpwiki.org/index.php/TGA
# Offset, ColorType, ImageType, PaletteStart, PaletteLen, PalBits, XOrigin, YOrigin, Width, Height, BPP, Orientation
header = struct.pack("<BBBHHBHHHHBB", 0, 0, 2, 0, 0, 8, 0, 0, width, height, 24, 1 << 5)
datafile.write(header)

# the orientation flag (1 << 5) puts y = 0 at the top of the image, so deduct temp
# from height to get the row where the colour starts; the rows above it show white
base = height - int(temp)

data = ''
for y in xrange(height):
    for x in xrange(width):
        r, g, b = 255, 255, 255      # white background
        if y > base:
            r, g, b = 0, 0, 240      # blue fill from base down to the bottom
        if x <= 1 or x >= width - 2 or y <= 1 or y >= height - 2:
            r, g, b = 0, 0, 0        # black border, two pixels wide, on all four sides
        # TGA stores each pixel as blue, green, red
        data += struct.pack('B', b)
        data += struct.pack('B', g)
        data += struct.pack('B', r)

datafile.write(data)
datafile.close()
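The header-plus-pixels layout used above can be checked without writing a file. The Python 3 sketch below builds a tiny TGA in memory; the helper names `tga_bytes` and `thermometer` are hypothetical, the temperature is passed in by hand rather than fetched, and the fill uses `y >= base` so that exactly `temp` rows are coloured.

```python
import struct

def tga_bytes(width, height, pixel):
    """Build an uncompressed 24-bit TGA; pixel(x, y) must return an (r, g, b) tuple."""
    header = struct.pack("<BBBHHBHHHHBB",
                         0, 0, 2,         # no id field, no palette, uncompressed true colour
                         0, 0, 0,         # palette start, length and bits (unused here)
                         0, 0,            # x and y origin
                         width, height,
                         24,              # bits per pixel
                         1 << 5)          # bit 5 set: rows are stored top to bottom
    body = bytearray()
    for y in range(height):
        for x in range(width):
            r, g, b = pixel(x, y)
            body.extend((b, g, r))        # TGA stores blue, green, red
    return header + bytes(body)

def thermometer(temp, height):
    """Return a pixel function: white above the base row, blue from it downwards."""
    base = height - int(temp)
    def pixel(x, y):
        return (0, 0, 240) if y >= base else (255, 255, 255)
    return pixel

# a 4 x 10 image with the bottom 3 rows filled
image = tga_bytes(4, 10, thermometer(3, 10))
```

The result is always 18 header bytes followed by three bytes per pixel, which makes the file size easy to predict.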

Rotterdam temp, Lagos temp and Antarctica

http://palewi.re/posts/2008/04/20/python-recipe-grab-a-page-scrape-a-table-download-a-file/

Week 6

Looking at EPUB, Calibre and free publishing tools. http://dpt.automatist.org/digitalworkflows/