User:Tash/grad prototyping: Difference between revisions

No edit summary |

No edit summary |

||

| Line 1: | Line 1: | ||

== Prototyping | == Prototyping 1 & 2 == | ||

[[File:Jamesbridle1.png|400px|thumbnail|right|Every Redaction, by James Bridle]] | [[File:Jamesbridle1.png|400px|thumbnail|right|Every Redaction, by James Bridle]] | ||

<br> | <br> | ||

| Line 45: | Line 45: | ||

scrapy crawl titles -o titles.json | scrapy crawl titles -o titles.json | ||

</source> | </source> | ||

<br> | <br> | ||

| Line 55: | Line 54: | ||

* self-censorship: can you track the things people write but then retract? | * self-censorship: can you track the things people write but then retract? | ||

* [https://pzwiki.wdka.nl/mediadesign/An_Anthem_to_Open_Borders An Anthem to Open Borders] | * [https://pzwiki.wdka.nl/mediadesign/An_Anthem_to_Open_Borders An Anthem to Open Borders] | ||

<br> | |||

== | == Scrape, rinse, repeat! == | ||

===== HTML5lib ===== | |||

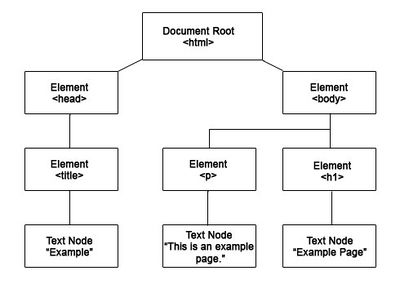

[[File:Elementtree.jpg|400px|thumbnail|right]] | [[File:Elementtree.jpg|400px|thumbnail|right]] | ||

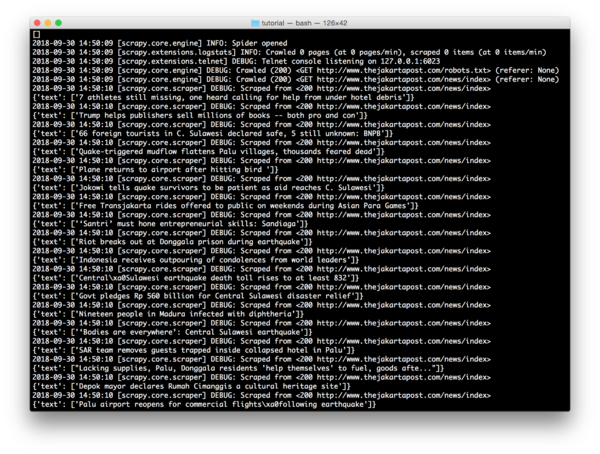

Back to basics: using html5lib and elementtree to extract data from web sites. | |||

While Scrapy has built-in mechanisms which make it easier to programme spiders, this method feels more open to intervention. | |||

I can see every part of the code and manipulate it how I like. | |||

<source lang=python> | <source lang=python> | ||

| Line 73: | Line 76: | ||

if x.text != None and 'trump' in x.text.lower() and x.tag != 'script': | if x.text != None and 'trump' in x.text.lower() and x.tag != 'script': | ||

print (x.text) | print (x.text) | ||

</source> | |||

===== Selenium ===== | |||

Selenium is a framework which automates browsers. | |||

It uses a webdriver to simulate sessions, allowing you to programme actions like following links, scrolling and waiting. | |||

This means its more powerful and can handle more complex scraping! | |||

<br> | |||

Here's the first code that I put together, to scrape some Youtube comments: | |||

<br> | |||

<source lang=python> | |||

# import libraries | |||

from selenium import webdriver | |||

from selenium.webdriver.common.keys import Keys | |||

import os | |||

import time | |||

import datetime | |||

today = datetime.date.today() | |||

# get the url from the terminal | |||

url = input("Enter a url to scrape (include https:// etc.): ") | |||

# Tell Selenium to open a new Firefox session | |||

# and specify the path to the driver | |||

driver = webdriver.Firefox(executable_path=os.path.dirname(os.path.realpath(__file__)) + '/geckodriver') | |||

# Implicit wait tells Selenium how long it should wait before it throws an exception | |||

driver.implicitly_wait(10) | |||

driver.get(url) | |||

time.sleep(3) | |||

# Find the title element on the page | |||

title = driver.find_element_by_xpath('//h1') | |||

print ('Scraping comments from:') | |||

print(title.text) | |||

# scroll to just under the video in order to load the comments | |||

driver.execute_script("window.scrollTo(1, 300);") | |||

time.sleep(3) | |||

# scroll again in order to load more comments | |||

driver.execute_script('window.scrollTo(1, 2000);') | |||

time.sleep(3) | |||

# scroll again in order to load more comments | |||

driver.execute_script('window.scrollTo(1, 4000);') | |||

time.sleep(3) | |||

# Find the element on the page where the comments are stored | |||

comment_div=driver.find_element_by_xpath('//*[@id="contents"]') | |||

comments=comment_div.find_elements_by_xpath('//*[@id="content-text"]') | |||

authors=comment_div.find_elements_by_xpath('//*[@id="author-text"]') | |||

# Extract the contents and add them to the lists | |||

# This will let you create a dictionary later, of authors and comments | |||

authors_list = [] | |||

comments_list = [] | |||

for author in authors: | |||

authors_list.append(author.text) | |||

for comment in comments: | |||

comments_list.append(comment.text) | |||

dictionary = dict(zip(authors_list, comments_list)) | |||

# Print the keys and values of our dictionary to the terminal | |||

# then add them to a print_list which we'll use to write everything to a text file later | |||

print_list = [] | |||

for a, b in dictionary.items(): | |||

print ("Comment by:", str(a), "-"*10) | |||

print (str(b)+"\n") | |||

print_list.append("Comment by: "+str(a)+" -"+"-"*10) | |||

print_list.append(str(b)+"\n") | |||

# Done? Great! | |||

# Change the list into a collection of strings | |||

# Open a txt file and put them there | |||

# In case the file already exists, then just paste it at the bottom | |||

# Super handy if you want to run the script for multiple sites and collect text | |||

# in just one file | |||

print_list_strings = "\n".join(print_list) | |||

text_file = open("results.txt", "a+") | |||

text_file.write("Video: "+title.text+"\n") | |||

text_file.write("Date:"+str(today)+"\n"+"\n") | |||

text_file.write(print_list_strings+"\n") | |||

text_file.close() | |||

# close the browser | |||

driver.close() | |||

</source> | </source> | ||

See workshop pad here: https://pad.xpub.nl/p/pyratechnic1 | See workshop pad here: https://pad.xpub.nl/p/pyratechnic1 | ||

Revision as of 13:41, 6 October 2018

Prototyping 1 & 2

Possible topics to explore:

- anonymity

- creating safe, temporary, local networks (freedom of speech - freedom of connection!) http://rhizome.org/editorial/2018/sep/11/rest-in-peace-ethira-an-interview-with-amalia-ulman/

- censorship

- scraping, archiving

- documenting redactions

- steganography?

- meme culture

Learning to use Scrapy

Scrapy is an application framework for crawling web sites and extracting structured data which can be used for a wide range of useful applications, like data mining, information processing or historical archival. Even though Scrapy was originally designed for web scraping, it can also be used to extract data using APIs (such as Amazon Associates Web Services) or as a general purpose web crawler.

Documentation: https://docs.scrapy.org/en/latest/index.html

Scraping headlines from an Indonesian news site:

Using a spider to extract header elements (H5) from: http://www.thejakartapost.com/news/index

import scrapy


class TitlesSpider(scrapy.Spider):
    name = "titles"

    def start_requests(self):
        urls = [
            'http://www.thejakartapost.com/news/index',
        ]
        for url in urls:
            yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):
        for title in response.css('h5'):
            yield {
                'text': title.css('h5::text').extract()
            }

Crawling and saving to a json file:

scrapy crawl titles -o titles.json
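
Once the crawl has finished, the exported file can be read back with Python's json module. Here is a minimal sketch, assuming titles.json holds a list of objects shaped like the items the spider yields (a "text" key holding a list of strings). The helper name flatten_titles and the sample data are made up for illustration:

```python
import json


def flatten_titles(items):
    """Turn Scrapy items like {'text': [' Headline ']} into a flat list of clean strings."""
    titles = []
    for item in items:
        for text in item.get("text", []):
            cleaned = " ".join(text.split())  # collapse whitespace and newlines
            if cleaned:
                titles.append(cleaned)
    return titles


# Hypothetical sample in the same shape as the spider's output
sample = json.loads('[{"text": ["  Headline one \\n"]}, {"text": []}, {"text": ["Headline two"]}]')
print(flatten_titles(sample))
```

To try it on a real crawl, the sample could be swapped for json.load() on the opened titles.json file.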

To explore

- NewsDiffs – as a way to expose the historiography of an article

- how about looking at comments? what can you scrape (and analyse) from social media?

- how far can you go without using an API?

- self-censorship: can you track the things people write but then retract?

- An Anthem to Open Borders
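
The NewsDiffs and self-censorship ideas in this list both come down to comparing two snapshots of the same text. As a rough sketch of how that could work with Python's standard difflib module (the function name retracted_and_added and both sample snapshots are invented for illustration, not scraped data):

```python
import difflib


def retracted_and_added(old_lines, new_lines):
    """Compare two snapshots and return (lines that disappeared, lines that appeared)."""
    removed, added = [], []
    for line in difflib.ndiff(old_lines, new_lines):
        if line.startswith("- "):
            removed.append(line[2:])
        elif line.startswith("+ "):
            added.append(line[2:])
        # lines starting with "  " are unchanged, "? " lines are only hints
    return removed, added


# Two made-up snapshots of the same page, taken at different times
monday = ["Minister denies report", "Weather: rain expected", "Editorial: open borders"]
tuesday = ["Minister confirms report", "Weather: rain expected"]

removed, added = retracted_and_added(monday, tuesday)
print("Retracted:", removed)
print("Added:", added)
```

Running a scraper on a schedule and feeding consecutive results into a comparison like this would be one way to surface what was quietly changed or taken down.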

Scrape, rinse, repeat!

HTML5lib

Back to basics: using html5lib and elementtree to extract data from web sites. While Scrapy has built-in mechanisms which make it easier to programme spiders, this method feels more open to intervention. I can see every part of the code and manipulate it how I like.

import html5lib
from xml.etree import ElementTree as ET
from urllib.request import urlopen

with urlopen('https://www.dailymail.co.uk') as f:
    t = html5lib.parse(f, namespaceHTMLElements=False)

# finding specific words in text content
for x in t.iter():
    if x.text is not None and 'trump' in x.text.lower() and x.tag != 'script':
        print(x.text)
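
One thing to watch for with ElementTree: text that comes after a child element is stored in that child's .tail, not in the parent's .text, so a loop that only checks .text can miss some matches. Below is a small sketch of a helper that checks both. The name find_mentions is made up, and the sample is parsed with ElementTree directly (rather than html5lib) only so that the example has no dependencies:

```python
from xml.etree import ElementTree as ET


def find_mentions(root, keyword, skip_tags=("script", "style")):
    """Return every text fragment in the tree that contains keyword (case-insensitive)."""
    keyword = keyword.lower()
    found = []
    for el in root.iter():
        # .text belongs to el itself, so ignore it for script/style elements
        if el.tag not in skip_tags and el.text and keyword in el.text.lower():
            found.append(el.text.strip())
        # .tail is the text after el's closing tag, so it belongs to the parent
        if el.tail and keyword in el.tail.lower():
            found.append(el.tail.strip())
    return found


# Made-up sample markup standing in for a parsed page
sample = ET.fromstring(
    "<body><p>Trump said <b>nothing</b> about trump towers</p>"
    "<script>var trump = 1;</script></body>"
)
print(find_mentions(sample, "trump"))
```

The same function should work on the tree returned by html5lib.parse(..., namespaceHTMLElements=False), since that is also an ElementTree element.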

Selenium

Selenium is a framework which automates browsers.

It uses a webdriver to simulate sessions, allowing you to programme actions like following links, scrolling and waiting.

This means it's more powerful and can handle more complex scraping, like pages that only load their content once you scroll!

Here's the first code that I put together, to scrape some YouTube comments:

# import libraries
# (written for Selenium 3; Selenium 4 replaced find_element_by_xpath with
# find_element(By.XPATH, ...) and takes the driver path through a Service object)
from selenium import webdriver
import os
import time
import datetime

today = datetime.date.today()

# get the url from the terminal
url = input("Enter a url to scrape (include https:// etc.): ")

# Tell Selenium to open a new Firefox session
# and specify the path to the driver (geckodriver sits next to this script)
driver = webdriver.Firefox(executable_path=os.path.dirname(os.path.realpath(__file__)) + '/geckodriver')

# Implicit wait tells Selenium how long to keep looking for an element
# before it throws an exception
driver.implicitly_wait(10)
driver.get(url)
time.sleep(3)

# Find the title element on the page
title = driver.find_element_by_xpath('//h1')
print('Scraping comments from:')
print(title.text)

# scroll to just under the video in order to load the comments
driver.execute_script("window.scrollTo(1, 300);")
time.sleep(3)

# scroll again in order to load more comments
driver.execute_script('window.scrollTo(1, 2000);')
time.sleep(3)

# scroll again in order to load more comments
driver.execute_script('window.scrollTo(1, 4000);')
time.sleep(3)

# Find the element on the page where the comments are stored
# (note: an XPath that starts with // searches the whole page,
# even when it is called on comment_div)
comment_div = driver.find_element_by_xpath('//*[@id="contents"]')
comments = comment_div.find_elements_by_xpath('//*[@id="content-text"]')
authors = comment_div.find_elements_by_xpath('//*[@id="author-text"]')

# Extract the contents and add them to the lists
# Then pair each author with their comment. This is a list of pairs rather
# than a dictionary, so several comments by the same author are all kept
authors_list = []
comments_list = []
for author in authors:
    authors_list.append(author.text)
for comment in comments:
    comments_list.append(comment.text)
pairs = list(zip(authors_list, comments_list))

# Print the authors and comments to the terminal
# then add them to a print_list which we'll use to write everything to a text file later
print_list = []
for a, b in pairs:
    print("Comment by:", str(a), "-"*10)
    print(str(b)+"\n")
    print_list.append("Comment by: "+str(a)+" -"+"-"*10)
    print_list.append(str(b)+"\n")

# Done? Great!
# Join the list into one string
# Open a txt file and put it there
# In case the file already exists, then just paste it at the bottom
# Super handy if you want to run the script for multiple sites and collect text
# in just one file
print_list_strings = "\n".join(print_list)
with open("results.txt", "a+") as text_file:
    text_file.write("Video: "+title.text+"\n")
    text_file.write("Date: "+str(today)+"\n"+"\n")
    text_file.write(print_list_strings+"\n")

# close the browser and end the session
driver.quit()
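
The script scrolls three times to fixed positions, so it only ever loads a fixed amount of comments. One possible alternative is to keep scrolling until the number of loaded comments stops growing. The sketch below is not tied to Selenium: scroll_until_stable is a hypothetical helper that takes two callables, so it can be tried out with a fake page first. With a real driver, count_items could be something like a lambda returning len(driver.find_elements_by_xpath('//*[@id="content-text"]')) and scroll_down could call driver.execute_script. Both are assumptions and have not been tested against YouTube.

```python
import time


def scroll_until_stable(count_items, scroll_down, pause=3, max_rounds=20):
    """Scroll until count_items() stops increasing or max_rounds is reached.

    Returns the final item count.
    """
    previous = count_items()
    for _ in range(max_rounds):
        scroll_down()
        time.sleep(pause)  # give the page time to load new items
        current = count_items()
        if current == previous:
            break  # nothing new appeared, assume we reached the end
        previous = current
    return previous


class FakePage:
    """Stand-in for a browser page: each scroll loads 20 more items, up to 50."""

    def __init__(self):
        self.loaded = 10

    def scroll(self):
        self.loaded = min(self.loaded + 20, 50)

    def count(self):
        return self.loaded


page = FakePage()
print(scroll_until_stable(page.count, page.scroll, pause=0))
```

The max_rounds limit is there so that a page with endless content cannot keep the script running forever.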

See workshop pad here: https://pad.xpub.nl/p/pyratechnic1