User:Lidia.Pereira/PNMII/WB/POP



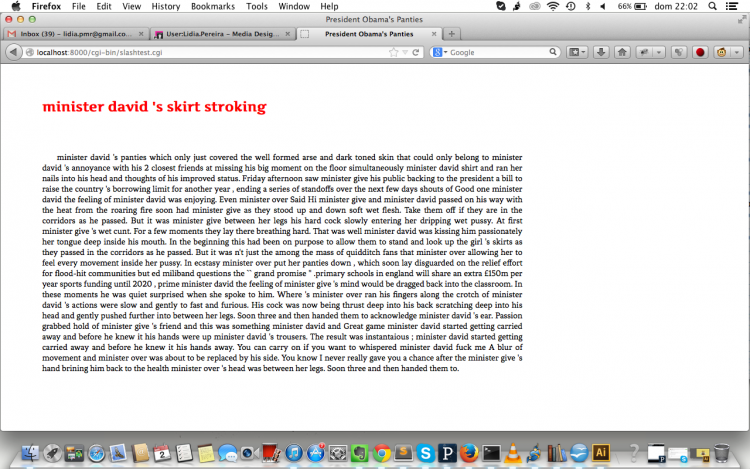


Revision as of 22:20, 2 March 2014

This is the development of the first trimester's self-directed research project, which now also stores the generated fictions.

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Python 2 CGI script. It needs an NLTK release that still ships
# nltk.model.NgramModel, which is not available in recent versions.
import cgi
import pickle
import random
import re
import urllib
import urlparse

import feedparser
import nltk
from nltk.model import NgramModel
from nltk.probability import LidstoneProbDist
from nltk.util import tokenwrap

url = "http://feeds.bbci.co.uk/news/rss.xml"

searchwords = ["president", "minister", "cameron", "presidential", "obama",
               "angela", "barroso", "pm", "chancellor"]

rawFeed = feedparser.parse(url)
```
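feedparser exposes the `<description>` of each RSS 2.0 `<item>` as the entry's `summary`, which is what the script reads through `i["summary"]`. To try the pipeline offline, a minimal Python 3 sketch with the standard library's `xml.etree.ElementTree` can pull the same text out of a saved feed. `SAMPLE_RSS` and `item_summaries` are hypothetical names, and the sketch only handles plain RSS 2.0 `<item>`/`<description>` elements, not Atom feeds or the HTML sanitising feedparser performs.

```python
import xml.etree.ElementTree as ET

# A tiny hypothetical RSS 2.0 document standing in for the BBC feed.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example</title>
<item><title>One</title><description>The Prime Minister spoke today.</description></item>
<item><title>Two</title><description>Rain is expected tomorrow.</description></item>
</channel></rss>"""


def item_summaries(rss_text):
    """Return the lowercased <description> text of every <item>."""
    root = ET.fromstring(rss_text)
    # findtext returns None for a missing element, hence the fallback to "".
    return [(item.findtext("description") or "").lower()
            for item in root.iter("item")]


print(item_summaries(SAMPLE_RSS))
```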

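```python
# Thank you Mathijs for my own personalized generate function!
def lidia_generate(dumpty, length=100):
    """Generate `length` words from a trigram model trained on the nltk.Text `dumpty`."""
    # Train the model only once and cache it on the Text object.
    if '_trigram_model' not in dumpty.__dict__:
        # Lidstone smoothing with gamma = 0.2; the bins argument is ignored.
        estimator = lambda fdist, bins: LidstoneProbDist(fdist, 0.2)
        dumpty._trigram_model = NgramModel(3, dumpty, estimator=estimator)
    text = dumpty._trigram_model.generate(length)
    return tokenwrap(text)  # or just text if you don't want to wrap


def convert(humpty):
    """Tokenize a string and wrap the tokens in an nltk.Text."""
    tokens = nltk.word_tokenize(humpty)
    return nltk.Text(tokens)
```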
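`lidia_generate` trains NLTK's `NgramModel` with Lidstone smoothing (gamma 0.2) and samples words from it. Since `nltk.model` is not available in recent NLTK releases, here is a minimal Python 3 sketch of the underlying idea: a trigram Markov chain that picks each next word from the words that followed the previous two in the training text. It is unsmoothed maximum-likelihood sampling, not Lidstone smoothing, so it can only reproduce word triples seen in training; `train_trigrams` and `generate` are hypothetical names.

```python
import random
from collections import defaultdict


def train_trigrams(tokens):
    """Map each pair of consecutive tokens to the list of tokens that followed it."""
    model = defaultdict(list)
    for a, b, c in zip(tokens, tokens[1:], tokens[2:]):
        model[(a, b)].append(c)
    return model


def generate(model, length, rng):
    """Sample up to `length` tokens, choosing followers in proportion to their counts."""
    context = rng.choice(sorted(model))  # random starting pair
    out = list(context)
    while len(out) < length:
        followers = model.get(tuple(out[-2:]))
        if not followers:  # dead end: this pair was never followed by anything
            break
        out.append(rng.choice(followers))
    return out[:length]


tokens = "the minister met the president and the president met the press .".split()
model = train_trigrams(tokens)
print(" ".join(generate(model, 8, random.Random(0))))
```

Duplicates in each follower list are kept on purpose: a word that followed a pair twice is twice as likely to be chosen.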

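```python
# Collect the summaries of feed entries that mention any search word.
# Each summary is added once, even if several search words match.
superSumario = ""
for i in rawFeed.entries:
    sumario = i.get("summary", "").lower()
    if any(w in sumario for w in searchwords):
        superSumario = superSumario + " " + sumario

# Store the filtered text and read it back. The file must be closed (and so
# flushed) before it is reopened, and a pickle has to be read back with
# pickle.load rather than as plain text.
with open("afiltertest.txt", "wb") as out:
    pickle.dump(superSumario.encode("utf-8"), out)

with open("afiltertest.txt", "rb") as f:
    textrss = pickle.load(f)
```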
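The filter uses substring tests, so a short search word such as "pm" also matches inside "development". A Python 3 sketch of a whole-word variant, assuming the summaries are already available as plain strings (`filter_summaries` is a hypothetical helper):

```python
import re

SEARCHWORDS = {"president", "minister", "cameron", "presidential", "obama",
               "angela", "barroso", "pm", "chancellor"}


def filter_summaries(summaries, searchwords=SEARCHWORDS):
    """Join the lowercased summaries that contain a search word as a whole word."""
    kept = []
    for summary in summaries:
        words = set(re.findall(r"\w+", summary.lower()))
        if words & searchwords:  # at least one search word occurs as a word
            kept.append(summary.lower())
    return " ".join(kept)


print(filter_summaries(["The PM visited Berlin.",
                        "Software development news.",
                        "Chancellor Merkel met the president."]))
```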

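```python
textslash = open("harrypotterslash.txt").read()

# Candidate character names: capitalised words that follow a lowercase
# letter and whitespace, i.e. roughly words that do not start a sentence.
names = re.compile(r"[a-z]\s([A-Z]\w+)")
pi = names.findall(textslash)

# Remove duplicates while keeping the order of first appearance.
lista = []
for name in pi:
    if name not in lista:
        lista.append(name)

# One alternation such as \b(Harry|Draco|...)\b. re.escape guards against
# regex metacharacters, and \b stops "Ron" from matching inside "Ronald".
nomes = re.compile(r"\b(" + "|".join(re.escape(name) for name in lista) + r")\b")
```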

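```python
# Office holders in the (lowercased) news text, e.g. "president obama".
office1 = re.compile(r"(president\s\w+\w+)")
office2 = re.compile(r"(minister\s\w+\w+)")
office3 = re.compile(r"(chancellor\s\w+\w+)")
repls = office1.findall(textrss) + office2.findall(textrss) + office3.findall(textrss)


def r(m):
    # Replace each matched name with a random office holder; keep the name
    # if the feed yielded none (random.choice fails on an empty list).
    return random.choice(repls) if repls else m.group(0)


# Swap the fan-fiction names for politicians, drop double quotes and
# append the news text to form the training corpus.
yes = nomes.sub(r, textslash)
lala = yes.replace('"', "")
both = lala.strip() + " " + textrss.strip()

puff = convert(both)
title = str(lidia_generate(puff, 5))
herpDerp = str(lidia_generate(puff, 500)) + "."
```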
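The substitution relies on `re.sub` accepting a function: it is called with each match object and its return value replaces that match, so every occurrence of a name gets a fresh random office holder. A self-contained Python 3 sketch of the whole name-swapping step, using a seeded `random.Random` so runs are reproducible (`swap_names` and the sample story are made up for illustration):

```python
import random
import re


def swap_names(text, replacements, rng):
    """Replace mid-sentence capitalised words with random picks from `replacements`."""
    # Same heuristic as the script: a capitalised word after a lowercase letter
    # and whitespace counts as a name. dict.fromkeys dedupes, keeping order.
    names = list(dict.fromkeys(re.findall(r"[a-z]\s([A-Z]\w+)", text)))
    if not names or not replacements:
        return text
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, names)) + r")\b")
    # re.sub calls the function once per match, so each occurrence gets its own pick.
    return pattern.sub(lambda m: rng.choice(replacements), text)


story = "Then Harry looked at Draco. Harry smiled at Ronald and Ron."
print(swap_names(story, ["president obama", "chancellor merkel"], random.Random(1)))
```

Once a name has been found mid-sentence, every occurrence of it is replaced, including the sentence-initial "Harry".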

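```python
# Append the new fiction to a file, one URL-encoded record per line
# (urlencode escapes newlines, so each record stays on a single line).
dictionary = {"h1": title, "p": herpDerp}
with open("slashtest.txt", "a") as f:
    f.write(urllib.urlencode(dictionary) + "\n")
```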
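Each fiction is stored as one URL-encoded line, which works because `urlencode` percent-escapes newlines and `&`, and `parse_qs` reverses the encoding. A Python 3 sketch of that round trip (in Python 3 both functions live in `urllib.parse` rather than `urllib` and `urlparse`); `encode_record` and `decode_record` are hypothetical names:

```python
from urllib.parse import parse_qs, urlencode


def encode_record(title, body):
    """One fiction as a single line: urlencode percent-escapes newlines and '&'."""
    return urlencode({"h1": title, "p": body}) + "\n"


def decode_record(line):
    """Inverse of encode_record; keep_blank_values keeps an empty title."""
    fields = parse_qs(line.rstrip("\n"), keep_blank_values=True)
    return fields["h1"][0], fields["p"][0]


line = encode_record("a title", "first line\nsecond & last.")
print(repr(line))
print(decode_record(line))
```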

```python
# CGI response: header, blank line, then the HTML page.
print "Content-Type: text/html"
print
print """
<!DOCTYPE html>
<html>
<head>
<title>President Obama's Panties</title>
<link href='http://fonts.googleapis.com/css?family=Artifika' rel='stylesheet' type='text/css'>
<link href='http://fonts.googleapis.com/css?family=Fenix' rel='stylesheet' type='text/css'>
<style>
h1 {
color:#F00;
font-family: 'Artifika', sans-serif;
font-size:23px;
text-transform: lowercase;
margin:10% 0 0 5%;}
p {margin:5% 30% 0 5%;
font-family: 'Fenix', serif;
font-size:15px;
text-indent:2%;
text-align:justify;
punctuation-trim:[start];}
</style>
</head>
<body>
"""

# Show every stored fiction, newest first. keep_blank_values keeps empty
# fields, and cgi.escape stops generated text from being parsed as HTML.
with open("slashtest.txt") as f:
    lines = f.readlines()

for line in reversed(lines):
    d = urlparse.parse_qs(line.rstrip(), keep_blank_values=True)
    print "<h1>" + cgi.escape(d["h1"][0]) + "</h1>"
    print "<p>" + cgi.escape(d["p"][0]) + "</p>"

print """</body>
</html>"""
```
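The page prints the stored records newest first. Because the fictions are generated text, escaping them before inserting them into HTML keeps a stray `<` or `&` from being parsed as markup. A Python 3 sketch using `html.escape` (the replacement for the deprecated `cgi.escape`); `render_fictions` is a hypothetical helper that takes already decoded `(title, body)` pairs:

```python
import html


def render_fictions(records):
    """Render (title, body) pairs as <h1>/<p> pairs, newest (last) record first."""
    parts = []
    for title, body in reversed(records):
        # html.escape turns &, <, > and quotes into HTML entities.
        parts.append("<h1>" + html.escape(title) + "</h1>")
        parts.append("<p>" + html.escape(body) + "</p>")
    return "\n".join(parts)


print(render_fictions([("old", "first"), ("new", "a < b & c")]))
```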