Comparing Terms

Author: Lucia Dossin

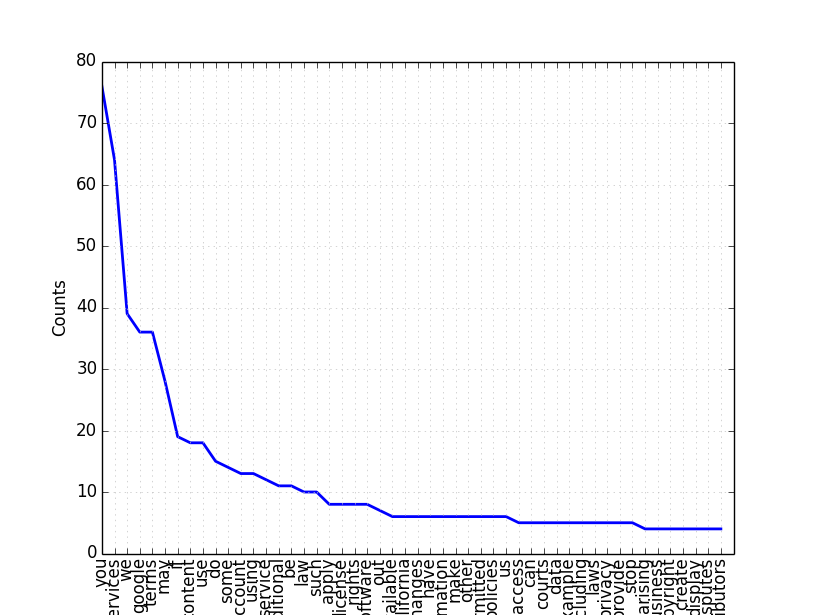

Python code, using NLTK, that compares three offline copies of the Google Terms of Service: the current version (Nov 2013), the previous version (Mar 2012) and the one before that (Apr 2007). For each text file, the code counts the words (leaving out common function words and punctuation), identifies the 50 most frequently used ones and displays a plot of their frequency distribution.

<source lang='python'>
# Python 3. Requires NLTK plus matplotlib (used by FreqDist.plot), and the Punkt
# tokenizer models for word_tokenize: nltk.download('punkt') ('punkt_tab' in recent NLTK)
import nltk
from nltk import FreqDist

# Words and punctuation to leave out of the counts. Tokens are lowercased
# before the check, so only lowercase entries are needed.
exclist = {'to', 'or', 'the', 'and', 'our', 'of', 'that', 'in', 'your', 'any',
           'a', 'an', 'not', 'for', 'will', 'these', 'are', 'is', 'by', 'as',
           'with', 'about', 'from', 'under', 'those', 'on', 'this', 'at',
           'which', "'s", "n't", 'its', 'it',
           '.', ',', ':', ';', '!', '?', '(', ')', "'"}

tdates = ['Nov 11, 2013', 'Mar 01, 2012', 'Apr 16, 2007']  # no longer in use

# One text file per version (UTF-8 assumed): google-00.txt, google-01.txt, google-02.txt
for i in range(3):
    words = []
    with open('google-0' + str(i) + '.txt', encoding='utf-8') as f:
        for line in f:
            line = line.replace('.', '')  # remove periods, e.g. at sentence ends
            for word in nltk.word_tokenize(line):
                word = word.lower()
                if word not in exclist:
                    words.append(word)
    fd = FreqDist(words)
    fd.plot(50)  # plot the 50 most frequent words of this version
</source>
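The script above draws one plot per version, so the actual comparison is left to the eye. A minimal sketch of comparing the top-word lists directly follows. It is not the page's code: it swaps NLTK's `word_tokenize` and `FreqDist` for a simple regular-expression tokenizer and `collections.Counter` so that it runs with the standard library alone, and the helper names `top_words` and `compare_top` and the sample sentences are hypothetical, for illustration only.

```python
import re
from collections import Counter

# Word entries of the page's exclist; "'s", "n't" and punctuation are left out
# because the regex tokenizer below never produces them as tokens.
EXCLUDE = {'to', 'or', 'the', 'and', 'our', 'of', 'that', 'in', 'your', 'any',
           'a', 'an', 'not', 'for', 'will', 'these', 'are', 'is', 'by', 'as',
           'with', 'about', 'from', 'under', 'those', 'on', 'this', 'at',
           'which', 'its', 'it'}


def top_words(text, n=50):
    """Return the n most frequent non-excluded words as (word, count) pairs.

    Hypothetical helper: tokens are runs of lowercase letters, optionally with
    one internal apostrophe (an assumption standing in for nltk.word_tokenize).
    """
    tokens = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    return Counter(t for t in tokens if t not in EXCLUDE).most_common(n)


def compare_top(old_text, new_text, n=50):
    """Hypothetical helper: words that entered or left the top-n list."""
    old = {w for w, _ in top_words(old_text, n)}
    new = {w for w, _ in top_words(new_text, n)}
    return {'gained': sorted(new - old), 'dropped': sorted(old - new)}


if __name__ == '__main__':
    older = "Google may share data. Google may share data with partners. Google may stop."
    newer = "You own your content. You may delete content. You may delete content anytime."
    print(compare_top(older, newer, n=4))
    # {'gained': ['content', 'delete', 'you'], 'dropped': ['data', 'google', 'share']}
```

Reading the page's three text files into strings and calling `compare_top` on consecutive versions would list the words that entered or left each top-50 list. Set differences ignore how far a word moved within the list; comparing the counts themselves would be the next step if that matters.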

Output files:

Figure 1 (Nov 2013)

[[File:Figure 1.png]]

Improvements to be done:

. read the texts directly from Google's servers and parse the HTML

. define the words to exclude in code, according to word category (adverb, article, preposition, etc.)

. save the plots as .png files from code
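The first two improvements listed above could be approached roughly as follows. This is a sketch under assumptions, not the page's code: `TextExtractor`, `html_to_text`, `fetch_text`, `content_words` and `EXCLUDED_TAG_PREFIXES` are hypothetical names; the HTML is parsed with the standard library's `html.parser` rather than a dedicated scraping library; and word categories are filtered on Penn Treebank tags, the tag set `nltk.pos_tag` returns by default. Which tags count as "words to exclude" is an assumed choice.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collect the text of an HTML page, skipping <script> and <style> bodies."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self.parts = []
        self._skip = 0  # nesting depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in ('script', 'style'):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ('script', 'style') and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Return the text of an HTML string, pieces joined by single spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return ' '.join(parser.parts)


def fetch_text(url):
    """Download a page (network access assumed) and return its text."""
    with urlopen(url) as resp:
        charset = resp.headers.get_content_charset() or 'utf-8'
        return html_to_text(resp.read().decode(charset))


# Penn Treebank tag prefixes treated as function-word categories (assumed choice):
# DT determiners/articles, IN prepositions, CC conjunctions, PRP pronouns,
# TO 'to', MD modals, RB adverbs (also catches "n't"), WDT/WP wh-words, POS "'s".
EXCLUDED_TAG_PREFIXES = ('DT', 'IN', 'CC', 'PRP', 'TO', 'MD', 'RB', 'WDT', 'WP', 'POS')


def content_words(tagged):
    """Lowercased words from (word, tag) pairs, minus the excluded categories.

    Tags that do not start with a letter ('.', ',', ':', '(' ...) mark
    punctuation and are dropped as well.
    """
    return [word.lower() for word, tag in tagged
            if tag[:1].isalpha() and not tag.startswith(EXCLUDED_TAG_PREFIXES)]
```

With NLTK and its tagger models installed, the pieces might be chained as `content_words(nltk.pos_tag(nltk.word_tokenize(fetch_text(url))))`, where `url` is the address of a Terms of Service page; the resulting list could go straight into `FreqDist` in place of the hand-written exclusion list.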

Revision as of 09:04, 9 December 2013
