User:SN/Sketch 004: Difference between revisions

No edit summary |

No edit summary |

||

| (5 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<div style='width:900px;'> | |||

{{vimeo|172228213}} | |||

== About the project == | == About the project == | ||

Sketch #004 | Sketch #004 was made for an exhibition 'Boundaries of the Archive' that took place at EYE Museum 12/05 — 24/05/2016. | ||

The piece is a manipulation of silent films from the Eye Archive and Prelinger collection. The metadata used for indexing the material provides the starting point. The formal parameters of the image source are separated from the narrative to produce sound. Motion vectors and histogram data were isolated from the source to generate an audio track and reassociate it with the footage. The categorisation processes distort the understanding of the narrative content of the silent film the same way the formal characteristics of the video distort the sound. The work emphasizes that the selective nature of any archival system is biased. | The piece is a manipulation of silent films from the Eye Archive and Prelinger collection. The metadata used for indexing the material provides the starting point. The formal parameters of the image source are separated from the narrative to produce sound. Motion vectors and histogram data were isolated from the source to generate an audio track and reassociate it with the footage. The categorisation processes distort the understanding of the narrative content of the silent film the same way the formal characteristics of the video distort the sound. The work emphasizes that the selective nature of any archival system is biased. | ||

| Line 19: | Line 17: | ||

==Process== | ==Process== | ||

* Research indexing and archival system of the EYE archive, primary selection of the videos | |||

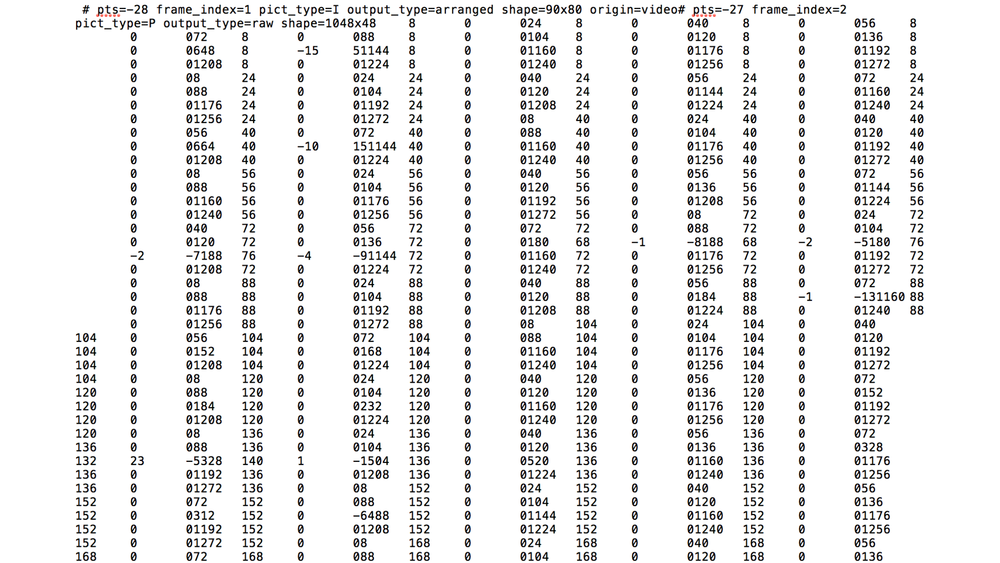

* Video source analysis, motion vector extraction and processing with Python 2.7 | |||

[[File:Motion vectors.png|1000px]] | |||

<source lang="python"> | |||

import math | |||

frame_data = {} | |||

s = 0 | |||

n = 0 | |||

fps = 5 | |||

mpers = [] | |||

for line in open('vectors.txt', 'r'): | |||

if line.startswith('#'): | |||

# filling dictionary keys with frame indexes | |||

options = {key: value for key, value in | |||

[token.split('=') for token in line[1:].split()] | |||

} | |||

curr_frame = int(options['frame_index']) | |||

curr_data = [] | |||

frame_data[curr_frame] = curr_data | |||

else: | |||

# filling dictionary values with motion vector magnitude | |||

x, y, vx, vy = map(int, line.split()) | |||

frame_data[curr_frame].append((math.sqrt(vx**2 + vy**2))) | |||

# arithmetic mean of motion vectors magnitude per frame | |||

for key, value in frame_data.iteritems(): | |||

if not len(value)==0: | |||

frame_data[key]=sum(value)/len(value) | |||

if len(value)==0: | |||

frame_data[key]= 0 | |||

print "done", frame_data | |||

# arithmetic mean of motion vectors magnitude per second | |||

for k,v in frame_data.iteritems(): | |||

s += v | |||

if k%fps == 0: | |||

mpers.append((s/fps)) | |||

s = 0 | |||

print mpers, len(mpers) | |||

# writing to a file | |||

vectors = open('vectors12.txt', 'w+') | |||

for m in mpers: | |||

vectors.write(str(n+1) + ", " + str(m) + ";" + "\n") | |||

n = n+1 | |||

vectors.close() | |||

print "done" | |||

</source> | |||

* Frames extraction and brightness/contrast analysis with FFMPEG, Python 2.7 | |||

<source lang="python"> | |||

from glob import glob | |||

from PIL import Image | |||

from PIL import ImageStat | |||

import math | |||

import os, sys | |||

from cStringIO import StringIO | |||

n = 0 | |||

brightness = [] | |||

ff = glob("frames/*") | |||

ff.sort() | |||

print len(ff) | |||

for f in ff: | |||

im = Image.open(f) | |||

stat = ImageStat.Stat(im) | |||

r,g,b = stat.mean | |||

bright = math.sqrt(0.241*(r**2) + 0.691*(g**2) + 0.068*(b**2)) | |||

print bright | |||

brightness.append(bright) | |||

print len(brightness) | |||

data = open('brightness.txt', 'w+') | |||

for d in brightness: | |||

data.write(str(n+1) + ", " + str(d) + ";" + "\n") | |||

n = n+1 | |||

data.close() | |||

</source> | |||

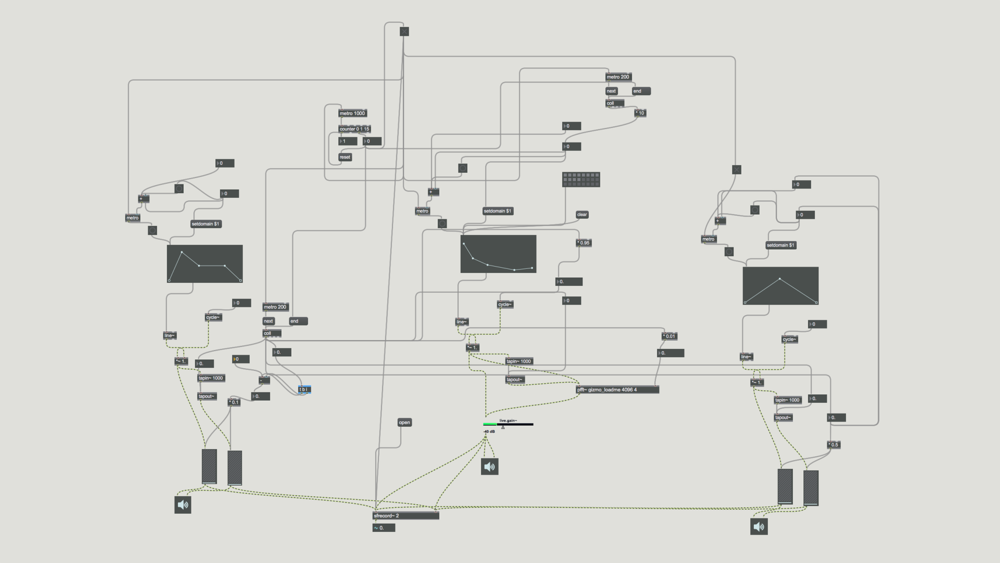

* Sound generation with MAX7 | |||

[[File:Sound_generation.png|1000px]] | |||

* Compositing in After Effects | |||

</div> | |||

Latest revision as of 14:05, 5 December 2016

About the project

Sketch #004 was made for the exhibition 'Boundaries of the Archive', which took place at EYE Museum from 12/05 to 24/05/2016.

The piece is a manipulation of silent films from the Eye Archive and Prelinger collection. The metadata used for indexing the material provides the starting point. The formal parameters of the image source are separated from the narrative to produce sound. Motion vectors and histogram data were isolated from the source to generate an audio track, which was then reassociated with the footage. The categorisation processes distort the understanding of the narrative content of the silent film in the same way that the formal characteristics of the video distort the sound. The work emphasizes that any archival system is selective and therefore biased.

About the exhibition

The ResearchLab of the Master Media Design programme at the Piet Zwart Institute focuses on the boundaries of the archive. As media practitioners studying the structures and cultural impacts of our media technologies, the students concentrate on the intricate and usually hidden aspects of EYE’s extensive archive.

The EYE Collection is internationally recognized for its outstanding historical breadth and quality, particularly in relation to Dutch cinema culture. Any limitations, frictions and little-noticed quirks in the archival system serve as poetic inspiration.

The exhibition coincides with the move of the EYE Collection to new premises. This provides a starting point to explore the materiality of both digital and analog films, the poetics of cataloging them, and the fragile semantics of a vast collection database.

Process

- Research into the indexing and archival system of the EYE archive, primary selection of the videos

- Video source analysis, motion vector extraction and processing with Python 2.7

<source lang="python">
from __future__ import division, print_function

import math

FPS = 5  # number of consecutive frames averaged into one second
frame_data = {}  # frame index -> list of motion vector magnitudes

with open('vectors.txt', 'r') as source:
    for line in source:
        if not line.strip():
            continue  # skip empty lines
        if line.startswith('#'):
            # header line of key=value tokens: start a new frame entry
            options = dict(token.split('=', 1) for token in line[1:].split())
            curr_frame = int(options['frame_index'])
            frame_data[curr_frame] = []
        else:
            # data line: position (x, y) and motion vector (vx, vy)
            x, y, vx, vy = map(int, line.split())
            frame_data[curr_frame].append(math.sqrt(vx**2 + vy**2))

# arithmetic mean of motion vector magnitude per frame, in frame order
# (a frame without vectors counts as 0)
frame_means = []
for key in sorted(frame_data):
    value = frame_data[key]
    frame_means.append(sum(value) / len(value) if value else 0)
print("done", frame_means)

# arithmetic mean of motion vector magnitude per second:
# average each run of FPS consecutive frames (a shorter trailing run is dropped)
mpers = [sum(frame_means[i:i + FPS]) / FPS
         for i in range(0, len(frame_means) - FPS + 1, FPS)]
print(mpers, len(mpers))

# writing to a file, one "index, value;" line per second
with open('vectors12.txt', 'w') as vectors:
    for n, m in enumerate(mpers, 1):
        vectors.write(str(n) + ", " + str(m) + ";\n")
print("done")
</source>
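The script above implies a particular layout for `vectors.txt`: a header line starting with `#` that carries `key=value` tokens including `frame_index`, followed by one `x y vx vy` line per motion vector. As a minimal sketch of the parsing and per-frame averaging on a small in-memory sample, the step could look like this. The sample values and the function name `mean_magnitude_per_frame` are made up for illustration, and Python 3 is assumed here rather than the Python 2.7 used for the project.

```python
import math

# Hypothetical sample in the layout the script above expects.
SAMPLE = """\
# frame_index=1
0 0 3 4
16 0 6 8
# frame_index=2
# frame_index=3
0 0 0 5
"""


def mean_magnitude_per_frame(text):
    """Return {frame_index: mean motion vector magnitude} for the given text."""
    frames = {}
    current = None
    for line in text.splitlines():
        if not line.strip():
            continue
        if line.startswith('#'):
            # Header line: read the frame index from the key=value tokens.
            options = dict(token.split('=', 1) for token in line[1:].split())
            current = int(options['frame_index'])
            frames[current] = []
        else:
            # Data line: only the vector components are needed.
            x, y, vx, vy = map(int, line.split())
            frames[current].append(math.hypot(vx, vy))
    # A frame without vectors counts as no motion.
    return {k: (sum(v) / len(v) if v else 0.0) for k, v in frames.items()}


means = mean_magnitude_per_frame(SAMPLE)
print(means)
```

In this sample, frame 1 holds vectors of length 5 and 10, frame 2 holds none, and frame 3 holds a single vector of length 5.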

- Frame extraction and brightness/contrast analysis with FFmpeg and Python 2.7

<source lang="python">
from __future__ import print_function

import math
from glob import glob

from PIL import Image, ImageStat

brightness = []

# frames in file name order
ff = sorted(glob("frames/*"))
print(len(ff))

for f in ff:
    # convert so that grayscale or RGBA frames also yield three channel means
    im = Image.open(f).convert('RGB')
    r, g, b = ImageStat.Stat(im).mean
    # brightness as a weighted root mean square of the channel means
    bright = math.sqrt(0.241*(r**2) + 0.691*(g**2) + 0.068*(b**2))
    print(bright)
    brightness.append(bright)

print(len(brightness))

# writing to a file, one "index, value;" line per frame
with open('brightness.txt', 'w') as data:
    for n, d in enumerate(brightness, 1):
        data.write(str(n) + ", " + str(d) + ";\n")
</source>
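The brightness formula above weights the squared channel means and takes the square root. Because the three weights sum to 1, a uniformly grey frame maps to its own grey level, so the result stays within the 0 to 255 range of 8-bit frames. A small worked sketch follows; Python 3 is assumed, and the function name `perceived_brightness` is introduced here for illustration only.

```python
import math


def perceived_brightness(r, g, b):
    """Weighted root mean square of the mean R, G and B values of a frame."""
    return math.sqrt(0.241 * r**2 + 0.691 * g**2 + 0.068 * b**2)


black = perceived_brightness(0, 0, 0)
white = perceived_brightness(255, 255, 255)
green = perceived_brightness(0, 255, 0)
red = perceived_brightness(255, 0, 0)
print(black, white, green, red)
```

With these weights a pure green frame scores higher than a pure red one, since the green channel carries the largest weight.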

- Sound generation with Max 7

- Compositing in After Effects
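Both scripts above write their results as lines of the form `index, value;`. How these files were read during sound generation is not documented here. As a hedged sketch of one possible preparation step, the following Python 3 snippet parses such lines and rescales the values to the range 0 to 1, which is a common way to prepare data before mapping it to a sound parameter. The function names and the sample values are hypothetical.

```python
def parse_indexed_values(text):
    """Read 'index, value;' lines and return the values as floats, in order."""
    values = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        index, value = line.rstrip(';').split(',', 1)
        values.append(float(value))
    return values


def rescale(values):
    """Linearly map values to the range 0..1 (all zeros if they are constant)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical sample in the format written by the scripts above.
SAMPLE = "1, 2.0;\n2, 4.0;\n3, 3.0;\n"
scaled = rescale(parse_indexed_values(SAMPLE))
print(scaled)
```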