User:ThomasW/Documentation: Difference between revisions

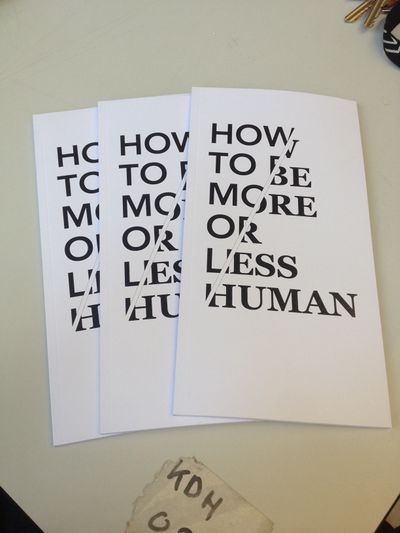

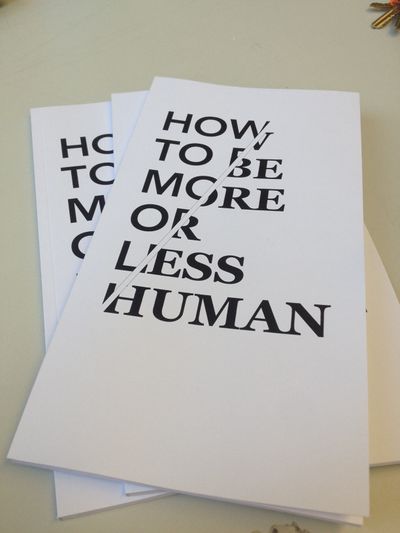

===Prototypes=== | ===Prototypes=== | ||

Small Collection of prototypes | |||

[[File:MaxPrototypeDocumentation.JPG|400px]] | |||

Human and Non Human, Colour reflect what's human and non human.. More human is green, less human is red | |||

[[File:MaxD01.jpg|200px]] | |||

[[File:MAXDdfront.jpg|200px]] | |||

[[File:MAXDd10.jpg|200px]] | |||

[[File:MAXDdintro.jpg|200px]] | |||

===Interview=== | ===Interview=== | ||

| Line 1,052: | Line 1,064: | ||

</code> | </code> | ||

=== | |||

===Typefaces=== | |||

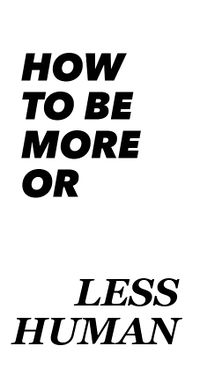

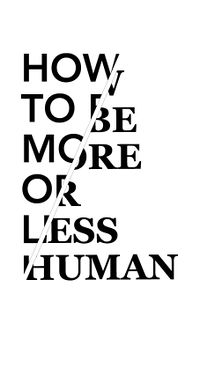

I wanted the design to reflex the contrast of human-nonhuman. I am using the typeface [https://en.wikipedia.org/wiki/Avenir_%28typeface%29 Avenir] (that I use as a symbol for the nonhuman and corperat side of the book. [https://en.wikipedia.org/wiki/Georgia_%28typeface%29 Georgia] I am using for the more human side of the project. | |||

===Final=== | ===Final=== | ||

[[File:MaxDocumentation006.JPG|400px]] | |||

[[File:MaxDocumentation004.JPG|400px]] | |||

[[File:MaxDocumentation001.JPG|200px]] | |||

[[File:MaxDocumentation002.JPG|200px]] | |||

[[File:MaxDocumentation003.JPG|200px]] | |||

[[File:MaxDocumentation005.JPG|200px]] | |||

Latest revision as of 18:13, 5 July 2015

Algorithm theater by Max

PLAN

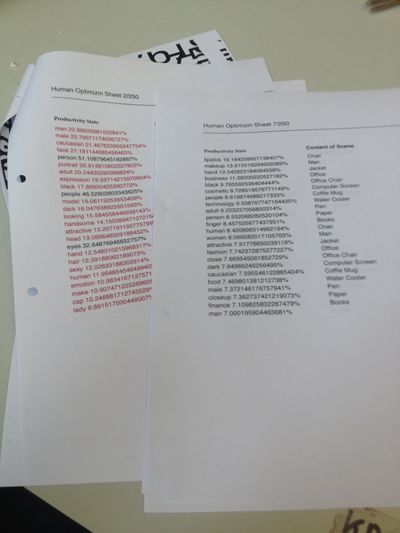

Max is making an "algorithm" theatre in which he performs a theatre piece based on words generated by image recognition software. The plan is to make a book based on the generated text and images to document the piece. Documenting only the text will make every future performance different.

About the project

How To Be More Or Less Human is a performance investigating how humans are identified by computer vision software. Looking specifically at how the human subject is identified and classified by image recognition software, a representation of the human body is formed.

The living presence of a human being cannot be sensed by computer vision, so the human subject becomes a quantifiable data object with a set of attributes and characteristics. Seeing ourselves in this digital mirror allows us to reflect on other models of perception and develop an understanding of how the human subject is ‘seen’ by the machinic ‘other’.

Looking at ourselves through the automated perception of image recognition can highlight how gender, race and ethnicity have been processed into a mathematical model. The algorithm is trained to ‘see’ certain things forcing the human subject to identify themselves within the frame of computer vision.

Prototypes

Small Collection of prototypes

Human and non-human: colour reflects what is human and what is non-human. More human is green, less human is red.

Interview

Max Dovey is 28.3% man, 14.1% artist and 8.4% successful. His performances confront how computers, software and data affect the human condition. Specifically, he is interested in how the meritocracy of neo-liberal ideology is embedded in technology and digital culture. His research is in liveness and real-time computation in performance and theatre.

Looking at your work, you seem to enjoy critiquing corporate lifestyle. Do you agree with that statement?

Yes. In a lot of digital services, technology companies like to commodify and exploit people, and I want to present and perform this tendency of capitalism in techno cultures. I have never seen it as the main subject, but it is always there, always present.

One of your past projects is ‘The Emotional Stock Market’. How did that come about?

It came from me using Twitter as a script to perform with, and using the real-time nature of social media as material to generate stories and narratives. It was made in the form of the classic “stock market floor”, but instead of selling goods we were selling emotions, with search words like “happy”, “love” and “sad”. There is an irony there: now corporations are doing that, they are starting to commodify emotions.

You had another project, this one while at the Piet Zwart Institute: “We Believe In Cloud Computing”, where you sent printed messages into the sky with balloons. What’s your story of the piece, and what are your opinions on the cloud?

It’s problematic to deal with the concept of the cloud and digital materiality. It’s computers and services, but on someone else’s computer, so I thought it would be funny to take that abstraction and send data to the sky with balloons. Cloud computing is more a belief than a brand. It’s backing everything up, and you no longer have responsibility over it. That is the ideology of the cloud.

You are questioning some systems, but are you maybe even more questioning the reason that the system exists in the first place? It was not really constructed for how it is used now.

That is an interesting idea... yes of course, like social media. It was not really constructed in the first place for what it is now. Mark Zuckerberg made it to rate women, then expanded on it, and it turned into what it is now. Corporations have learned to capitalise on the technology.

What do you think happens after Facebook?

Well, now we are so used to working with the internet on a really personal level, putting yourself in the center. It has capitalized on basic human needs: being loved, being popular. The basic human desire to feel close to others has become an economic commodity, and I don’t know what happens after that.

There is a movement to take back control, to make new systems, but even I feel it’s hard to go back. You kinda make yourself an outsider now if you log off. Even if I want to leave, you’ve got the rest who stay. Some people only use Facebook to keep in contact, so it’s hard to break off. You become a luddite. We are all free to leave, but the market demands that I have a Facebook account.

You started on an idea to do your performance in an office environment, but was that the original idea? How did the idea come about?

Last year, I was using a program to see if objects could articulate language. For example, if the coffee grinder could speak, what would it say? The program gave a voice to the objects, and I was really interested in how the computer can be a co-performer. I am taking a product and misusing it in a really different way compared to what it was made to do. You really need to go to the extremes to show the absurdity of it. I found it really fascinating that a computer can be so confident in what it sees.

What is the program you are using?

It’s called Imagga and is used to organize images based on keywords and values it finds in them. It uses a programmed data set; that’s how it can see a “man” in a photo of a man. But the program is really not accurate at all, it has a programmed bias in what it “sees”. It shows an image of society’s values, feelings and imitation. What is successful or what is happy is totally a construct of whoever has programmed it.

Think about the story of Sleeping Beauty where the princess asks the mirror “mirror mirror on the wall”, only it’s a computer program and not a mirror that tells us who is the fairest of them all. You need to be on board with me for the piece to work, to mean anything.. the values can mean nothing, but they coloured to not something 100% man or sexy.

You want to take it to the absurd and make it funny? There is a danger there, that people will not take the topic seriously.

I am slightly amazed at how a computer can visually interpret the world through shapes, colours and patterns. I am concerned that everything in our world can be categorized by a computer. So I want the computer to make mistakes, I want to show the error in the system, but I don’t want the computer to look stupid. It’s far smarter than I am.

Do you see a danger in making computers funny or humanizing computers?

That is how the robotics and AI industry are marketing new technology, putting cute faces on computers to make our relationship towards them more empathetic.

I want to imply that the computer’s model of perception is the dominant way of seeing, rather than human perception, which is way too subjective. The way the computer sees things is a far more effective way of seeing than the human one. We fall in love with things and become empathetic towards them.

You started with the idea to have it in an office, but has that changed?

I started by setting the story of the performance in the office of a Silicon Valley software company, with the company that makes the program. But all the correspondence comes down to them trying to improve their software and charging me over 1000 euros for a customized algorithm that would identify me as a man with 100% confidence.

The piece is now set in a health center. The patient gets sent in and goes through a series of exams on his vision. Then I take off my clothes and see how my gender is interpreted by the image recognition software. The suit makes the man, but when I am naked I become 5% man and 5% lady. That is how I figured out how the program works: by dressing things like a man to see how human the objects are. A dustbin is really a human with just a suit on.

I was looking at “visual agnosia”, a human condition that makes it difficult for people to name things.. like the foot is a shoe. There is a book called “The Man Who Mistook His Wife for a Hat” by Oliver Sacks (1985), and I was looking at this computer program that I am using, which perceives the world in a really similar way. It guesses what an object is based on associations: apple-fruit-banana-food.

So the human shape is irrelevant, it’s all about the suit and clothing?

Clothing is one element used by the computer to identify a human subject. I got this theory when it saw me from behind and the software perceived me as a lady. The white shirt and suit is the identifier of a human male, and in the end I get replaced by a dustbin, because a dustbin is more human than me. I am just another data object in front of the computer.

I like the existential crisis it creates: that I can be replaced by a dustbin, because a dustbin with a shirt on is more of a man than me, and that a dustbin with a white shirt on can be equal to a living being.

You are touching on the topic of humans getting replaced by computers.

Yes, like what is the role for us now, if a dustbin can be as human as me? What’s my purpose then? And it’s kinda like a sci-fi fantasy that we all have. It’s got two ends of the argument.

One is that we will always be able to program the computer and therefore will always be in control. The other is the humanist and technophobic argument that the computer is going to take over: the singularity, where it goes beyond your control. I like to play with those feelings, for I have some of those fears too, of us losing control of computers.

Like when I am at the self-service checkout at Albert Heijn, I get really frustrated with it. I think it is not even close to as good as a human, but we have still decided to use it over a human being, because the machines are quicker and more efficient and need fewer breaks. So with this performance I am getting analysed by the computer as if I were at an automated health service.

“Yes you there, come in, take off your clothes and we will see how human you are.” And that is kinda happening now: we go online and check up on our illnesses, 80% of people now self-diagnose first on Wikipedia. So we can laugh about it. What I am doing is to be really eccentric about the confidence levels of the computer. The computer is telling me that I am 40% human. I can live with that. It is kinda what people do when they read tabloid newspapers or watch all the horrible chat shows. I am taking it to the extreme to reveal the errors and problems with software that could one day be better than me at certain tasks.

In 1950 Alan Turing proposed the famous Turing test, where he speculated on the computer’s ability to deceive someone into thinking it was human. And in 1966 Joseph Weizenbaum created Eliza, a chatbot that could imitate a human by repeating sentences and conversing with a human.

He was shocked at how simply a computer could deceive a human, that we could suspend our disbelief to such an extent as to think a simple computer program could be human-like. But as it transpires, it is not a case of how well computers imitate us, it is how much we can incorporate and imitate them.

So let us take it to the extreme and ask what happens when a dustbin with a shirt on is more of a man than me?

Text

5575535184e97.png /////

black 11.84% Dark 9.99% person 9.90% people 9.73% 3d 9.38% business 8.12% light 7.87% man 7.56% shadow 7.52% interior 7.50% Night 7.46% home 7.40% design 7.26% studio 7.24% art 7.24% human 7.09% color 7.09% /////// 5575538c75351.png ////// leg 22.58% people 22.06% suit 21.82% trouser 21.46% person 18.99% man 17.46% adult 16.96% business 16.92% caucasian 15.95% male 15.15% businessman 14.38% success 13.67% attractive 13.17% work 12.68% corporate 11.67% sexy 11.43% professional ///// 557553f5037cf.png //// 46.80% suit 38.48% man 38.15% business 36.99% businessman 33.30% people 31.86% person 31.19% male 30.95% corporate 28.58% professional 28.00% adult ///// 55755412053c5.png /// 59.61% suit 47.68% businessman 44.78% man 43.74% business 40.55% corporate 36.77% male 35.00% executive 33.85% professional 32.22% people 31.03% success

/////

5575542eeb787.png

////

33.18%

people

30.75%

man

29.84%

person

28.12%

business

23.63%

adult

22.86%

businessman

21.82%

male

21.58%

success

21.29%

work

21.22%

caucasian

////

5575544bd3fbd.png

///

28.87%

people

27.65%

person

27.02%

leg

24.98%

man

24.38%

trouser

22.51%

adult

21.30%

business

20.26%

male

20.26%

caucasian

18.08%

women

/////

55755468d6fb8.png

////

73.42%

underwear

39.49%

body

25.49%

adult

24.77%

health

23.93%

sexy

21.13%

slim

20.62%

fit

20.57%

skin

19.63%

leg

19.25%

healthy

//////

55755485effff.png

/////

47.29%

underwear

36.40%

body

24.95%

adult

23.98%

health

22.73%

sexy

22.57%

swimsuit

20.31%

caucasian

19.64%

fit

18.82%

attractive

18.44%

healthy

///

557554c029055.png

///

62.97%

underwear

40.29%

body

26.69%

adult

26.40%

sexy

26.27%

health

21.50%

slim

20.39%

skin

20.02%

fit

19.06%

healthy

18.51%

attractive

/////

557554dd0aea4.png

////

60.40%

swimsuit

51.10%

underwear

29.47%

body

23.56%

garment

23.19%

sexy

20.32%

adult

18.74%

attractive

18.47%

model

18.38%

health

17.41%

caucasian

////

557554fa15fe1.png

////

26.61%

weight

25.54%

dumbbell

24.89%

body

22.79%

attractive

21.98%

underwear

21.80%

person

20.07%

adult

18.90%

caucasian

18.89%

sexy

17.63%

health

//////

55755516caabf.png

//

74.38%

underwear

39.21%

body

33.30%

swimsuit

25.09%

swimming trunks

23.58%

sexy

23.44%

adult

23.29%

health

21.17%

fit

20.97%

slim

18.47%

skin

///

557555511aa5c.png

//

27.84%

underwear

27.83%

body

25.27%

adult

23.60%

caucasian

21.99%

leg

21.28%

person

21.10%

sexy

20.39%

attractive

19.51%

hand

17.68%

trouser

//// 5575553427dac.png /// 86.68% underwear 37.67% body 23.32% adult 23.05% sexy 22.71% health 21.18% slim 19.45% fit 18.67% skin 17.41% leg 17.23% garment ////// 5575556e0d724.png // 34.03% people 32.47% man 31.80% business 29.08% person 28.97% male 28.94% adult 26.74% businessman 24.81% caucasian 22.06% happy 20.58% smiling ////// 5575558aef3f6.png //// 30.63% people 29.42% person 24.67% adult 22.49% man 21.17% caucasian 20.10% business 19.60% leg 19.24% male 18.98% attractive 18.90% women ////// 557555a8265b5.png //// 34.67% man 33.15% suit 32.70% people 32.64% business 29.27% businessman 28.83% male 28.38% person 27.61% adult 25.72% corporate 24.74% work ///// 557555c51a243.png //// 44.93% business 42.94% businessman 38.13% corporate 37.97% man 35.56% success 34.40% people 33.67% executive 32.66% professional 30.78% person 30.47% suit ///// 557555e23ac87.png //// 31.48% people 31.38% person 29.35% man 25.96% adult 23.37% suit 23.05% male 23.00% caucasian 22.94% business 22.09% attractive 21.08% businessman //// 557555ff0716b.png /// 64.51% suit 42.89% businessman 39.00% man 38.80% business 35.54% corporate 31.26% male 29.04% people 28.62% executive 27.94% garment 27.56% office ///// 55755638e850a.png //// 18.13% texture 17.33% design 16.02% wallpaper 14.33% frame 12.91% material 12.77% pattern 11.92% backdrop 11.39% highlight 11.25% graphic 11.22% sheet ///// 55755650c7bc3.png /// furniture 39.38% medicine chest 35.43% room 35.23% cabinet 22.90% furnishing 19.36% interior 19.36% bathroom 15.40% home 12.23% wardrobe 11.51% floor /////

//// 55755672cf628.png //// highlight 23.63% texture 19.46% pattern 19.28% design 17.54% wallpaper 14.74% graphic 13.56% art 13.37% material 13.09% space 12.88% light ////// 557556ad0861a.png /// black 13.06% scroll 12.70% sign 11.86% design 11.69% 3d 11.40% graphic 11.19% art 10.31% business 10.16% symbol 9.75% night //// 5575568bb5ba7.png /// soap dispenser 18.41% dispenser 17.84% container 14.12% black 13.26% milk 12.00% liquid 11.94% paper towel 10.85% towel 10.56% food 9.17% cup ///// 557556c6c6548.png /// lab coat 40.27% coat 34.73% man 31.08% male 29.20% people 27.84% overgarment 25.00% adult 24.36% person 23.69% worker 23.60% caucasian ///// 55755701f245d.png ///// adult 20.59% pretty 20.05% people 19.93% caucasian 19.88% sexy 19.46% attractive 19.11% person 18.17% body 17.71% hand 17.16% lifestyle ///// 55755721144fe.png /// black 12.96% scroll 11.36% design 11.31% sign 10.75% graphic 10.32% business 10.03% art 9.52% 3d 8.90% symbol 8.49% dark //////// 5575573cd1dd7.png ///// 10.85% light 10.12% guillotine 8.10% instrument of execution 7.93% home 7.59% man 7.44% house 7.33% color 7.14% design 7.02% black

/////// 55755777ec7a7.png //// guillotine 17.81% instrument of execution 13.87% tent 13.38% instrument 10.84% cradle 10.39% shelter 9.80% structure 9.57% person 9.43% people 9.35% device /////// 557557b29a55d.png /// 557557eda21d1.png ///// 55755828c5026.png //// 55755863c493d.png

Emails

Imagga is an Image Recognition Platform-as-a-Service providing Image Tagging APIs for developers and businesses to build and monetize scalable image intensive apps in the cloud.

The Technology We develop and democratize technologies for advanced image analysis, recognition and understanding in the cloud.

Our portfolio includes proprietary image auto-tagging, auto-categorization, color extraction and search, and smart cropping technologies. We’ve designed an infrastructure that can handle huge loads of images and auto-scale to accommodate a lot of concurrent queries.

The Solutions We offer a set of APIs for automated image categorization and meta-data extraction, intended for business customers. Our APIs can be used either separately or in a combination, and they can save a lot of time and effort otherwise spent on manual curation of images.

The application of our technology also leads to better user experience for the end-customers of our business customers. And last but not least, the level of automation that we offer, enables a lot of monetization opportunities that are simply not feasible or even not possible if huge amounts of images need to be handled manually. Currently we offer our APIs on a platform-as-a-service pay-as-you-go basis.

(Source: http://imagga.com/company)

2.April Hi Max,

We are excited to announce some changes to our API pricing policy. We’ve got lots of feedback and requests for more affordable way to access our APIs.

Today, we are announcing Developer Plan for Imagga APIs, priced at $14/month that will allow you to use one of our APIs with up to 12 000 calls a month (3000/day, 2 requests/second). We believe this plan will give you more flexibility and the opportunity to apply our breakthrough technology on a more affordable price.

Hacker plan remains free but we are reducing the monthly calls to 2000 (200/day, 1 request per second) and will be available as before just for image tagging API. The change will take place on 15th of April.

We are eager to see you plug in our APIs in your projects. Send us feedback and any ideas you have regarding our technology offering in general or any tip you want to share.

Happy tagging!

2.April

Hi Pavel, that’s good news about the developer plan, I am definitely interested. Perhaps you can assist me with some questions regarding the auto-tagging feature? I have emailed sales@imagga twice now and not received any response. Below is a copy of the email I’ve sent. If you could direct me to someone who may know a bit more about this I’d be very grateful. Many thanks, Max

Hello, i currently only have a hacker account but am looking to upgrade to one of your other services but i had a few more questions regarding the auto - tagging feature of imagga.

I have been mainly using it to auto-tag pictures of humans , and although am quite satisfied by the wide range of results I wanted to have a better understanding of the human terms available within the imagga dictionary. Ive seen ‘happiness, happy, smile, love and sexy and passion’ but I was wondering if you could inform me of the list of human associated terms, that are based around emotions.

I am looking to use Imagga for a project I am doing but would like to have a better understanding of the vocabulary available to describe human emotion.

9.April Hey Max,

I’m really sorry for the inconvenience caused with not answering your mail!

We do not have specific vocabulary for human expressions and emotions. I might say that you’ve listed more of them in your mail, but we can offer custom training based on user provided data. For example you can collect images with different human expressions and emotions and organise them in needed categories/tags.

Then we can train custom API algorithm based on your data. Usually we have pricing policy for the custom training, but your case sounds interesting and we can think on some collaboration.

We are happy that you find our new Developer plan useful! If you have any other questions, please let me know.

Best Pavel from Imagga

15.April Hi Pavel,

Thanks for replying, I hope you dont mind answering another question of mine before I get the developer plan. I had previously been using the api for auto tagging ‘http://api.imagga.com/draft/tags’ and was posting an image using python.

Now this API seems to have been taken offline and I am doing it via a GET request to this API, ‘https://api.imagga.com/v1/tagging’. Is this the correct address that I should be using? If so, is it possible to post an image rather than do a GET request via a URL link to an image? I would rather post images from my local machine than from a server, if that is still possible.

As for making a customised API, this is very interesting, however I won’t need that for this project. I am currently developing a performance using Imagga auto-tagging as a character in a theatre show. In this piece I am exploring what elements trigger different tags and what visual images are 100% confident. That’s why I asked about the human-related words, because I am trying to perform with Imagga’s auto-tagging tool to reach 100% confidence, for example to become 100% human or 100% man. So I am interested in finding out, through a performed process, what visual elements generate specific tags and how tags are related to certain visual elements.

The performance is still in development but will be toured in the summer and so if you were interested in collaborating it would be great to talk more about how that could work. Best, Max Dovey

21.April Hi Max,

You can upload an image for tagging through the /content endpoint. Just upload (with a POST request) your file to https://api.imagga.com/v1/content first, the API would respond with a content id which you can then provide to the /tagging endpoint via the “content” parameter (e.g. https://api.imagga.com/v1/tagging?content=mycontentid) and you will be given tags for the given image.

You can also take a look at the respective docs for the tagging and content endpoints: http://docs.imagga.com/#tagging-endpoint http://docs.imagga.com/#content

Feel free to write me if there is anything else I can help you with.

Best, Ivan Penchev, Imagga API Team
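The two-step flow Ivan describes (upload to /content, then pass the returned id to /tagging) could be sketched in Python roughly as follows. This is only a sketch under assumptions: the multipart field name `image` and the `uploaded[0].id` shape of the upload reply are guesses that the emails do not confirm, and `tag_local_image` and its `http` parameter are hypothetical names introduced here. The two endpoint URLs and the `content` parameter come from the email above, and the `results[0].tags` shape from the script in the Code section.

```python
def tag_local_image(path, api_key, api_secret, http=None):
    """Upload a local image, then request tags for the returned content id."""
    if http is None:
        import requests as http  # third-party library, only needed for real calls

    auth = (api_key, api_secret)

    # Step 1: POST the file to the /content endpoint.
    # Assumption: the multipart field is called "image".
    with open(path, "rb") as image_file:
        upload = http.post(
            "https://api.imagga.com/v1/content",
            auth=auth,
            files={"image": image_file},
        )

    # Assumption: the content id sits under "uploaded" in the JSON reply.
    content_id = upload.json()["uploaded"][0]["id"]

    # Step 2: hand the content id to /tagging via the "content" parameter.
    tagging = http.get(
        "https://api.imagga.com/v1/tagging",
        auth=auth,
        params={"content": content_id},
    )
    return tagging.json()["results"][0]["tags"]
```

Passing the HTTP client in as `http` keeps the function testable without touching the network; in normal use it can be left out and `requests` is imported on demand.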

21.April Hi Ivan, thanks for this.

I’ve signed up for the developer plan and have begun planning to run auto-tagging via a browser webcam. However, Ajax does not allow cross-domain server requests. What do you recommend as the best way to do this? A PHP script on my server that then posts to Imagga?

thanks Max

21.April Hi Max,

CORS should be allowed in the API. Could you share with me how you are submitting the request with ajax so I can help you identify the issue. Thank you.

Here is an example code for using the tagging API with jQuery ajax. You should just enter your api key and secret for the respective variables’ values.

Best Regards, Ivan Penchev

21.April Hello again,

I forgot to include a link to the mentioned sample code in my last email. Sorry about that. Here it is: http://jsfiddle.net/ivanvpenchev/ckkb1uL8/. Thanks.

Best, Ivan Penchev

21.April Hi Ivan,

thanks for the quick response and the example.

That’s very helpful. I want to take images from the webcam, so do I have to post the image into the DOM to get a source URL?

What format can I post to the Tagging API? I’ve got a rough JS fiddle here: http://jsfiddle.net/maxdovey/5yyg4eff/

MX

22.April Hi Max,

Thanks for asking. We are not supporting base64 encoded image uploads yet so I think the best way to do this would be to send the base64 data from imgData to a php script on your side, decode it and I can think of two ways to continue from there.

The first one is to send the decoded data to our /content endpoint via a POST request (like you are making an ordinary file upload). Our API will issue you a content id which you should then send to our /tagging endpoint through the “content” parameter.

The second way is to save the file on your server and just submit a publicly accessible URL directly to the /tagging endpoint via the “url” parameter.

Hope this helps. Best Regards, Ivan Penchev
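The decoding step Ivan mentions could look something like the sketch below. Ivan suggests a PHP script; Python is used here only to match the project's tagging script. It assumes the webcam data arrives as a `data:<mime>;base64,<payload>` string (the form a browser canvas typically produces), which the emails do not spell out, and `decode_data_url` is a hypothetical helper name.

```python
import base64


def decode_data_url(data_url):
    """Split a base64 data URL into its MIME type and the raw image bytes."""
    header, _, encoded = data_url.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("expected a base64 data URL")
    # Strip the "data:" prefix and ";base64" suffix to leave the MIME type.
    mime_type = header[len("data:"):-len(";base64")]
    return mime_type, base64.b64decode(encoded)
```

The returned bytes can then be written to a file on the server (the second option in the email) or uploaded as an ordinary file (the first option).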

29.April Hi Pavel,

I’ve signed up for the Developer plan and am really enjoying your auto-tagging software.

I was wondering if it was possible for someone to tell me a little bit more about how the software is trained to recognise certain things?

If you have any information on the software training process? or what image library you are using? this would help me out alot.

Many thanks Max Dovey

29.April Hey Max,

Sorry for the late response! I’m glad to see that you are satisfied with our service!

On your first question, you can look at our technology page for more info - https://imagga.com/technology/auto-tagging.html. If you have any more specific question on this, please let me know.

On the second one - we need 1000+ sample images per category/tag and then we can run a training process based on this. Do you have specific use case that need custom training?

Regards Pavel from Imagga

2.May Hey Pavel, I would like for the auto tagging to be 100% confident with gender. For Custom training would i have to submit 1000+ pictures of men and women to achieve 100% confidence?

thanks Max

4.May кажу ми да даде и ще направим тест. дори да не е 100% когато не сме сигурно ще е по нисък върнатия конфидънс и ще си преценява дали да го показва или да ходи за модератор

(In English: Tell him to give us images and we will run a test. Even if it is not 100%, when we are not sure the returned confidence will be lower, and it will decide whether to show the result or send it to a moderator.)

4.May H Max,

Sorry for the late response!

We can do the test and see what will be the confidence. You can send us the sample images grouped by gender. If the results and the confidence rate are satisfying you’ll be charged $1199 which is our standard rate for custom training. If the results don’t fit your expectations, you’ll not be charged anything.

Let me know if you want to proceed with this.

Regards Pavel from Imagga

Code

import os
import subprocess
import sys
import time

import requests

api_key = '********************************'
api_secret = '******************************'

# Folder with the pictures to tag.
image_dir = "/Users/user/Desktop/PZI/1/PYTHON/imagga-py-master/tests/profile"

with open('tags.txt', 'a') as outfile:
    for root, dirs, files in os.walk(image_dir):
        for file in files:
            path = os.path.join(root, file)
            try:
                # Upload the picture to a folder on the server so it has a public URL.
                subprocess.check_call(["scp", path, "max@headroom.pzwart.wdka.hro.nl:public_html/images/profile/"])
                url = "http://headroom.pzwart.wdka.hro.nl/~max/images/profile/{}".format(file)
                # Ask Imagga to tag the uploaded picture.
                r = requests.get('https://api.imagga.com/v1/tagging', params={'url': url}, auth=(api_key, api_secret))
                data = r.json()
                for tag in data['results'][0]['tags']:
                    word = tag['tag']
                    confidence = tag['confidence']
                    # Only report how much of a "man" the picture is.
                    if word == 'man':
                        conf = "%.1f%%" % confidence
                        print(file)
                        print(word, conf)
                        # outfile.write("{}\n{} {}\n".format(file, word, conf))
                # Pause between calls to stay within the API rate limit.
                time.sleep(2)
            except Exception:
                print('error', sys.exc_info()[0])
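The response handling in the script above can be pulled out into two small helpers, which makes it easier to check without calling the API. This is a sketch, not part of the original script: `confidence_for` and `format_confidence` are hypothetical names, and the sample data only mimics the `results[0].tags` structure the script reads.

```python
def confidence_for(data, wanted):
    """Return the confidence for one tag, or None if the tag is absent."""
    for entry in data["results"][0]["tags"]:
        if entry["tag"] == wanted:
            return entry["confidence"]
    return None


def format_confidence(confidence):
    """Format a confidence value the way the book text prints it."""
    return "%.1f%%" % confidence


if __name__ == "__main__":
    # Hand-written sample in the shape the script expects, not real API output.
    sample = {"results": [{"tags": [
        {"tag": "suit", "confidence": 46.8},
        {"tag": "man", "confidence": 28.3},
    ]}]}
    print("man", format_confidence(confidence_for(sample, "man")))
```

Returning `None` for a missing tag lets the caller decide what to do when, for example, a picture of a dustbin produces no “man” tag at all.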

Typefaces

I wanted the design to reflect the contrast between human and nonhuman. I am using the typeface Avenir as a symbol for the nonhuman and corporate side of the book, and Georgia for the more human side of the project.