User:Lucia Dossin/Self-directed Research/Exercise2: Difference between revisions


Latest revision as of 16:45, 14 December 2014

Mockup

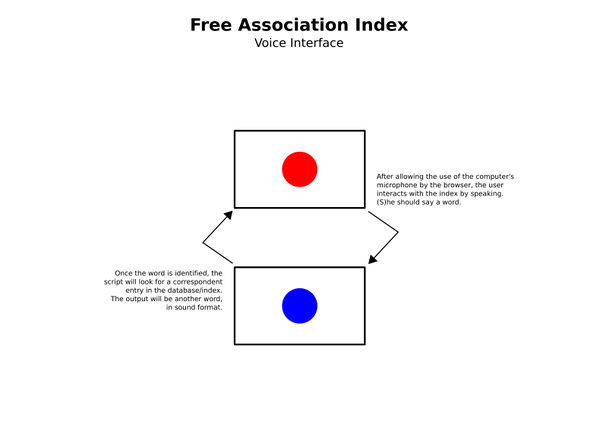

The idea behind this mockup is to study the possibility of using a voice interface to navigate the Free Association Index. The mockup does not use a real index or database, just an array containing no more than 11 items.

How it works

The user allows the browser to use the computer's mic and then speaks a word. The computer recognizes the word and looks for a match (in this case, among the 11 items in the array described above). The computer then speaks the match and prompts the user to speak another word, which is matched with another word, and so on.
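The matching step can be sketched as a small standalone function. This is a minimal illustration, not the mockup's exact code: the helper name `matchWord` and its optional `random` parameter are hypothetical, the normalisation (trimming and lower-casing) is an assumption about what the recognizer may return, and the random fallback mirrors what the mockup does when a word is not in the array.

```javascript
// Hypothetical helper: returns the index of the spoken word in `words`,
// or a random index when the word is not in the list.
// `random` is an optional stand-in for Math.random, useful for testing.
function matchWord(transcript, words, random) {
  var rand = random || Math.random;
  // Assumption: transcripts may arrive with padding or capital letters
  var spoken = String(transcript).trim().toLowerCase();
  var index = words.indexOf(spoken);
  if (index === -1) {
    // Word not found: fall back to a random entry
    index = Math.floor(rand() * words.length);
  }
  return index;
}

var words = ['night', 'bush', 'sugar'];
console.log(matchWord(' Sugar ', words)); // 2
```

Returning an index rather than the word keeps the result usable as a query parameter, which is how the two pages of the mockup pass the match along.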

When the user is supposed to speak, a red circle is displayed on the screen. While the computer is speaking, a blue circle is displayed instead. Both circles change their diameters according to the volume of the sound being spoken. The gif below illustrates this idea:

Code

The mockup can be found (and used) at: http://headroom.pzwart.wdka.hro.nl/~ldossin/fai-voice/

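In the current version, it uses the p5.js library (http://p5js.org), more specifically the p5.sound module, and the Web Speech API (https://dvcs.w3.org/hg/speech-api/raw-file/tip/speechapi.html). Browser support is listed at http://caniuse.com/#feat=web-speech.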
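Because browser support varies, it can help to isolate the feature check. The sketch below is one possible way to do it; the function name `getSpeechRecognition` and its `scope` parameter are hypothetical, and it only assumes that a supporting browser exposes `SpeechRecognition` or the prefixed `webkitSpeechRecognition` on the global object.

```javascript
// Hypothetical helper: returns the recognition constructor found on `scope`
// (normally `window`), or null when the browser does not provide one.
function getSpeechRecognition(scope) {
  return scope.SpeechRecognition || scope.webkitSpeechRecognition || null;
}

// Typical use in a browser page:
//   var Recognition = getSpeechRecognition(window);
//   if (Recognition === null) { /* show an "unsupported" message */ }
```

Passing the global object in as an argument keeps the check free of side effects, so it can be exercised outside a browser as well.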

mockup-red.html

<!DOCTYPE html>
<html>
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0"/>
  <title>Web Speech API Mockup</title>
  <script language="javascript" src="p5.js"></script>
  <script language="javascript" src="addons/p5.sound.js"></script>
  <script language="javascript" src="getMic.js"></script>
  <style>
    body {
      max-width: 600px;
      margin: 2em auto;
      font-size: 10px;
    }
    h1 {
      text-align: center;
    }
    .buttons-wrapper {
      text-align: center;
    }
    .hidden {
      display: none;
    }
    #transcription,
    #log {
      display: block;
      width: 100%;
      height: 2em;
      text-align: center;
      line-height: 1.0em;
    }
    #transcription {position: relative; top: 280px; color: #fff; z-index: 5;}
    #canvasContainer {position: relative; top: -4em; z-index: 4;}
  </style>
</head>
<body>
  <div id="transcription" readonly="readonly"></div>
  <div id='canvasContainer'></div>
  <div id="log"></div>
  <span id="ws-unsupported" class="hidden">API not supported. Please use Chrome.</span>
  <script>
    // My mini fake 'db' of words
    var words = ['night', 'bush', 'sugar', 'pity', 'value', 'fortune', 'child', 'stone', 'clown', 'fire', 'without'];
    var wIndex;

    // Return the index of obj in list, or false if it is not there
    function containsObject(obj, list) {
      var i;
      for (i = 0; i < list.length; i++) {
        if (list[i] === obj) {
          return i;
        }
      }
      return false;
    }

    // Test browser support
    window.SpeechRecognition = window.SpeechRecognition ||
                               window.webkitSpeechRecognition ||
                               null;

    if (window.SpeechRecognition === null) {
      // This page has no play/stop buttons, so showing the message is enough
      document.getElementById('ws-unsupported').classList.remove('hidden');
    } else {
      var recognizer = new window.SpeechRecognition();
      var transcription = document.getElementById('transcription');
      var log = document.getElementById('log');

      // Recogniser doesn't stop listening even if the user pauses
      recognizer.continuous = true;
      // Also report interim (non-final) results; this property is a boolean
      recognizer.interimResults = true;

      // Handle recognition results
      recognizer.onresult = function(event) {
        transcription.textContent = '';
        for (var i = event.resultIndex; i < event.results.length; i++) {
          var transcript = event.results[i][0].transcript;
          if (event.results[i].isFinal) {
            // The confidence is available as event.results[i][0].confidence
            transcription.textContent = transcript;
            // Trim and lower-case before matching, in case the transcript
            // arrives with padding or capital letters
            wIndex = containsObject(transcript.trim().toLowerCase(), words);
            if (wIndex === false) {
              // Use a random index if the word is not in my fake db
              wIndex = Math.floor(Math.random() * words.length);
            }
            location.href = "mockup-blue.php?f=" + wIndex;
          } else {
            transcription.textContent += transcript;
          }
        }
      };

      // Listen for errors
      recognizer.onerror = function(event) {
        log.innerHTML = 'Recognition error: ' + (event.message || event.error) + '<br />' + log.innerHTML;
      };

      try {
        recognizer.start();
        log.innerHTML = 'Recognition started. Please say a word.' + '<br />' + log.innerHTML;
      } catch(ex) {
        log.innerHTML = 'Recognition error: ' + ex.message + '<br />' + log.innerHTML;
      }
    }
  </script>
</body>
</html>

getMic.js

var mic, analyzer;

function setup() {
  var myCanvas = createCanvas(600, 600);
  myCanvas.parent('canvasContainer');

  // Ask for microphone input
  mic = new p5.AudioIn();
  mic.start();

  // Create a new Amplitude analyzer
  analyzer = new p5.Amplitude();
  // Patch the input to a volume analyzer
  analyzer.setInput(mic);
}

function draw() {
  // Get the overall volume (between 0 and 1.0)
  var vol = analyzer.getLevel();
  fill(255, 0, 0);
  stroke(255, 0, 0);
  background(255-(vol*150), 255-(vol*150), 255-(vol*150));
  // Draw an ellipse with size based on volume
  ellipse(width/2, height/2, 300+vol*200, 300+vol*200);
}
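The mapping from volume to circle size and background shade used in draw() can be pulled out into a plain function, which makes the arithmetic easy to check without a canvas. This is only a sketch: the name `circleStyle` is hypothetical, and the clamping of the level to the 0 to 1 range is an added assumption that the mockup itself does not make.

```javascript
// Hypothetical helper: converts a volume level into the circle diameter
// and the grey value of the background, using the same constants as draw().
function circleStyle(vol) {
  // Assumption: clamp the level so unexpected values cannot distort the drawing
  var level = Math.min(Math.max(vol, 0), 1);
  return {
    diameter: 300 + level * 200, // 300px when silent, 500px at full volume
    gray: 255 - level * 150      // white when silent, darker as it gets louder
  };
}

console.log(circleStyle(0.5)); // { diameter: 400, gray: 180 }
```

The same function would serve both pages, since the red and blue circles differ only in fill colour.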

mockup-blue.php

<?php
// Accept only an integer index, so that arbitrary text from the URL
// cannot be echoed into the script below. Missing, invalid or
// out-of-range values fall back to index 11 (assets/error.mp3).
$f = isset($_GET["f"]) ? filter_var($_GET["f"], FILTER_VALIDATE_INT) : false;
if ($f === false || $f < 0 || $f > 11) {
    $f = 11;
}
?>
<head>
  <script language="javascript" src="p5.js"></script>
  <script language="javascript" src="addons/p5.sound.js"></script>
  <style> body {padding: 0; margin: 0; text-align: center;} canvas{margin:0 auto;} </style>
</head>
<body>
  <script>
    var song, songs, analyzer;

    songs = ['assets/1darkness.mp3', 'assets/2tree.mp3', 'assets/3cake.mp3', 'assets/4pain.mp3', 'assets/5nothing.mp3', 'assets/6love.mp3', 'assets/7birthday.mp3', 'assets/8dog.mp3', 'assets/9circus.mp3', 'assets/10home.mp3', 'assets/11empty.mp3', 'assets/error.mp3'];

    function preload() {
      // The index comes from the PHP block at the top of the file
      song = loadSound(songs[<?php echo $f; ?>]);
    }

    function setup() {
      createCanvas(600, 600);
      song.play();
      analyzer = new p5.Amplitude();
      analyzer.setInput(song);
    }

    function draw() {
      var vol = analyzer.getLevel();
      fill(0, 0, 255);
      stroke(0, 0, 255);
      background(255-(vol*150), 255-(vol*150), 255-(vol*150));
      ellipse(width/2, height/2, 300+vol*200, 300+vol*200);

      // Go back to the listening page once the sound has (almost) finished
      if (song.currentTime() <= song.duration() - 0.05) {
        console.log('playing');
      } else {
        console.log('stopped');
        song.stop();
        location.href = "mockup-red.html";
      }
      console.log(song.currentTime() + ' -> ' + song.duration());
    }
  </script>
</body>